Edge Impulse is proud to be part of the 1% for the Planet initiative. In 2023, one of the organizations we supported was Smart Savannahs, a nonprofit that supplies African wildlife parks and rangers with innovative surveillance technology.

Besides a financial contribution, Edge Impulse employees Sara Olsson (that’s me) and Emile Bosch joined the Rhino Monitoring Unit for two weeks at Solio Game Reserve, one of the parks getting support from Smart Savannahs.

Earlier research at Solio has investigated the uniqueness of the wrinkle pattern around rhino eyes and how it can be used as a fingerprint to identify individuals. In 2011, Rhino Resource Center published a paper on this topic, concluding that individual rhinos can be identified through manual visual comparison of photographs of the wrinkles around each rhino’s eyes, even when the photographs were taken over a span of four years. This is in contrast to tracking rhinos by their horns, which might seem like a good characteristic but change in size and shape over time, sometimes drastically due to breakage.

Our aim for this expedition was to automate the identification process through image processing and machine learning, instead of manually comparing photographs. Having access to such an identification tool would facilitate the behavioral research conducted by the Rhino Monitoring Unit.

Field work: Photographing rhino eyes

Given the requirement for a highly specific dataset of multiple high-resolution images of each rhino eye, the first activity of the project was going into the field and photographing the rhinos we encountered. Sometimes data collection can be tedious, and other times it’s more of a life experience! We aimed for at least 15 photos per eye, with variations in angle and lighting conditions. More images would have been beneficial, but moving rhinos can easily escape the scene, and those that stay too still tend to produce very similar photos. In the ideal case, the training and validation data would be collected on different days to ensure variability in settings such as lighting and temporary conditions like mud or scars. We managed to capture such data for a couple of rhinos, with the help of park rangers who could identify some rhinos by their horns or other features.

The number of images captured ranged from five to 30 per eye. Data from the shyer rhinos, for which fewer than 15 images were obtained, were excluded from the experiments, since validation with so few samples would give very limited insight.

Assessing identification models

For the identification, we aimed for a method that would not require retraining each time a new rhino is added to the system. We therefore focused on approaches for image similarity search rather than classification models. The primary task of the algorithm or machine learning model is then to extract features that distinctively characterize each image.

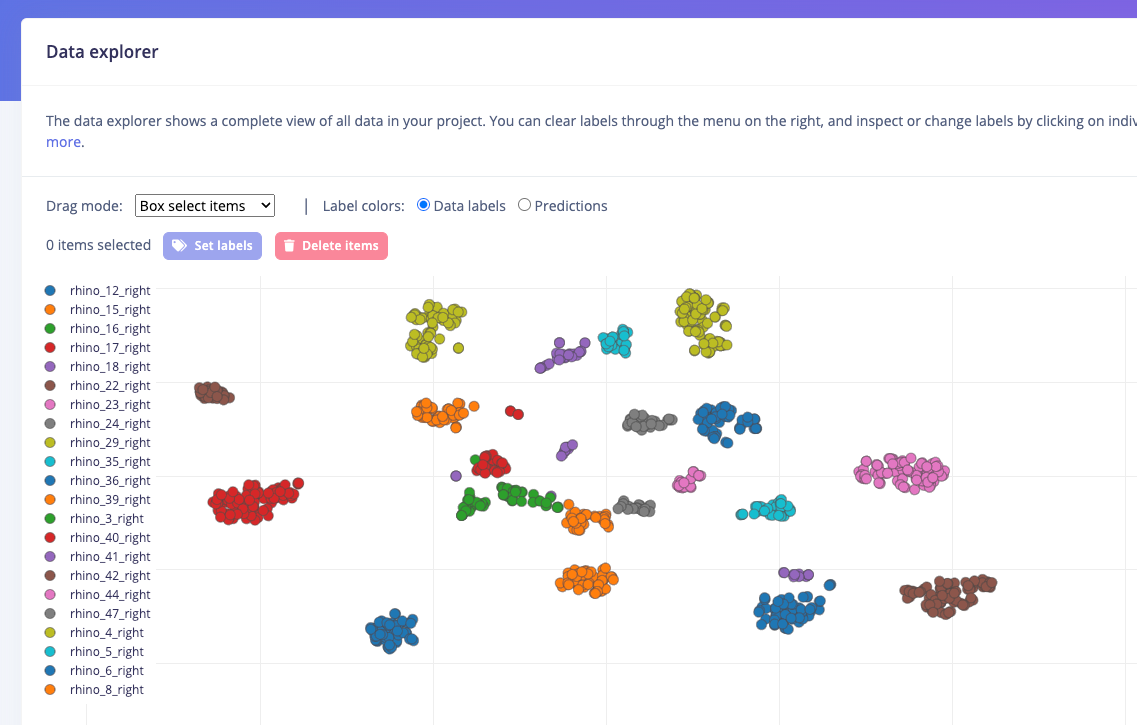

One experiment involved extracting features using an EfficientNet B0 model, that is, taking the features just before the classification layer and using them to search for the nearest neighbors. In a test of this approach involving 22 individuals, the correct individual was the top match for 43% of the samples and among the top five matches for 77%. The graph below illustrates the clustering of images after fine-tuning the EfficientNet B0 model in Studio and applying t-SNE for dimension reduction in the data explorer.

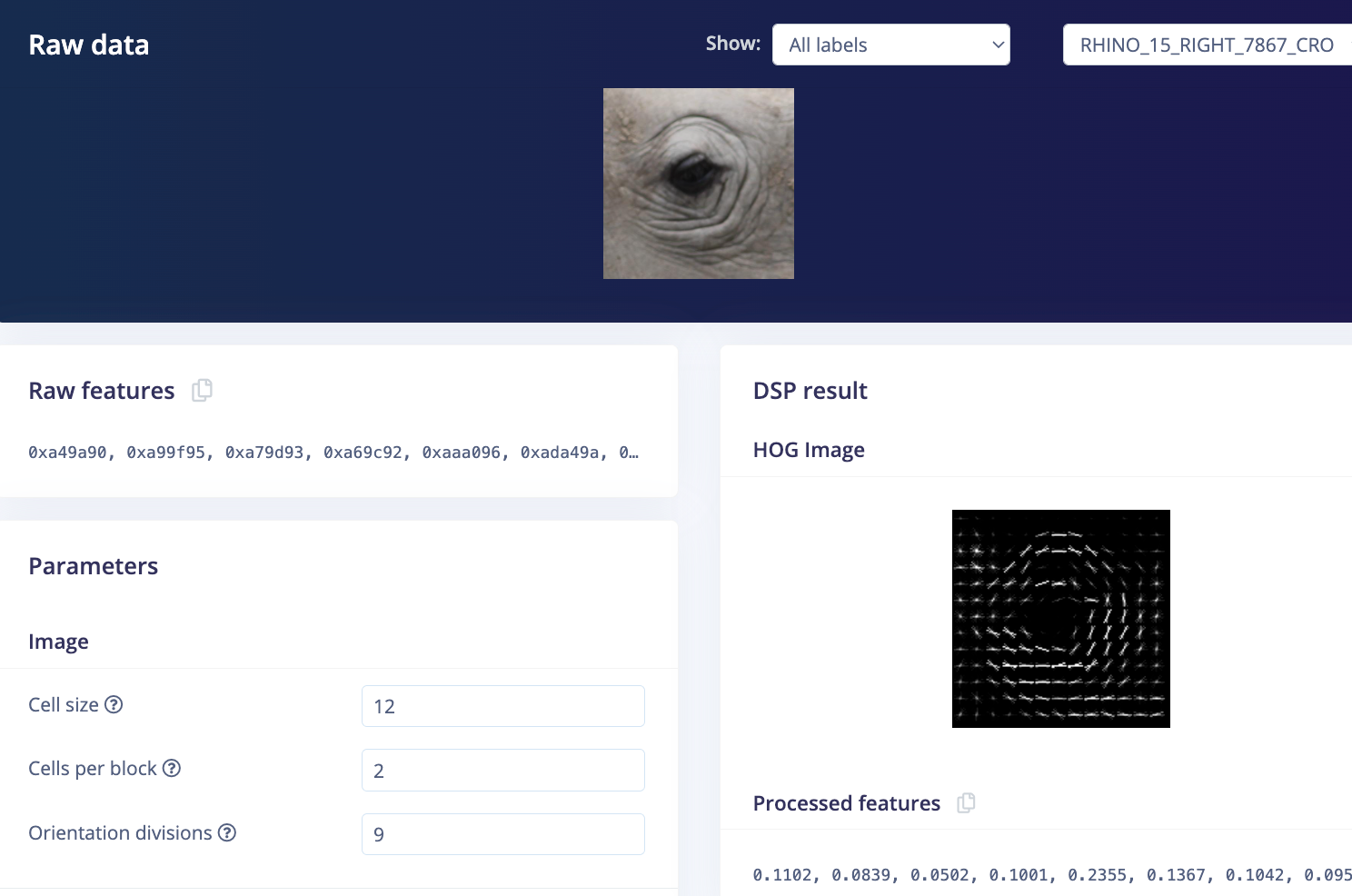
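To make the top-one and top-five figures concrete, here is a minimal sketch of how such a similarity search could be scored. It assumes feature vectors have already been extracted by some model; the function names, the cosine similarity measure, and the toy vectors are illustrative assumptions, not the exact setup used in the experiment.

```python
import numpy as np

def l2_normalize(x):
    """Scale each row to unit length so dot products equal cosine similarity."""
    norms = np.linalg.norm(x, axis=1, keepdims=True)
    return x / np.clip(norms, 1e-12, None)

def rank_individuals(query, gallery, gallery_ids, top_k=5):
    """Return up to top_k distinct individual IDs, most similar first."""
    sims = l2_normalize(gallery) @ l2_normalize(query[None, :])[0]
    ranked = []
    for idx in np.argsort(-sims):  # gallery images, best match first
        rhino = gallery_ids[idx]
        if rhino not in ranked:    # count each individual only once
            ranked.append(rhino)
        if len(ranked) == top_k:
            break
    return ranked

def top_k_accuracy(queries, query_ids, gallery, gallery_ids, k):
    """Fraction of queries whose true individual is among the top k candidates."""
    hits = sum(
        true_id in rank_individuals(q, gallery, gallery_ids, top_k=k)
        for q, true_id in zip(queries, query_ids)
    )
    return hits / len(query_ids)

# Toy 3-dimensional "embeddings" (hypothetical values for illustration only).
gallery = np.array([[1.0, 0.0, 0.0], [0.9, 0.1, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
gallery_ids = ["A", "A", "B", "C"]
queries = np.array([[0.8, 0.2, 0.0], [0.1, 0.2, 0.9]])
query_ids = ["A", "B"]

top1 = top_k_accuracy(queries, query_ids, gallery, gallery_ids, k=1)
top2 = top_k_accuracy(queries, query_ids, gallery, gallery_ids, k=2)
```

Because the gallery is just a matrix of vectors, adding a new rhino means appending rows rather than retraining a model.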

Another approach we tried for feature extraction was using HOG (Histogram of Oriented Gradients), which is effective in capturing the gradient structures of images, such as edges and texture patterns. This method diverges from CNNs, as HOG generates a 1D representation of each image. HOG was tested in Studio as a custom DSP block with adjustable parameters. Using this DSP block together with a classification model showed promising results for a limited number of individuals (<10). However, a similarity search approach likely remains the better strategy for an expanding dataset.

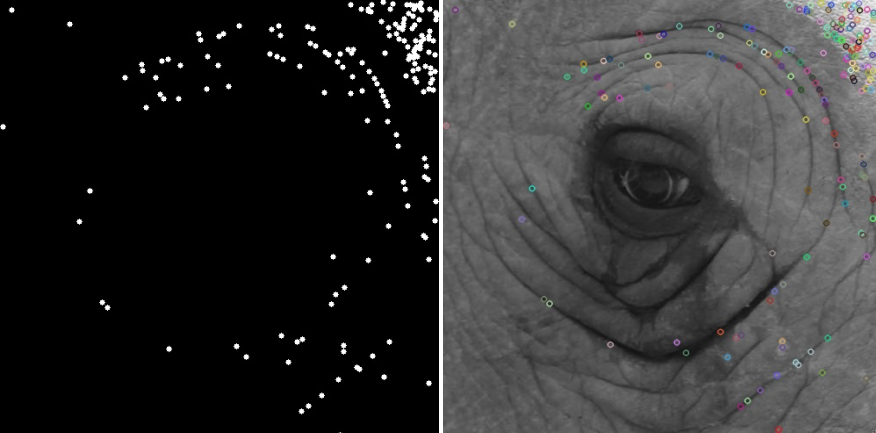
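For readers unfamiliar with HOG, the following is a simplified sketch of the idea in plain NumPy. It is not the custom DSP block used in Studio: the cell size and bin count are assumed defaults, and the block normalization of the full algorithm is replaced by a single global normalization to keep the example short.

```python
import numpy as np

def simple_hog(image, cell_size=8, n_bins=9):
    """Simplified HOG: per-cell histograms of unsigned gradient orientation,
    weighted by gradient magnitude and concatenated into one 1D vector."""
    image = image.astype(np.float64)
    gy, gx = np.gradient(image)                  # derivatives along rows, columns
    magnitude = np.hypot(gx, gy)
    orientation = np.rad2deg(np.arctan2(gy, gx)) % 180.0  # unsigned angle in [0, 180)

    rows = image.shape[0] // cell_size
    cols = image.shape[1] // cell_size
    bin_width = 180.0 / n_bins
    features = np.zeros((rows, cols, n_bins))

    for r in range(rows):
        for c in range(cols):
            window = (
                slice(r * cell_size, (r + 1) * cell_size),
                slice(c * cell_size, (c + 1) * cell_size),
            )
            # Assign each pixel to an orientation bin (clamped for rounding safety).
            bins = np.minimum((orientation[window] // bin_width).astype(int), n_bins - 1)
            features[r, c] = np.bincount(
                bins.ravel(), weights=magnitude[window].ravel(), minlength=n_bins
            )

    vector = features.ravel()
    return vector / (np.linalg.norm(vector) + 1e-12)  # global L2 normalization

# A 32x32 image whose brightness increases from left to right.
gradient_image = np.tile(np.arange(32, dtype=float), (32, 1))
descriptor = simple_hog(gradient_image)  # 4 x 4 cells x 9 bins = 144 values
```

In this toy image every gradient points the same way, so all the energy lands in the first orientation bin of each cell; wrinkle patterns would spread across bins in a way that differs from eye to eye.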

Yet another experiment was to run SIFT (Scale-Invariant Feature Transform) on the rhino eye images. SIFT is widely used for feature detection, matching, and image stitching, owing to its ability to identify points of interest characterized by significant variation in all directions, typically at corners or edges. When comparing two images, keypoints between them are matched based on the similarity of their descriptors. This method has been successfully employed for identifying various species in the Wildbook project by WildMe, and is definitely interesting to explore further for this case of rhino eyes.
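The descriptor matching step can be sketched as follows. This assumes keypoint descriptors have already been computed for both images (in OpenCV they would typically come from `cv2.SIFT_create().detectAndCompute`), and it uses the commonly applied ratio test with an assumed threshold of 0.75. The function name and toy descriptors are hypothetical.

```python
import numpy as np

def match_descriptors(desc_a, desc_b, ratio=0.75):
    """Match each descriptor in desc_a to its nearest neighbor in desc_b,
    keeping only matches that pass the ratio test.
    desc_b must contain at least two descriptors."""
    # Pairwise Euclidean distances, shape (len(desc_a), len(desc_b)).
    dists = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    matches = []
    for i, row in enumerate(dists):
        order = np.argsort(row)
        best, second = order[0], order[1]
        # Accept only if the best match is clearly better than the runner-up.
        if row[best] < ratio * row[second]:
            matches.append((i, int(best)))
    return matches

# Toy 2-dimensional descriptors (real SIFT descriptors have 128 dimensions).
desc_a = np.array([[0.0, 0.0], [10.0, 10.0], [5.0, 5.0]])
desc_b = np.array([[0.0, 0.1], [10.0, 10.1], [20.0, 20.0]])
matches = match_descriptors(desc_a, desc_b)
```

The third descriptor in `desc_a` sits roughly halfway between two candidates, so it is rejected as ambiguous. The number of surviving matches between two eye images could then serve as a similarity score.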

Of course, we are curious about the feasibility of individual animal identification on edge devices. A similar use case is biometric identification on smartphones. However, smartphones typically handle a finite number of individuals, whereas this number is expected to grow continuously for a device surveilling animals roaming in the wild. Handling that growth is likely a tradeoff between the device's size and capacity, and how much data is allowed to be stored and processed on the device before being offloaded. Additionally, one should consider optimizing the data representation for each individual; for instance, instead of storing the features from every encounter, it might be sufficient to save a set number of representative samples, or perhaps a single aggregated feature profile.
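As a rough illustration of the two storage options mentioned above, the sketch below collapses one individual's encounters into a single mean profile, or keeps a fixed number of samples. The function names are hypothetical, and choosing the samples closest to the mean is just one simple assumption about what "representative" could mean.

```python
import numpy as np

def aggregate_profile(embeddings):
    """Collapse all encounters of one individual into a single unit-length mean vector."""
    mean = embeddings.mean(axis=0)
    return mean / (np.linalg.norm(mean) + 1e-12)

def representative_samples(embeddings, n_keep):
    """Keep the n_keep embeddings most similar to the individual's mean profile."""
    profile = aggregate_profile(embeddings)
    unit = embeddings / (np.linalg.norm(embeddings, axis=1, keepdims=True) + 1e-12)
    sims = unit @ profile                   # cosine similarity to the profile
    keep = np.argsort(-sims)[:n_keep]       # indices of the closest samples
    return embeddings[np.sort(keep)]        # preserve original encounter order

# Three hypothetical encounters with the same rhino; the last one is an outlier.
encounters = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]])
profile = aggregate_profile(encounters)
kept = representative_samples(encounters, n_keep=2)
```

A single profile uses the least memory but averages away variation such as mud or lighting. Keeping several samples costs more storage, and a strategy that favors diverse samples over typical ones might work better in practice.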

Looking forward

Building on the methods covered in this blog post, the next step is to integrate them into a unified tool that uses one or several algorithms to process images. For the specific task of identifying individuals and reporting behavioral insights, full automation is not essential. Instead, we envision a human-in-the-loop approach where the system presents the most similar matches for user confirmation. The primary goal is to make match validation the only task for the user and remove the need for manual searches across large datasets. If the method proves highly accurate, it could also facilitate batch processing, such as clustering a large dataset of unidentified images into suggested categories for the user to review and confirm.
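The batch-processing idea could look something like the sketch below: a greedy grouping of feature vectors in which each image joins the most similar existing group if it is similar enough, and otherwise starts a new one. The function name, the cosine similarity measure, and the 0.8 threshold are all assumptions for illustration; nothing like this has been built or evaluated yet.

```python
import numpy as np

def suggest_clusters(embeddings, threshold=0.8):
    """Greedy grouping: assign each embedding to the most similar existing
    group leader, or start a new group if none is similar enough."""
    unit = embeddings / (np.linalg.norm(embeddings, axis=1, keepdims=True) + 1e-12)
    leaders = []   # index of the first image in each suggested group
    labels = []    # suggested group number for every image
    for i, vec in enumerate(unit):
        sims = [float(vec @ unit[j]) for j in leaders]
        if sims and max(sims) >= threshold:
            labels.append(int(np.argmax(sims)))
        else:
            leaders.append(i)
            labels.append(len(leaders) - 1)
    return labels

# Four hypothetical unidentified images that form two obvious pairs.
unidentified = np.array([[1.0, 0.0], [0.99, 0.05], [0.0, 1.0], [0.05, 0.99]])
suggested = suggest_clusters(unidentified)
```

The output is only a suggestion: in the human-in-the-loop workflow, a ranger would still review each group and confirm or correct it.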

I'm very grateful for the opportunity to support this initiative, and eager to contribute to the evolution of wildlife identification tools within the edge domain. For instance, I envision a portable device with offline capabilities that rangers can easily carry into the field and use to identify individuals while they are monitoring.

Read more about our 1% commitment here.

During the visit, I spent a day in the Ol Pejeta Conservancy with James Mwenda, who was the caregiver and protector of the last northern white rhinos for many years. We visited the memorial for the lost rhinos (the majority lost to poaching), and also got to meet the last two females, Najin and Fatu. It was a day that will stick with me forever.