Sensor fusion is a popular technique in embedded systems where you combine data from different sensors to get a more complete or accurate view of the world around your device. This process might include using multiple sensors of the same type to build a better representation of the environment, such as using two separate cameras to create a stereoscopic image that estimates three dimensions. Alternatively, you might combine information from different types of sensors. For example, an inertial measurement unit (IMU) can estimate absolute orientation by combining data from an accelerometer, gyroscope, and magnetometer.

Sensor fusion techniques often rely on statistical principles such as the Central Limit Theorem or on algorithms such as Kalman filtering. In many circumstances, a data-driven approach using neural networks may work well, and Edge Impulse now supports combining sensor data to help you make classification decisions or even predict continuous outcomes through regression!
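To make the classical approach concrete, here is a minimal sketch of a scalar Kalman measurement update that fuses two readings of the same quantity. It assumes a static value, known noise variances, and made-up temperature readings; the function names are hypothetical and are not part of Edge Impulse.

```python
def kalman_update(estimate, variance, measurement, meas_variance):
    """Apply one scalar Kalman measurement update."""
    # The gain weights the new measurement by how uncertain we currently are.
    gain = variance / (variance + meas_variance)
    new_estimate = estimate + gain * (measurement - estimate)
    new_variance = (1.0 - gain) * variance
    return new_estimate, new_variance


def fuse_readings(readings, initial_estimate=0.0, initial_variance=1e6):
    """Fuse (measurement, variance) pairs describing one static quantity."""
    # A very large initial variance means "we know almost nothing yet".
    estimate, variance = initial_estimate, initial_variance
    for measurement, meas_variance in readings:
        estimate, variance = kalman_update(
            estimate, variance, measurement, meas_variance
        )
    return estimate, variance


# Hypothetical readings: a precise sensor (variance 0.5) and a noisy one (2.0).
readings = [(21.0, 0.5), (22.0, 2.0)]
fused, fused_variance = fuse_readings(readings)
print(f"fused estimate: {fused:.2f}, variance: {fused_variance:.2f}")
```

The fused estimate sits closer to the more precise sensor, and its variance is lower than that of either sensor alone, which is the main appeal of fusing redundant measurements.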

Ready to get started? Check out this tutorial and the accompanying example project!

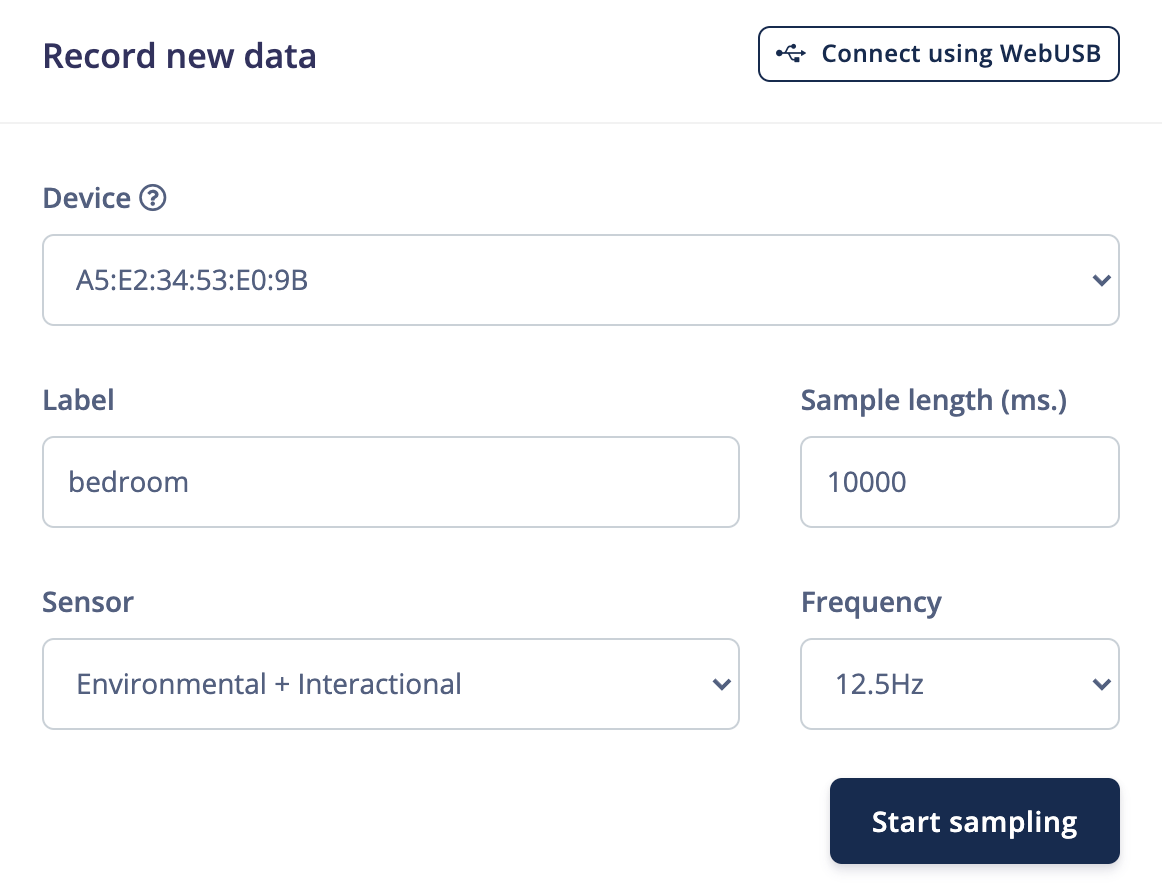

Right now, you can use the Arduino Nano 33 BLE Sense to try out these sensor fusion techniques on Edge Impulse. When you collect data, you have the option to enable different sensors on the Arduino board. For example, you can collect temperature, humidity, pressure, and light data using several of the onboard sensors.

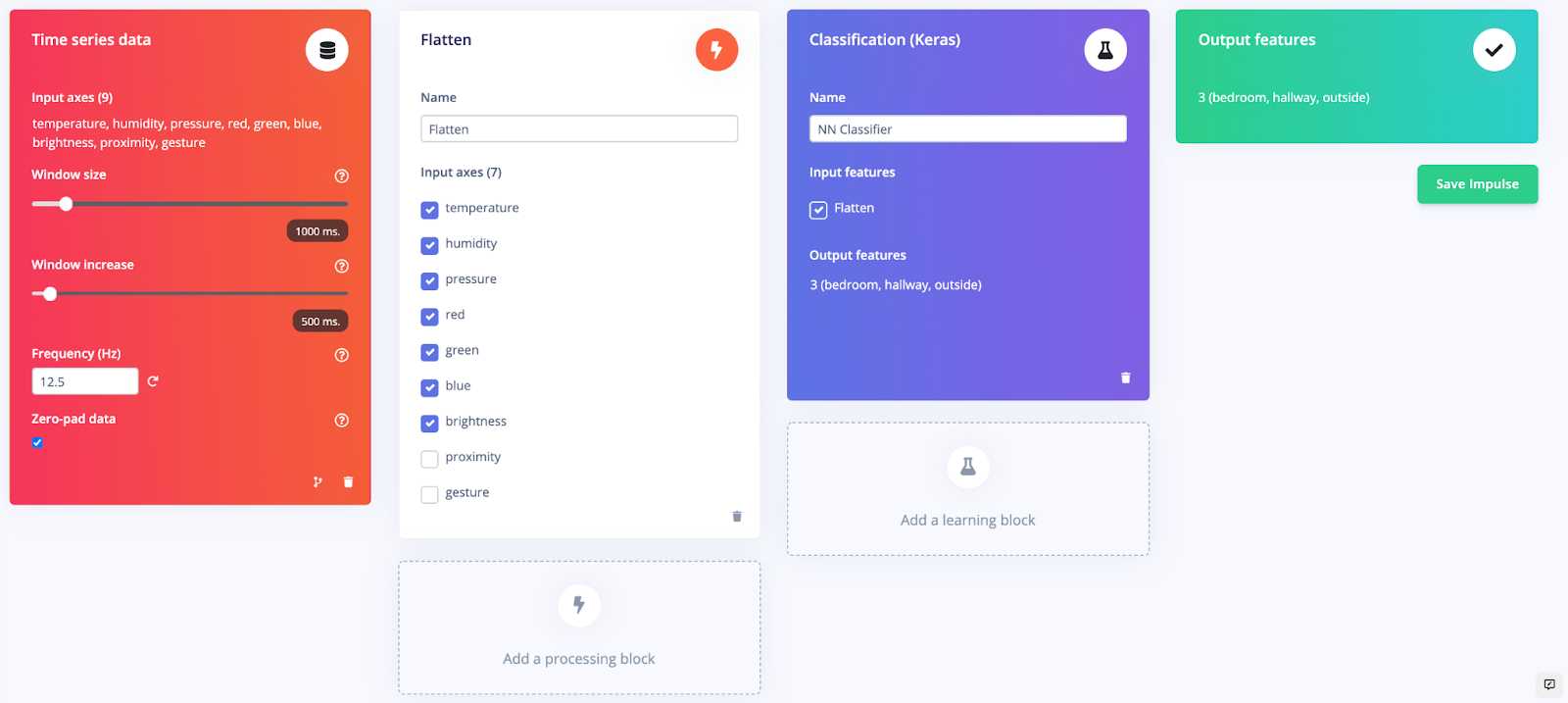

When you create an impulse, you have the option of selecting which data should be included in the training and inference process.

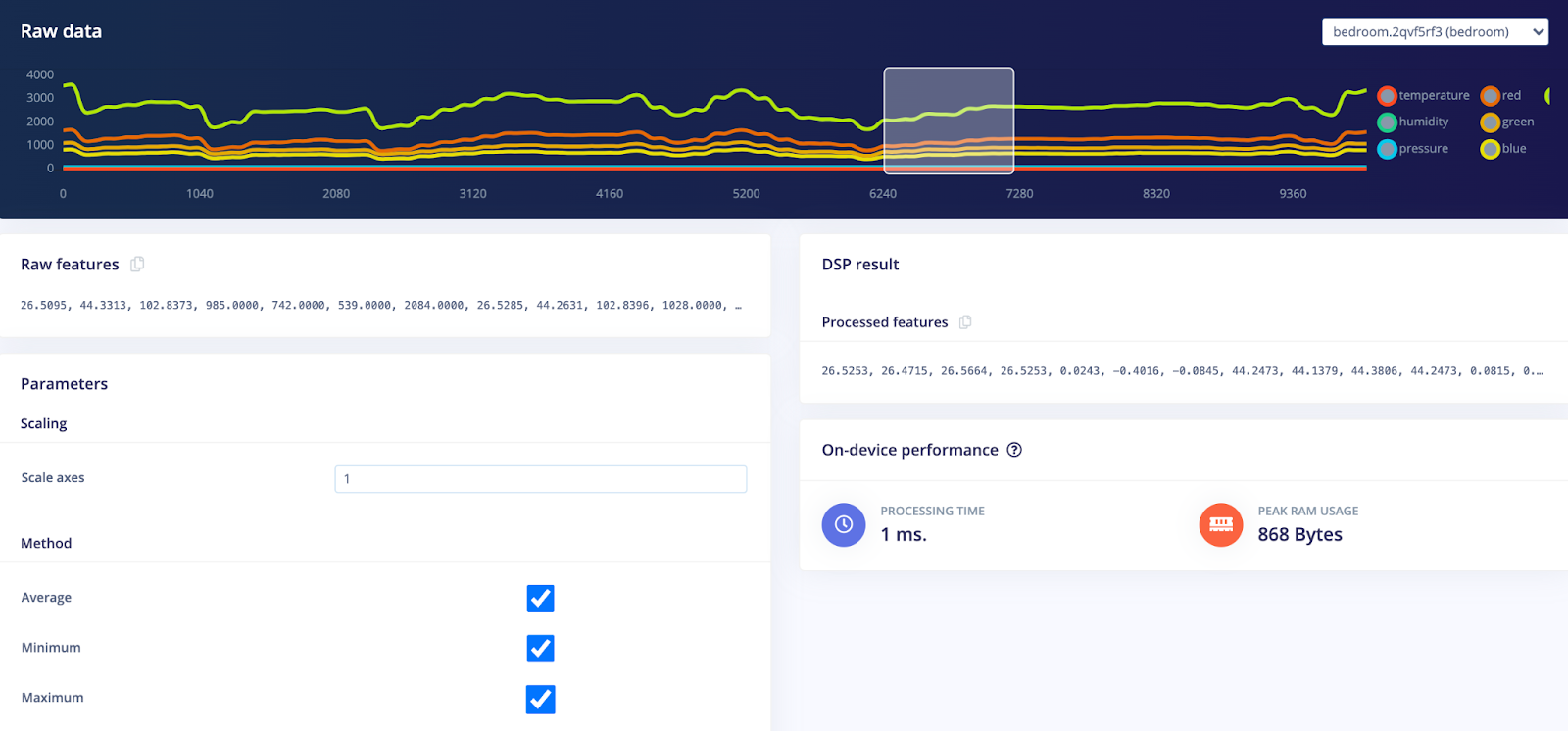

We want to classify the location of the board based on various environmental factors, so we include everything except the proximity and gesture data. Because this type of data is slow-moving, we use the Flatten preprocessing block. We only care about summary statistics such as the average, minimum, and maximum.

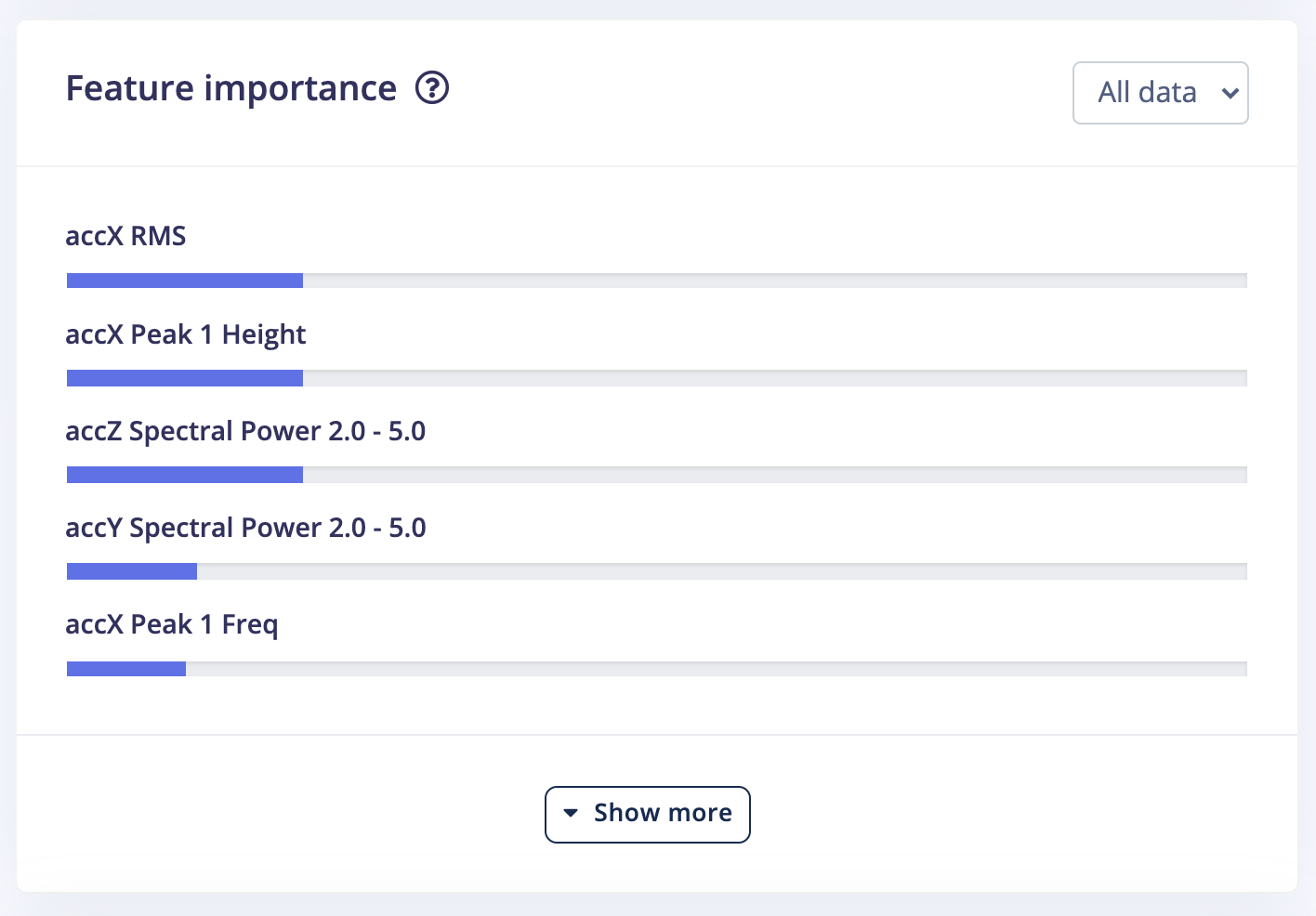
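As a rough illustration of what flattening slow-moving data means, the sketch below reduces each sensor axis in a window to its average, minimum, and maximum. This is not Edge Impulse's implementation of the Flatten block; the function name, the axis names, and the sample values are assumptions made for the example.

```python
from statistics import fmean


def flatten_window(window):
    """Reduce each axis of a window to average, minimum, and maximum.

    `window` maps an axis name to the list of samples captured for it.
    """
    features = {}
    for axis, samples in window.items():
        features[f"{axis}_avg"] = fmean(samples)
        features[f"{axis}_min"] = min(samples)
        features[f"{axis}_max"] = max(samples)
    return features


# Hypothetical window of environmental samples.
window = {
    "temperature": [21.0, 21.5, 22.0],
    "humidity": [40.0, 42.0, 41.0],
}
features = flatten_window(window)
for name, value in features.items():
    print(f"{name}: {value}")
```

Each axis collapses to three numbers regardless of how many samples the window holds, which keeps the model input small when the signal barely changes within a window.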

Sensor fusion is also a great candidate for the new anomaly detection and feature importance tools! You can see which of the various sensor inputs and calculations are the most important for determining class membership.

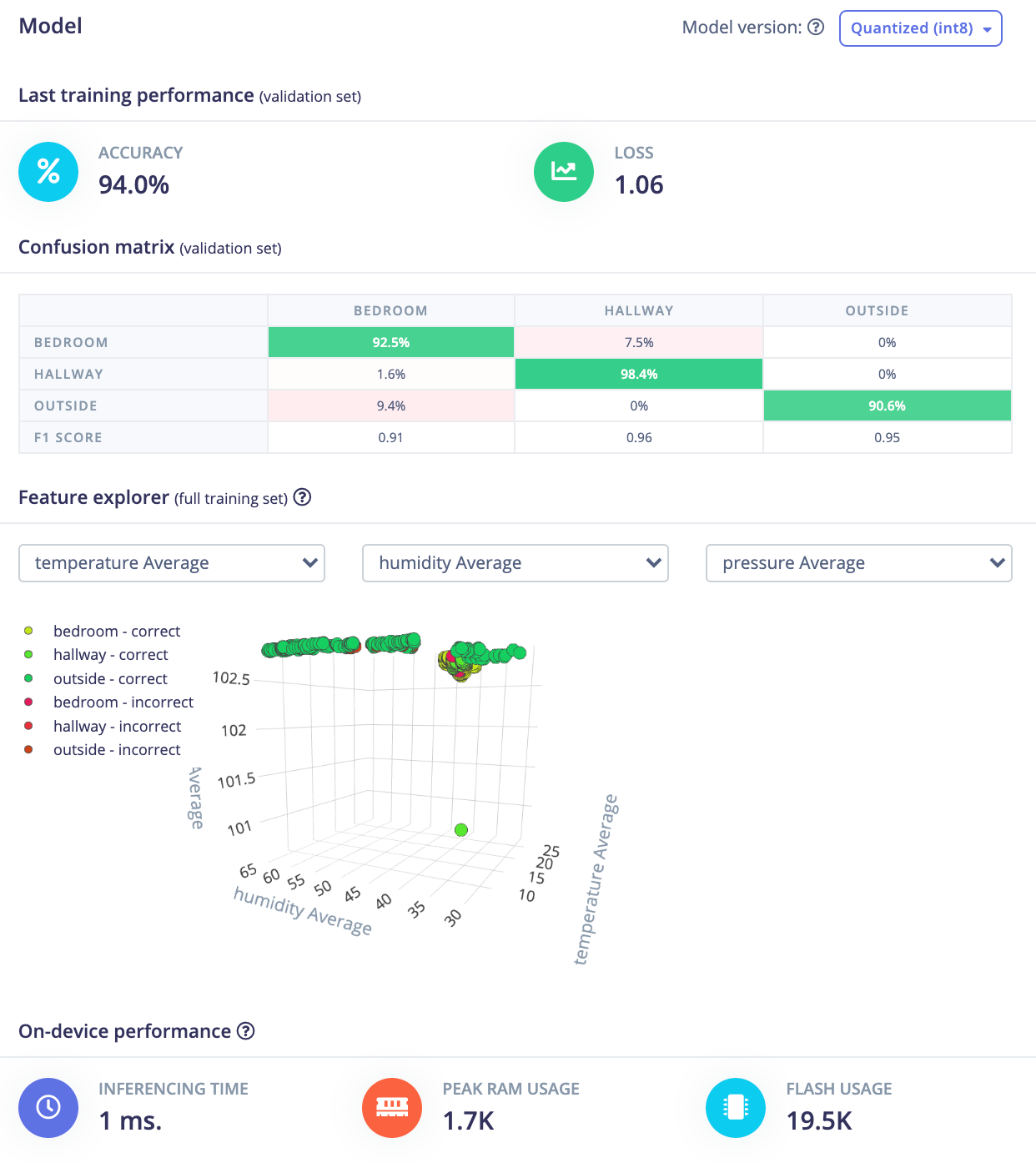

A neural network does not care where the input data came from, so long as it’s in the same order for training, testing, and inference. That means we can use the default dense neural network architecture for predicting the room in which the Arduino board is located.
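The ordering requirement can be sketched as follows: features from different sensors are placed into one vector using a single, fixed order before they reach a dense layer. The feature names, weights, and class count here are invented for illustration and do not come from the example project.

```python
import math

# Assumed feature order, shared by training, testing, and inference.
FEATURE_ORDER = ["temperature_avg", "humidity_avg", "pressure_avg", "light_avg"]


def to_vector(features, order=FEATURE_ORDER):
    """Build the model input in a fixed order, whatever the dict order is."""
    return [float(features[name]) for name in order]


def dense(inputs, weights, biases):
    """Fully connected layer: one weight row and one bias per output unit."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def softmax(logits):
    """Turn logits into probabilities, subtracting the max for stability."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


# The same readings, delivered in two different orders by hypothetical drivers.
reading_a = {"temperature_avg": 21.5, "humidity_avg": 41.0,
             "pressure_avg": 101.3, "light_avg": 12.0}
reading_b = {"light_avg": 12.0, "pressure_avg": 101.3,
             "humidity_avg": 41.0, "temperature_avg": 21.5}

# Made-up weights for three classes; a real model learns these in training.
weights = [[0.02, 0.01, 0.00, -0.03],
           [-0.01, 0.02, 0.01, 0.00],
           [0.00, -0.02, 0.00, 0.05]]
biases = [0.1, 0.0, -0.1]

probabilities = softmax(dense(to_vector(reading_a), weights, biases))
print(probabilities)
```

Because `to_vector` always applies the same order, both readings produce an identical input vector, so the network sees the data the same way it did during training.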

Once the model is trained, you can see that it can tell the difference between a bedroom, the hallway, and outside using a combination of sensor data.

Note that using the above environmental data may not be the best approach to predict location. Some rooms (e.g., bedrooms, kitchens, and living rooms) may exhibit very similar properties, so you may need to use other data if room localization is your goal. Additionally, you might need to create a custom DSP block to assist with the sensor fusion process. Check out our tutorials on building custom processing blocks to learn more.

If you’d like to try all of this yourself in Edge Impulse, head to the sensor fusion tutorial.

We are excited to see what you build with sensor fusion. Please post your project on our forum or tag us on social media @EdgeImpulse!