Monitoring traffic conditions helps governments and urban planners manage traffic and improve urban mobility. By combining sensing technologies with data analysis, authorities can gather real-time information about traffic flow, congestion levels, and transportation patterns within cities. This data helps them understand how urban transportation systems behave and make informed decisions to address traffic challenges effectively.

One of the key benefits of monitoring traffic conditions is the ability to identify areas of congestion and traffic bottlenecks. By analyzing data from sources such as traffic cameras, GPS devices, and vehicle sensors, authorities can pinpoint where congestion is most prevalent. This information allows them to take proactive measures, such as optimizing traffic signal timing, adjusting lane configurations, or building new infrastructure like road expansions or flyovers, to alleviate congestion and improve traffic flow. By addressing these bottlenecks, governments and urban planners can significantly reduce travel times, improve commuter experiences, and enhance overall urban mobility.

Furthermore, monitoring traffic conditions enables the identification of traffic patterns and trends. By analyzing historical traffic data, authorities can discern peak travel times, popular commuting routes, and traffic demand fluctuations. This information is invaluable for urban planners when designing transportation networks and making decisions related to public transit routes, bus schedules, and the placement of bike lanes and pedestrian walkways. By aligning infrastructure development and public transportation systems with observed traffic patterns, governments can create efficient and sustainable urban mobility solutions, reducing reliance on private vehicles and promoting alternative modes of transportation.

However, detecting moving vehicles is challenging for low-power devices, and properly monitoring a large city's traffic requires many such devices. With the right hardware and software platform choices, though, it is an achievable goal, as engineer Naveen Kumar recently demonstrated with a proof-of-concept project: a low-power traffic monitoring system that leverages a neuromorphic processor and the Edge Impulse Studio machine learning development platform.

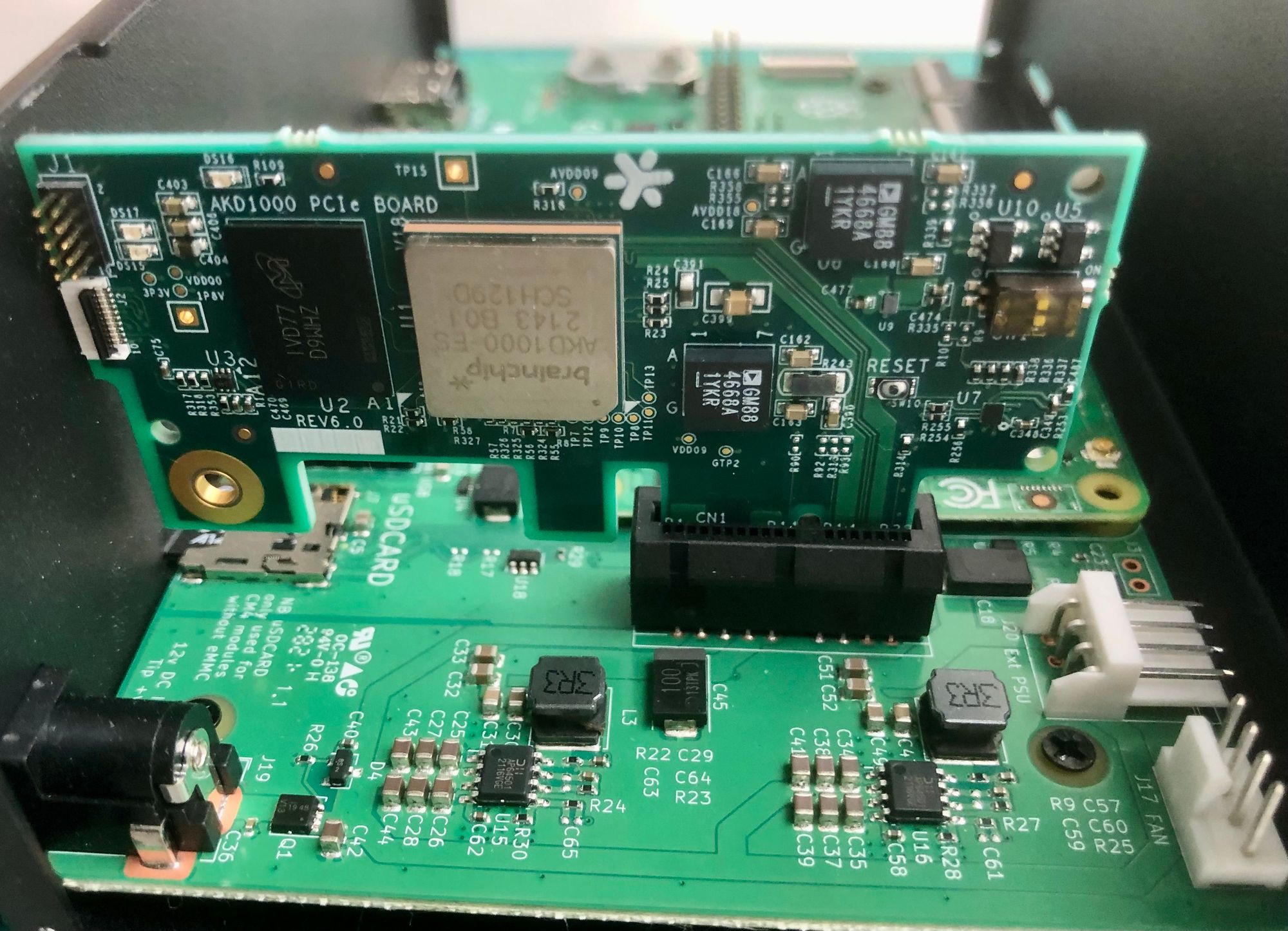

Kumar's plan was to build an object detection pipeline that could detect, locate, and count all manner of vehicles in images. He chose BrainChip's Akida Development Kit as the hardware platform for this task. The kit contains a Raspberry Pi Compute Module 4 with Wi-Fi and 8 GB of RAM, along with an Akida neuromorphic processor that uses event-based processing for improved energy efficiency. The processor also supports incremental learning and high-speed inference.

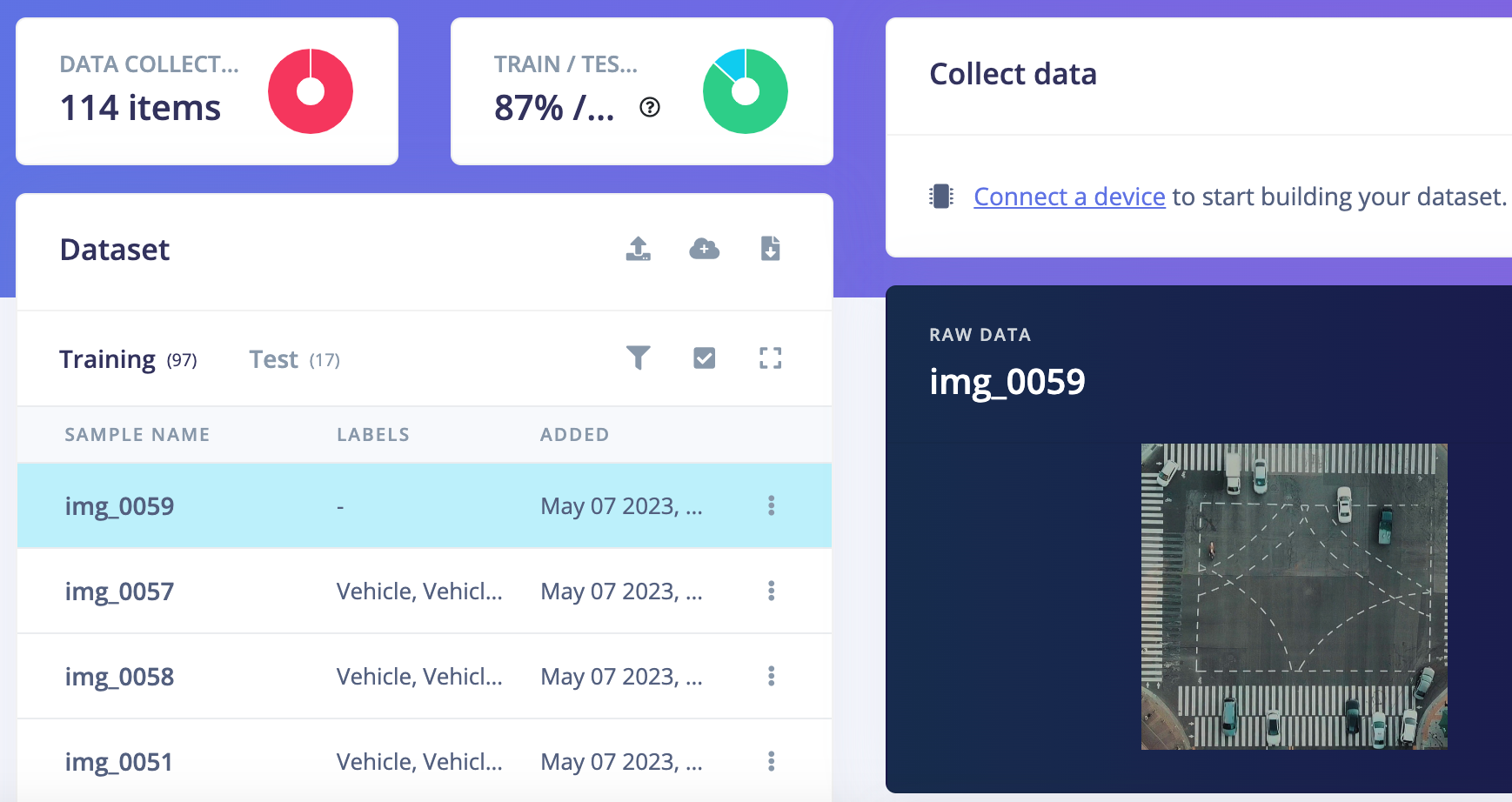

Next, a dataset of urban traffic images was needed to train the object detection model. Local laws did not allow Kumar to capture video of traffic from a drone, but fortunately, publicly available datasets of this sort do exist. He downloaded video footage that fit the bill perfectly, then wrote a Python script to extract every fifth frame as raw data. The Edge Impulse CLI Uploader was then used to upload the data to an Edge Impulse Studio project and split it into training and test sets.
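Kumar's extraction script is not reproduced in the project write-up summarized here, but the idea is easy to sketch. The Python below is a minimal example, assuming OpenCV (`cv2`) is installed; the function names, output directory, and JPEG naming scheme are hypothetical choices for illustration, not taken from the project.

```python
from typing import Any, Iterable, Iterator, Tuple, TypeVar

T = TypeVar("T")


def every_nth(frames: Iterable[T], n: int = 5) -> Iterator[Tuple[int, T]]:
    """Yield (index, frame) for every n-th frame, starting with frame 0."""
    if n < 1:
        raise ValueError("n must be >= 1")
    for index, frame in enumerate(frames):
        if index % n == 0:
            yield index, frame


def read_video_frames(path: str) -> Iterator[Any]:
    """Yield decoded frames from a video file using OpenCV."""
    import cv2  # imported lazily so every_nth works without OpenCV installed

    capture = cv2.VideoCapture(path)
    try:
        while True:
            ok, frame = capture.read()
            if not ok:  # end of stream or a read error
                break
            yield frame
    finally:
        capture.release()


def extract_frames(video_path: str, out_dir: str, n: int = 5) -> int:
    """Write every n-th frame of video_path to out_dir as JPEG; return the count."""
    import os

    import cv2

    os.makedirs(out_dir, exist_ok=True)
    written = 0
    for index, frame in every_nth(read_video_frames(video_path), n):
        cv2.imwrite(os.path.join(out_dir, f"frame_{index:06d}.jpg"), frame)
        written += 1
    return written
```

For example, `extract_frames("traffic.mp4", "raw_frames")` would write frames 0, 5, 10, and so on into `raw_frames/` (both paths are placeholders). Keeping the sampling logic in `every_nth` separate from the OpenCV I/O makes it easy to change the sampling rate, or to test it without a video file. Skipping frames also reduces near-duplicate images, since consecutive video frames differ very little.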

In order to finish the dataset preparation, the labeling queue tool was used to draw bounding boxes around all of the vehicles in each image. This can be a very laborious task, but the labeling queue tool offers an AI-powered boost that will automatically draw suggested bounding boxes. One only needs to confirm that the suggestion is correct, or make a minor tweak.

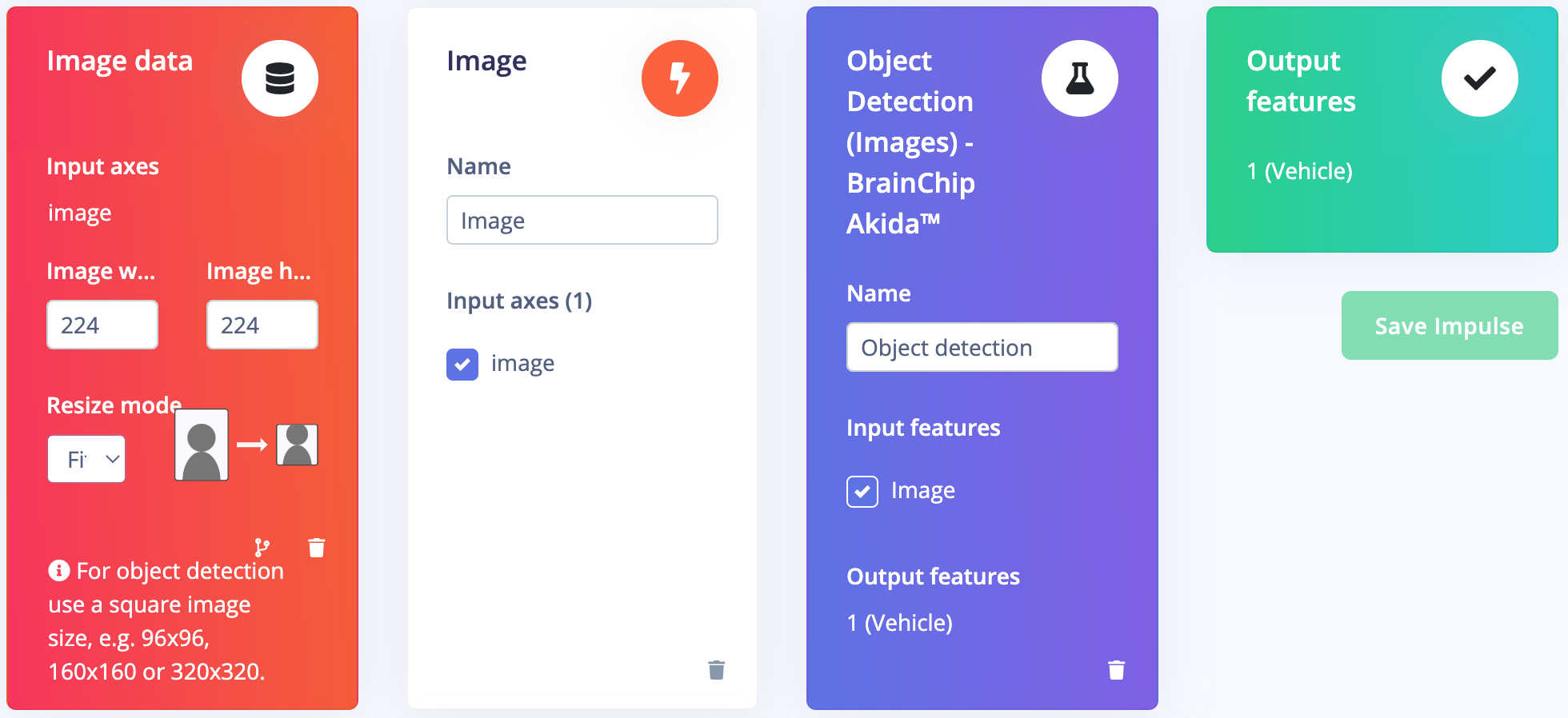

The impulse was then designed. An impulse defines how data flows through the machine learning pipeline, from raw data all the way to a prediction. It began with a preprocessing step to resize the images, which reduces the computational cost of the downstream algorithms. The resulting features were then passed to FOMO (Faster Objects, More Objects), an object detection algorithm designed for resource-constrained platforms. According to Edge Impulse, FOMO is 30 times faster than MobileNet SSD and requires less than 200 KB of RAM. In this case, a FOMO model optimized for running on the Akida processor was selected.
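FOMO does not predict full bounding boxes. It divides the input into a coarse grid (by default, each output cell covers 8×8 input pixels) and predicts a class probability for each cell, so objects are reported as centroids. The sketch below shows one simplified way to turn such a grid into vehicle centroids and a vehicle count: threshold the cells, merge 4-connected neighbors, and average their centers. It assumes a single "vehicle" class and a 0.5 threshold, and the function name is hypothetical; it illustrates the idea and is not Edge Impulse's actual post-processing code.

```python
from typing import List, Tuple

Centroid = Tuple[float, float]  # (x, y) in input-image pixels


def decode_heatmap(
    heatmap: List[List[float]],
    threshold: float = 0.5,
    cell_size: int = 8,
) -> List[Centroid]:
    """Turn a per-cell 'vehicle' probability grid into object centroids.

    Cells at or above `threshold` are grouped with their 4-connected
    neighbours; each group is reported as one object whose centroid is the
    mean of its cell centres, scaled back to pixels by `cell_size`.
    """
    rows = len(heatmap)
    cols = len(heatmap[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    centroids: List[Centroid] = []

    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or heatmap[r][c] < threshold:
                continue
            # Iterative flood fill over the connected above-threshold cells.
            stack = [(r, c)]
            seen[r][c] = True
            cells = []
            while stack:
                cr, cc = stack.pop()
                cells.append((cr, cc))
                for nr, nc in ((cr - 1, cc), (cr + 1, cc), (cr, cc - 1), (cr, cc + 1)):
                    if (
                        0 <= nr < rows
                        and 0 <= nc < cols
                        and not seen[nr][nc]
                        and heatmap[nr][nc] >= threshold
                    ):
                        seen[nr][nc] = True
                        stack.append((nr, nc))
            # Mean cell centre, converted from grid units to pixels.
            mean_r = sum(cr for cr, _ in cells) / len(cells)
            mean_c = sum(cc for _, cc in cells) / len(cells)
            centroids.append(((mean_c + 0.5) * cell_size, (mean_r + 0.5) * cell_size))
    return centroids
```

For a 4×4 grid with high scores at cells (0, 0) and (0, 1), and at cells (2, 3) and (3, 3), `decode_heatmap` returns two centroids, `[(8.0, 4.0), (28.0, 24.0)]`, so the vehicle count is simply `len(...)`, or 2. Because each cell only has to say "vehicle here or not", the model stays small, which is part of why FOMO suits constrained hardware.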

Before this model can locate vehicles in an image, it must be trained. Training was started with the click of a button, and after a short time the results were displayed to help assess the model's performance. The model achieved an F1 score of 94% on the validation data, which is excellent for a first pass. To confirm that this result did not stem from overfitting to the training data, the model testing tool was used to evaluate the model on the held-out test set, where it reached an accuracy of 100%. A perfect score on held-out data should be interpreted with some caution, but it gave Kumar enough confidence to move on to model deployment.
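For a centroid-based detector like FOMO, precision, recall, and F1 are computed by matching predicted centroids to labeled boxes. The sketch below assumes a simple greedy rule: a prediction counts as a true positive if it falls inside a ground-truth box that has not already been matched. The function names are hypothetical, and Edge Impulse's exact matching rules may differ.

```python
from typing import List, Tuple

Point = Tuple[float, float]              # predicted (x, y) centroid in pixels
Box = Tuple[float, float, float, float]  # labeled (x_min, y_min, x_max, y_max)


def match_centroids(preds: List[Point], boxes: List[Box]) -> Tuple[int, int, int]:
    """Greedily match predicted centroids to ground-truth boxes.

    Returns (true_positives, false_positives, false_negatives). Each box can
    be matched by at most one prediction.
    """
    matched = [False] * len(boxes)
    tp = 0
    for x, y in preds:
        for i, (x0, y0, x1, y1) in enumerate(boxes):
            if not matched[i] and x0 <= x <= x1 and y0 <= y <= y1:
                matched[i] = True
                tp += 1
                break
    fp = len(preds) - tp  # predictions that hit no unmatched box
    fn = len(boxes) - tp  # labeled vehicles that were never found
    return tp, fp, fn


def f1_score(tp: int, fp: int, fn: int) -> float:
    """Harmonic mean of precision and recall; 0.0 when undefined."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)
```

With predictions at (10, 10), (50, 50), and (90, 90) against boxes (0, 0, 20, 20), (40, 40, 60, 60), and (100, 100, 120, 120), `match_centroids` returns `(2, 1, 1)`. Precision and recall are both 2/3, so the F1 score is about 0.667. Because F1 penalizes both missed vehicles and false detections, it is a more informative single number than accuracy for detection tasks.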

Running the model on the device itself removes the need for a network connection and avoids the privacy and latency concerns that come with using cloud computing resources. Because the Akida Python SDK was to be used to run inference, the MetaTF model was downloaded from the Dashboard of the Edge Impulse Studio project. This file was copied to the Akida Development Kit, and a Python script was written to run real-time inference with the model.
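The structure of a real-time inference script can be sketched without tying it to a particular SDK. In the hypothetical helper below, `infer` stands in for the model call plus post-processing. On the dev kit, that call would use the Akida Python SDK to run the downloaded MetaTF model, but those SDK calls are deliberately not reproduced here. The helper records the vehicle count for each frame, along with the mean per-frame latency and overall throughput, the same two quantities Kumar reported.

```python
import time
from typing import Callable, Iterable, List, Sequence, Tuple, TypeVar

Frame = TypeVar("Frame")


def run_realtime(
    frames: Iterable[Frame],
    infer: Callable[[Frame], Sequence[Tuple[float, float]]],
) -> Tuple[List[int], float, float]:
    """Run `infer` on each frame and collect vehicle counts and timing.

    `infer` is a placeholder for the real model call plus post-processing;
    it must return a sequence of (x, y) centroids for one frame.

    Returns (counts_per_frame, mean_latency_ms, throughput_fps).
    """
    counts: List[int] = []
    latencies: List[float] = []
    start = time.perf_counter()
    for frame in frames:
        t0 = time.perf_counter()
        centroids = infer(frame)
        latencies.append(time.perf_counter() - t0)  # model time only
        counts.append(len(centroids))               # one centroid per vehicle
    elapsed = time.perf_counter() - start           # includes loop overhead
    if not counts:
        return counts, 0.0, 0.0
    mean_ms = 1000.0 * sum(latencies) / len(latencies)
    fps = len(counts) / elapsed if elapsed > 0 else float("inf")
    return counts, mean_ms, fps
```

A call might look like `run_realtime(camera_frames, infer=my_model_fn)`, where both names are placeholders. Timing the `infer` call separately from wall-clock throughput shows how much of each frame's budget goes to capture and other work outside the model.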

The finished device was tested on traffic images and accurately detected vehicles at a rate of 24 frames per second. Each inference took about 40 milliseconds, which is impressive for object detection on an edge computing platform. Power consumption during these tests was just over 37 milliwatts.
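A quick calculation shows how these figures relate. Assuming the 37 mW figure applies over the full 40 ms inference window, the per-frame time budget, the inference-only frame-rate ceiling, and the energy per inference work out to roughly:

$$
t_{\text{frame}} = \frac{1}{24\ \text{frames/s}} \approx 41.7\ \text{ms},
\qquad
f_{\max} = \frac{1}{40\ \text{ms}} = 25\ \text{frames/s}
$$

$$
E_{\text{inference}} \approx P \cdot t = 37\ \text{mW} \times 40\ \text{ms} = 1.48\ \text{mJ}
$$

If the 40 ms covers only the model, the observed 24 FPS leaves under 2 ms per frame for capture, preprocessing, and post-processing.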

Whether traffic monitoring is your thing, or you are more into autonomous vehicles, quality control, or medical diagnostics, reading Kumar’s project documentation will give you plenty of tips and tricks to get your own project off the ground. And if you want a boost, try cloning his public Edge Impulse Studio project as a starting point. Don’t worry, copying isn’t against the rules.
