According to a recent study, deaf pedestrians are at a higher risk of being involved in a traffic accident than their hearing counterparts. The study, conducted by the National Association of the Deaf (NAD), found that deaf individuals are three times more likely to be hit by a car while crossing the street.

One of the main reasons for this increased risk is that deaf pedestrians cannot access the auditory cues that hearing pedestrians rely on to navigate the streets. Emergency vehicle sirens and car horns, for example, are crucial warning systems for hearing pedestrians, but they are not accessible to those who are deaf.

The NAD is calling on car manufacturers and city planners to take steps to make the streets safer for deaf pedestrians. This includes incorporating visual cues, such as flashing lights, into traffic signals and cars, as well as providing more accessible pedestrian crossing signals. Unfortunately, even if these sorts of measures are adopted, they will have a limited impact because they have to be seen to be of any use.

Machine learning enthusiast Solomon Githu believes that deaf pedestrians would be better served by personal, wearable assistive devices that provide haptic feedback. Such a device could warn the wearer without requiring them to actively watch for a signal, so the alert could not be missed. He demonstrated what he had in mind by building a proof-of-concept device that uses Edge Impulse Studio to create an audio classification algorithm that can recognize the sound of emergency vehicle sirens and car horns. When one of these sounds is detected, the device triggers a tactile alert to warn the wearer.

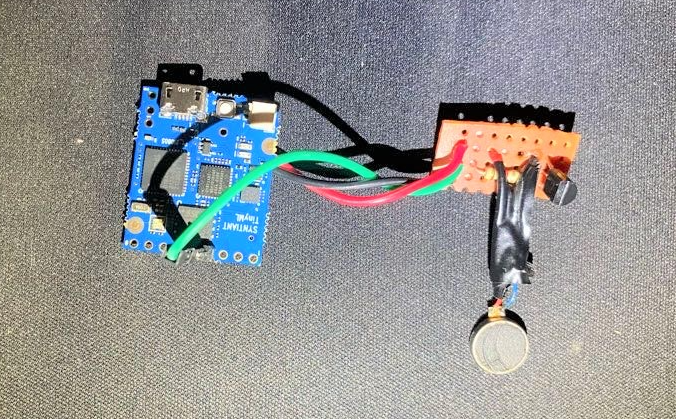

A powerful, yet small and energy-efficient hardware platform was needed to bring this device to life. Githu chose Syntiant’s TinyML Board for this reason. The board comes with a SAM D21 Cortex-M0+ 32-bit low-power Arm processor, and also the NDP101 Neural Decision Processor, which has been designed specifically to accelerate machine learning algorithm execution. It also has an on-board, high-quality microphone that makes it easy to capture environmental audio samples. The final essential component was a vibration motor, which provides the tactile feedback.

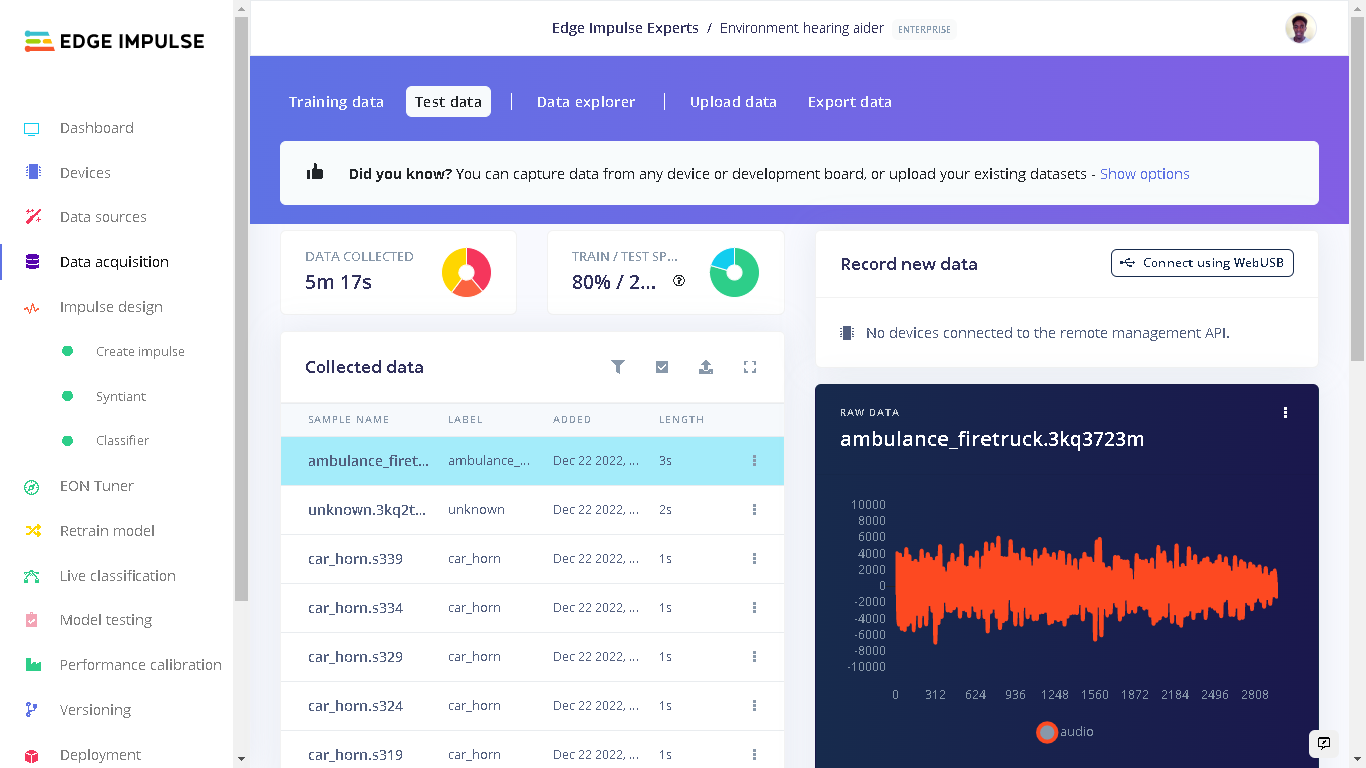

An audio classification model must be trained to recognize certain sounds of interest before it can be put to work, so Githu got busy building a training dataset. Collecting a robust dataset of emergency vehicle siren and car horn sounds from scratch would be a challenge, so existing, publicly available datasets were sought out. Fortunately, such datasets are not hard to come by, and a pair of large audio databases was quickly tracked down. In addition to the two classes previously mentioned, a third background noise class, consisting of the sounds of traffic, people speaking, machines, and more, was created. A total of 25 minutes of audio was collected, with 20 minutes set aside for training and five minutes for model validation.
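The 20 and 5 minute figures work out to an 80/20 split. The article does not say how clips were assigned to each set, so the following is only a minimal sketch of one common approach: holding out roughly 20% of each class's audio by duration. The `split_by_duration` helper, the clip tuples, and the file names are all hypothetical.

```python
import random
from collections import defaultdict


def split_by_duration(clips, test_fraction=0.2, seed=0):
    """Split (name, label, seconds) clips into train and test lists.

    Roughly `test_fraction` of each label's total duration goes to the test
    set, so every class is represented in both sets.
    """
    by_label = defaultdict(list)
    for clip in clips:
        by_label[clip[1]].append(clip)

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, test = [], []
    for label in sorted(by_label):
        group = by_label[label]
        rng.shuffle(group)
        target = test_fraction * sum(seconds for _, _, seconds in group)
        held_out = 0.0
        for clip in group:
            if held_out < target:
                test.append(clip)
                held_out += clip[2]
            else:
                train.append(clip)
    return train, test


# Hypothetical dataset: 50 ten-second clips per class, 25 minutes in total.
clips = [(f"{label}_{i:02d}.wav", label, 10.0)
         for label in ("siren", "horn", "noise") for i in range(50)]
train, test = split_by_duration(clips)
train_minutes = sum(c[2] for c in train) / 60
test_minutes = sum(c[2] for c in test) / 60
print(f"train: {train_minutes:.1f} min, test: {test_minutes:.1f} min")
```

Splitting per class rather than over the whole pool keeps a rare class from ending up entirely in one set.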

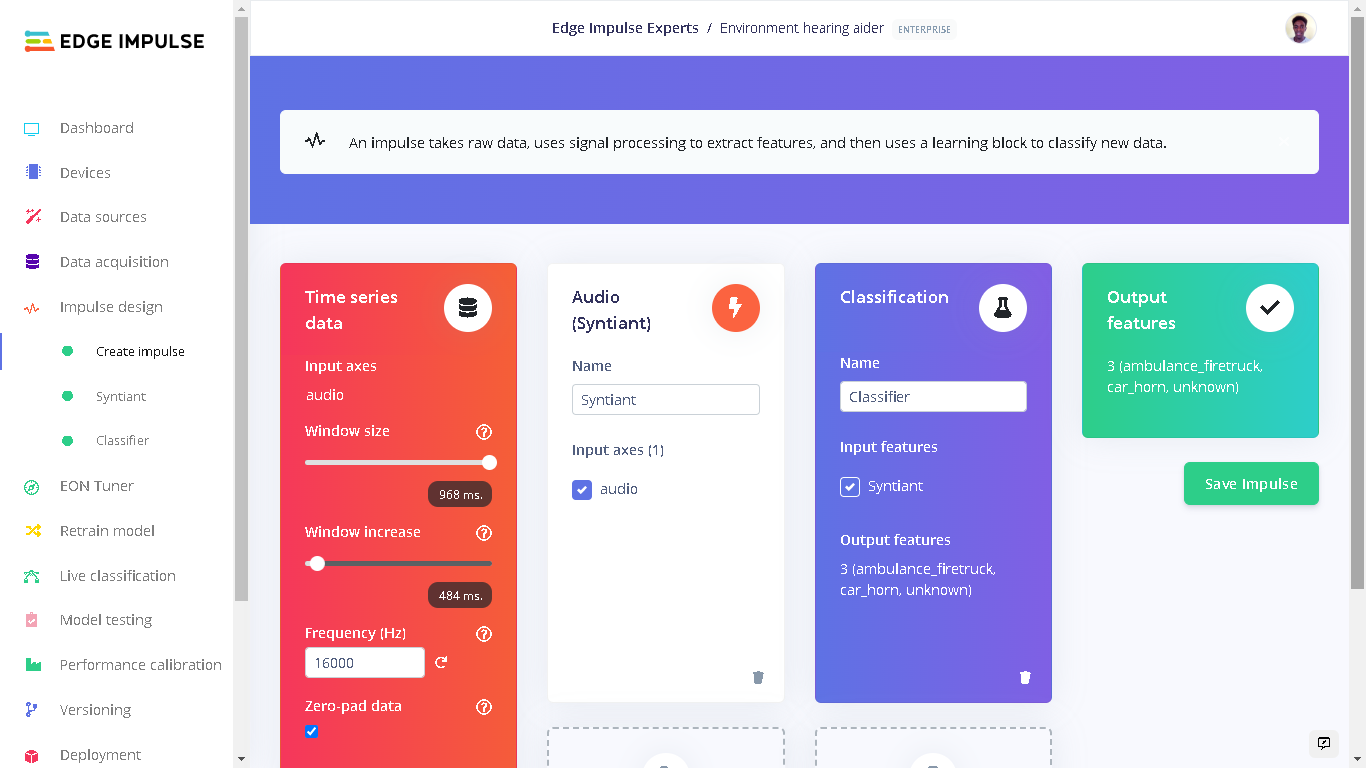

The data was uploaded to Edge Impulse Studio using the data acquisition tool, then the impulse design tab was used to start building the analysis pipeline. The Syntiant TinyML Board is fully supported by Edge Impulse, so there are some preprocessing steps designed specifically to deal with sensor data from this board. These preprocessing steps slice the input audio data into 968 millisecond segments, then calculate the Log Mel-filterbank energy features. Extracting features from the data reduces memory and processing requirements, and also tends to increase the accuracy of the resulting model. Finally, a neural network classifier was added to translate the audio features into a prediction of which class (siren, horn, or background noise) each segment belongs to.
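To make the preprocessing step more concrete, here is a rough sketch of window slicing followed by log Mel-filterbank energies. It is not Edge Impulse's processing block: the function names, the 16 kHz and 8 kHz sample rates, the eight filters, and the naive DFT are all assumptions chosen to keep the example short and dependency-free.

```python
import cmath
import math


def slice_windows(samples, sample_rate, window_ms=968, stride_ms=968):
    """Cut a sample list into fixed-length windows, dropping any short tail."""
    size = sample_rate * window_ms // 1000
    step = sample_rate * stride_ms // 1000
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, step)]


def hz_to_mel(hz):
    return 2595.0 * math.log10(1.0 + hz / 700.0)


def mel_to_hz(mel):
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def power_spectrum(frame):
    """Naive DFT power spectrum (fine for short frames, slow for long ones)."""
    n = len(frame)
    spectrum = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                  for t, x in enumerate(frame))
        spectrum.append(abs(acc) ** 2 / n)
    return spectrum


def log_mel_energies(frame, sample_rate, num_filters=8):
    """Log of the energy captured by each triangular, mel-spaced filter."""
    spectrum = power_spectrum(frame)
    top = hz_to_mel(sample_rate / 2)
    # num_filters + 2 edge frequencies, evenly spaced on the mel scale
    edges = [mel_to_hz(top * i / (num_filters + 1))
             for i in range(num_filters + 2)]
    features = []
    for m in range(num_filters):
        left, center, right = edges[m], edges[m + 1], edges[m + 2]
        energy = 0.0
        for k, power in enumerate(spectrum):
            freq = k * sample_rate / len(frame)
            weight = min((freq - left) / (center - left),
                         (right - freq) / (right - center))
            energy += max(0.0, weight) * power
        features.append(math.log(energy + 1e-10))  # small offset avoids log(0)
    return features


# One second of silence at an assumed 16 kHz yields a single 968 ms window.
windows = slice_windows([0.0] * 16000, sample_rate=16000)

# A short 1 kHz test tone sampled at an assumed 8 kHz.
tone = [math.sin(2 * math.pi * 1000 * t / 8000) for t in range(64)]
features = log_mel_energies(tone, sample_rate=8000)
```

Each frame collapses to a handful of numbers, one per filter, which is why feature extraction cuts memory and compute so sharply compared with feeding raw samples to the network.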

The number of training cycles and the learning rate hyperparameter were adjusted, then the model training process was initiated. A minute or so later, training was complete, and a confusion matrix was displayed to help in assessing how well the model was performing. Right off the bat, the model was classifying samples with a greater than 97% average accuracy rate. To confirm that this result was not simply due to overfitting of the model to the training data, the model testing tool was also used. This validates the model’s performance against a dataset that was excluded from the training process. In this case, the average accuracy was again reported as being over 97%, so the project was looking like a success!
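For readers unfamiliar with confusion matrices, the sketch below shows how one is tallied and how overall accuracy and per-class recall fall out of it. The labels mirror the three classes in this project, but the predictions are made up for illustration and are not the project's results; the helper names are hypothetical.

```python
from collections import Counter

LABELS = ["siren", "horn", "noise"]


def confusion_matrix(y_true, y_pred, labels=LABELS):
    """Rows are true classes, columns are predicted classes."""
    counts = Counter(zip(y_true, y_pred))
    return [[counts[(t, p)] for p in labels] for t in labels]


def accuracy(matrix):
    """Fraction of samples on the diagonal (correctly classified)."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total if total else 0.0


def recall_per_class(matrix, labels=LABELS):
    """For each true class, the share of its samples that were recognized."""
    return {label: (row[i] / sum(row) if sum(row) else 0.0)
            for i, (label, row) in enumerate(zip(labels, matrix))}


# Made-up predictions for illustration only.
y_true = ["siren"] * 4 + ["horn"] * 3 + ["noise"] * 3
y_pred = ["siren", "siren", "siren", "noise",
          "horn", "horn", "horn",
          "noise", "noise", "horn"]

matrix = confusion_matrix(y_true, y_pred)
print(matrix)
print(accuracy(matrix))
print(recall_per_class(matrix))
```

For a safety device, per-class recall matters more than the headline accuracy figure: a missed siren is a far costlier error than a false alarm triggered by background noise.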

A few additional tests were performed using data that Githu recorded while playing siren sounds from his computer. These were accurately classified, so the only thing left to do was deploy the model to the hardware. This is a very important step for a wearable device with a microphone that is continuously collecting audio. Sending that data to the cloud would have some very serious privacy implications, but by running the model locally, the audio is not recorded and never leaves the device.

As previously mentioned, the Syntiant TinyML Board is fully supported by Edge Impulse, so deployment was as easy as clicking on the deployment tab, then selecting the “Syntiant TinyML” option. This builds a firmware image containing the entire machine learning classification pipeline that can be flashed to the board. The deployment process also offers a chance to configure posterior parameters, which are used to tune the precision and recall of the neural network activations and make for an even more robust classification pipeline.
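The article does not describe which posterior parameters the Syntiant deployment exposes, so the following is only a generic illustration of the idea, not Syntiant's or Edge Impulse's implementation. It assumes an alert should fire only when a class stays above a confidence threshold for several consecutive frames; `detect_events`, `threshold`, and `min_consecutive` are hypothetical names.

```python
def detect_events(posteriors, threshold=0.8, min_consecutive=3, ignore=("noise",)):
    """Return (frame_index, label) pairs for sustained, confident detections.

    `posteriors` is a list of dicts mapping each label to its probability
    for one frame. An event fires once per run, at the frame where the same
    label has stayed above `threshold` for `min_consecutive` frames.
    """
    events = []
    run_label, run_length = None, 0
    for index, frame in enumerate(posteriors):
        label = max(frame, key=frame.get)
        if label in ignore or frame[label] < threshold:
            run_label, run_length = None, 0  # run broken, start over
            continue
        if label == run_label:
            run_length += 1
        else:
            run_label, run_length = label, 1
        if run_length == min_consecutive:
            events.append((index, label))  # this is where a motor would buzz
    return events


# Made-up classifier outputs for eight consecutive frames.
frames = [
    {"siren": 0.10, "horn": 0.05, "noise": 0.85},
    {"siren": 0.90, "horn": 0.05, "noise": 0.05},
    {"siren": 0.85, "horn": 0.05, "noise": 0.10},
    {"siren": 0.95, "horn": 0.02, "noise": 0.03},
    {"siren": 0.90, "horn": 0.05, "noise": 0.05},
    {"siren": 0.10, "horn": 0.10, "noise": 0.80},
    {"siren": 0.05, "horn": 0.90, "noise": 0.05},
    {"siren": 0.10, "horn": 0.70, "noise": 0.20},
]
events = detect_events(frames)
print(events)
```

Raising `threshold` or `min_consecutive` trades recall for precision: fewer false buzzes from brief, ambiguous sounds, at the cost of reacting later or missing short horn blasts.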

With the work all wrapped up, a small device that can be strapped to the wrist was created that successfully gives a tactile warning when sirens or car horns are detected. While it may work great, the appearance is not exactly something anyone would want to wear on their wrist every day, so Githu is working to package the components up in a smartwatch-style case.

Did this project spark some ideas for audio classification-based devices of your own? If so, reading through Githu’s documentation would be a great next step to take. Or if you have some experience with Edge Impulse Studio already, you can jump right into the public project and make a clone.
