The Food and Drug Administration (FDA) regulates the most sensitive and impactful medical drugs, devices, and substances that the public uses. To ensure public safety, it has developed some of the most stringent review and approval procedures. The meticulous steps for bringing a new medical device to market include preclinical research, risk classification, clinical trials, marketing submissions (510(k), PMA, or De Novo), post-market surveillance, and other processes that can take considerable time and resources. For conventional medical devices, this system has been refined and is well understood by product makers in the medical industry. The latest technologies, however, use advanced software, such as artificial intelligence (AI) and machine learning (ML), which is more complicated to review and regulate. The FDA refers to this category as “Software as a Medical Device” (SaMD).

To help establish best practices for SaMD submissions, the FDA has created and distributed a draft guidance: “Marketing Submission Recommendations for a Predetermined Change Control Plan for Artificial Intelligence/Machine Learning (AI/ML)-Enabled Device Software Functions” (fda.gov/media/166704/download). The draft is intended to solicit comments from stakeholders in the category. Edge Impulse has reviewed the suggested guidelines; here is our summary, along with our own perspectives on implementing machine learning effectively when building devices.

SaMD Marketing Submission Recommendations: Executive Summary

This draft guidance from the FDA is designed to provide recommendations for a Predetermined Change Control Plan (PCCP) for AI/ML-Enabled Device Software Functions. The FDA’s commitment is to develop and apply innovative regulatory approaches to ensure the safety and effectiveness of medical device software and other digital health technologies. The focus is particularly on software that incorporates AI and ML, which are becoming increasingly important in many medical devices.

Definitions: Several key terms are defined in the guidance:

- Device Software Function (DSF): A component or feature of a medical device, recognized by official health standards, designed to diagnose, treat, or prevent disease in humans or animals.

- Machine Learning-Enabled Device Software Function (ML-DSF): A DSF that implements an ML model trained with ML techniques.

- Predetermined Change Control Plan (PCCP): A document describing the planned modifications to the ML-DSF. It contains a Description of Modifications, a Modification Protocol, and an Impact Assessment.

- Training Data: Data used by the ML-DSF manufacturer in procedures and ML training algorithms to build an ML model.

- Tuning Data: Data used by the ML-DSF manufacturer to evaluate a small number of trained ML-DSFs to explore different architectures or hyperparameters.

Modification Protocol: Considerations that manufacturers should address in a Modification Protocol include:

- Re-training: What could prompt re-training, such as when new data reaches a certain size, when a drift is observed, or at regular intervals?

- Performance Evaluation: What are the triggers for initiating performance evaluation of a re-trained ML model or modified ML-DSF?

- Update Procedures: Does the proposed modification necessitate a different software verification and validation plan from that used for the version of the device without any modifications implemented?

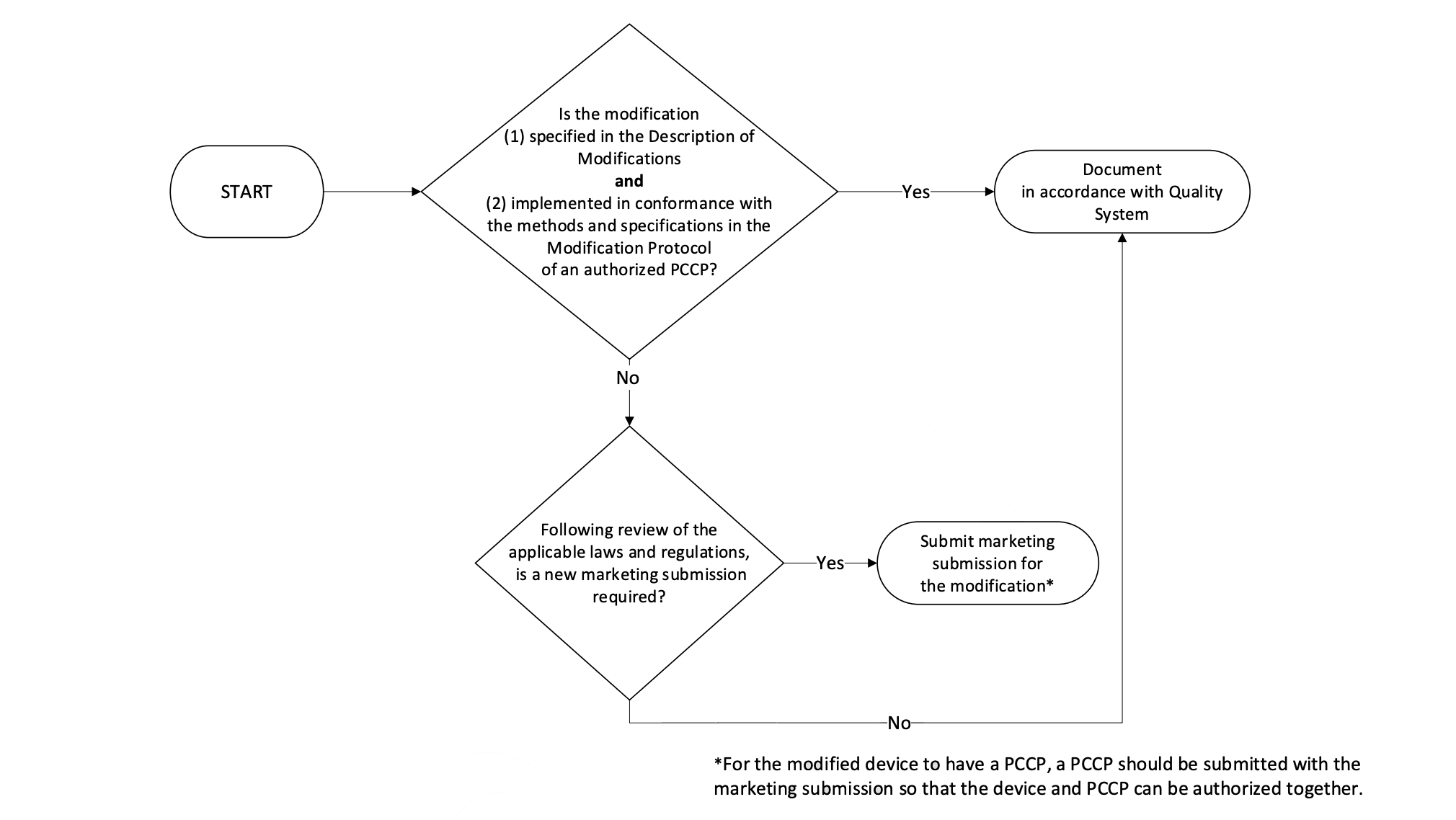

- Implementation of Modifications: A flowchart is provided to guide manufacturers on whether a new marketing submission is required for modifications to a device with an Authorized PCCP. If the modification is specified in the Description of Modifications Document and implemented in conformance with the Quality System of an authorized PCCP, a new marketing submission may not be required.

Modifying a PCCP for an Authorized Device: The guidance states that modifications to an authorized PCCP will generally require a new marketing submission for the device, which will include the modified PCCP. This is because the modifications described in the PCCP include device changes that would otherwise require a PMA supplement, De Novo submission, or new 510(k) premarket notification.

Post-Authorization Modification Scenarios: The guidance provides examples of modification scenarios and how they should be handled. For instance, if a modification related to the device’s use and performance was not specified in the PCCP, a new marketing submission would be required.

Edge Impulse Perspectives: MLOps

To help ensure that devices continue to work as described after updates have been made, the PCCP requires specific practices to be described, accounted for, and adhered to in order for changes to be allowed. These practices are represented in the four components of the modification protocol:

- Data management practices

- Re-training practices

- Performance evaluation

- Update procedures

In machine learning, the definition and execution of repeatable systems for dataset and model improvement and evaluation is part of an increasingly important industry practice known as Machine Learning Operations — or MLOps.

Google describes MLOps as “an ML engineering culture and practice that aims at unifying ML system development (Dev) and ML system operation (Ops). Practicing MLOps means that you advocate for automation and monitoring at all steps of ML system construction, including integration, testing, releasing, deployment and infrastructure management.”

In practice, MLOps is implemented as a combination of policy, procedure, and tooling. It provides both structure and a technical foundation for managing the complexity and risk involved with machine learning engineering.

The PCCP requirements are essentially a codification of MLOps into regulation: they require that an organization has implemented key MLOps processes and is documenting their application. The components of the modification protocol reinforce important best practices for machine learning engineering, making them helpful guidelines that will increase the likelihood of project success.

Many sophisticated software tools are available that can help implement MLOps practices, including some — like Edge Impulse — that are designed specifically to help with projects involving health applications, sensor datasets, and embedded software engineering.

The close alignment between the PCCP and MLOps best practices means that MLOps tools will be invaluable in defining, documenting, and adhering to PCCPs, since they provide the technological underpinning for satisfying the four components of the modification protocol.

In the remainder of this article, we’ll explore the four components of the PCCP modification protocol and how MLOps tools can support their implementation.

Data management practices

The FDA guidance describes data management practices in the following way:

“The data management practices in a Modification Protocol should outline how those new data will be collected, annotated, curated, stored, retained, controlled, and used by the manufacturer for each modification. The data management practices in a Modification Protocol should also clarify the relationship between the Modification Protocol data and the data used to train and test the initial and subsequent versions of the ML-DSF. It should also describe the control methods employed to curb the potential for data or performance information leaking into the development process during modification development or assessment.”

In practical terms, it specifies the following information that must be included in the submission:

In general, this information should describe: how data will be collected, including clinical study protocols with inclusion/exclusion criteria; information on how data will be processed, stored, and retained; the process that will be followed to determine the reference standard; the quality assurance process related to the data; the data sequestration strategies that will be followed during data collection to separate the data into training and testing sets; and the protocols in place to prevent access during the training and tuning process to data intended for performance testing.

These are all elements that MLOps tools are designed to help with. For example, MLOps tools can be used to:

- Track the evolution of datasets over time, including the origins of individual datapoints and the studies they originate from.

- Define how data is stored and retained.

- Describe the pipelines that are used to transform raw data into extracted features suitable for training.

- Provide traceability that shows which data was used to train which model.

- Prove that there is no leakage of data, for example between test and training datasets.

- Provide accounting of how validation is used, and whether leakage may have occurred through improper use of validation procedures.

- Store and analyze metadata to show that the data is complete, balanced, and representative.
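As an illustration of the leakage check mentioned in the list above, here is a minimal Python sketch. It is not taken from the FDA guidance or from any particular MLOps tool: the `Record` type, its `subject_id` and `payload` fields, and the sample values are all hypothetical. It assumes leakage can show up in two ways, either as the same subject appearing in both splits or as byte-identical samples appearing in both.

```python
import hashlib
from dataclasses import dataclass
from typing import List, Set, Tuple


@dataclass(frozen=True)
class Record:
    subject_id: str  # hypothetical subject/patient identifier
    payload: bytes   # raw sensor data for one sample


def content_hash(record: Record) -> str:
    """SHA-256 of the raw payload, used to spot byte-identical samples."""
    return hashlib.sha256(record.payload).hexdigest()


def find_leakage(train: List[Record], test: List[Record]) -> Tuple[Set[str], Set[str]]:
    """Return (subject IDs, content hashes) present in both splits."""
    shared_subjects = {r.subject_id for r in train} & {r.subject_id for r in test}
    shared_hashes = {content_hash(r) for r in train} & {content_hash(r) for r in test}
    return shared_subjects, shared_hashes


# Illustrative data: subject "s02" appears in both splits with different samples.
train = [Record("s01", b"\x01\x02"), Record("s02", b"\x03\x04")]
test = [Record("s03", b"\x05\x06"), Record("s02", b"\x07\x08")]

shared_subjects, shared_hashes = find_leakage(train, test)
print("Subjects in both splits:", shared_subjects)
print("Identical samples in both splits:", len(shared_hashes))
```

A check like this could run automatically whenever a dataset version is created, with its result stored alongside the dataset as evidence that the test set stayed sequestered.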

Re-training practices

The FDA has the following to say about re-training practices:

“The re-training practices component of a Modification Protocol should identify the processing steps that are subject to change for each modification and the methods that will be used by the manufacturer to implement modifications to the ML-DSF. In addition, if re-training involves ML architecture modifications (e.g., in a neural network, modifications to training hyperparameters or the number of nodes, layers, etc.), the re-training practices component of a Modification Protocol should also describe the rationale or the justification for each specific architecture modification (e.g., 8-bit quantization to support microcontroller architectures, as a trade-off for running models more efficiently).”

The submission must include the following information:

In general, this information should identify the objective of the re-training process, provide a description of the ML model, identify the device components that may be modified, outline the practices that will be followed (e.g., data sequestration strategies during re-training), and identify any triggers for re-training (e.g., when new data reaches a certain size or when a drift in data is observed over time).

MLOps tools are designed to help with these specific needs. For example, they can:

- Track training runs, including the resulting metrics (and how they improve after successive re-training) and the exact data that was used for each run.

- Keep track of model architecture changes, and their impact on evaluation, providing justification for modifications as required by the Modification Protocol.

- Allow auditing of changes to ensure they are in line with what is permitted according to the submitted PCCP.
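The re-training triggers named in the guidance (new data reaching a certain size, or drift in the data) can be written down as explicit, auditable rules. The sketch below is one possible way to do that in Python. The function names, the thresholds, and the drift statistic (a standardized shift of the mean of a single feature) are our own assumptions for illustration; the guidance does not prescribe any specific drift measure.

```python
from statistics import mean, stdev
from typing import List, Sequence


def standardized_mean_shift(reference: Sequence[float], recent: Sequence[float]) -> float:
    """Shift of the recent mean from the reference mean, in reference standard deviations."""
    spread = stdev(reference)  # requires at least two reference values
    gap = abs(mean(recent) - mean(reference))
    if spread == 0:
        return 0.0 if gap == 0 else float("inf")
    return gap / spread


def retraining_triggers(
    new_samples: int,
    reference: Sequence[float],
    recent: Sequence[float],
    min_new_samples: int = 500,  # assumed threshold, would be fixed in the PCCP
    max_shift: float = 0.5,      # assumed threshold, would be fixed in the PCCP
) -> List[str]:
    """Return the names of all triggers that fired (empty list: no re-training)."""
    reasons = []
    if new_samples >= min_new_samples:
        reasons.append("new_data_volume")
    if standardized_mean_shift(reference, recent) > max_shift:
        reasons.append("drift")
    return reasons


# Illustrative values for one feature (e.g. a resting heart rate in bpm).
reference = [70.0, 72.0, 68.0, 71.0, 69.0]
recent = [75.0, 76.0, 74.0, 77.0, 73.0]

print(retraining_triggers(120, reference, recent))  # ['drift']
```

Returning the list of reasons, rather than a bare yes/no, makes it straightforward to log why each re-training run was started.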

Performance evaluation

Evaluation is described as follows:

“Performance evaluation should include the plans for verification and validation of the entire device following ML-DSF modifications for each individual modification and in aggregate for all implemented modifications. This includes, but is not limited to, ML model testing protocols comparing the newly modified device to both the original device (the version of the device without any modifications implemented) and the last modified version of the device. For example, for device software functions that drive hardware functionality, performance evaluation should include not only the device software functions, but also the effect of the modifications on hardware functionality. The content of this section in a Modification Protocol should provide details on the study design, performance metrics, pre-defined acceptance criteria, and statistical tests for each planned modification. More comprehensive testing can potentially support a broader set of proposed modifications.”

In terms of the submission, the following is required:

“In general, this information should describe how performance evaluation will be triggered; how sequestered test data representative of the clinical population and intended use will be applied for testing; what performance metrics will be computed; and what statistical analysis plans will be employed to test hypotheses relevant to performance objectives for each modification. In addition, the Modification Protocol should affirmatively state that if there is a failure in performance evaluation for a specific modification, the failure(s) will be recorded, and the modification will not be implemented.”

MLOps tools are ideal for providing the required information. They are able to:

- Codify and automate the evaluation process, ensuring that evaluation is always performed in the appropriate way for every variant of the model that is produced. Some metrics that can be utilized for this include accuracy, precision, recall and F1 score.

- Track the results of evaluation over time, including performance across different slices of the populations of concern.

- Provide convenient reporting of evaluation results to stakeholders, including domain experts, clinical researchers, and the FDA.
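To make this concrete, the sketch below computes the metrics named above for a binary classifier and then applies a simple acceptance gate. It is a simplified illustration, not the procedure defined by the guidance: the acceptance rule (meet a pre-defined floor and do not fall below either the original or the last modified version) and all numbers are assumptions, and a real protocol would also include the statistical tests described in the submission.

```python
from typing import Dict, List, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, recall and F1 for labels encoded as 0/1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}


def acceptance_failures(candidate: Dict[str, float], original: Dict[str, float],
                        last_modified: Dict[str, float],
                        floors: Dict[str, float]) -> List[str]:
    """Return every failed criterion; an empty list means the candidate passes."""
    failures = []
    for name, floor in floors.items():
        if candidate[name] < floor:
            failures.append(f"{name} below floor ({candidate[name]:.3f} < {floor:.3f})")
        for label, baseline in (("original", original), ("last_modified", last_modified)):
            if candidate[name] < baseline[name]:
                failures.append(
                    f"{name} below {label} ({candidate[name]:.3f} < {baseline[name]:.3f})")
    return failures


# Illustrative sequestered test labels and candidate-model predictions.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
candidate = binary_metrics(y_true, y_pred)

failures = acceptance_failures(
    candidate,
    original={"precision": 0.74, "recall": 0.72},
    last_modified={"precision": 0.73, "recall": 0.78},
    floors={"precision": 0.70, "recall": 0.70},
)
implement = not failures  # a failed evaluation is recorded and the change is not shipped
print(candidate, failures, implement)
```

In this example the candidate clears the floors and the original device, but its recall falls below the last modified version, so the failure is recorded and `implement` is `False`.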

Update procedures

The FDA requires that the Modification Protocol governs updates to device software:

The update procedures in a Modification Protocol should describe how manufacturers will update their devices to implement the modifications, provide appropriate transparency to users, and, if appropriate, updated user training about the modifications and perform real-world monitoring, including notification requirements if the device does not function as intended pursuant to the authorized PCCP. The PCCP should include a description of any labeling changes that will result from the implementation of the modifications. The available labeling must include adequate directions for use and reflect information about the current version(s) of the ML-DSF available to the user, including information regarding site-specific modifications. The labeling should not reflect information on modifications to the ML-DSF that have not been implemented in the available version, because it could cause confusion and would be misleading. If such information is included in the labeling, the ML-DSF could be deemed misbranded.

The submission itself must include the following details:

1) confirmation that the verification and validation plans for the modified version of the device are the same as those that have been performed for the version of the device prior to the implementation of the modifications, or identification of any differences between the two plans; 2) a description of how software updates will be implemented; 3) a description of how legacy users will be affected by the software update (if applicable); and 4) a description of how modifications will be communicated to the users, including transparency on any differences in performance, differences in performance testing methods, and/or known issues that were addressed in the update (e.g., whether there is an improvement in performance in a subpopulation of patients).

Once again, MLOps tools are well suited to enforce and inform this in practice:

- Ensure verification and validation plans remain stable and consistent through software automation.

- Track model performance across cohorts, for example legacy users vs. new users.

- Quantify performance changes across different subpopulations of the dataset.
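The last point can be sketched in a few lines of Python: compute accuracy per subpopulation before and after an update, then report the difference for each group. The group names, record layout, and data below are hypothetical, and accuracy is used only because it is the simplest metric to show; the same pattern applies to any metric chosen in the Modification Protocol.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# One evaluation record: (subpopulation label, true label, predicted label).
EvalRecord = Tuple[str, int, int]


def accuracy_by_group(records: Iterable[EvalRecord]) -> Dict[str, float]:
    """Accuracy computed separately for each subpopulation."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {group: correct[group] / total[group] for group in total}


def accuracy_change(before: Dict[str, float], after: Dict[str, float]) -> Dict[str, float]:
    """Per-group change in accuracy (after minus before) for groups present in both."""
    return {group: after[group] - before[group] for group in before if group in after}


# Illustrative results for the previous and the updated model on the same test set.
previous_model = [("adult", 1, 1), ("adult", 0, 1),
                  ("pediatric", 1, 0), ("pediatric", 0, 0)]
updated_model = [("adult", 1, 1), ("adult", 0, 0),
                 ("pediatric", 1, 0), ("pediatric", 0, 0)]

change = accuracy_change(accuracy_by_group(previous_model),
                         accuracy_by_group(updated_model))
print(change)  # {'adult': 0.5, 'pediatric': 0.0}
```

A per-group table like this is the kind of information that could feed the user communication described above, for example noting that an update improved performance for one subpopulation and left another unchanged.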

Conclusion

Datasets and algorithms must evolve over time to ensure they still reflect real-world conditions. Our view is that this draft of the PCCP’s Modification Protocol has the potential to enable this evolution while ensuring that a product continues to function as described. To do this, it relies on careful planning, tracking, and justification of potential changes.

MLOps tools are specifically designed to codify model development processes and track changes in performance in ways that facilitate adherence to the PCCP. They are a proven way of managing complexity and risk, and they reflect industry best practices. Without MLOps tools, device makers risk missing details, which can lead to PCCP violations, additional costs, or wasted development time.