Healthcare providers wear many hats in caring for patients: performing examinations, making diagnoses, developing treatment plans, and so on. But one thing these providers cannot do is read minds. When a patient cannot communicate verbally because of illness, a language barrier, or being placed on a ventilator, every one of these jobs becomes much harder, and patient outcomes can suffer. A study published last year looked at how nurses communicate with non-speaking patients in critical care settings and found that few established standards exist. The authors recommended adopting augmentative and alternative communication techniques to improve communication in these situations.

Since there are presently no widely accepted standards for this type of device, we have a blank page in front of us. For widespread adoption, though, such a device would need to be intuitive, inexpensive, and accurate. Engineer Manivannan Sivan threw his hat in the ring with an idea that uses Edge Impulse and a low-cost development board to translate simple finger gestures into requests that healthcare providers can see. For the prototype, Sivan trained the device to recognize five types of motion.

The Silicon Labs Thunderboard Sense 2 development board was chosen to power the device because it has more than enough processing power to run a highly optimized Edge Impulse machine learning model, and it comes standard with an accelerometer and a Bluetooth Low Energy radio. Everything that was needed was already onboard: the accelerometer captured raw motion measurements to feed into a neural network classifier, and the Bluetooth radio transmitted the gesture classifications to healthcare providers. In principle, any device could receive these classifications, but for this initial version, Sivan chose to test with the Light Blue smartphone app.
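The article does not describe the format in which the board sends each classification over Bluetooth. As a minimal sketch, assuming a hypothetical two-byte payload (a label index followed by a confidence percentage) and a hypothetical label order, the device-side encoding and the decoding a receiving app might perform could look like this:

```python
import struct

# Hypothetical label order; the project's actual ordering is not documented.
LABELS = ["help", "emergency", "water", "idle", "random"]


def encode_notification(label: str, confidence: float) -> bytes:
    """Pack a gesture label and its confidence into a hypothetical 2-byte payload.

    Byte 0: index of the label in LABELS.
    Byte 1: confidence as an integer percentage (0-100).
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return struct.pack("BB", LABELS.index(label), round(confidence * 100))


def decode_notification(payload: bytes) -> tuple[str, int]:
    """Reverse of encode_notification, as a receiving app might implement it."""
    index, percent = struct.unpack("BB", payload)
    return LABELS[index], percent
```

A compact binary payload keeps each notification small; sending the label as plain UTF-8 text instead would be easier to read in a generic Bluetooth explorer app, at the cost of a few extra bytes per message.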

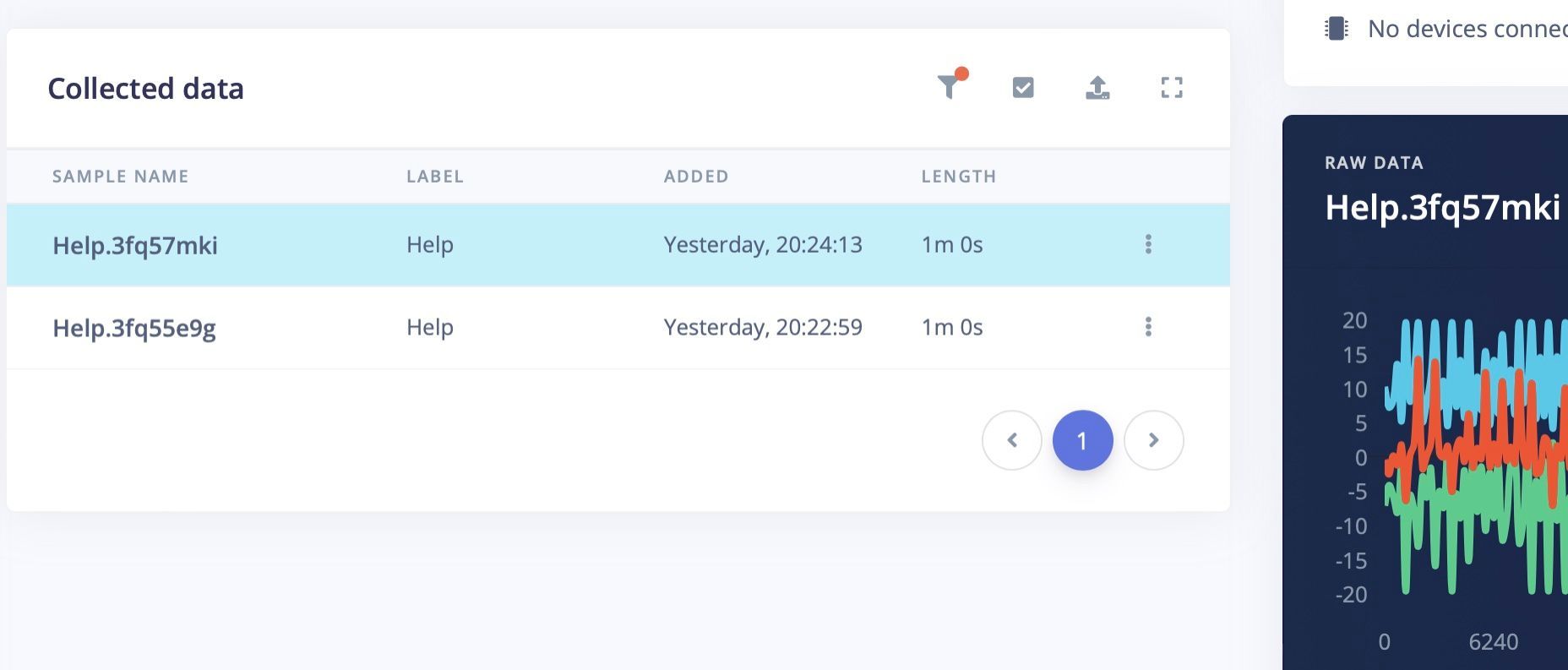

To collect the data needed to train the machine learning model, Sivan first linked the Thunderboard Sense 2 to his Edge Impulse project by flashing it with a custom firmware image downloaded from Edge Impulse. Once the board was linked, accelerometer data was uploaded to the project automatically, so Sivan could perform a gesture for two minutes to collect training data, and for an additional 20 seconds to collect data for the testing set. He repeated this process for each of the five classes: help, emergency, water, idle, and random movements. This was sufficient to prove the concept, but a much larger and more diverse dataset, covering more types of gestures, would need to be collected before the device could be used in real clinical settings.
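To see how a few minutes of recording become training examples, the arithmetic below counts the fixed-length windows a sliding window produces from each recording. The two-minute and 20-second durations come from the article; the 2,000 ms window size and 500 ms stride are hypothetical values chosen for illustration, and the sketch assumes each recording is kept as one continuous sample.

```python
def window_count(duration_ms: int, window_ms: int, stride_ms: int) -> int:
    """Number of full windows a sliding window yields over one recording."""
    if duration_ms < window_ms:
        return 0  # recording too short for even one window
    return (duration_ms - window_ms) // stride_ms + 1


TRAIN_MS = 120_000  # two minutes of training data per class (from the article)
TEST_MS = 20_000    # 20 seconds of testing data per class (from the article)
WINDOW_MS = 2_000   # hypothetical window size
STRIDE_MS = 500     # hypothetical stride between window starts

train_windows = window_count(TRAIN_MS, WINDOW_MS, STRIDE_MS)
test_windows = window_count(TEST_MS, WINDOW_MS, STRIDE_MS)
train_share = TRAIN_MS / (TRAIN_MS + TEST_MS)  # fraction of recorded time used for training

print(f"training windows per class: {train_windows}")
print(f"testing windows per class:  {test_windows}")
print(f"training share of recorded time: {train_share:.1%}")
```

With these assumed settings, each class yields 237 training windows and 37 testing windows, and about 85.7% of the recorded time goes to training. Overlapping windows are why a short recording can still produce hundreds of examples.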

With data available to train a model, it was time to create the impulse. The impulse begins with a step that splits the accelerometer measurements into windows, followed by a preprocessing block that performs a spectral analysis. Spectral analysis extracts the frequency and power characteristics of a signal, which makes it well suited to the repetitive patterns common in accelerometer data. Extracting these features up front means the downstream model needs fewer computational resources, which is important on resource-constrained platforms like microcontrollers. Finally, the features are passed to a neural network classifier that learns to map them to the gesture classes.
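The windowing and spectral analysis steps can be sketched in plain Python. This is a simplified stand-in rather than Edge Impulse's Spectral Analysis block, which uses an FFT and offers additional options such as filtering. Here a naive DFT computes the power spectrum of each axis, and the features are the per-axis RMS plus the total power in a hypothetical number of roughly equal-width frequency bands.

```python
import cmath
import math


def sliding_windows(samples, window_len, stride):
    """Yield successive fixed-length windows over a list of samples."""
    for start in range(0, len(samples) - window_len + 1, stride):
        yield samples[start:start + window_len]


def power_spectrum(signal):
    """Power of each frequency bin from 0 to n/2, via a naive O(n^2) DFT."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [x - mean for x in signal]  # remove the DC offset (e.g. gravity)
    spectrum = []
    for k in range(n // 2 + 1):
        acc = sum(centered[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        spectrum.append(abs(acc) ** 2 / n)
    return spectrum


def spectral_features(window, num_bands=4):
    """Per-axis RMS plus total power in `num_bands` roughly equal-width bands.

    `window` is a list of (x, y, z) accelerometer readings.
    """
    features = []
    for axis in zip(*window):
        values = list(axis)
        mean = sum(values) / len(values)
        rms = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
        spectrum = power_spectrum(values)[1:]  # drop the DC bin
        m = len(spectrum)
        bands = [sum(spectrum[i * m // num_bands:(i + 1) * m // num_bands])
                 for i in range(num_bands)]
        features.extend([rms] + bands)
    return features
```

Applied to each window produced by `sliding_windows`, `spectral_features` returns 15 numbers (an RMS value and four band powers per axis), a much smaller input for the classifier than the raw samples in a typical window.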

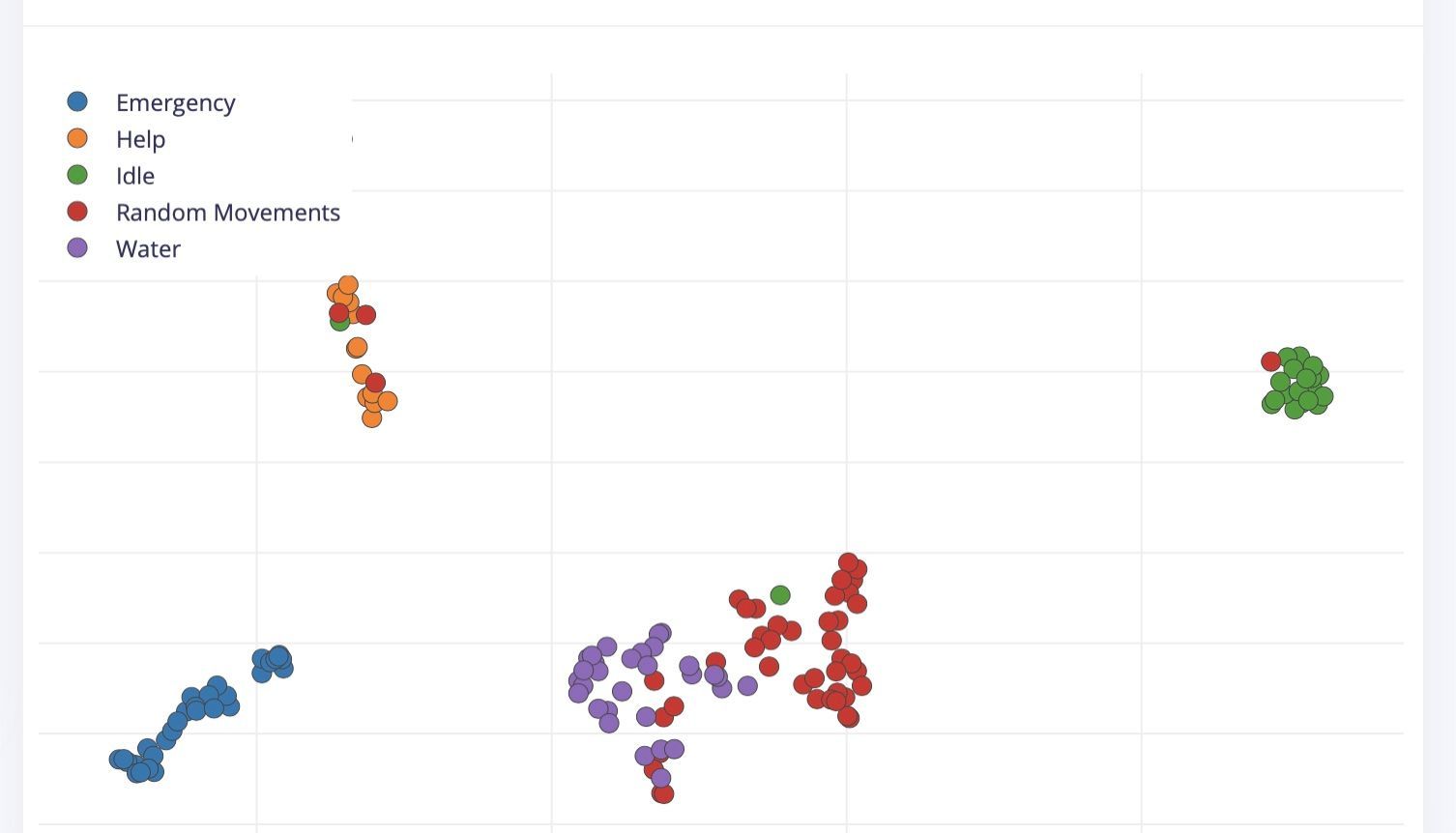

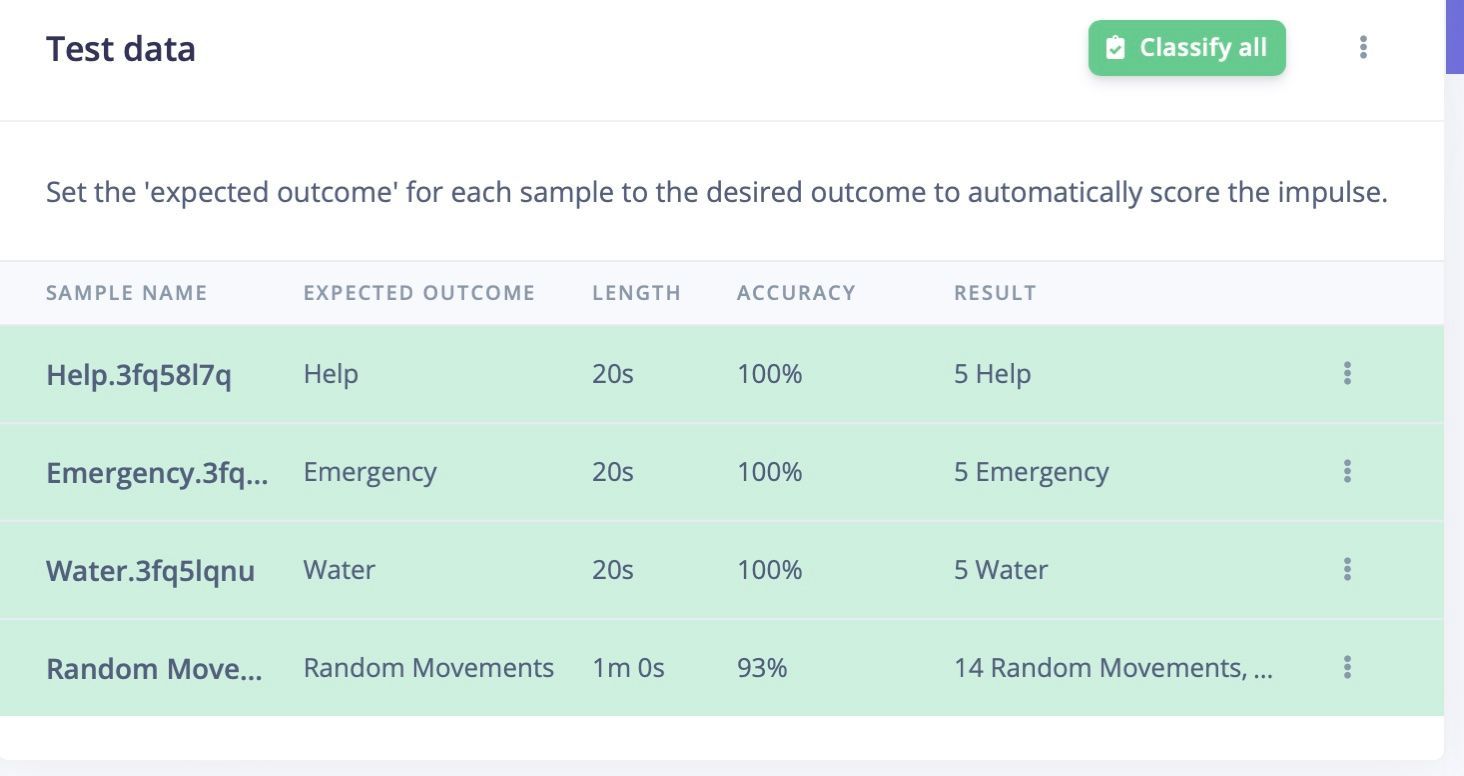

The Feature Explorer tool was used to check how the data points cluster, and since it showed a clear separation between classes, the training process was started. Training finished after a short time, and a confusion matrix was presented to show how well the model was classifying gestures. The reported average classification accuracy was 100%, which looks excellent but could also be a sign of overfitting. To further validate the model, a more stringent test was performed using a dataset that was not involved in the training process. On this held-out data, the model achieved an average classification accuracy of better than 96%.
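A confusion matrix counts, for each actual class (rows), how often the model predicted each class (columns). A minimal sketch of how such a matrix and its summary statistics can be computed follows, using hypothetical label names. The "average classification accuracy" Edge Impulse reports may be computed differently, so treat this as an illustration of the idea rather than its exact formula.

```python
# Hypothetical label names; the project's actual labels may differ.
LABELS = ["help", "emergency", "water", "idle", "random"]


def confusion_matrix(y_true, y_pred, labels):
    """Rows are actual classes, columns are predicted classes."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for actual, predicted in zip(y_true, y_pred):
        matrix[index[actual]][index[predicted]] += 1
    return matrix


def accuracy(matrix):
    """Fraction of all samples on the diagonal, i.e. correctly classified."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total if total else 0.0


def per_class_recall(matrix, labels):
    """Share of each actual class that was predicted correctly."""
    return {label: (row[i] / sum(row) if sum(row) else 0.0)
            for i, (label, row) in enumerate(zip(labels, matrix))}
```

Comparing accuracy on the training data with accuracy on the held-out test set is the overfitting check described above: a large gap suggests the model has memorized its training examples rather than learned the gestures.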

With little room left for improvement, Sivan was ready to deploy the machine learning classification pipeline to the physical hardware. Running the model directly on the device avoids the privacy issues that can arise from transmitting patient data to the cloud for processing, and it also minimizes latency. Deployment was handled by the deployment tool in Edge Impulse Studio. Since the Thunderboard Sense 2 is fully supported by Edge Impulse, it was as simple as downloading a custom firmware image and flashing it to the board.

With the prototype complete, Sivan tested it by attaching it to his finger and running through the gestures. He found that it consistently recognized the finger motions with a high degree of accuracy. Each gesture classification was transmitted over Bluetooth and was viewable in the Light Blue app.

Going from an idea to a working proof-of-concept device in an afternoon is an impressive feat, but it is a realistic goal with Edge Impulse and accessible hardware like the Thunderboard Sense 2. After a quick read through the project documentation, you will be well on your way to bringing your own machine learning-powered creations to life.
