We frequently highlight projects where Edge Impulse tools were used to simplify the entire process of creating a machine learning analysis pipeline. Whether it is via our primary Edge Impulse graphical interface or the Edge Impulse API, the powerful features that are available allow developers to focus on solving real-world problems rather than spending their time in the muck and mire of complex machine learning frameworks.

That is all well and good if you are building a new application from scratch, but what if you already have a trained model that does exactly what you need? When you have got a good thing going, why mess with it? After all, training a new model takes time, and in some cases the trained model is available while the data needed to reproduce it is nowhere to be found. If you wanted to optimize such a model and deploy it to an edge computing platform, the familiar Edge Impulse interfaces would certainly come in handy. If only it were possible to import existing models into Edge Impulse…

Well, now, it is possible! Earlier this year, the Edge Impulse team announced the release of our bring your own model (BYOM) capability. This feature allows developers to import common pre-trained model formats, like TensorFlow SavedModel and ONNX, into Edge Impulse for further processing and deployment.

Recently, community member Roni Bandini found himself wanting to import an existing model into Edge Impulse, so he decided to give BYOM a whirl and write about the experience. Bandini planned to build a computer vision-based game that helps teach American Sign Language. Since he already had a pre-trained hand gesture classification model, built with another tool, the first step was to import it into an Edge Impulse project. Doing so would make optimizing the model and deploying it to physical hardware a snap.

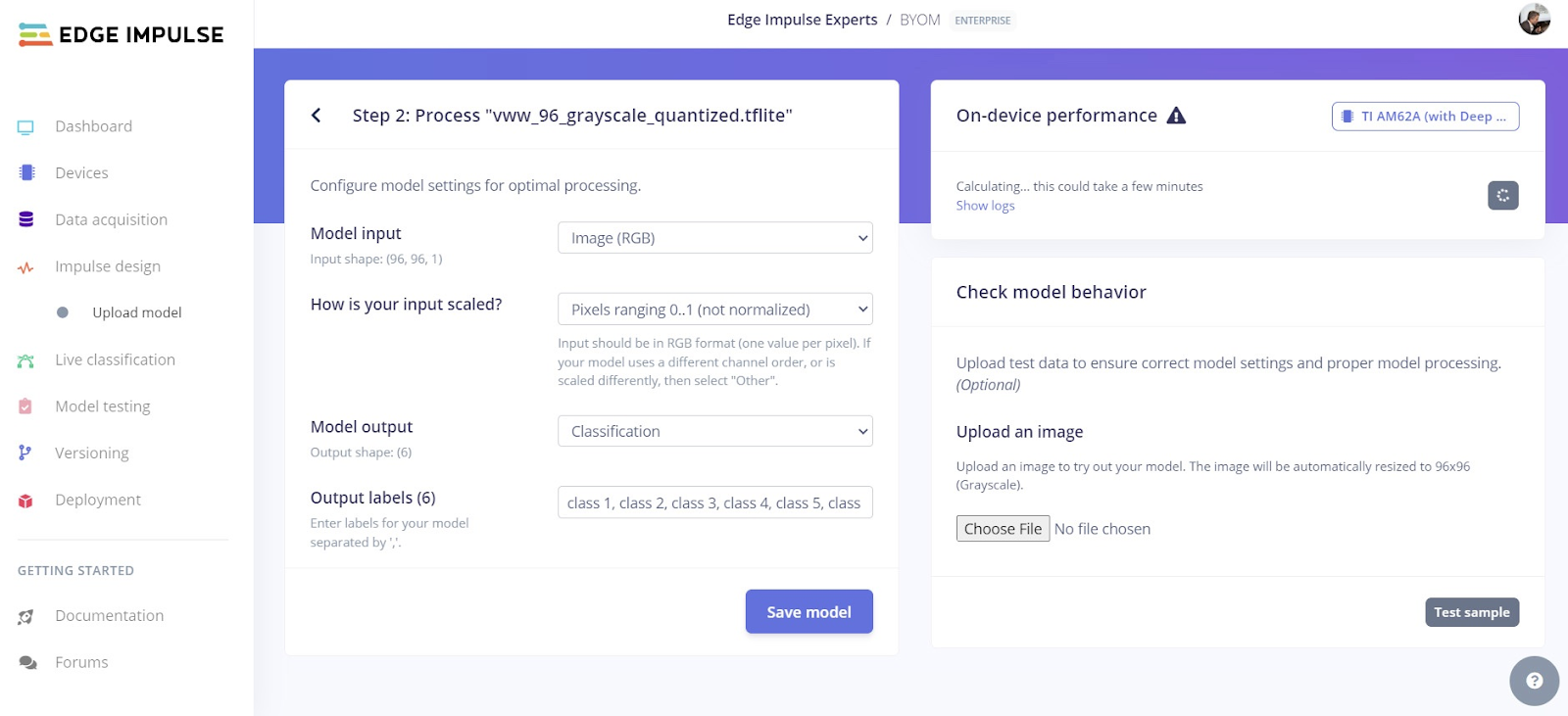

After creating a project, the import process begins by clicking the “Upload model” link. Once you answer a few questions about the model and the type of output it is expected to produce, the BYOM importer is ready for you to test the model. You can supply inputs (images, in this case) and immediately see inference results to verify that everything is working as expected. If the results look good, that is all there is to it: the model is ready to use just as if it had been trained with Edge Impulse tools.

Fresh off another project, Bandini had a powerful Texas Instruments AM62A development kit on hand. With an AI vision processor that can handle five-megapixel images at 60 frames per second, this platform is well suited to computer vision applications where low power consumption is a requirement. After installing the device’s operating system and setting up Edge Impulse for Linux, deploying the model was as easy as entering a few commands.

With the hand gesture detection pipeline running on the Texas Instruments AM62A board, Bandini wrote an application that randomly prompts the user with a letter and asks them to perform the corresponding sign. The camera then captures an image, the model classifies it, and the result is used to score the user’s performance. If the sign is incorrect, the system tells the user which sign they were actually performing, helping them learn from their mistakes.
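The prompt, classify, and score loop described above can be sketched in a few lines of Python. This is a minimal sketch, not Bandini's code: it assumes the classifier returns a dict mapping each letter label to a confidence score (roughly the shape of the per-label classification results Edge Impulse runners report), and the names `classify`, `capture`, `play_round`, `play_game`, the A to Z label set, and the 0.6 confidence threshold are all hypothetical.

```python
import random
import string
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical: a classifier maps a captured image to {label: confidence}.
Classifier = Callable[[object], Dict[str, float]]

# Assumed label set; the real model's labels may differ.
DEFAULT_LABELS: List[str] = list(string.ascii_uppercase)


def top_label(scores: Dict[str, float]) -> Tuple[str, float]:
    """Return the highest-scoring label and its score (scores must be non-empty)."""
    label = max(scores, key=scores.get)
    return label, scores[label]


def play_round(classify: Classifier, image: object, target: str,
               min_confidence: float = 0.6) -> Tuple[bool, Optional[str]]:
    """Score one attempt; returns (is_correct, detected_label or None)."""
    label, score = top_label(classify(image))
    if score < min_confidence:
        # No prediction confident enough to count as a sign.
        return False, None
    return label == target, label


def play_game(classify: Classifier, capture: Callable[[], object],
              rounds: int = 5, labels: Optional[List[str]] = None,
              rng: Optional[random.Random] = None) -> int:
    """Prompt random letters, classify each captured image, return the score."""
    labels = labels or DEFAULT_LABELS
    rng = rng or random.Random()
    points = 0
    for _ in range(rounds):
        target = rng.choice(labels)
        print(f"Show the sign for: {target}")
        correct, detected = play_round(classify, capture(), target)
        if correct:
            points += 1
            print("Correct!")
        elif detected is None:
            print("Sign not recognized, try again.")
        else:
            # Feedback: tell the user which sign they actually made.
            print(f"That looked like {detected}, not {target}.")
    return points
```

On the board, `capture` would grab a camera frame and `classify` would wrap the deployed model; passing both in as functions keeps the scoring logic testable with a fake classifier, and the confidence threshold avoids scoring a sign the model is unsure about.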

If BYOM is exactly what you have been looking for, be sure to read Bandini’s project write-up for all the details you need to get going. The publicly available Edge Impulse project is also worth a look if you need a bit more guidance along the way.