People with hearing impairments face many challenges in navigating the world due to their inability to hear important auditory cues. One of the most concerning issues is their difficulty in detecting sounds like approaching emergency vehicles, fire alarms, and oncoming traffic. These sounds are critical warnings that alert people to potentially life-threatening situations. For the hearing-impaired, the absence of such cues can result in dangerous situations, as they may not be able to react quickly to emergencies or hazards.

Not being able to hear emergency vehicles, such as ambulances and fire trucks, can be very dangerous. These vehicles use sirens to signal their urgency so that other drivers and pedestrians can yield. Unfortunately, hearing-impaired individuals may not be aware of these approaching vehicles, putting themselves and others at risk. Similarly, fire alarms, which warn of smoke or fire, may go unnoticed by those with hearing impairments, delaying evacuation of the building.

Another challenge often encountered is the unexpected approach of other people. Hearing-impaired individuals might not hear approaching footsteps or voices, leaving them prone to being startled or caught off guard. This lack of awareness could lead to discomfort, misunderstanding, or even safety concerns in various social situations.

Most of us may not think about these issues on a daily basis, but they represent a big, largely unseen problem. According to the World Health Organization, around 466 million people worldwide have a disabling hearing loss, which accounts for approximately 5% of the global population. In the United States alone, the Centers for Disease Control and Prevention reported that about 15% of adults aged 18 and over experience some degree of hearing loss.

In an attempt to address this problem, I have created what I call SonicSight AR. This device uses a machine learning algorithm built with Edge Impulse Studio to recognize sounds, like emergency vehicles and various types of alarms. When these sounds are detected, the user of SonicSight AR is alerted to that fact via a message that floats within their field of vision through the use of a low-cost augmented reality display. SonicSight AR also detects footsteps and talking to prevent the wearer from being startled when someone approaches them unexpectedly.

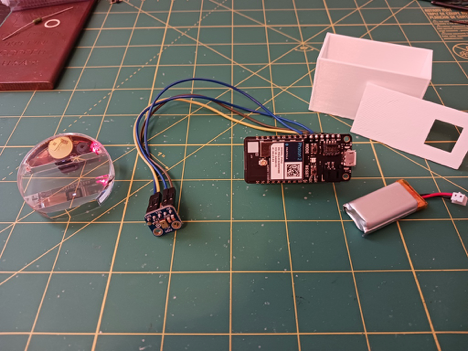

For sensing and processing, I chose to use a Particle Photon 2 development board in conjunction with the Edge ML Kit for Photon 2. The Photon 2 is ideal where the energy budget is of concern, as it generally is for a wearable like SonicSight AR. Yet with an Arm Cortex-M33 CPU running at 200 MHz, 3 MB of RAM, and 2 MB of flash memory, the board also has plenty of power to run advanced machine learning algorithms. Since I was able to pick up the Photon 2 on sale for $9, it was the obvious choice.

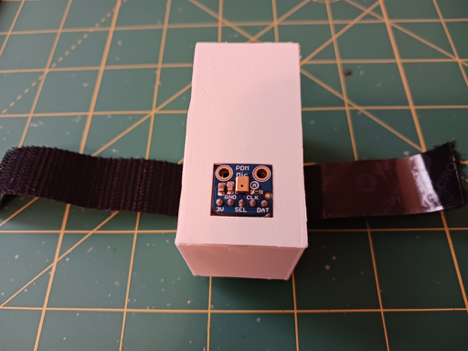

A PDM MEMS microphone from the Edge ML Kit was wired to the Photon 2, and this hardware, along with a 400 mAh LiPo battery, was placed inside a custom 3D-printed case with an opening for the microphone. With a Velcro strap, this case can be worn unobtrusively on a belt. Using a Bluetooth Low Energy connection, this device can communicate with a Brilliant Labs Monocle to convey visual information to the wearer. I attached the Monocle to a pair of glasses using its built-in clip.

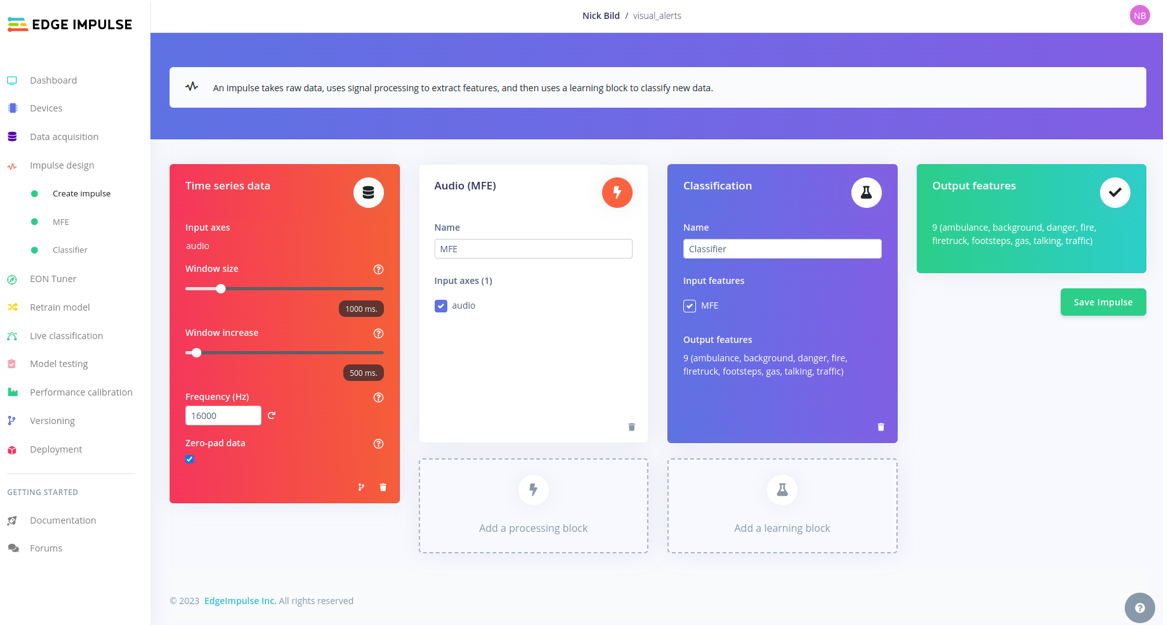

My intent was to build an audio classification neural network to distinguish between eight types of sounds (ambulance, danger alarm, fire alarm, firetruck, footsteps, gas alarm, talking, traffic sounds), as well as normal background noises. So, I sought out some publicly available datasets and extracted 50 audio clips to represent each class. The files were then uploaded to Edge Impulse Studio via the Data Acquisition tool. Labels were applied during the upload, and an 80%/20% train/test dataset split was automatically applied.
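
To make the numbers concrete, here is a minimal Python sketch of what an 80%/20% split of this dataset looks like. Edge Impulse Studio performs the split automatically, and this is not its actual implementation; the per-class shuffling, the label spellings, and the file names below are assumptions made purely for illustration.

```python
import random

# The eight sound classes plus background noise (label spellings are assumed).
CLASSES = [
    "ambulance", "danger_alarm", "fire_alarm", "firetruck", "footsteps",
    "gas_alarm", "talking", "traffic", "background",
]
CLIPS_PER_CLASS = 50
TEST_FRACTION = 0.2


def split_dataset(clips_by_label, test_fraction=TEST_FRACTION, seed=42):
    """Return (train, test) lists of (filename, label), split class by class."""
    rng = random.Random(seed)
    train, test = [], []
    for label, clips in clips_by_label.items():
        shuffled = list(clips)
        rng.shuffle(shuffled)
        n_test = round(len(shuffled) * test_fraction)
        test += [(clip, label) for clip in shuffled[:n_test]]
        train += [(clip, label) for clip in shuffled[n_test:]]
    return train, test


# Hypothetical file names standing in for the real audio clips.
clips_by_label = {
    label: [f"{label}.{i:02d}.wav" for i in range(CLIPS_PER_CLASS)]
    for label in CLASSES
}
train, test = split_dataset(clips_by_label)
print(len(train), len(test))  # 360 90
```

With 50 clips in each of the nine classes, that leaves 40 clips per class for training and 10 per class for testing.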

To analyze the data and make classifications, an impulse was designed in Edge Impulse Studio. It consists of preprocessing steps that split the incoming audio data into one-second segments, then extract the most meaningful features. The features are then forwarded into a convolutional neural network that determines which one of the classes the sample most likely belongs to.

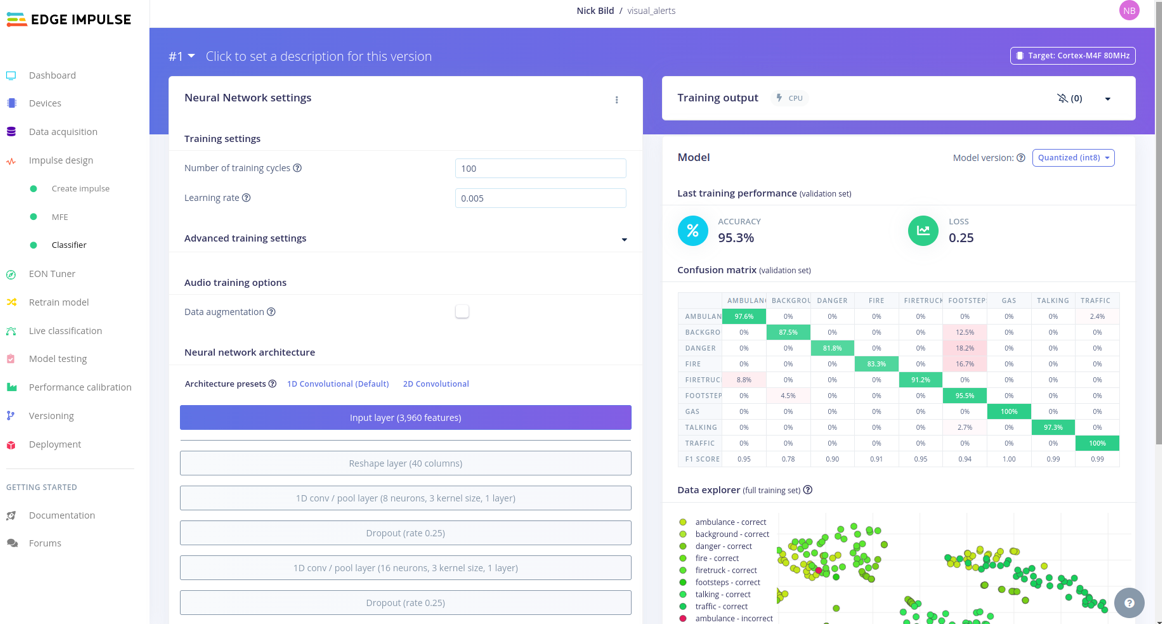
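
The windowing step of the impulse can be sketched in a few lines of Python. This is only an illustration of the idea, not the code Edge Impulse generates: the 16 kHz sample rate and the 500 ms stride between windows are assumptions, and the feature extraction that follows windowing is left out.

```python
SAMPLE_RATE_HZ = 16_000  # assumed sample rate
WINDOW_MS = 1_000        # one-second segments, as described above
STRIDE_MS = 500          # assumed overlap between consecutive windows


def split_into_windows(samples, sample_rate=SAMPLE_RATE_HZ,
                       window_ms=WINDOW_MS, stride_ms=STRIDE_MS):
    """Slice a sequence of audio samples into fixed-length windows."""
    window = sample_rate * window_ms // 1000
    stride = sample_rate * stride_ms // 1000
    # Only full-length windows are kept; a trailing partial window is dropped.
    return [
        samples[start:start + window]
        for start in range(0, len(samples) - window + 1, stride)
    ]


# A three-second clip of silence stands in for real audio.
clip = [0] * (SAMPLE_RATE_HZ * 3)
windows = split_into_windows(clip)
print(len(windows))  # 5
```

Overlapping windows mean that a short sound falling on a segment boundary still appears whole in at least one window, at the cost of running the classifier more often.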

The training accuracy was quite good considering I supplied such a small training dataset, coming in at 95.3%. Model testing showed that this result was not simply overfitting of the model to the training data, with a reported classification accuracy of better than 88%. This is better than what is needed to prove the concept, but by supplying more data, and more diverse data, I believe that the accuracy could be improved significantly.

I certainly did not want users of the system to have to rely on a wireless connection to the cloud for it to operate, not to mention the privacy concerns and latency that would come with that approach. As such, I deployed the model directly to the Photon 2 as a Particle library using the Deployment tool. This was easily imported into Particle Workbench, where I could further customize the code to fit my needs.

In this case, that meant modifying the code to connect to the Monocle via Bluetooth Low Energy and, whenever a sound is recognized, send the Monocle a message to display in front of the user. Using Particle Workbench, the program was compiled and flashed to the Photon 2 in a single step.
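
The decision of when to send a message can be sketched as follows. The real firmware runs on the Photon 2 and talks to the Monocle over Bluetooth Low Energy; this Python sketch covers only the decision logic, not the BLE transport, and the confidence threshold, repeat interval, message text, and the `AlertFilter` name are all hypothetical choices rather than values from the project.

```python
# Hypothetical display text for a few of the classes.
ALERT_MESSAGES = {
    "ambulance": "AMBULANCE",
    "fire_alarm": "FIRE ALARM",
    "footsteps": "SOMEONE APPROACHING",
}
CONFIDENCE_THRESHOLD = 0.8  # assumed minimum score before alerting
REPEAT_AFTER_S = 5.0        # assumed delay before repeating the same alert


class AlertFilter:
    """Decide whether a classification result should be shown to the wearer."""

    def __init__(self, threshold=CONFIDENCE_THRESHOLD,
                 repeat_after_s=REPEAT_AFTER_S):
        self.threshold = threshold
        self.repeat_after_s = repeat_after_s
        self._last_label = None
        self._last_time = None

    def update(self, scores, now_s):
        """Return the text to display, or None if nothing should be sent."""
        label = max(scores, key=scores.get)
        if label == "background" or scores[label] < self.threshold:
            return None
        recently_shown = (
            label == self._last_label
            and now_s - self._last_time < self.repeat_after_s
        )
        if recently_shown:
            return None
        self._last_label = label
        self._last_time = now_s
        # Fall back to the raw label for classes without custom text.
        return ALERT_MESSAGES.get(label, label.upper())


alerts = AlertFilter()
fire = {"fire_alarm": 0.93, "background": 0.07}
first = alerts.update(fire, now_s=0.0)   # "FIRE ALARM"
repeat = alerts.update(fire, now_s=1.0)  # None, shown too recently
later = alerts.update(fire, now_s=6.0)   # "FIRE ALARM" again
```

Suppressing repeats keeps the display from being flooded when the same sound is classified in many consecutive windows.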

For a proof of concept, I found SonicSight AR to work surprisingly well. And given that it is battery powered, completely wireless, self-contained, and unobtrusive to use, I could see a device like this being used in real-world scenarios with just a bit of refinement. Not bad for a few hundred dollars in parts and a couple days of work.

If you would like to reproduce this project, or get a head start in building something like it, be sure to check out the project write-up. I have also made my Edge Impulse Studio project public, and released the source code on GitHub.
