One of the core goals of edge-based ML/AI is to provide high-performance hardware that consumes as little power as possible. Achieving both high performance and low power consumption offers significant benefits to solution developers, as it enables use in more power-constrained applications.

BrainChip recently announced its range of neuromorphic processor IP, Akida. If you are not familiar with neuromorphic computing, it is an approach to computing that mimics the human brain by building circuits that operate similarly to neurons and synapses. Drawing inspiration from the brain, the Akida IP implements an event-based AI neural processor, comprising SRAM and configurable Neural Processing Engines (NPEs) that process neural networks in an energy- and memory-efficient manner.

Within Akida, eight NPEs are grouped to create a neural processor (NP), and four NPs are combined to create a node. Up to 256 nodes can interconnect with each other over an intelligent mesh network, although most edge configurations will likely use one to eight nodes, which provide plenty of horsepower for edge AI workloads. Because the NPs are configurable, each one can implement either a fully connected or a convolutional layer. Because Akida is event-based, computation is triggered by activations rather than by logic constantly running at a high clock frequency to implement the AI pipeline, which gives developers a lower-power solution.

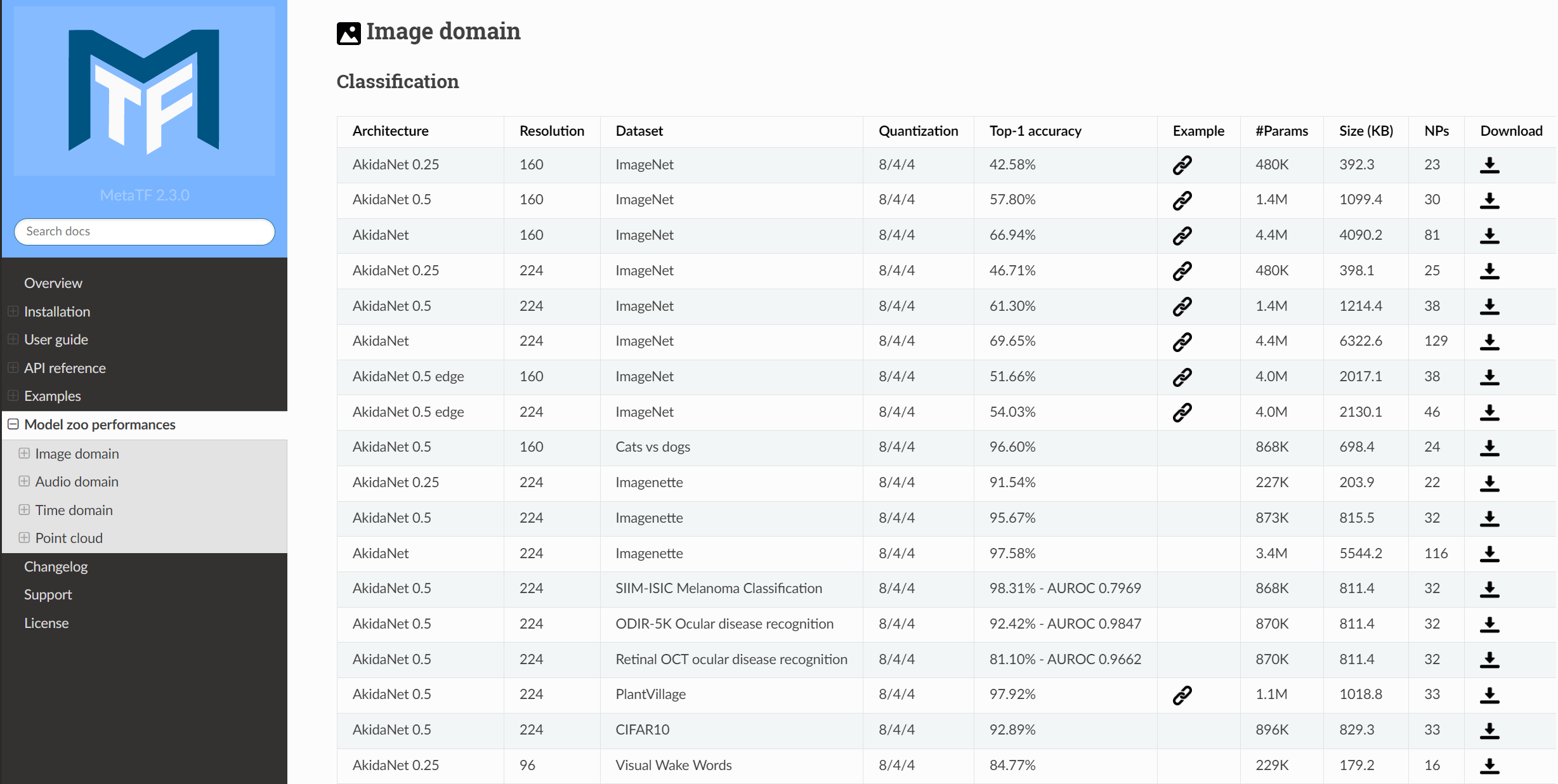
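To make the event-based idea more concrete, here is a conceptual Python sketch, not BrainChip's implementation: a fully connected layer that either performs every multiply-accumulate (MAC) or, in event-based mode, skips inputs whose activation is zero. The function name `fc_layer` and the MAC counter are illustrative assumptions; Akida's hardware works on quantized activations with its own scheduling, which this does not model.

```python
from typing import List, Sequence, Tuple


def fc_layer(
    inputs: Sequence[float],
    weights: Sequence[Sequence[float]],
    event_based: bool,
) -> Tuple[List[float], int]:
    """Fully connected layer returning (outputs, number of MACs performed).

    weights[i][j] connects input i to output j. In event-based mode, an input
    with zero activation generates no event and therefore no computation.
    """
    n_out = len(weights[0])
    outputs = [0.0] * n_out
    macs = 0
    for i, x in enumerate(inputs):
        if event_based and x == 0:
            continue  # no event: skip all of this input's connections
        for j in range(n_out):
            outputs[j] += x * weights[i][j]
            macs += 1
    return outputs, macs


# Sparse activations: only 3 of the 8 inputs are non-zero.
activations = [0, 3, 0, 0, 1, 0, 0, 2]
w = [[i + j for j in range(4)] for i in range(8)]

dense_out, dense_macs = fc_layer(activations, w, event_based=False)
event_out, event_macs = fc_layer(activations, w, event_based=True)
print(dense_macs, event_macs)  # 32 12
```

Both modes produce identical outputs, but the event-based one performs 12 MACs instead of 32. The saving grows as activations become sparser, which is the intuition behind the lower power consumption.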

BrainChip's published results for Akida look stunning: accuracy is high and power consumption is very low, enabling engineers to build complex AI solutions for very constrained environments. To let developers try out the Akida processor, BrainChip offers an Akida SoC on a PCIe card, which can be installed in your development machine or used within a standalone Raspberry Pi development kit.

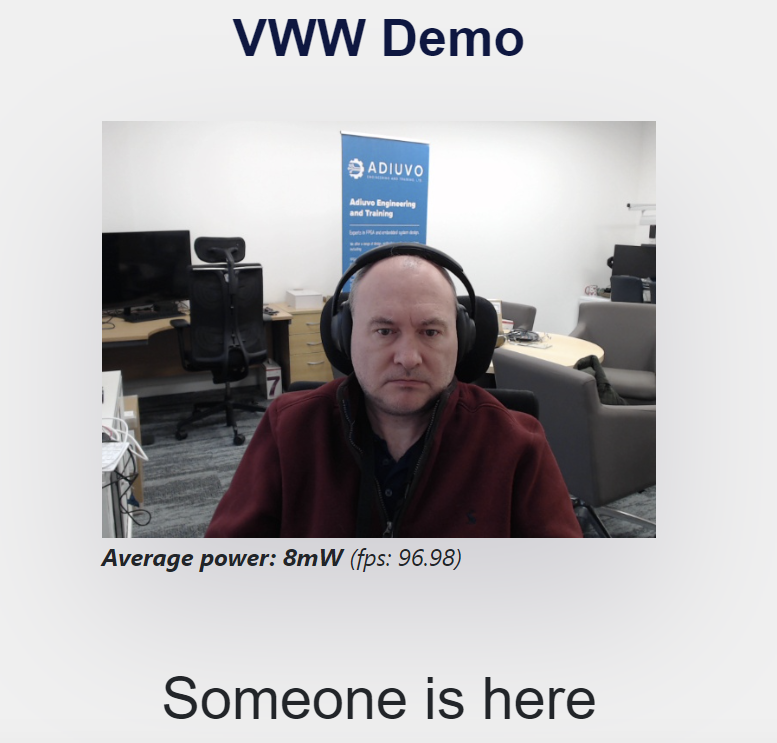

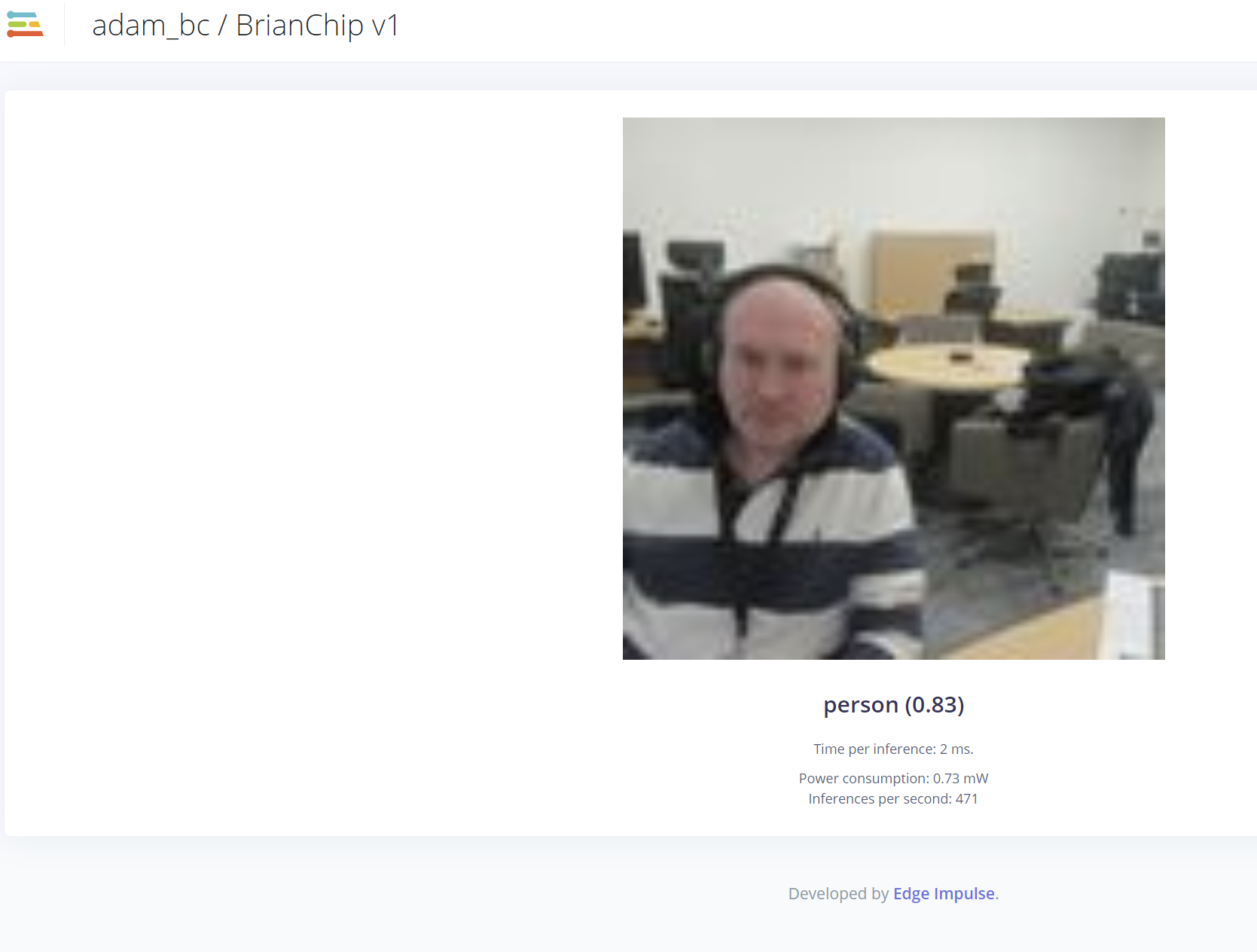

Running the out-of-the-box demos on my Akida Raspberry Pi development kit was very impressive: according to the reported statistics, they achieved approximately 100 FPS while dissipating about 9 mW.
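These two figures can be combined into energy per inference, a common way to compare edge accelerators: 9 mW divided by 100 frames per second gives roughly 90 µJ per frame. The minimal sketch below does that arithmetic. The inputs are the approximate demo statistics, and the 9 mW figure covers only what the demo measures, not necessarily the whole Raspberry Pi board.

```python
def energy_per_inference_uj(power_mw: float, fps: float) -> float:
    """Average energy per processed frame, in microjoules.

    Energy per frame (J) = power (W) / throughput (frames per second).
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    power_w = power_mw / 1000.0
    return power_w / fps * 1e6


# Approximate figures from the demo statistics quoted above.
print(f"{energy_per_inference_uj(9.0, 100.0):.1f} uJ per frame")  # 90.0 uJ per frame
```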

I therefore wanted to try to recreate this performance for visual wake word detection, using Edge Impulse to train the model and the Akida Raspberry Pi development kit to run it.

The first thing to do was to create a new Edge Impulse project and upload the COCO dataset that BrainChip used to train its network. The BrainChip site is very useful, as it provides information on the model used, the resulting accuracy, and a link to the dataset. For the visual wake word example, BrainChip used the AkidaNet0.25 network at 96x96-pixel resolution and achieved 84.77% accuracy.

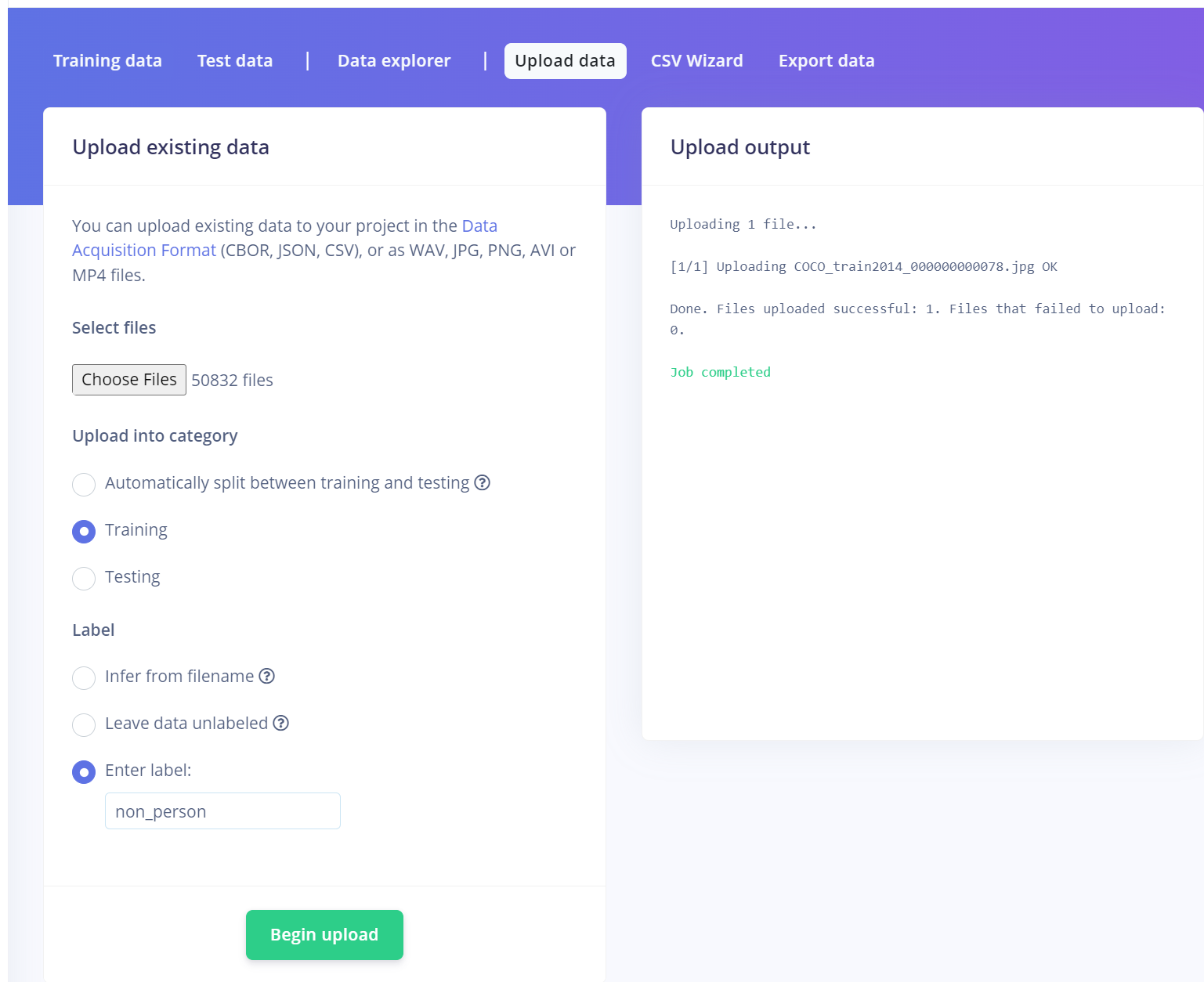

To get started with our Edge Impulse project we first need to download the dataset from the dataset mirror. This provides thousands of training and testing images which are labeled as either person or non-person.

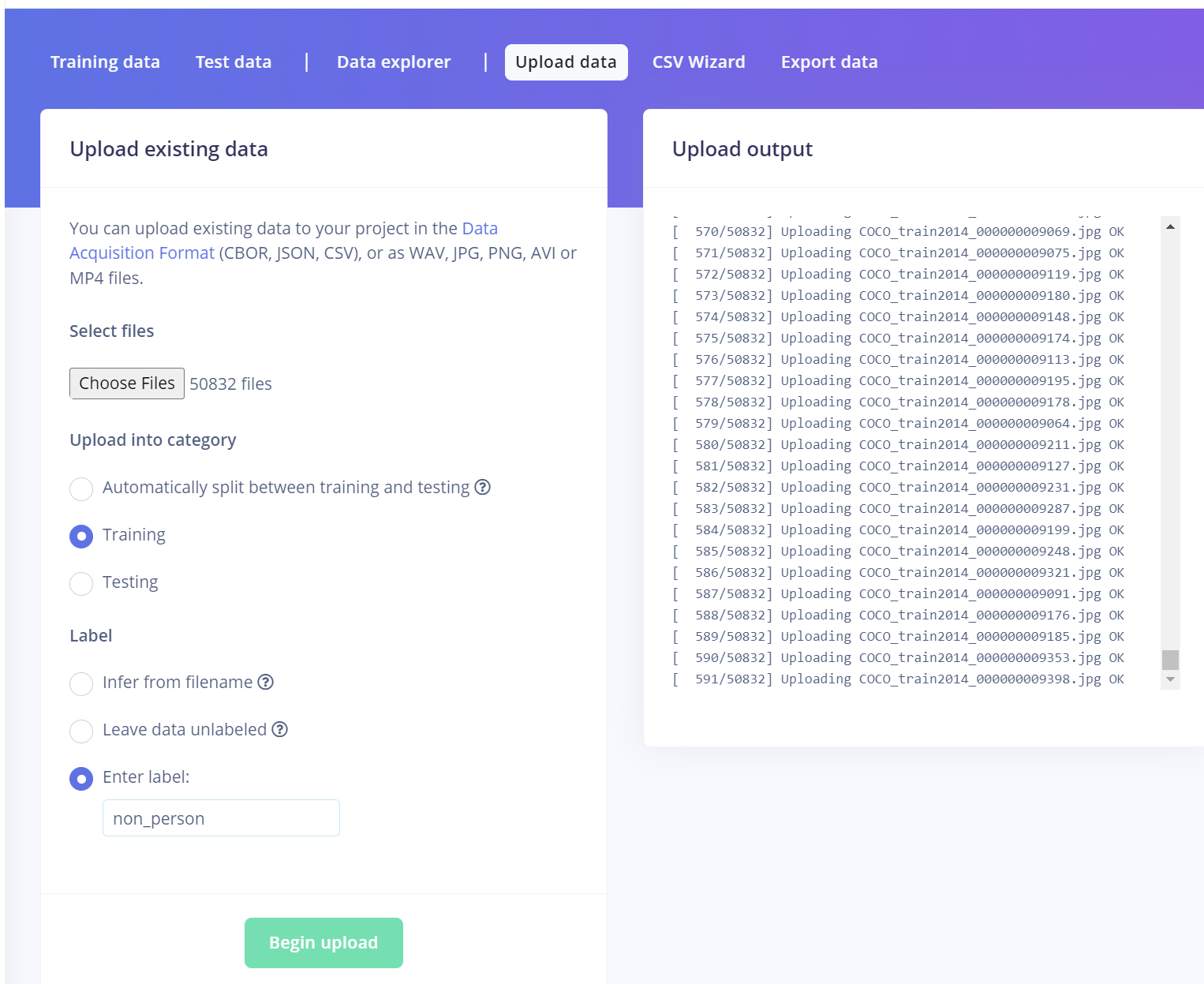

With the dataset downloaded, we then need to upload it into our Edge Impulse project. To do this, I used the Data acquisition tab to upload all the training and testing data. Because there are over 100,000 images, this may take some time.

Be sure to load the training and testing images into the correct category (training or testing).

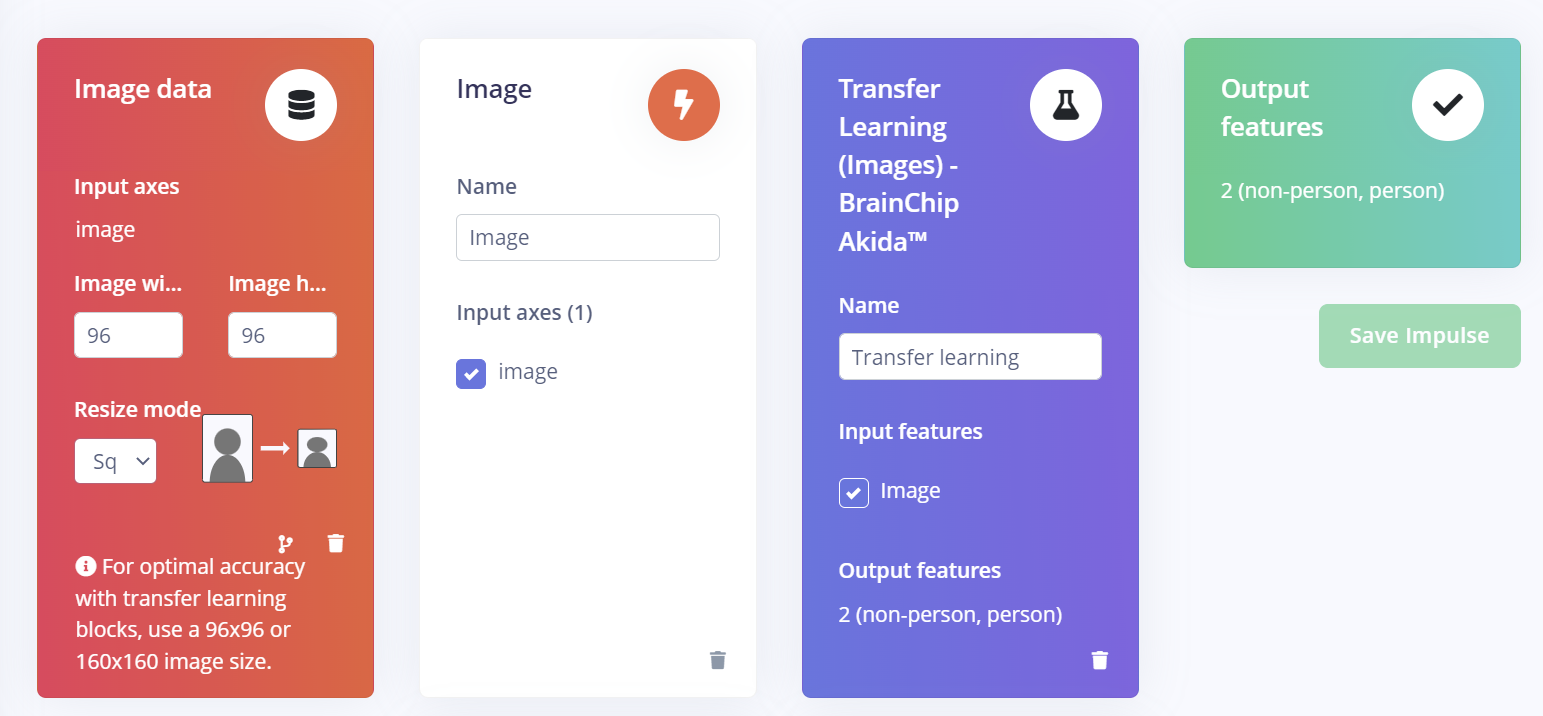
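With over 100,000 images, the Edge Impulse CLI uploader can be a useful alternative to the Studio upload dialog I used. The sketch below assumes a hypothetical local folder layout (adjust it to however your download extracts) and builds `edge-impulse-uploader` commands with `--category` and `--label`, so that each split lands in the correct category. Files are batched so each command line stays within OS argument-length limits; the batch size of 500 is an arbitrary choice.

```python
from pathlib import Path
from typing import Iterator, List

# Assumed (hypothetical) local layout after extracting the dataset:
#   <root>/train/person/*.jpg   <root>/train/non-person/*.jpg
#   <root>/test/person/*.jpg    <root>/test/non-person/*.jpg
SPLIT_TO_CATEGORY = {"train": "training", "test": "testing"}
LABELS = ("person", "non-person")


def upload_commands(
    files: List[str], category: str, label: str, batch_size: int = 500
) -> Iterator[List[str]]:
    """Yield edge-impulse-uploader commands, batching files to keep argv short."""
    for start in range(0, len(files), batch_size):
        yield [
            "edge-impulse-uploader",
            "--category", category,
            "--label", label,
            *files[start:start + batch_size],
        ]


def dataset_commands(root: str, batch_size: int = 500) -> List[List[str]]:
    """Build upload commands for every split/label folder found under root."""
    commands: List[List[str]] = []
    for split, category in SPLIT_TO_CATEGORY.items():
        for label in LABELS:
            folder = Path(root) / split / label
            if not folder.is_dir():
                continue  # skip splits/labels that are not present locally
            files = sorted(str(p) for p in folder.glob("*.jpg"))
            commands.extend(upload_commands(files, category, label, batch_size))
    return commands
```

Each command can then be run with `subprocess.run(cmd, check=True)` after logging in to the uploader (or passing `--api-key`).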

After the data has been uploaded, we can then start developing the impulse. We use the AkidaNet VWW Transfer Learning block for this.

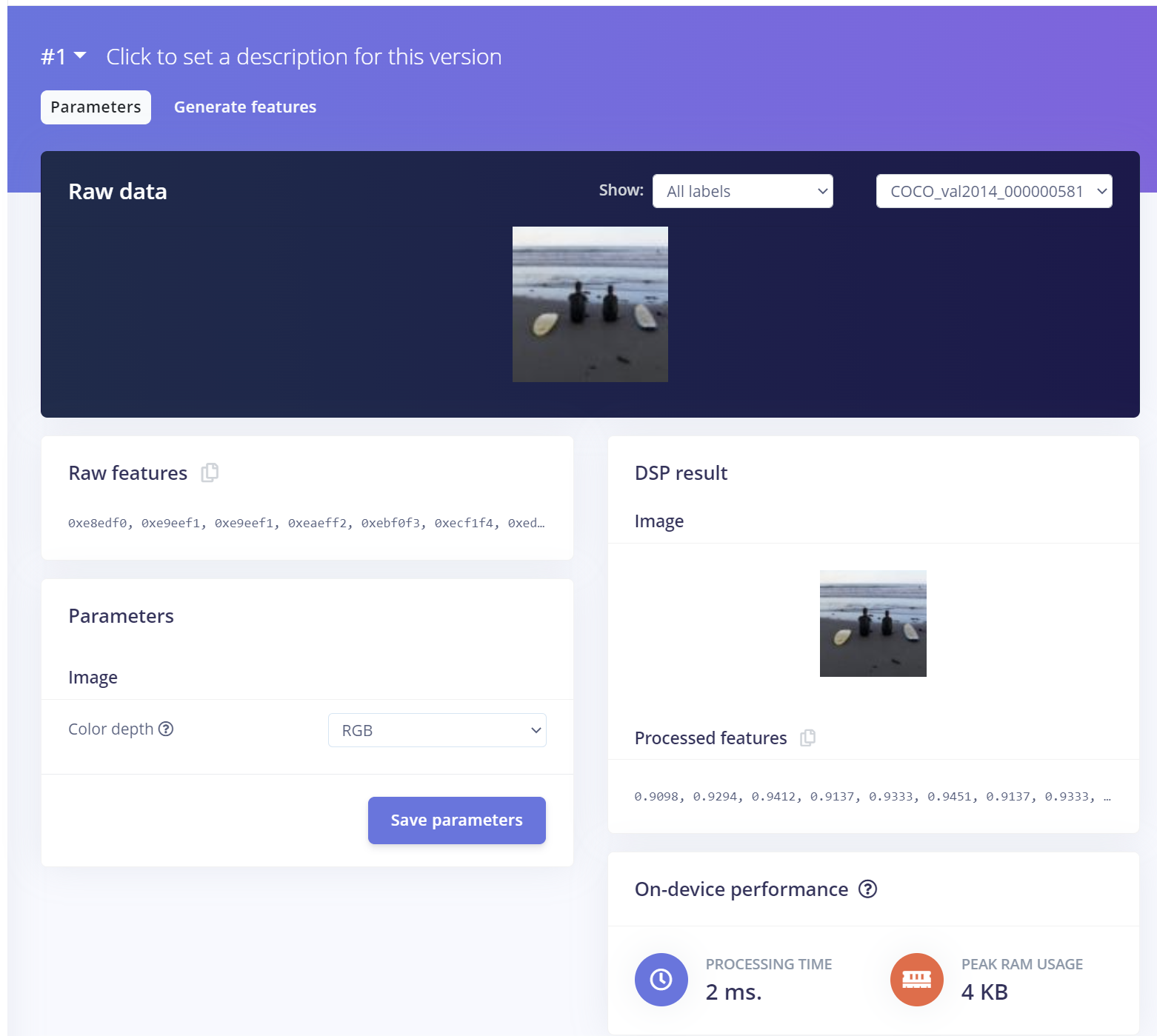

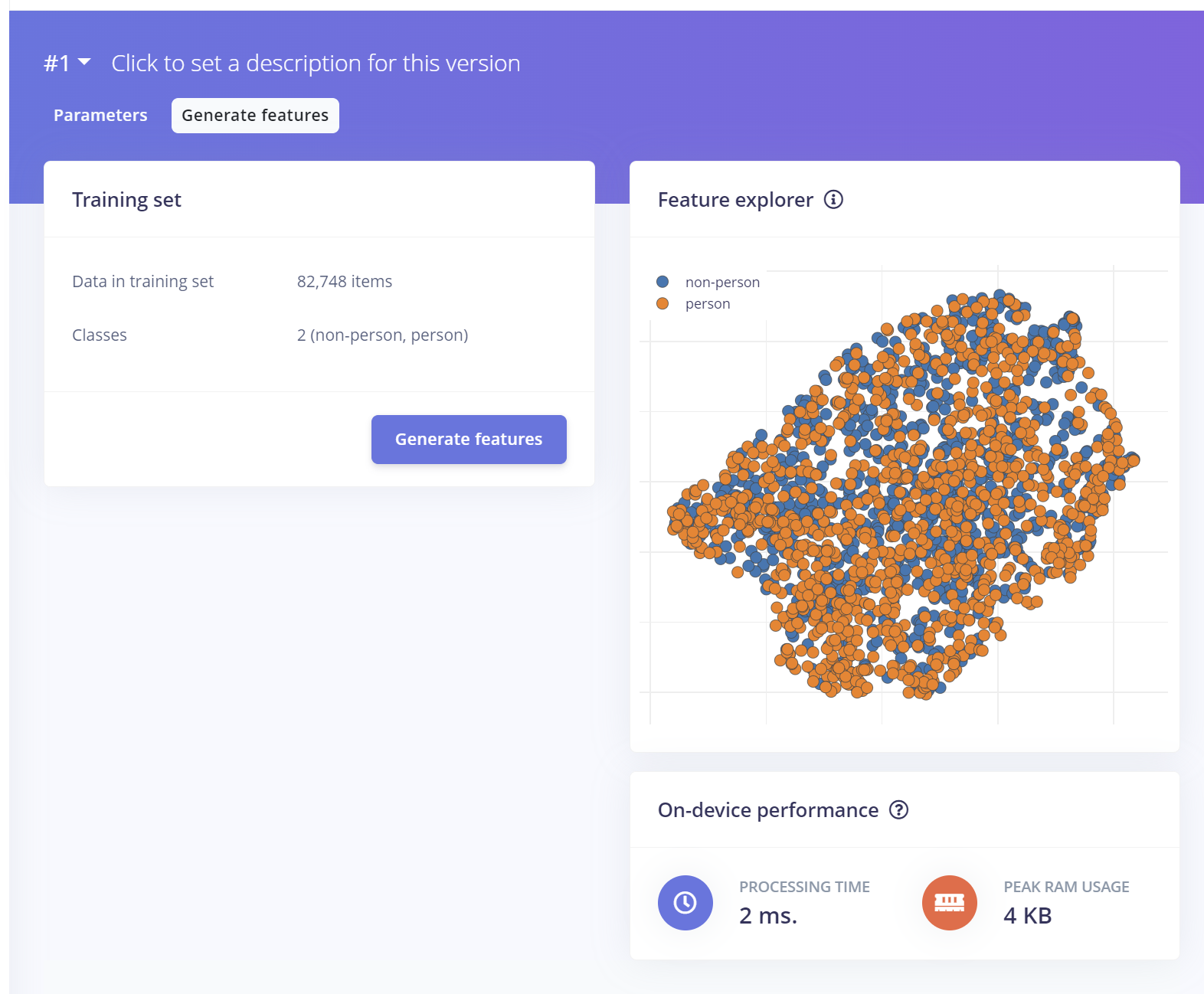

We process the images at 96x96 pixels using the squash resize mode. In the image parameters, we leave the color depth as RGB and then generate the features.

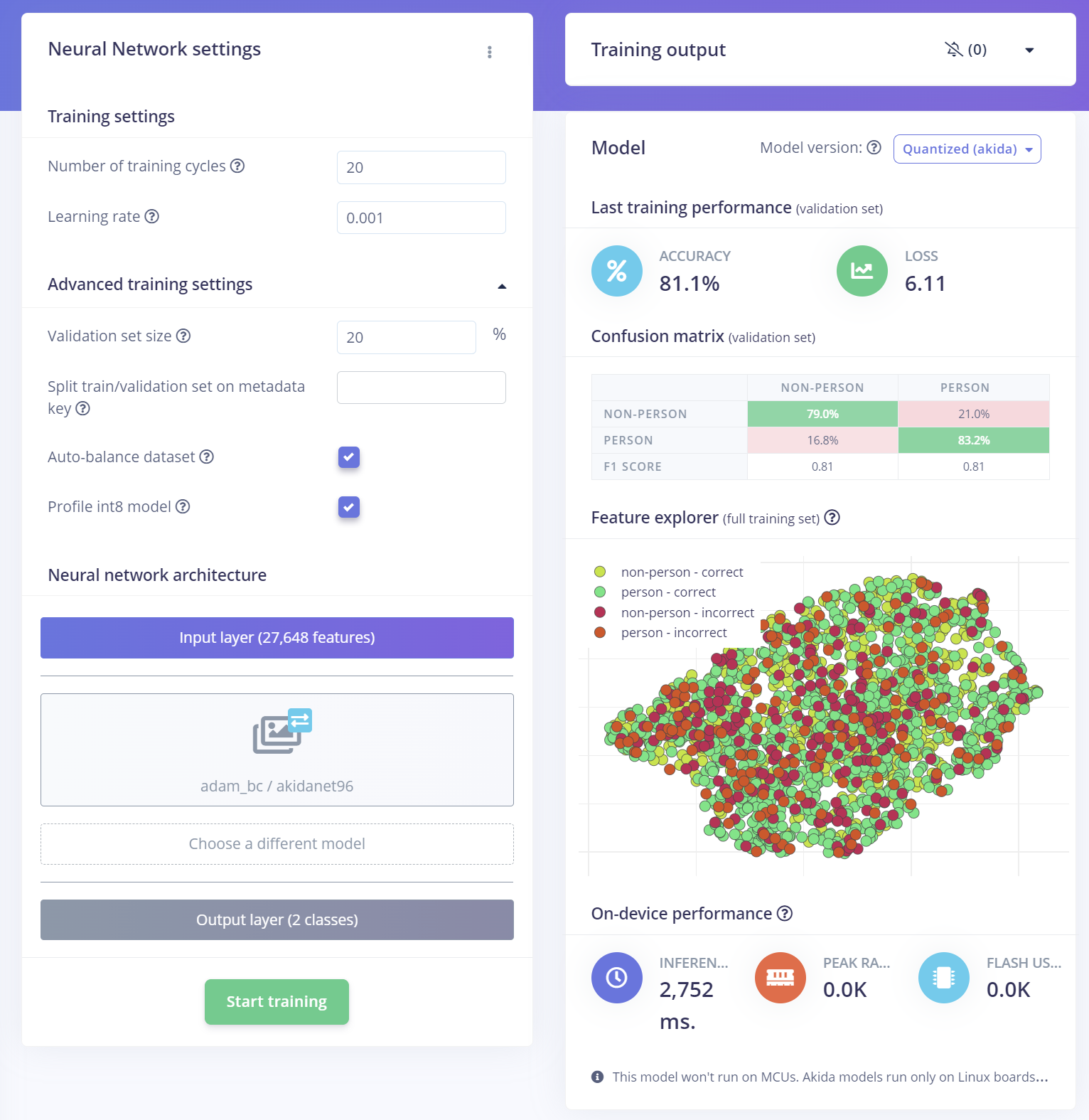
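Squash means the whole image is resized to 96x96 without preserving its aspect ratio, so nothing is cropped but non-square images are stretched or compressed. The pure-Python sketch below illustrates the idea using nearest-neighbour sampling; that choice is an assumption made to keep the example dependency-free, and Edge Impulse's own resize may use a different interpolation.

```python
from typing import List, Sequence, TypeVar

Pixel = TypeVar("Pixel")


def squash_resize(
    image: Sequence[Sequence[Pixel]], out_w: int = 96, out_h: int = 96
) -> List[List[Pixel]]:
    """Resize to out_w x out_h ignoring aspect ratio ("squash").

    Nearest-neighbour sampling: every output pixel copies the input pixel
    at the proportionally scaled row and column. The whole frame is kept.
    """
    in_h, in_w = len(image), len(image[0])
    return [
        [image[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


# A 4:3 "camera frame" of (R, G, B) tuples becomes a square 96x96 input.
frame = [[(x % 256, y % 256, 0) for x in range(640)] for y in range(480)]
small = squash_resize(frame)
print(len(small), len(small[0]))  # 96 96
```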

We can then train the impulse, which will take some time. Once training is complete, the model reaches 83.2% accuracy, which is close to the 84.77% BrainChip reports in its benchmarking.

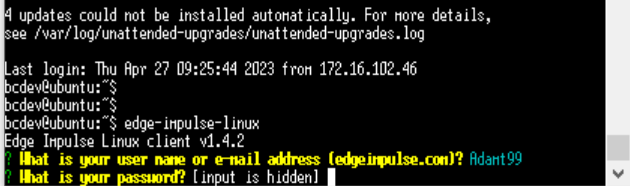

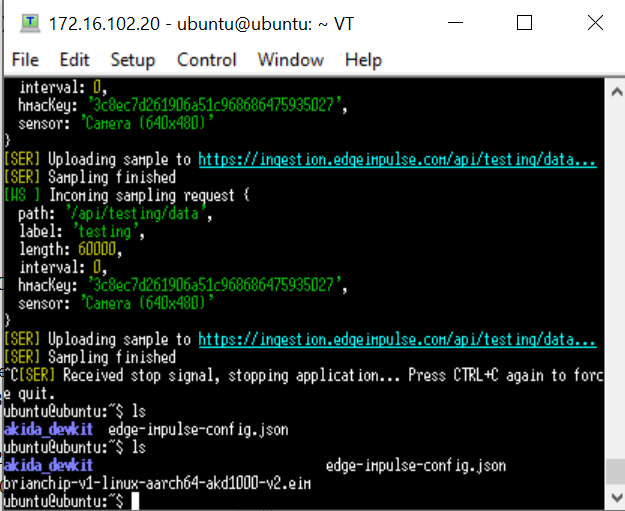

The final stage of the benchmarking is to run the model on the BrainChip Raspberry Pi development kit. To do this we first need to install the Edge Impulse client on the board, using these steps.

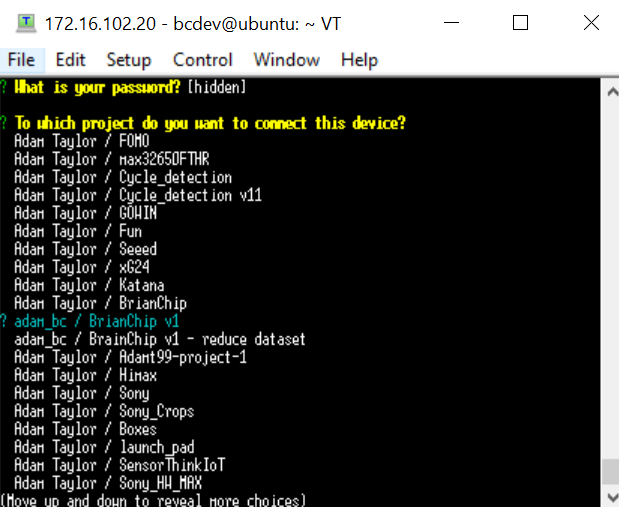

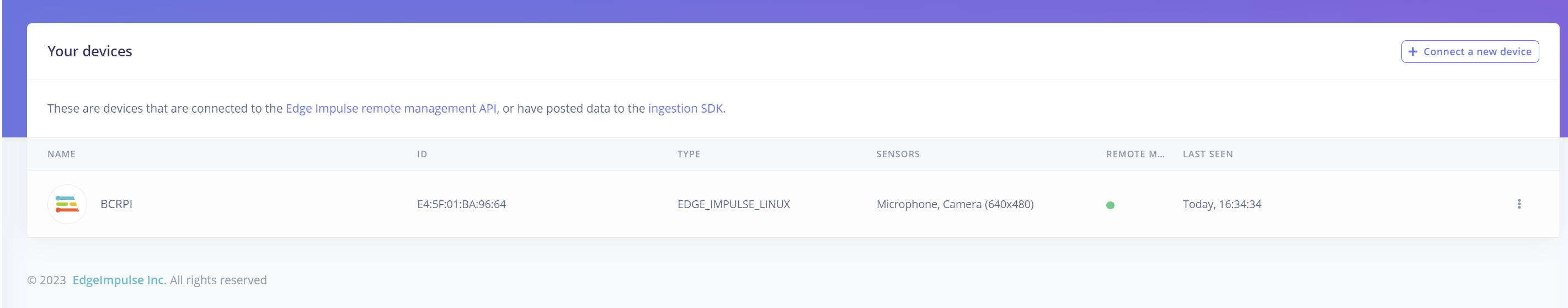

Once the Edge Impulse client is installed, we can run it on the Raspberry Pi, enter our Edge Impulse credentials, and select the project.

This connects the board to our project so that we can perform live classification of images on the Raspberry Pi.

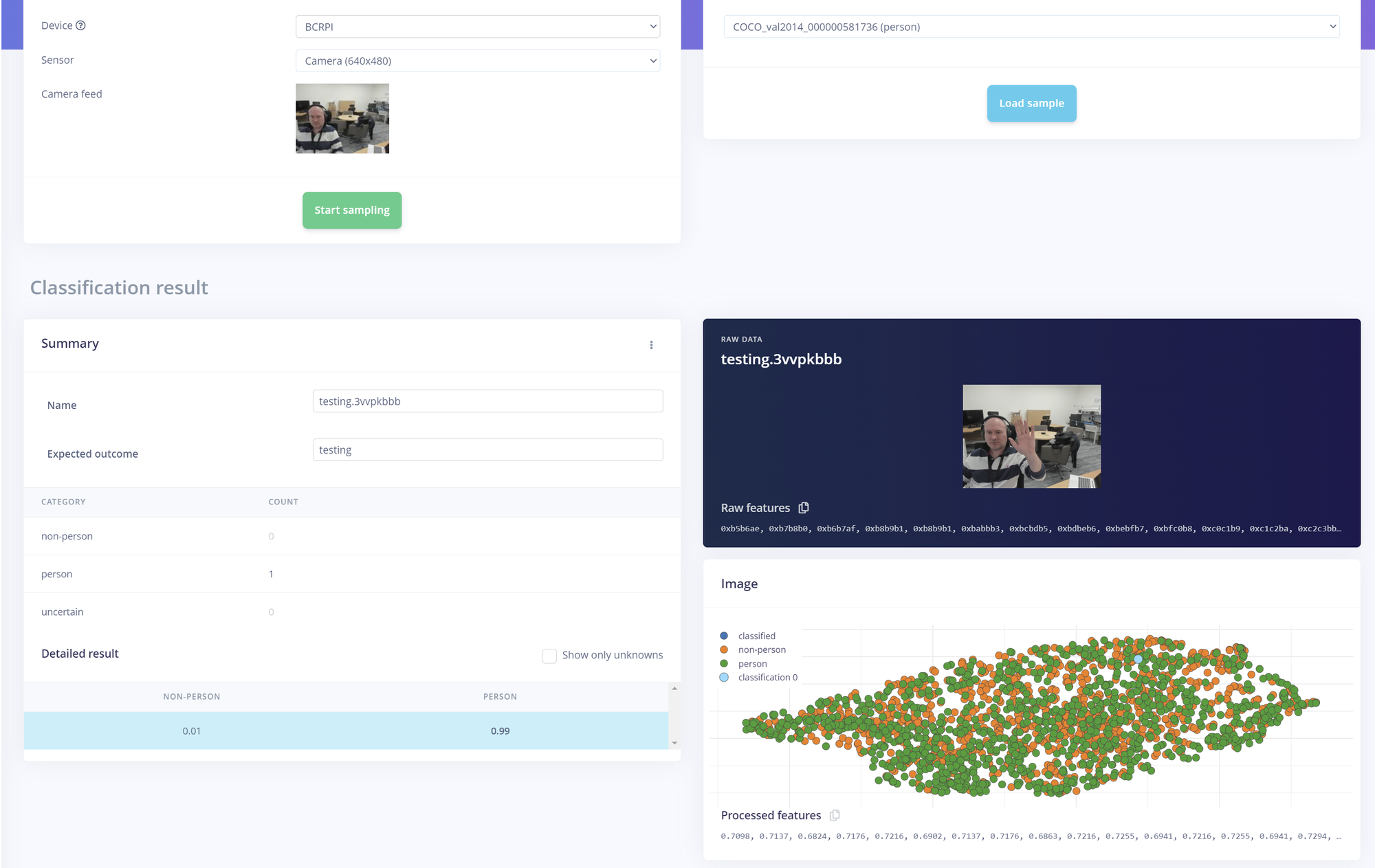

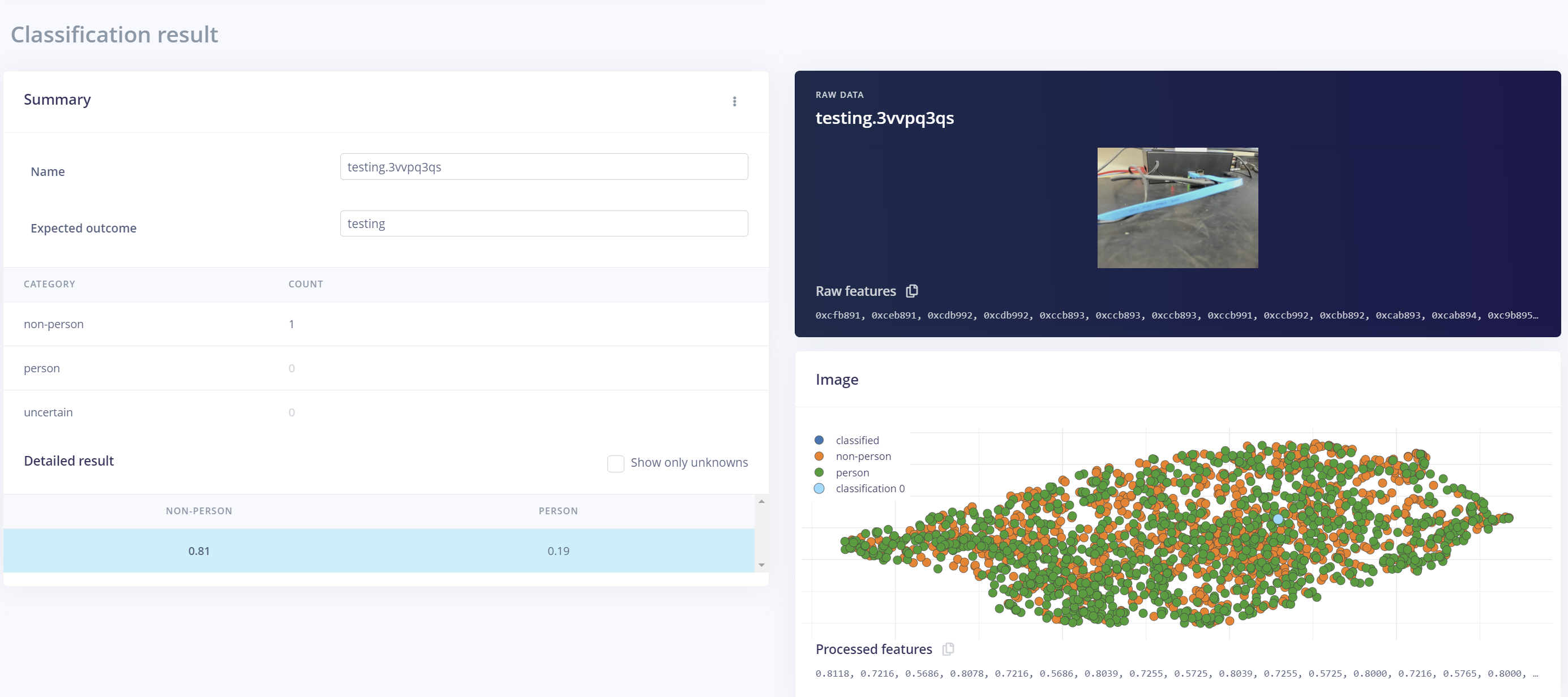

With the board connected, we can run live classification: images are captured and processed on the board, and the results are displayed in Edge Impulse Studio. This lets us test the model with live data from the field and make any necessary adjustments to the configuration and training of the network.

The final step is to deploy the trained network on the Raspberry Pi and run the model on the hardware.

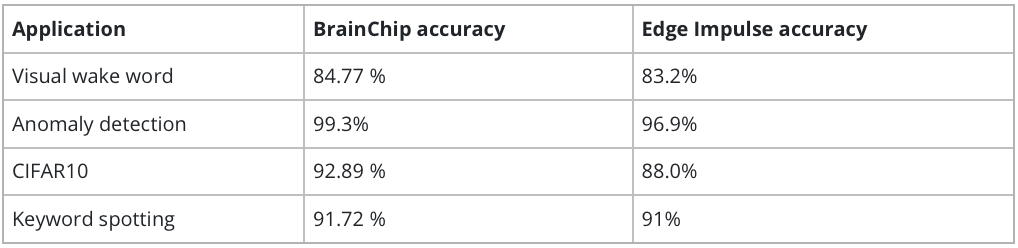
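To compare your own deployment against the demo's roughly 100 FPS, you can time inference on the board. The sketch below is a generic timing harness, not part of the Edge Impulse tooling: `classify` is a hypothetical placeholder for whatever inference call you use on the Raspberry Pi (for example, a wrapper around the Edge Impulse runner), and `dummy_classify` stands in for it here. It measures wall-clock throughput including Python overhead, so it will not exactly match the figures the BrainChip demo reports.

```python
import time
from typing import Any, Callable, Iterable, List, Tuple


def measure_throughput(
    classify: Callable[[Any], Any], frames: Iterable[Any], warmup: int = 5
) -> Tuple[List[Any], float]:
    """Run classify over frames and return (results, frames per second).

    The first `warmup` frames are classified once beforehand and excluded
    from timing, so one-off start-up costs do not skew the result.
    """
    frame_list = list(frames)
    for frame in frame_list[:warmup]:
        classify(frame)
    start = time.perf_counter()
    results = [classify(frame) for frame in frame_list]
    elapsed = time.perf_counter() - start
    fps = len(frame_list) / elapsed if elapsed > 0 else float("inf")
    return results, fps


# Hypothetical stand-in classifier; replace with a call into your on-board model.
def dummy_classify(frame: Any) -> dict:
    return {"person": 0.0, "non-person": 1.0}


results, fps = measure_throughput(dummy_classify, range(200))
print(f"{len(results)} frames at {fps:.0f} FPS")
```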

In this example, I set out to see whether I could use Edge Impulse to recreate the accuracy BrainChip achieved for visual wake word detection. The original accuracy of the BrainChip VWW model was 84.77%, while the Edge Impulse-trained version reached 83.2%, which is very close to the original result and within what I would consider the margin of error.
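Whether a 1.57 percentage-point gap falls within the margin of error depends on how many test images each accuracy was measured on, and on whether both were measured on the same test set. The sketch below uses the standard normal-approximation (Wald) 95% confidence interval for a proportion; the test-set size is not stated in this post, so it is left as a parameter for you to fill in from your own project.

```python
import math
from typing import Tuple


def accuracy_ci95(accuracy: float, n_test: int) -> Tuple[float, float]:
    """Normal-approximation (Wald) 95% confidence interval for an accuracy.

    accuracy is a fraction in [0, 1]; n_test is the number of test samples.
    """
    if n_test <= 0:
        raise ValueError("n_test must be positive")
    se = math.sqrt(accuracy * (1.0 - accuracy) / n_test)
    half_width = 1.96 * se
    return accuracy - half_width, accuracy + half_width


gap_pp = (0.8477 - 0.832) * 100  # difference in percentage points
print(f"gap: {gap_pp:.2f} pp")  # gap: 1.57 pp
```

Calling `accuracy_ci95(0.832, n_test)` with your project's test-set size shows how wide the uncertainty around 83.2% is; the larger the test set, the narrower the interval.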

Of course, visual wake word is just one application that benefits from the BrainChip architecture. I was therefore curious how the architecture would perform on other applications, including keyword spotting, anomaly detection, and CIFAR-10 classification. Following the same process as above, I created an Edge Impulse project for each of these three applications and loaded the datasets BrainChip had used.

I have linked the projects at the end of this blog. The table below shows how closely the results I was able to recreate relatively easily with Edge Impulse align with those BrainChip achieved.

All told, I am very impressed with the BrainChip Akida neuromorphic processor. The implemented networks perform very well, while the power consumed is exceptionally low. Both are critical parameters for embedded solutions deployed at the edge.

Project links

- Visual wake word: https://studio.edgeimpulse.com/studio/224143

- Anomaly detection: https://studio.edgeimpulse.com/studio/261242

- CIFAR10: https://studio.edgeimpulse.com/studio/257103

- Keyword spotting: https://studio.edgeimpulse.com/studio/257193