We are thrilled to announce official support for the Espressif Systems ESP32 system-on-chip (SoC) and the ESP32-based ESP-EYE development board.

ESP32 is a series of low-cost, low-power SoCs with integrated Wi-Fi and dual-mode Bluetooth. Thanks to its affordable price, robust design, and wealth of connectivity options, a thriving ecosystem has formed around it, and it is widely used by the developer community and industry alike.

There are dozens of ESP32 variants and development boards designed around the SoC currently on the market. We have selected the ESP-EYE as the reference for Edge Impulse Studio integration since it is equipped with an OV2640 camera, a microphone, 8 MB of PSRAM, and 4 MB of flash memory, allowing for the development of voice, vision, and other sensor data-enabled machine learning applications. Having said that, both the pre-built firmware and its source code can be useful for connecting other ESP32-based boards to the Edge Impulse ecosystem. More on that below!

Getting started with the ESP-EYE

To get started with the ESP32 and Edge Impulse you’ll need:

- An ESP-EYE development board. The pre-built firmware for data collection and inference is made specifically for the sensor combination found on the ESP-EYE, but with a few simple steps you can collect data and run your models on other ESP32-based boards, including the ESP-CAM. For more details, check out “Using with other ESP32 boards.”

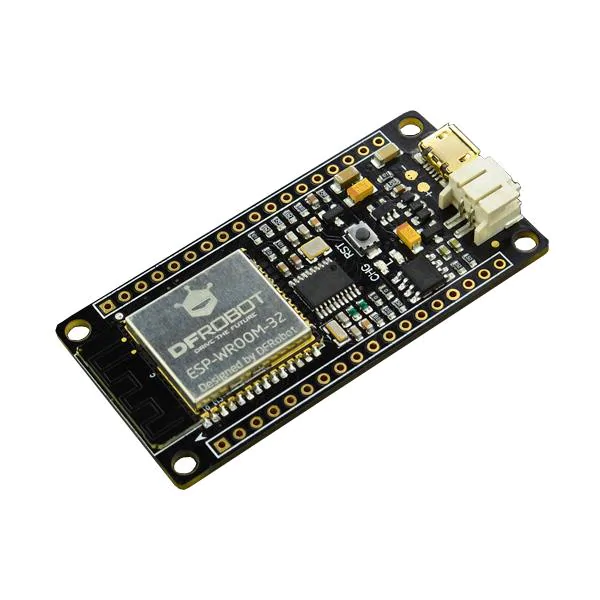

- If you wish to try analog sensor or accelerometer data collection, you can use the ESP32 FireBeetle board. The default firmware supports the LIS3DHTR module connected to I2C (SCL pin 22, SDA pin 21) and any analog sensor connected to A0.

The Edge Impulse firmware for this development board is open source and hosted on GitHub.

To get started in just a few minutes, take a look at our guide or watch the following video tutorial, which will walk through how to flash the default firmware to instantly begin data collection into your Edge Impulse project:

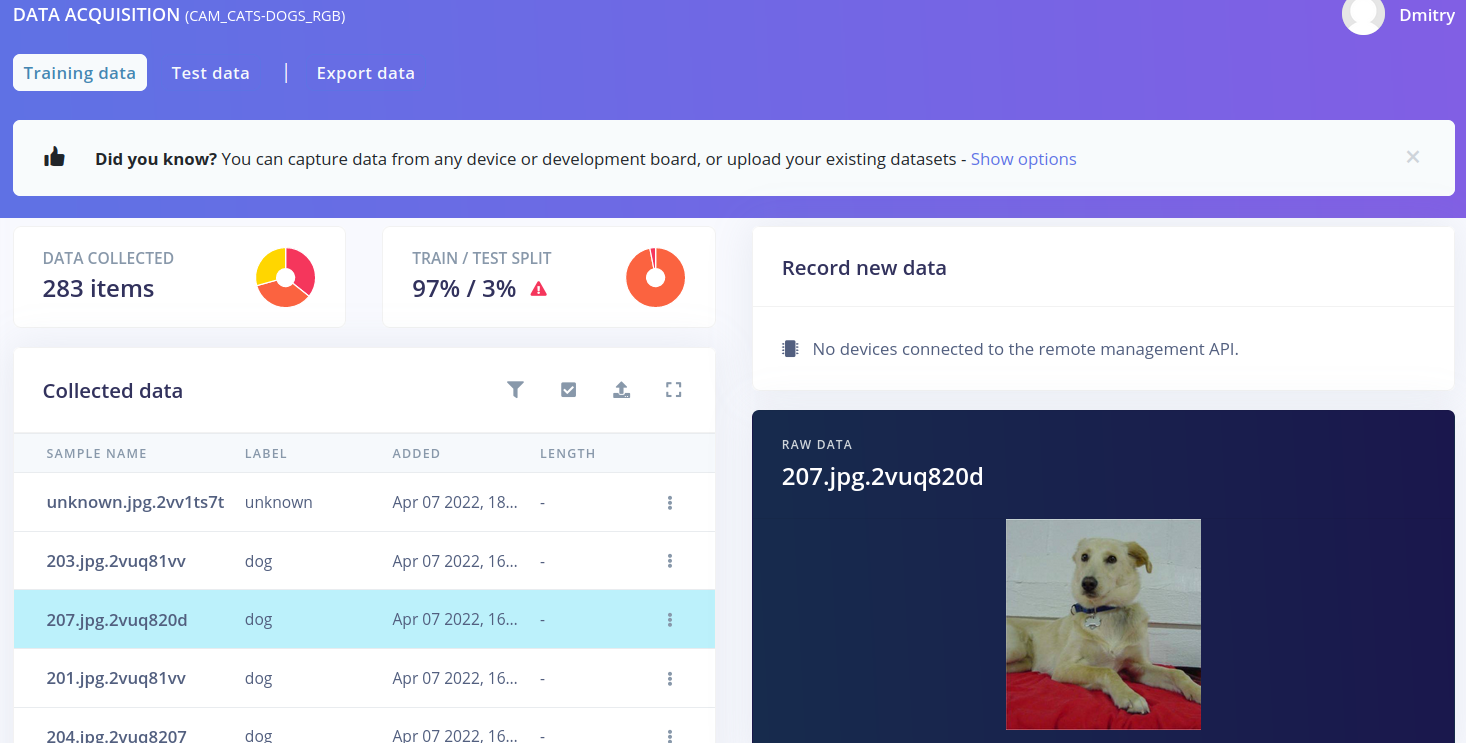

In the video above, we demonstrate sample collection with the ESP-EYE camera. You can collect more data yourself, or use one of the freely available datasets like this one to build an image recognition project in under an hour!

Optimizations and benchmarks

ESP32 represents a new milestone for Edge Impulse not only because of its immense popularity in the community, but also because it is the first major non-Arm processor board we support. That brought a set of unique challenges when working on the firmware: the Arm-specific CMSIS-NN and CMSIS-DSP optimizations could not be used on the Tensilica Xtensa LX6 microprocessor. Espressif engineers are developing their own sets of optimized kernels and operations for faster neural network inference on their SoCs, ESP-NN and ESP-DSP. ESP-NN support is already integrated into the official Edge Impulse firmware and brings a 3x-4x speedup over the default TensorFlow Lite for Microcontrollers kernels. That allows our keyword spotting project to run in real time with high accuracy:

Without ESP-NN:

Predictions (DSP: 140 ms., Classification: 20 ms., Anomaly: 0 ms.):

no: 0.074219

noise: 0.638672

unknown: 0.265625

yes: 0.017578

With ESP-NN:

Predictions (DSP: 140 ms., Classification: 5 ms., Anomaly: 0 ms.):

no: 0.001953

noise: 0.996094

unknown: 0.000000

yes: 0.000000
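The two outputs above share a regular shape, so they can be compared programmatically. The following is a minimal Python sketch, not part of the Edge Impulse tooling: the helper name `parse_predictions` and both regular expressions are assumptions derived only from the log format shown here.

```python
import re

# Hypothetical helper: the patterns below assume the serial output format shown above.
TIMING_RE = re.compile(r"(DSP|Classification|Anomaly): (\d+) ms\.")
SCORE_RE = re.compile(r"^\s*([A-Za-z_]\w*): (\d+\.\d+)\s*$", re.MULTILINE)


def parse_predictions(log: str):
    """Return (timings in ms, class scores) from one 'Predictions' block."""
    timings = {name: int(ms) for name, ms in TIMING_RE.findall(log)}
    scores = {label: float(value) for label, value in SCORE_RE.findall(log)}
    return timings, scores


without_esp_nn = """Predictions (DSP: 140 ms., Classification: 20 ms., Anomaly: 0 ms.):
no: 0.074219
noise: 0.638672
unknown: 0.265625
yes: 0.017578
"""

with_esp_nn = """Predictions (DSP: 140 ms., Classification: 5 ms., Anomaly: 0 ms.):
no: 0.001953
noise: 0.996094
unknown: 0.000000
yes: 0.000000
"""

slow, slow_scores = parse_predictions(without_esp_nn)
fast, fast_scores = parse_predictions(with_esp_nn)

# Only the classification stage changes between the two runs.
speedup = slow["Classification"] / fast["Classification"]
top_label = max(fast_scores, key=fast_scores.get)
print(f"Classification speedup: {speedup:.1f}x, top label: {top_label}")
# prints "Classification speedup: 4.0x, top label: noise"
```

Note that the DSP time is 140 ms in both runs: ESP-NN accelerates the neural network kernels only, which is why the signal processing stage is untouched here.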

After applying more optimizations, the total inference time is 105 ms for a 250 ms window of data. Tabular data from sensors such as accelerometers works even better, since it typically has less data in an inferencing window and uses a smaller model. For example, a three-layer fully connected model for continuous gesture recognition has an inference time of just 1 ms. For vision models, thanks to ESP-NN optimizations, it is possible to run smaller MobileNet v1 (alpha 0.1 96x96 RGB) models with ~200 ms inference time per frame. However, MobileNet v2 has a few NN operators that are not optimized with ESP-NN, so its inference is significantly slower at ~800 ms per frame for comparable models. The recently introduced FOMO family of object detection models (MobileNet v2 96x96 alpha 0.35 backend) runs with ~862 ms latency. Below is a benchmark table for a few reference models.

| Model | Inference latency |
| --- | --- |
| Keyword spotting (Conv1D) | 3 ms |
| Continuous gesture (Fully connected) | 1 ms |
| MobileNet v1 alpha 0.1 Grayscale 96x96 | 186 ms |
| MobileNet v2 alpha 0.1 Grayscale 96x96 | 817 ms |
| MobileNet v1 alpha 0.1 RGB 96x96 | 205 ms |
| MobileNet v2 alpha 0.1 RGB 96x96 | 839 ms |
| FOMO alpha 0.35 Grayscale 96x96 | 862 ms |
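To put these latencies in perspective, they can be converted into an upper bound on throughput and checked against a real-time budget. The snippet below is an illustrative Python sketch. The helper names `max_inferences_per_second` and `keeps_up` are made up for this example, and the bound ignores DSP, capture, and other overhead, so actual throughput on a device will be lower.

```python
# Latencies in milliseconds, copied from the benchmark table above.
LATENCY_MS = {
    "Keyword spotting (Conv1D)": 3,
    "Continuous gesture (Fully connected)": 1,
    "MobileNet v1 alpha 0.1 Grayscale 96x96": 186,
    "MobileNet v2 alpha 0.1 Grayscale 96x96": 817,
    "MobileNet v1 alpha 0.1 RGB 96x96": 205,
    "MobileNet v2 alpha 0.1 RGB 96x96": 839,
    "FOMO alpha 0.35 Grayscale 96x96": 862,
}


def max_inferences_per_second(latency_ms: float) -> float:
    """Upper bound on throughput if inference were the only cost."""
    return 1000.0 / latency_ms


def keeps_up(total_ms: float, window_ms: float) -> bool:
    """True when processing one window takes less time than the window spans."""
    return total_ms < window_ms


for model, ms in LATENCY_MS.items():
    print(f"{model}: at most {max_inferences_per_second(ms):.1f} inferences/s")

v1 = LATENCY_MS["MobileNet v1 alpha 0.1 RGB 96x96"]
v2 = LATENCY_MS["MobileNet v2 alpha 0.1 RGB 96x96"]
print(f"MobileNet v2 vs. v1 (RGB): {v2 / v1:.1f}x slower")

# Keyword spotting figures quoted in the text: 105 ms total per 250 ms window.
print("Keyword spotting keeps up:", keeps_up(105, 250))
```

With the figures quoted in this post, the keyword spotting pipeline finishes each 250 ms window well within its budget, while the MobileNet v2 models take roughly four times as long per frame as their v1 counterparts.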

Which sensors are supported?

ESP-EYE was chosen as the reference board for the Edge Impulse ESP32 firmware because it is readily available, affordable, and features both a camera and a microphone. Other ESP32-based boards can run both the data collection firmware and trained models as well, with some changes to the source code to account for differences between the boards. For more details, check out “Using with other ESP32 boards.”

The standard firmware supports the following sensors:

- Camera: OV2640, OV3660, OV5640 modules from Omnivision

- Microphone: I2S microphone on ESP-EYE (MIC8-4X3-1P0)

- LIS3DHTR module connected to I2C (SCL pin 22, SDA pin 21)

- Any analog sensor, connected to A0

Analog sensor and LIS3DHTR support was tested with the ESP32 FireBeetle board and the Grove 3-Axis Digital Accelerometer (LIS3DHTR) module.

Better, faster, stronger

As you might have noticed earlier in this post, there is another optimization that can be included in the ESP32 firmware, namely ESP-DSP, primarily for speeding up the FFT calculations used in the spectral analysis, MFCC, and MFE DSP blocks from Edge Impulse Studio. That is something our team has in its sights for the next firmware update, along with some other enhancements. The ESP32 firmware, like any other firmware for Edge Impulse supported devices, is open source and available in a public GitHub repository. If you have suggestions or, even better, pull requests with implemented features and optimizations, feel free to get in touch with us!
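To illustrate why the FFT is the natural target for such an optimization, here is a minimal pure-Python sketch of a power spectrum computed with a radix-2 FFT. It is neither ESP-DSP code nor the Edge Impulse DSP block implementation; the function names, the 64-sample frame, and the test tone are all assumptions chosen for illustration.

```python
import cmath
import math


def fft(samples):
    """Recursive radix-2 Cooley-Tukey FFT; len(samples) must be a power of two."""
    n = len(samples)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a non-zero power of two")
    if n == 1:
        return [complex(samples[0])]
    even = fft(samples[0::2])
    odd = fft(samples[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def power_spectrum(samples):
    """Power per frequency bin for the non-negative frequencies."""
    n = len(samples)
    return [abs(x) ** 2 / n for x in fft(samples)[: n // 2 + 1]]


# Illustrative input: a pure tone that completes 8 cycles in a 64-sample frame.
FRAME_LEN = 64
TONE_BIN = 8
frame = [math.sin(2 * math.pi * TONE_BIN * i / FRAME_LEN) for i in range(FRAME_LEN)]

spectrum = power_spectrum(frame)
peak_bin = max(range(len(spectrum)), key=spectrum.__getitem__)
print("Peak frequency bin:", peak_bin)  # prints "Peak frequency bin: 8"
```

Spectral features such as MFE and MFCC repeat a transform like this for every frame of audio, so the FFT runs many times per inference window, which is where hand-optimized routines are likely to pay off most.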

Happy discovery!