These days you can find feature-packed home security cameras starting at under $30, so it should come as no surprise that these tiny devices are popping up in homes everywhere. Typically equipped with motion detection and notification capabilities, security cameras can give us peace of mind that everything is in good order while we are away. These conveniences come with some fairly significant drawbacks, however. The motion detection, especially in lower-end cameras, is not very sophisticated, and often triggers an alert when a pet walks into view or when light reflecting off a passing car shines through a window. Excessive, uninformative alerts lead to notification fatigue, which is likely to cause people to ignore alerts and potentially miss something important. An even larger problem is privacy. How certain can you be that a disgruntled employee of the device manufacturer is not snooping on your living room? And what about the possibility of exploits (which manufacturers are not always upfront about) that give bad actors access?

To address these issues, I decided to build my own security camera that uses machine learning to detect people, while ignoring irrelevant distractions like pets. All operations are performed entirely on-device to avoid privacy issues. When a person is detected by the camera, the system will send an alert to a specified email address. In addition to being able to customize the camera to do whatever I want, building the system from the ground up sets my mind at ease about a camera pointing at me in my home, because I know exactly what it does (and does not) do.

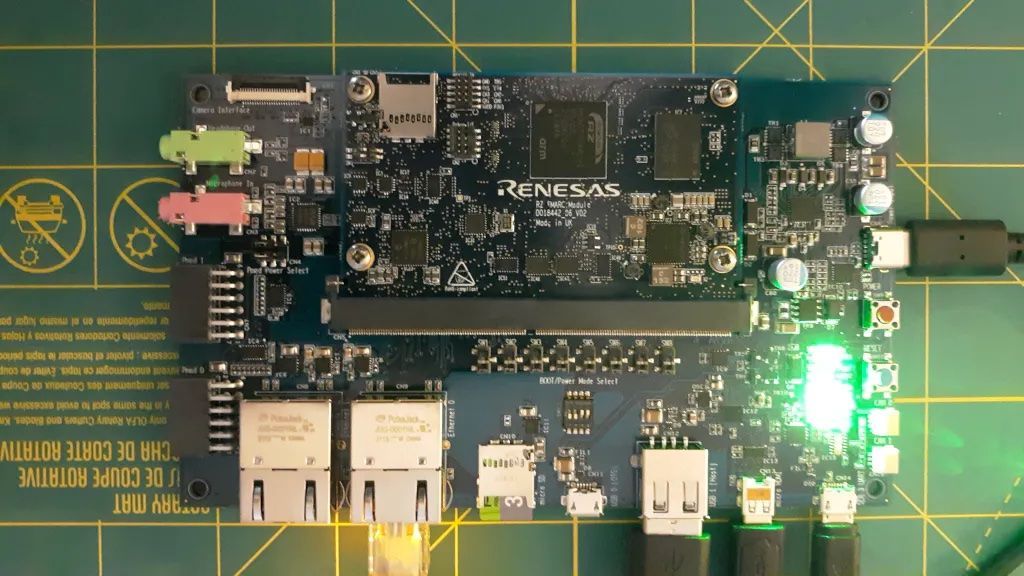

To get this project off the ground, I needed to choose a solid hardware platform that can handle real-time image capture and object recognition. I picked the Linux-capable Renesas RZ/G2L evaluation board kit, which is very well suited to embedded ML applications, pairing a 1.2 GHz Arm Cortex-A55 application processor with a 200 MHz Cortex-M33 core. The 2 GB of SDRAM and 512 MB of flash memory were well in excess of what I needed to make my project work, which leaves room for additional functionality in the future. Gigabit Ethernet made it simple to get connected to the Internet to send email notifications. I used a USB webcam to capture images; the RZ/G2L detected it immediately, so I didn’t have to worry about installing any drivers.

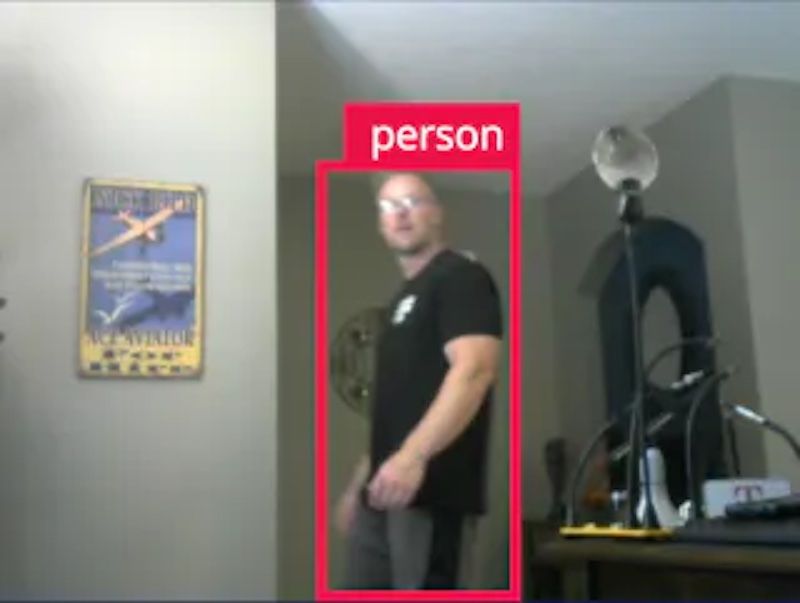

With the hardware ready to go, it was time to build an application that captures webcam images, determines whether people are in the frame, and, if so, sends an email alert. I used OpenCV to capture webcam images and display them on the screen, and I chose Edge Impulse to simplify building a machine learning object detection pipeline that is optimized for embedded devices. To build an object detection pipeline, you first need examples of the objects that you want to detect, so I wrote a program to collect webcam images with and without people present in them. In total, I captured 461 images, uploaded them to Edge Impulse, and then drew bounding boxes around the objects with the data acquisition tool.
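The collection program is not reproduced in this post, but a minimal sketch of what such a script might look like is shown here, assuming Python with the `opencv-python` package installed and the webcam at device index 0. The function names, output folder, image count, and capture interval are all illustrative assumptions rather than details from the project.

```python
import time
from pathlib import Path


def frame_path(out_dir, index, label="frame"):
    """Build a zero-padded file name such as dataset/frame_0007.jpg."""
    return Path(out_dir) / f"{label}_{index:04d}.jpg"


def collect_images(out_dir="dataset", count=50, interval_s=1.0, device=0):
    """Save `count` webcam frames to `out_dir`, one every `interval_s` seconds."""
    import cv2  # imported here so frame_path() works without OpenCV installed

    Path(out_dir).mkdir(parents=True, exist_ok=True)
    cap = cv2.VideoCapture(device)
    if not cap.isOpened():
        raise RuntimeError(f"Could not open camera at index {device}")

    saved = 0
    try:
        while saved < count:
            ok, frame = cap.read()
            if not ok:
                break  # camera stopped delivering frames
            cv2.imwrite(str(frame_path(out_dir, saved)), frame)
            saved += 1
            time.sleep(interval_s)
    finally:
        cap.release()
    return saved


if __name__ == "__main__":
    print(f"Saved {collect_images()} images")
```

Running it once with people in view and once with an empty room gives both kinds of examples; the resulting folder can then be uploaded to Edge Impulse for labeling.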

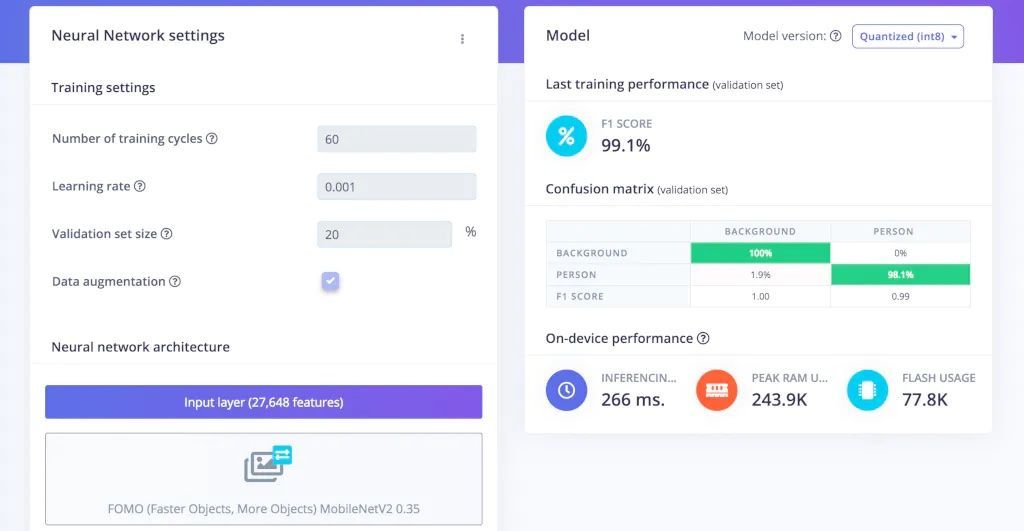

To build the model, I created an impulse that begins with a preprocessing step, which resizes the input images for more efficient downstream analysis, followed by an object detection neural network. This neural network implements Faster Objects, More Objects (FOMO), which was designed for real-time object detection on resource-constrained devices. It is 30 times faster than MobileNet SSD, yet runs in less than 200 KB of RAM. After training on the data I collected, the model achieved a 99.1% object classification accuracy rate.
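Unlike bounding-box detectors, FOMO outputs a coarse grid of per-cell scores (by default, one cell for each 8x8 pixel block of the input) and reports object centroids rather than boxes. The sketch below is a simplified Python illustration of that idea, not the Edge Impulse SDK's actual post-processing (which, among other things, merges neighboring cells). The function name, the 0.5 threshold, and the tiny example grid are assumptions made for illustration.

```python
def find_centroids(heatmap, threshold=0.5, cell_px=8):
    """Return (x_px, y_px, score) for every grid cell scoring >= threshold.

    `heatmap` is a list of rows of per-cell "person" scores; each cell
    covers a cell_px x cell_px block of the resized input image, and the
    reported coordinates are the center of that block.
    """
    hits = []
    for row, scores in enumerate(heatmap):
        for col, score in enumerate(scores):
            if score >= threshold:
                x = col * cell_px + cell_px // 2
                y = row * cell_px + cell_px // 2
                hits.append((x, y, score))
    return hits


# A 3x3 grid stands in for the model output on a 24x24 pixel input.
example = [
    [0.0, 0.1, 0.0],
    [0.0, 0.2, 0.9],
    [0.0, 0.0, 0.3],
]
print(find_centroids(example))  # [(20, 12, 0.9)]
```

Because the output grid is so much smaller than the input image, this step is very cheap, which is part of why the approach suits resource-constrained devices.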

The only thing left to do at this point was to deploy the model to the hardware platform. I exported it from Edge Impulse as a C++ library and easily integrated it into my own application, which handles capturing images and sending notifications. After compiling the code, I transferred it to the RZ/G2L board and started it up.
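The notification step can be illustrated on its own. The following is a hedged Python sketch using only the standard library's `smtplib` and `email` modules; it is not the code from the application described above, which is built around the exported C++ library. The addresses, subject line, SMTP host, and port are placeholders, and the sketch assumes a mail server that supports STARTTLS.

```python
import smtplib
from email.message import EmailMessage


def build_alert(sender, recipient, person_count):
    """Create the alert email (hypothetical wording and subject line)."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = "Security camera: person detected"
    msg.set_content(f"{person_count} person(s) detected by the camera.")
    return msg


def send_alert(msg, host, port=587, username=None, password=None):
    """Send the message through an SMTP server using STARTTLS."""
    with smtplib.SMTP(host, port, timeout=10) as smtp:
        smtp.starttls()
        if username:
            smtp.login(username, password)
        smtp.send_message(msg)


if __name__ == "__main__":
    alert = build_alert("camera@example.com", "me@example.com", 1)
    # smtp.example.com is a placeholder; substitute a real mail server.
    send_alert(alert, "smtp.example.com")
```

Keeping message construction separate from delivery makes it possible to check the alert contents without a mail server. In practice, it may also be worth limiting how often alerts are sent so that a person standing in view does not trigger one email per frame.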

Keeping all image capture and processing local eliminates the privacy concerns described earlier, since no third party handles the data at a remote location. Further, because the system specifically detects the presence of a person (as opposed to a pet, or a flash of bright light through a window from a passing car), notifications are sent only when they are relevant and worthy of attention.

For additional details about the machine learning pipeline, head on over to my public Edge Impulse project. You can find the source code, and more implementation details, in my project write-up.
