Introduction

Wheel lug nuts are such a tiny part of the overall automobile assembly that they’re easy to overlook, yet they serve a critical function in the safe operation of an automobile. In fact, it is not safe to drive with even one lug nut missing. A single missing lug nut increases the pressure on the wheel, which in turn can damage the wheel bearings and studs and cause the other lug nuts to fall off.

Over the years there have been a number of documented safety recalls and issues around wheel lug nuts. In some cases, it was only identified after the fact that the automobile manufacturer had installed lug nut types that were incompatible with the wheel, or had been inconsistent in installing the right type of lug nut. Even after delivery, years of wear and tear may cause the lug nuts to loosen or even fall off, making the automobile unstable and unsafe to keep in service. To reduce these incidents, both in quality control at manufacturing and in maintenance in the field, there is a huge opportunity to leverage machine learning at the edge to automate wheel lug nut detection.

This motivated us to create a proof-of-concept reference project for automating wheel lug nut detection. By putting together a USB webcam, a Raspberry Pi 4, Microsoft Azure IoT, and Edge Impulse, we created an end-to-end wheel lug nut detection system based on object detection. This example use case and its derivatives will find a home in many industrial IoT scenarios where embedded machine learning can help improve the efficiency of factory automation and quality control processes, including predictive maintenance.

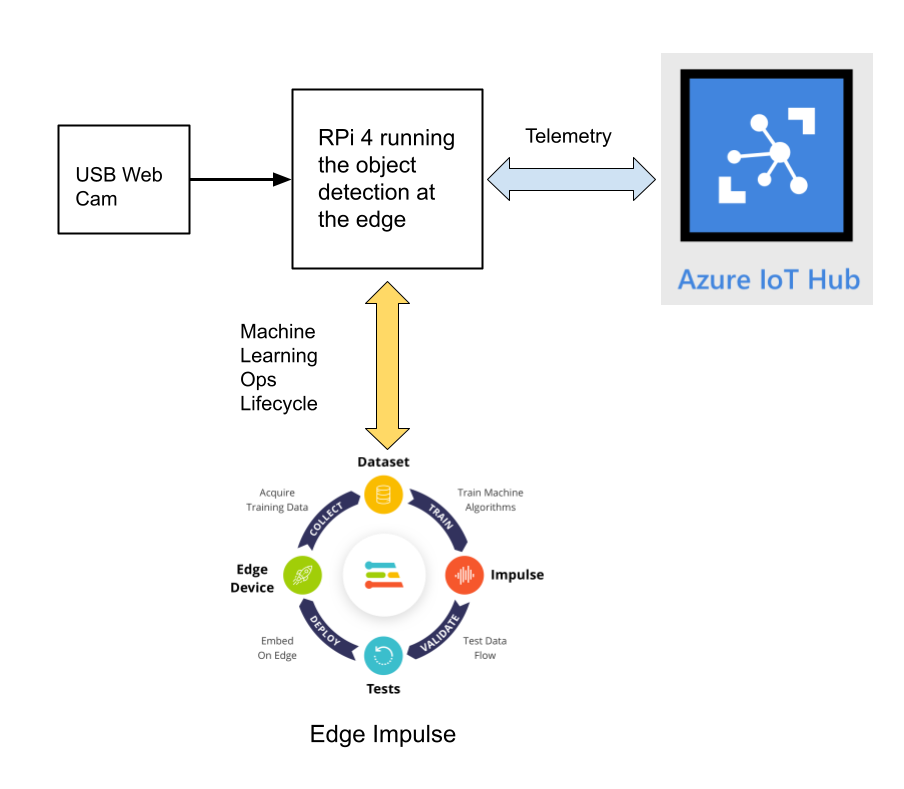

This reference project will serve as a guide for quickly getting started with Edge Impulse on the Raspberry Pi 4 and Azure IoT, to train a model that detects lug nuts on a wheel and sends inference conclusions to Azure IoT as shown in the block diagram below:

Design Concept: Edge Impulse and Azure IoT

Edge Impulse is an embedded machine learning platform that allows you to manage the entire Machine Learning Ops (MLOps) lifecycle, which includes 1) Data acquisition, 2) Signal processing, 3) ML training, 4) Model testing, and 5) Creating a deployable model that can run efficiently on an edge device.

For the edge device, we chose the Raspberry Pi 4 due to its ubiquity and its processing power, which is enough to efficiently run more sophisticated machine learning models such as object detection. By running the object detection model on the Raspberry Pi 4, we keep the bandwidth used by the connection to Azure IoT low, which helps robustness and scalability, because only the inference conclusions are sent, i.e. “How many lug nuts are on the wheel?” Once the inference conclusions are available at the Azure IoT level, it becomes straightforward to feed these results into your business applications that can leverage other Azure services such as Azure Stream Analytics and Power BI.
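As a minimal sketch of what "only sending the inference conclusions" could look like, the Python below reduces a list of detections to a single count and wraps it in a small JSON message. The detection fields (`label`, `value`, `x`, `y`, `width`, `height`), the label name `lug_nut`, the confidence threshold, the device ID, and the message field names are all assumptions made for illustration. Transmitting the message to Azure IoT Hub is deliberately left out.

```python
import json
from datetime import datetime, timezone


def count_detections(bounding_boxes, label="lug_nut", min_confidence=0.5):
    """Count detections of one label that meet a confidence threshold.

    Each box is assumed to be a dict with at least "label" and "value"
    (confidence) keys; this shape is an assumption for illustration.
    """
    return sum(
        1
        for box in bounding_boxes
        if box["label"] == label and box["value"] >= min_confidence
    )


def build_message(bounding_boxes, device_id="rpi4-wheel-cam"):
    """Build a compact JSON payload carrying only the inference conclusion."""
    payload = {
        "deviceId": device_id,  # hypothetical device name
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "lugNutCount": count_detections(bounding_boxes),
    }
    return json.dumps(payload)


if __name__ == "__main__":
    detections = [
        {"label": "lug_nut", "value": 0.92, "x": 40, "y": 60, "width": 24, "height": 24},
        {"label": "lug_nut", "value": 0.31, "x": 90, "y": 62, "width": 22, "height": 23},
        {"label": "wheel", "value": 0.97, "x": 10, "y": 10, "width": 300, "height": 300},
    ]
    print(build_message(detections))
```

The payload stays a few dozen bytes regardless of image size, which is the point of running inference on the device rather than streaming frames to the cloud.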

In the next sections we’ll discuss how you can set this up yourself with the following items:

- Raspberry Pi 4

- USB webcam (such as a Logitech HD webcam)

- Edge Impulse account

- Azure IoT Hub instance

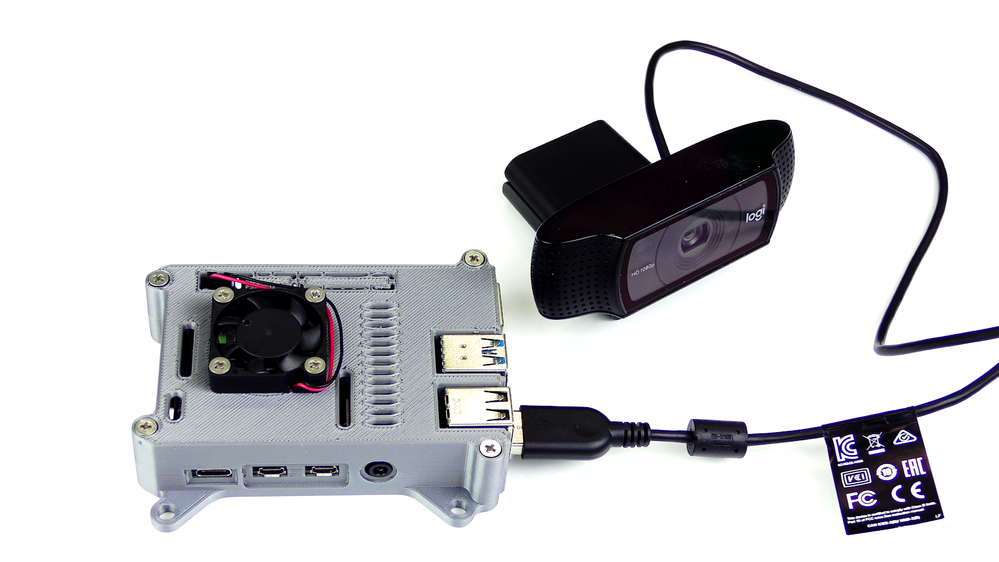

Setting Up the Hardware

We begin by connecting the Raspberry Pi 4 to a Wi-Fi network, configuring it for camera support, and installing the Edge Impulse Linux CLI (command line interface) tools on it. This will allow the Raspberry Pi 4 to connect directly to Edge Impulse for data acquisition and, finally, deployment of the wheel lug nut detection model.

For starters, you’ll need a Raspberry Pi 4 with an up-to-date Raspberry Pi OS image that can be found here. After flashing this image to an SD card, add a file named ‘wpa_supplicant.conf’ with the following contents:

ctrl_interface=DIR=/var/run/wpa_supplicant GROUP=netdev

update_config=1

country=<Insert 2 letter ISO 3166-1 country code here>

network={

ssid="<Name of your wireless LAN>"

psk="<Password for your wireless LAN>"

}

Then add an empty file named ‘ssh’. Both files go in the ‘/boot’ directory. With them in place, you can go ahead and power up the board.
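If you prepare SD cards often, the two boot files described above can also be written by a short script. The following is a minimal sketch, assuming the SD card's boot partition is already mounted on your computer; the function name, its parameters, and the example mount path are hypothetical.

```python
from pathlib import Path

# Template mirroring the wpa_supplicant.conf contents shown above.
WPA_TEMPLATE = """ctrl_interface=DIR=/var/run/wpa_supplicant GROUP=netdev
update_config=1
country={country}

network={{
    ssid="{ssid}"
    psk="{psk}"
}}
"""


def write_headless_boot_files(boot_dir, country, ssid, psk):
    """Write wpa_supplicant.conf and an empty ssh file into boot_dir."""
    boot = Path(boot_dir)
    config = WPA_TEMPLATE.format(country=country, ssid=ssid, psk=psk)
    (boot / "wpa_supplicant.conf").write_text(config)
    # An empty file named "ssh" is all that is needed; its contents are ignored.
    (boot / "ssh").touch()


if __name__ == "__main__":
    # "/media/user/boot" is a placeholder; use wherever your SD card is mounted.
    write_headless_boot_files("/media/user/boot", "US", "MyNetwork", "MyPassword")
```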

Once you’ve successfully SSH’d into the device with:

ssh pi@<IP_ADDRESS>

and the password ‘raspberry’, it’s time to install the dependencies for the Edge Impulse Linux SDK. Run the next three commands to set up the Node.js environment and everything else that’s required for the edge-impulse-linux wizard:

curl -sL https://deb.nodesource.com/setup_12.x | sudo bash -

sudo apt install -y gcc g++ make build-essential nodejs sox gstreamer1.0-tools gstreamer1.0-plugins-good gstreamer1.0-plugins-base gstreamer1.0-plugins-base-apps

npm config set user root && sudo npm install edge-impulse-linux -g --unsafe-perm

For more details on setting up the Raspberry Pi 4 with Edge Impulse, visit this link.

Since this project deals with images, we’ll need some way to capture them. The wizard supports both the Pi camera modules and standard USB webcams, so make sure to enable the camera module first with:

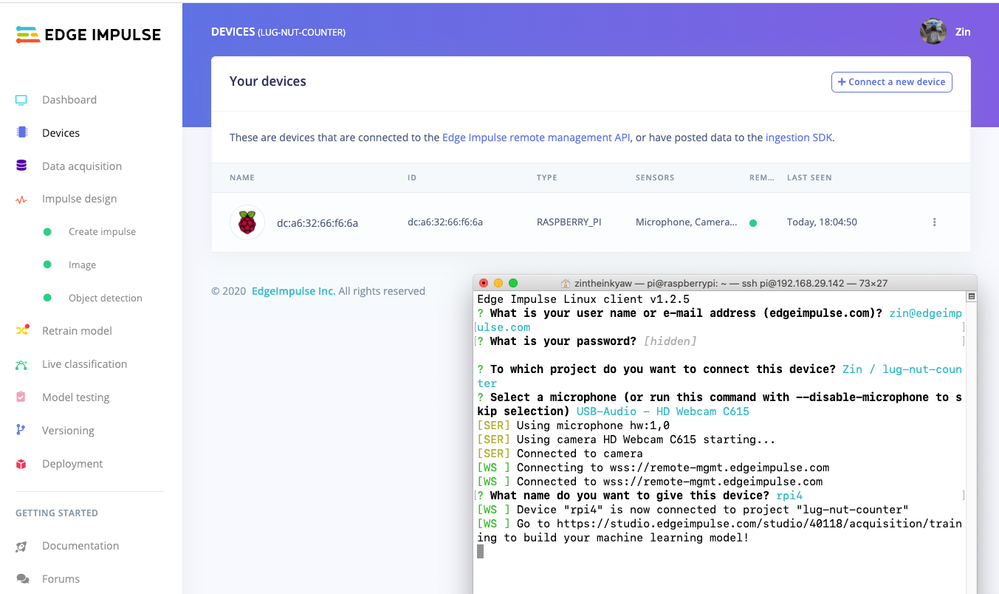

sudo raspi-configif you plan on using one. With that completed, go to Edge Impulse and create a new project, then run the wizard with:

edge-impulse-linuxand make sure your device appears within the Edge Impulse Studio’s device section after logging in and selecting your project.

Data Acquisition

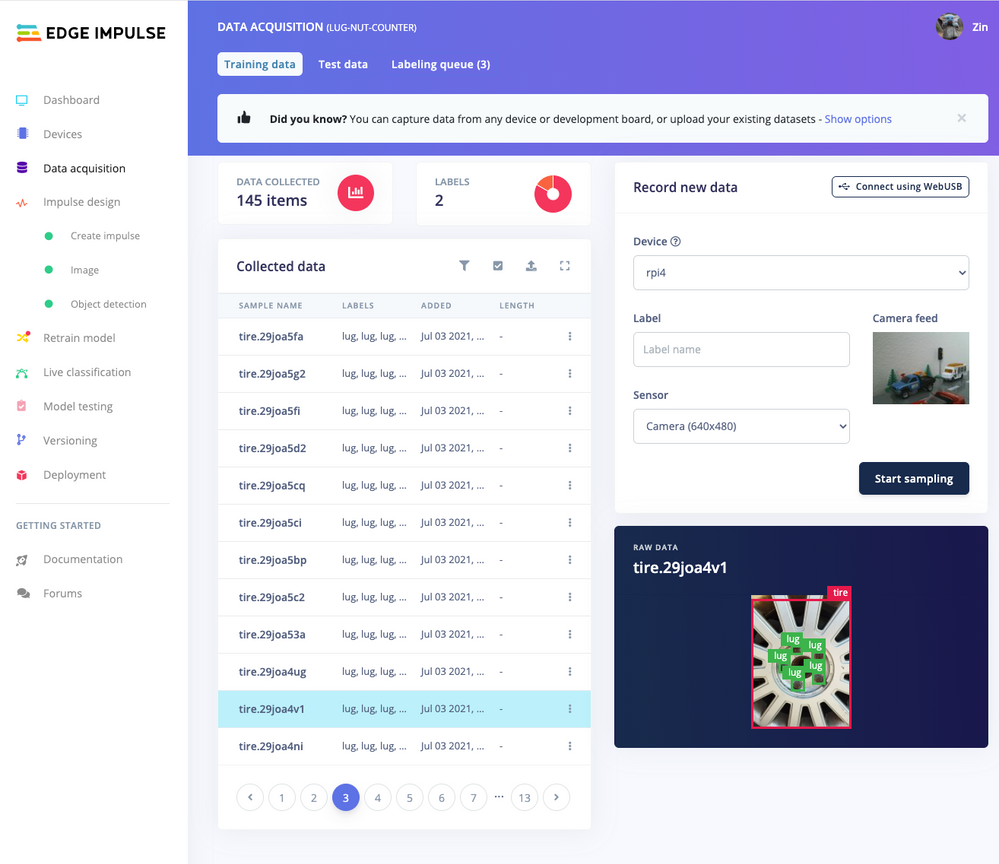

Training accurate, production-ready machine learning models requires plenty of varied data, which typically means a lot of images. For this proof-of-concept, we captured around 145 images of a wheel that had lug nuts on it. The Edge Impulse Linux daemon allows you to directly connect the Raspberry Pi 4 to Edge Impulse and take snapshots using the USB webcam.

Using the Labeling queue in the Data Acquisition page, we then drew bounding boxes around each lug nut and each wheel in every image. To add some test data, we went back to the main Dashboard page and clicked the ‘Rebalance dataset’ button, which moves 20% of the training data to the test data bin.
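To make the 80/20 rebalancing concrete, here is a minimal Python sketch of a random train/test split. It is not Edge Impulse's implementation, which is not described here; the function name, the fixed seed, and the sample file names are assumptions for illustration.

```python
import random


def rebalance(sample_ids, test_fraction=0.2, seed=42):
    """Shuffle the samples and move test_fraction of them to a test set.

    Returns a (train, test) pair of lists. A fixed seed keeps the split
    reproducible between runs.
    """
    ids = list(sample_ids)
    random.Random(seed).shuffle(ids)
    n_test = round(len(ids) * test_fraction)
    return ids[n_test:], ids[:n_test]


if __name__ == "__main__":
    # 145 hypothetical image names, matching the dataset size mentioned above.
    images = [f"wheel_{i:03d}.jpg" for i in range(145)]
    train, test = rebalance(images)
    print(len(train), len(test))
```

With 145 images, a 20% split leaves 116 images for training and 29 for testing.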

Impulse Design and Model Training

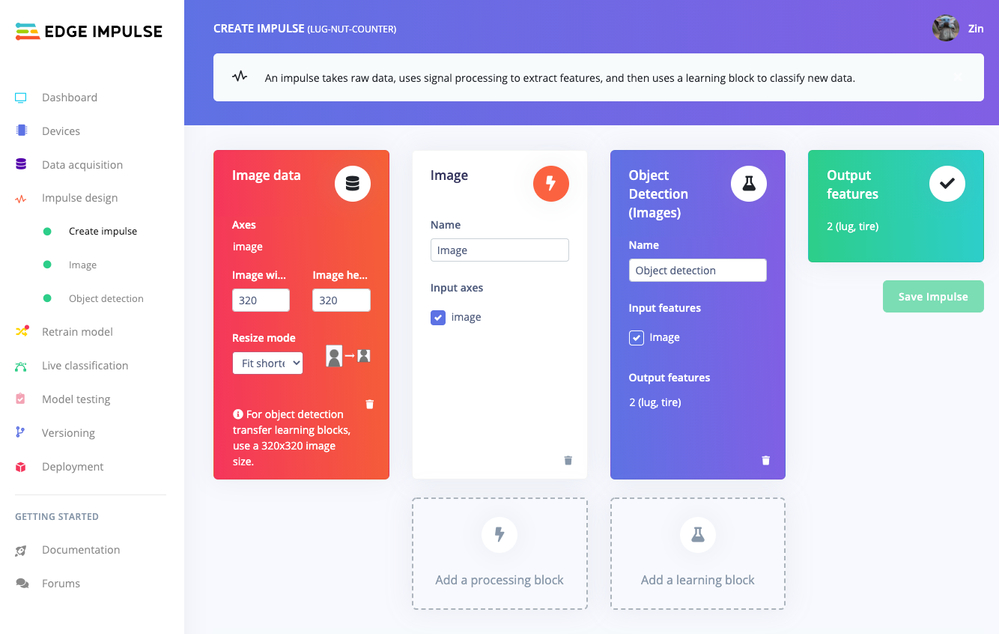

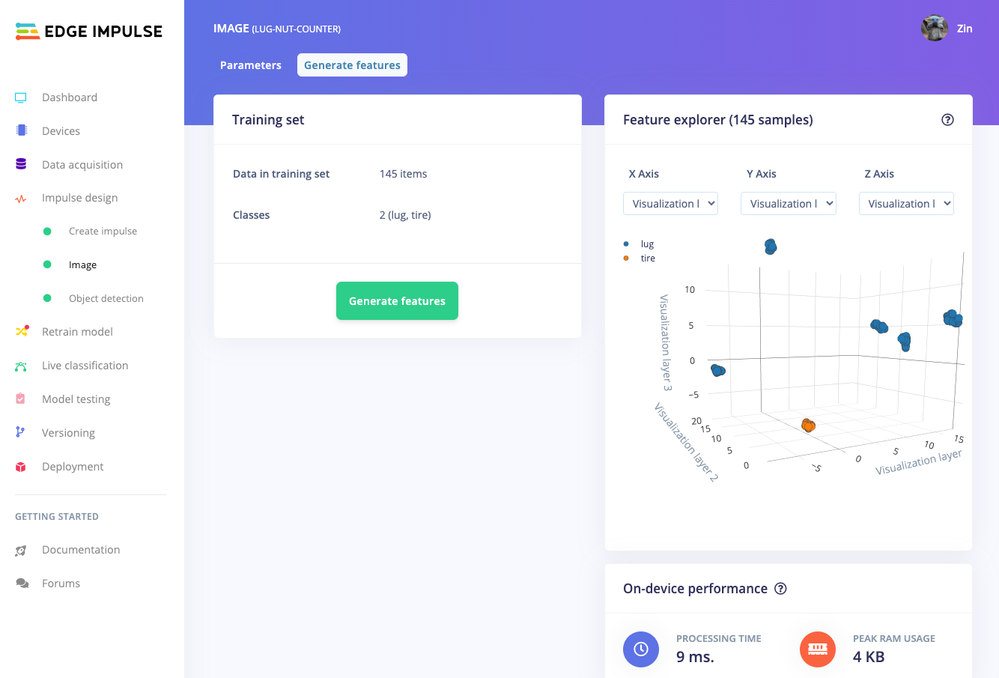

Now that we have plenty of training data, it’s time to design and build our model. The first block in the Impulse Design is an Image Data block, which scales each image to 320 by 320 pixels.
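One practical consequence of rescaling is that bounding boxes reported on the 320 by 320 input no longer line up with the original camera frame. The sketch below maps a box between two image sizes, assuming the image was simply stretched to fit (no cropping or padding). The resize mode actually used in this project is not stated, so treat that as an assumption. The function name and the example sizes are hypothetical.

```python
def scale_box(box, src_size, dst_size):
    """Map an (x, y, width, height) box from one image size to another.

    src_size and dst_size are (width, height) tuples. Assumes a plain
    stretch between the two sizes, with no cropping or letterboxing.
    """
    scale_x = dst_size[0] / src_size[0]
    scale_y = dst_size[1] / src_size[1]
    x, y, w, h = box
    return (x * scale_x, y * scale_y, w * scale_x, h * scale_y)


if __name__ == "__main__":
    # A box found on the 320x320 model input, mapped back to a 640x480 frame.
    print(scale_box((160, 80, 32, 32), (320, 320), (640, 480)))
```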

Next, image data is fed to the Image processing block that takes the raw RGB data and derives features from it.

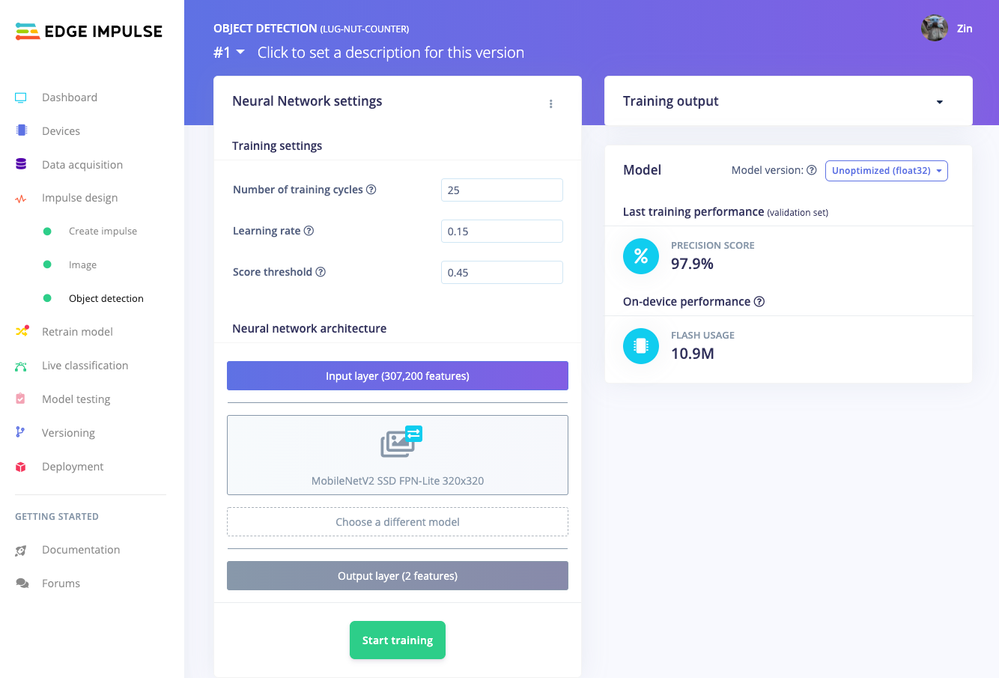

Finally, these features are used as inputs to the MobileNetV2 SSD FPN-Lite Transfer Learning Object Detection model that learns to recognize the lug nuts. The model is set to train for 25 cycles at a learning rate of 0.15, but these values can be adjusted to fine-tune for accuracy. As you can see from the screenshot below, the trained model reports a precision score of 97.9%.
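For intuition about what a precision figure means for object detection, the following Python sketch matches predicted boxes to labeled boxes by intersection over union (IoU) and reports the fraction of predictions that found a match. This is a simplified illustration, not the Studio's scoring method, which is not described here and may be computed differently. The 0.5 IoU threshold and the greedy matching are assumptions.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x, y, width, height)."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    inter_w = max(0, min(ax2, bx2) - max(a[0], b[0]))
    inter_h = max(0, min(ay2, by2) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union else 0.0


def precision(predicted, ground_truth, iou_threshold=0.5):
    """Fraction of predicted boxes that match a distinct ground-truth box."""
    unmatched = list(ground_truth)
    true_positives = 0
    for pred in predicted:
        # Greedy matching: take the first unmatched label that overlaps enough.
        match = next(
            (gt for gt in unmatched if iou(pred, gt) >= iou_threshold), None
        )
        if match is not None:
            unmatched.remove(match)
            true_positives += 1
    return true_positives / len(predicted) if predicted else 0.0


if __name__ == "__main__":
    labeled = [(1, 1, 10, 10)]
    detected = [(0, 0, 10, 10), (100, 100, 10, 10)]
    print(precision(detected, labeled))
```

In the usage example, one of the two detections overlaps a labeled lug nut and the other does not, so half of the predictions count as correct.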

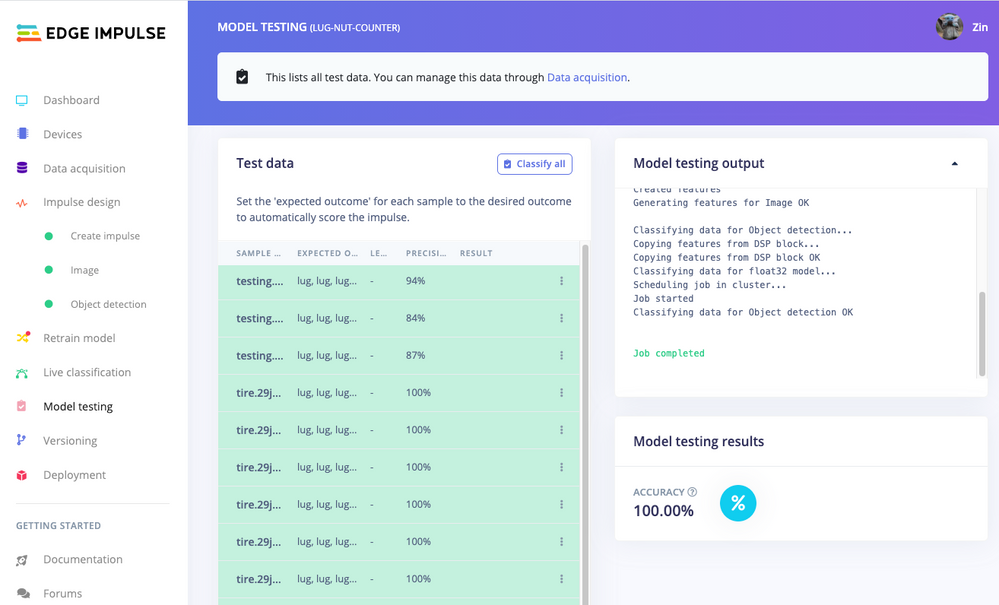

Model Testing

As you’ll recall, in an earlier step we rebalanced the dataset to set aside 20% of the images we collected for gauging how our trained model could perform in the real world. We use the model testing page to run a batch classification on these images and see how we can expect our model to perform. The ‘Live Classification’ tab also allows you to acquire new data directly from the Raspberry Pi 4 and see how the model measures up against that immediate image sample.

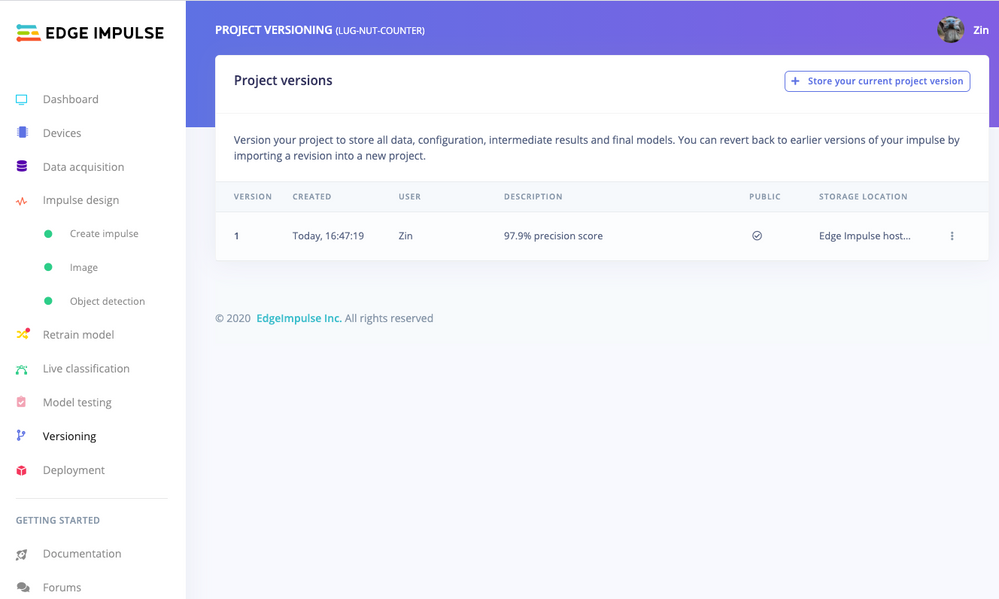

Versioning

An MLOps platform would not be complete without a way to archive your work as you iterate on your project. The ‘Versioning’ tab allows you to save your entire project, including the entire dataset, so you can always go back to a “known good version” as you experiment with different neural network parameters and project configurations. It’s also a great way to share your efforts: you can designate any version as ‘public’, and other Edge Impulse users can then clone your entire project and use it as a springboard to add their own enhancements.

Deploying Models

In order to verify that the model works correctly in the real world, we’ll need to deploy it to the Raspberry Pi 4. This is a simple task thanks to the Edge Impulse CLI, as all we have to do is run:

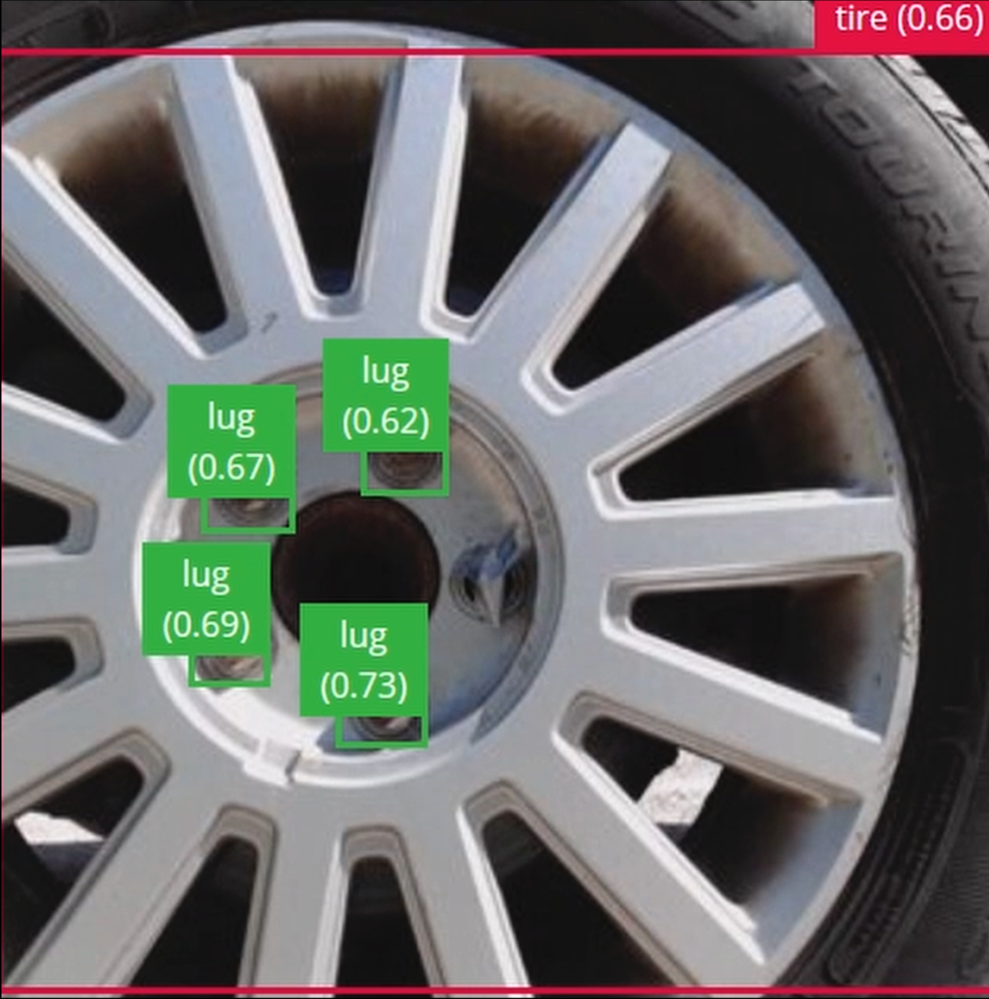

edge-impulse-linux-runner which downloads the model and creates a local webserver. From here, we can open a browser tab and visit the address listed after we run the command to see a live camera feed and any objects that are currently detected. Here’s a sample of what the user will see in their browser tab: