It has often been said that we all carry supercomputers around with us in our pockets. While this may seem like hyperbole at first blush, it is a true statement about the exponential increase in computing power that has been achieved in the past few decades. It is undeniable that the smartphones we carry around with us, and often take for granted, have far more computational power available to them than the room-sized supercomputers of yesteryear.

Looking back on the progress we have made in advancing the state of the art, we may feel like patting ourselves on the back for a job well done. And there is nothing wrong with that, but oftentimes it is far more interesting to look forward than backward. But to get the best glimpse of the future, I think we should peer ahead with a knowledge of what came before. Doing so will color our view of what is to come with the paintbrush of rapid technological progress.

The overall trend in computing is that tasks that were once only possible on supercomputers become possible on personal computers in time, and for a fraction of the previous high cost. Today we certainly have many applications that require massive compute resources. Generative AI, which has come into the spotlight in a big way recently, fits into this mold for many use cases of the technology. Consider the popular large language models with billions or trillions of parameters that cost millions of dollars in compute time to train and run inferences, or the text-to-image models that turn a sentence into an often jaw-dropping photorealistic image.

If you have been paying attention, then you will realize that these presently out-of-reach algorithms will one day run on the supercomputers in our pockets, as hard as it may be to imagine today. So then, as it stands now, exactly how far away from this future are we? There is much work yet to be done. That cannot be denied. Yet we may be closer than many people realize.

Recently, an engineer by the name of Vita Plantamura created a tool called OnnxStream that allows the cutting-edge Stable Diffusion deep learning text-to-image model to run on the $15 Raspberry Pi Zero 2 W single-board computer. That was quite an incredible feat, but given the diminutive specs of the platform (1 GHz Arm Cortex-A53 processor and 512 MB of RAM), it is not very practical for real-world use. Image generation takes about an hour and a half on this Raspberry Pi model.

That led me to wonder — by loosening up the budget just a bit, maybe up to $100, how close are we to running a powerful text-to-image deep learning algorithm on an edge computing platform? To answer that question, I decided to leverage OnnxStream to run Stable Diffusion on the OKdo ROCK 5 Model A 4 GB single-board computer. It just fits in the budget, and the octa-core processor with a quad Arm Cortex-A76 running at 2.2 - 2.4 GHz and a quad Arm Cortex-A55 running at 1.8 GHz should really give it a leg up on the Raspberry Pi Zero 2 W.

But the proof is in the pudding, so I had to run the experiment for myself to find out for sure. Playing along at home is strongly encouraged, so feel free to grab your own OKdo ROCK 5 and walk through the following instructions to replicate my work. Or if you just cannot wait to see how it all turned out, skip down 595 words (not that anyone is counting) to see the results.

Set up the ROCK 5 Model A

Download the Debian Bullseye for ROCK 5A disk image from the OKdo Software & Downloads Hub to your computer (Ubuntu Linux assumed in the following steps).

Decompress the disk image:

xz -d -v rock-5a_debian_bullseye_kde_b16.img.xzWrite the disk image to an SD card:

sudo dd bs=4096 if=rock-5a_debian_bullseye_kde_b16.img of=/dev/sdXXXNote that you will need to replace “/dev/sdXXX” with the actual device name assigned to the SD card (e.g. /dev/sdb). You can find this by running “df -h” before and after plugging the SD card into your computer to see what drive letter is added.

Alternatively, you can use a tool like balenaEtcher to write the image to the SD card.

Eject the SD card from your computer, and insert it into the ROCK 5A board. The gold pads on the SD card must be oriented such that they are facing the PCB.

Attach a Cat5 Ethernet cable and a USB-C power adapter with a fixed voltage in the 5.2 V to 20 V range, that is able to produce at least 24 W.

After a minute or so, check your router to determine the IP address that was assigned to the ROCK 5A, then ssh into it from your computer: ssh rock@<ROCK-IP-ADDRESS>

The initial password is “rock." For security purposes, the first thing you should do is change that password with the “passwd” command.

Update the system by issuing the commands:

sudo apt update

sudo apt upgrade Install OnnxStream and generate an Image

Still ssh’d into the ROCK 5A, in the “/home/rock” directory, create a new directory to store the application:

mkdir onnxstream

cd onnxstreamNow you will need to download and compile XNNPACK, which optimizes neural network inference on Arm platforms:

sudo apt install cmake

sudo apt install build-essential

git clone https://github.com/google/XNNPACK.git

cd XNNPACK

git rev-list -n 1 --before="2023-06-27 00:00" master

git checkout <COMMIT_ID_FROM_THE_PREVIOUS_COMMAND>

mkdir build

cd build

cmake -DXNNPACK_BUILD_TESTS=OFF -DXNNPACK_BUILD_BENCHMARKS=OFF ..

cmake --build . --config ReleaseAt this point, Stable Diffusion, with the OnnxStream enhancement, can be downloaded and compiled:

cd /home/rock/onnxstream

git clone https://github.com/vitoplantamura/OnnxStream.git

cd OnnxStream

cd src

mkdir build

cd build

cmake -DMAX_SPEED=ON -DXNNPACK_DIR=/home/rock/onnxstream/XNNPACK ..

cmake --build . --config ReleaseThe final step is downloading model weights for Stable Diffusion:

cd /home/rock/onnxstream

wget https://github.com/vitoplantamura/OnnxStream/releases/download/v0.1/StableDiffusion-OnnxStream-Windows-x64-with-weights.rar

sudo apt install unrar

unrar x StableDiffusion-OnnxStream-Windows-x64-with-weights.rarNow you are ready to use Stable Diffusion! In the future, you can follow these remaining steps each time you want to generate a new image:

cd /home/rock/onnxstream/OnnxStream/src/build

./sd --models-path /home/rock/onnxstream/SD --prompt "An astronaut riding a horse on Mars"After the job is done, your image will be saved in the same folder with the name “result.png” by default. If you would like to further tweak the process, the application has the following available parameters:

--models-path: Sets the folder containing the Stable Diffusion models.

--ops-printf: During inference, writes the current operation to stdout.

--output: Sets the output PNG file.

--decode-latents: Skips the diffusion, and decodes the specified latents file.

--prompt: Sets the positive prompt.

--neg-prompt: Sets the negative prompt.

--steps: Sets the number of diffusion steps.

--save-latents: After the diffusion, saves the latents in the specified file.

--decoder-calibrate: Calibrates the quantized version of the VAE decoder.

--decoder-fp16: During inference, uses the FP16 version of the VAE decoder.

--rpi: Configures the models to run on a Raspberry Pi Zero 2.

The results

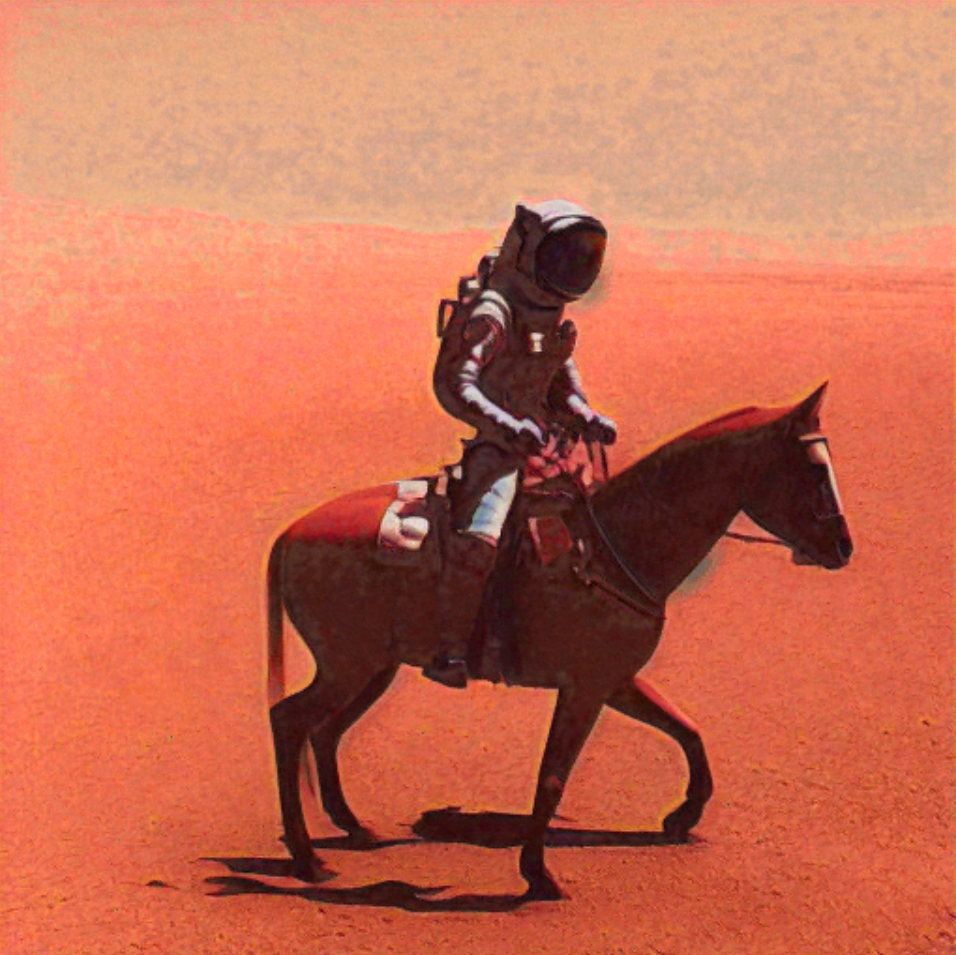

Running a full Debian Linux distribution made installation of the software on the ROCK 5 really simple. Once it was up and running, I tried a few prompts to see what kind of results I would get. Here is “a photo of an astronaut riding a horse on Mars”:

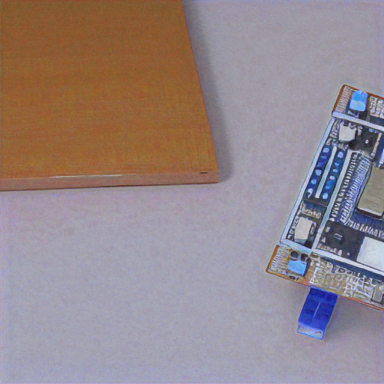

Not bad at all. And this one, which was maybe a bit of a stretch, is “single-board computer running a machine learning algorithm to control a robot:"

Clocking in at about four to six minutes, the ROCK 5 slashed the processing time of the job tremendously as compared with the Raspberry Pi Zero 2 W. It might not be quite as fast as the few seconds that one might wait when using an online, cloud-based tool, but for most purposes, it is quite acceptable.

It should also be noted that the ROCK 5’s resources were not fully utilized in this test. For example, the OnnxStream software keeps RAM usage to about 300 MB to be compatible with the Pi Zero 2 W, but the ROCK 5 has 4 GB available. Utilizing more of this memory could dramatically speed up processing times. Moreover, if I was to bump up the budget just a bit, the ROCK 5 has an eMMC module connector and an M.2 E Key connector that can be used for storage solutions that are much faster than an SD card. That could also prove to boost the speed of the algorithm somewhat.

It seems that we are not all that far from having a wide range of practical applications that run deep learning algorithms on the edge. That means we will soon be able to run generative large language models and image generation models on inexpensive, low power hardware that will eliminate the need to send sensitive data to cloud computing environments over the public Internet. It will also enable researchers, hobbyists, and small businesses to experiment with these technologies to bring a new generation of AI applications to the world. As computing technology continues its march forward, we can expect the benefits to reach far and wide, enabling use cases that today still seem fantastical.

You may have been wondering what the story is behind the image at the top of this article. It was generated by Stable Diffusion on the ROCK 5, with the prompt “lead image for the world’s best blog post." Hey, I can dream, can’t I? And apparently, Stable Diffusion can as well.