Of all the causes of automobile accidents, blind spot crashes stand out as having significant potential to be remedied through technology. The need for a solution is clear: over 840,000 blind spot-related accidents, which lead to 300 deaths, occur each year in the United States alone. While the size of a blind spot varies with the type of vehicle, all vehicles have them. These areas to the sides of a car, which cannot be seen in the rearview or side mirrors, pose a risk not only to the driver, but also to nearby motorists and pedestrians.

Blind spot detection technologies already exist, but they tend to be limited to more expensive makes and models, which leaves many drivers without this important safeguard. Engineers Ed Oliver, Victor Altamirano, and Alejandro Sanchez saw an opportunity to develop a low-cost device that can be added to any vehicle, providing an extra set of “eyes” that continually monitors the blind spots. To make this idea a reality, they turned to Edge Impulse Studio to design a machine learning image classification pipeline capable of recognizing cars, motorcycles, and pedestrians.

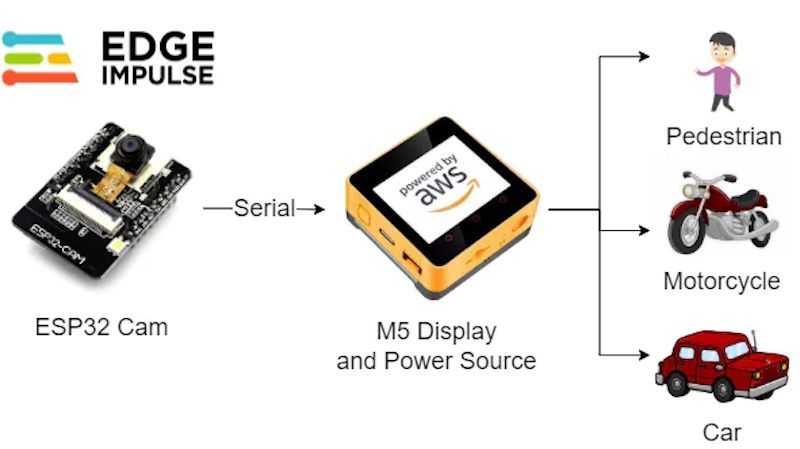

The device is built around the low-power, low-cost ESP32-CAM, which combines the very capable ESP32 microcontroller with a tiny image sensor. For convenience, an M5Stack display was also included to show feedback about objects detected in the blind spot; the M5Stack also supplies battery power to the ESP32-CAM development board while on the go. The ESP32-CAM was placed inside a custom 3D-printed case and mounted at an angle, facing out the driver’s side window, where it has a view of the blind spot. The M5Stack display was positioned on top of the dashboard, where the driver can safely glance at the screen and be alerted to any potential dangers.
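
The article does not describe how the classifier's output is turned into a warning on the M5Stack. One plausible approach, sketched here purely as a hypothetical in Python for readability (the real device would do this inside its Arduino sketch), is to raise an alert only when one of the target classes scores above a confidence threshold for several consecutive frames, so that a single noisy frame does not make the display flicker. The `BlindSpotAlert` class, the label strings, the 0.6 threshold, and the three-frame window are all assumptions for illustration, not details of the team's firmware.

```python
from __future__ import annotations

from collections import deque

# Hypothetical label strings for the three classes named in the article.
TARGET_LABELS = ("car", "motorcycle", "pedestrian")


class BlindSpotAlert:
    """Debounced alert: fires only after `frames` consecutive confident detections."""

    def __init__(self, threshold: float = 0.6, frames: int = 3) -> None:
        self.threshold = threshold  # assumed minimum class score to count as a detection
        self.history: deque = deque(maxlen=frames)  # sliding window of recent frames

    def update(self, scores: dict[str, float]) -> str | None:
        # Pick the highest-scoring target class in this frame (missing labels score 0).
        label = max(TARGET_LABELS, key=lambda name: scores.get(name, 0.0))
        confident = scores.get(label, 0.0) >= self.threshold
        # Record the label if confident, otherwise None, dropping the oldest frame.
        self.history.append(label if confident else None)
        # Alert only when the window is full and every frame in it was a detection.
        if len(self.history) == self.history.maxlen and all(self.history):
            return self.history[-1]
        return None
```

For example, feeding three consecutive frames scored `{"car": 0.9}`, `{"car": 0.8}`, and `{"car": 0.7}` returns `None`, `None`, and then `"car"`; a following low-confidence frame such as `{"car": 0.2}` clears the alert again. The window trades a little latency (a few frames) for fewer false alarms, which seems a reasonable default for a driver-facing display.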

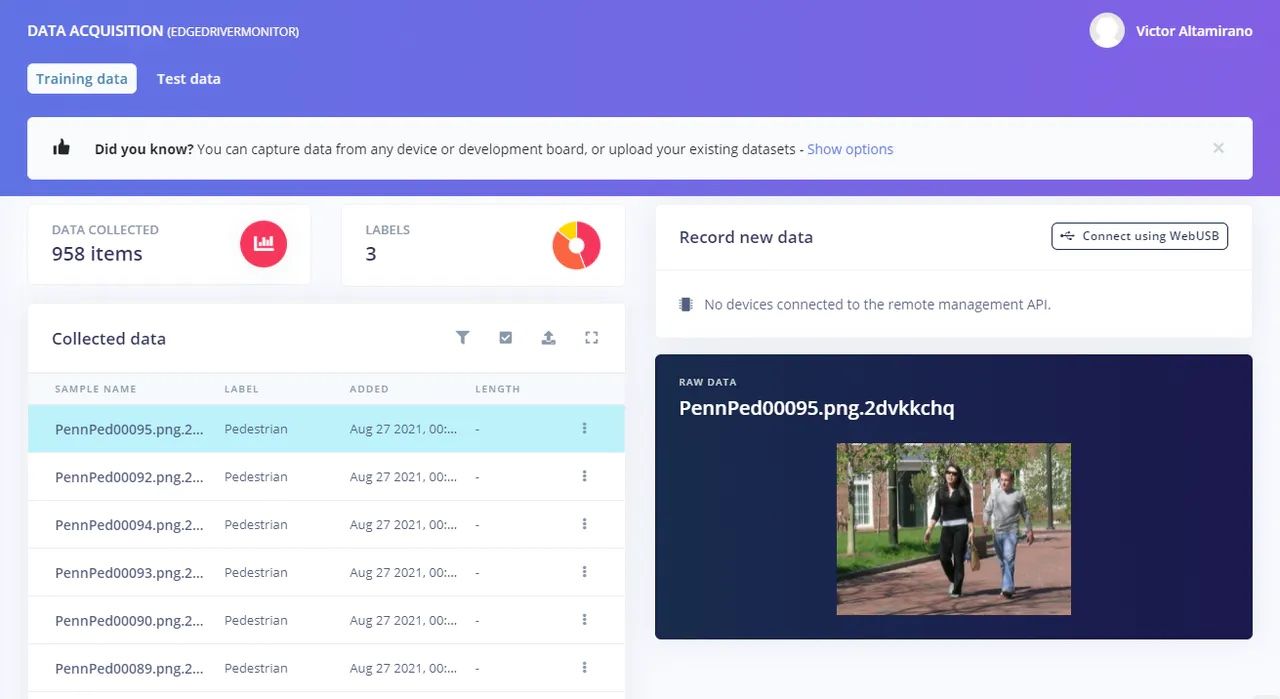

The team began by creating a dataset of 1,224 images of cars, motorcycles, and pedestrians to train their classifier. These images were uploaded to Edge Impulse, where the data analysis pipeline was developed. Preprocessing steps resize the images, reducing the computational complexity of the problem, and transform them into a data format the classifier can process. A pretrained MobileNetV2 neural network, with fifty thousand parameters, was selected to do the classification and then retrained, via transfer learning, to recognize the object classes in the uploaded training dataset.
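
The preprocessing step can be illustrated with a minimal sketch, assuming a 96x96 RGB input (a common size for MobileNetV2 transfer learning on microcontrollers; the article does not state the resolution), a simple nearest-neighbour "squash" resize, and pixel values scaled from 0..255 to 0..1. Edge Impulse's own image block offers its own resize modes and options, so `resize_nearest` and `to_features` below are hypothetical helpers that show the idea, not the platform's implementation.

```python
from __future__ import annotations


def resize_nearest(image: list[list[tuple[int, int, int]]],
                   out_w: int, out_h: int) -> list[list[tuple[int, int, int]]]:
    """Nearest-neighbour resize of an RGB image stored as rows of (r, g, b) tuples."""
    in_h, in_w = len(image), len(image[0])
    # Map each output pixel back to the source pixel it falls on.
    return [
        [image[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def to_features(image: list[list[tuple[int, int, int]]],
                out_w: int = 96, out_h: int = 96) -> list[float]:
    """Resize, then flatten row by row into floats scaled from 0..255 to 0..1."""
    small = resize_nearest(image, out_w, out_h)
    return [channel / 255.0 for row in small for pixel in row for channel in pixel]
```

Shrinking each frame to a fixed, small size is what keeps the classifier's input, and therefore its compute cost, bounded: a 96x96 RGB frame becomes 96 × 96 × 3 = 27,648 values regardless of the camera's native resolution.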

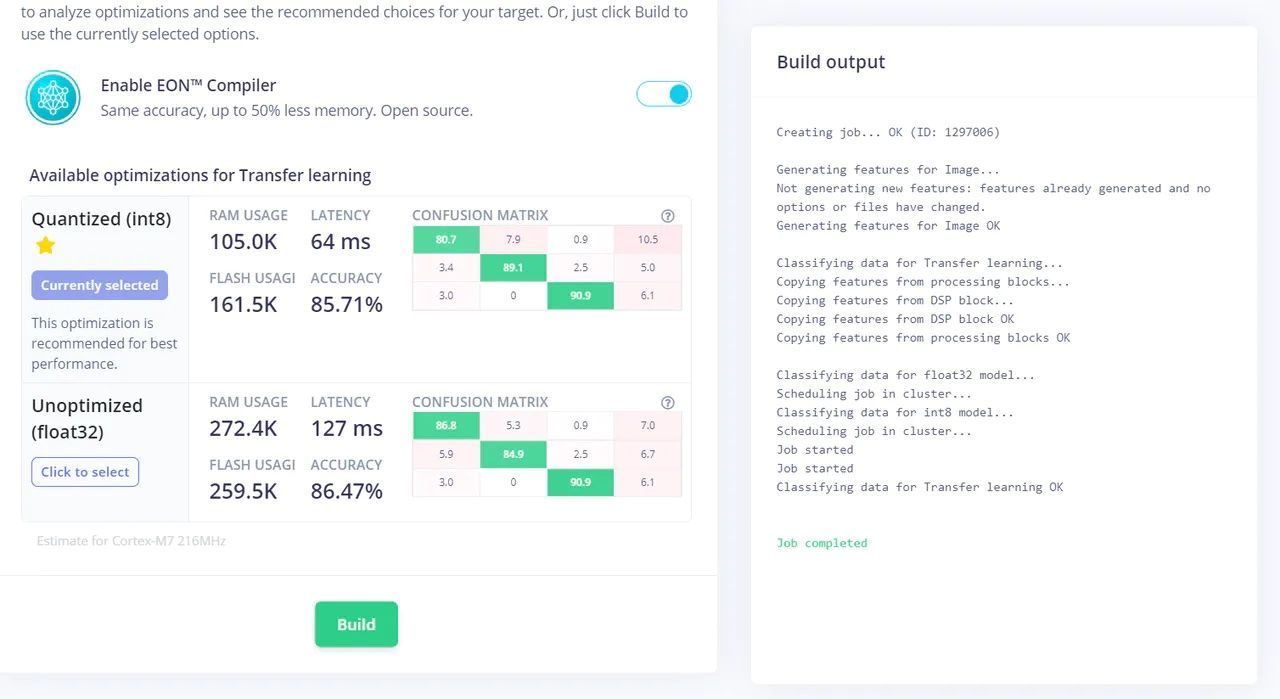

After training was completed, an average classification accuracy of 86.47% was observed. With this strong result in hand, it was time to deploy the model directly to the ESP32-CAM so that inferences could run locally, without the need for Internet connectivity. This was accomplished by exporting the pipeline from Edge Impulse as an Arduino library that could easily be integrated into an existing Arduino sketch. The EON Compiler was enabled, and the model was quantized to give the best possible performance on the resource-constrained ESP32 microcontroller.
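
The article does not say which quantization scheme was used. Full-integer int8 affine quantization, as used by TensorFlow Lite, is a common choice for microcontroller deployment and is assumed in the sketch below: each real value `x` is stored as an 8-bit integer `q = round(x / scale) + zero_point`, and recovered approximately as `scale * (q - zero_point)`. The helpers `quant_params`, `quantize`, and `dequantize` are hypothetical names illustrating the arithmetic, not code from Edge Impulse or the EON Compiler.

```python
from __future__ import annotations


def quant_params(x_min: float, x_max: float) -> tuple[float, int]:
    """Per-tensor asymmetric int8 parameters covering the range [x_min, x_max]."""
    # Extend the range to include 0.0 so that zero is exactly representable.
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)
    if x_max == x_min:
        return 1.0, 0  # degenerate range: only 0.0 occurs, any scale works
    scale = (x_max - x_min) / 255.0  # 256 int8 levels span 255 steps
    zero_point = round(-128 - x_min / scale)  # integer that represents 0.0
    return scale, zero_point


def quantize(x: float, scale: float, zero_point: int) -> int:
    """Map a real value to int8, clamping anything outside the calibrated range."""
    q = round(x / scale) + zero_point
    return max(-128, min(127, q))


def dequantize(q: int, scale: float, zero_point: int) -> float:
    """Approximate inverse of quantize()."""
    return scale * (q - zero_point)
```

For values in the calibrated range, the round-trip error is at most half a quantization step (`scale / 2`). In exchange, weights and activations take a quarter of the memory of 32-bit floats and can be processed with integer arithmetic, which is why quantization helps on a resource-constrained chip like the ESP32.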

The final device can be fitted into any vehicle, where it alerts the driver to relevant obstructions in the blind spot. The creators of the project credit Edge Impulse with making it simple to bring their idea to fruition on a very short timeline. Their seamless experience has led them to envision a future in which countless machine learning applications are brought to life through this development platform.
