When most people think about the risks in their lives, they tend to focus on the possibility of big, dramatic events like a plane crash or shark attack. But in reality, the risk of such an event is vanishingly small when compared with everyday, mundane activities like driving. In fact, the leading cause of death for persons under the age of 54 in the United States is road crashes, according to ASIRT. Further, 4.4 million people are injured seriously enough to require medical attention each year.

Hardware hacker and machine learning enthusiast Manivannan S. recognized that part of this problem stems from the fact that cars have blind spots. The issue is especially pronounced when backing up, which can lead to inadvertent collisions with pedestrians. Manivannan tackled the problem by designing a system that alerts a driver when a person is present in one of the blind spots behind a car. Right about now you may be thinking that this sounds great, but that the LIDAR and other equipment it would need make it too expensive to be widely practical. As it turns out, through the clever use of widely available hardware and the simple interface of Edge Impulse, Manivannan’s system is both inexpensive and easy to replicate.

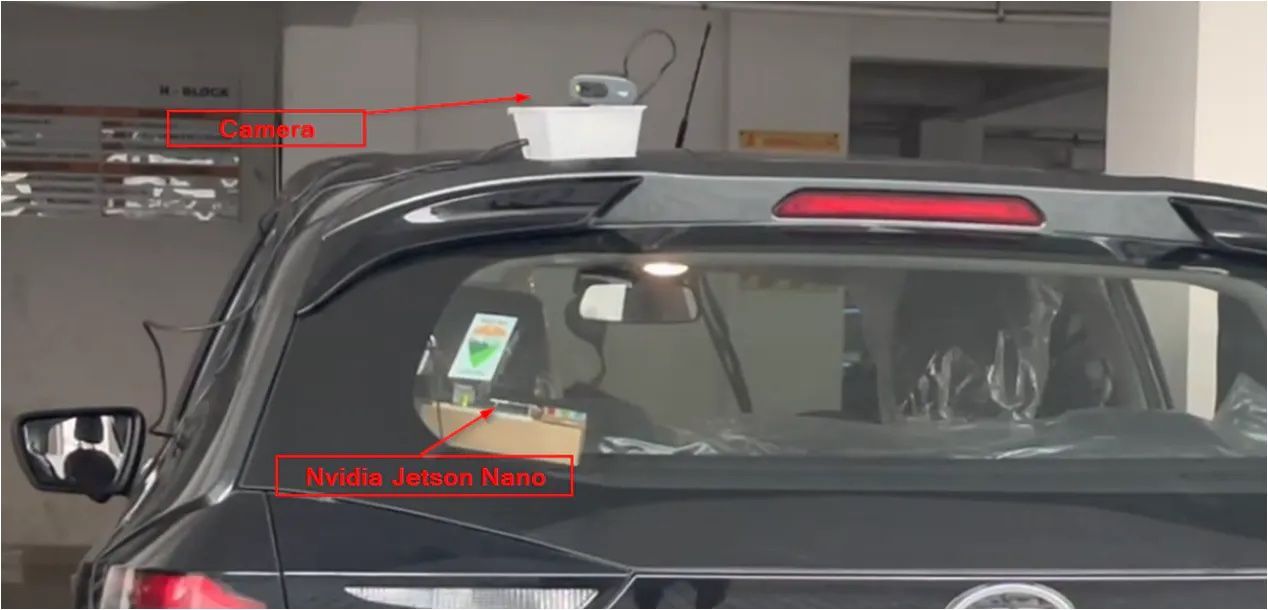

The device consists of a common webcam mounted on the roof of a car, facing backwards. A servo, driven by an Arduino Nano, continually rotates the camera, pausing just long enough to capture an image of each blind spot. The real fun comes in with the $59 NVIDIA Jetson Nano 2GB single-board computer, which processes the images captured by the webcam to detect pedestrians. When the device catches a pedestrian in a blind spot, a buzzer sounds an alarm to alert the driver. The prototype is assembled from plastic containers and cardboard boxes, but it takes little imagination to see how it could be turned into a polished product with a Jetson Nano module and a custom carrier board to handle the additional functions required.
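To make the sweep-pause-capture-detect cycle concrete, here is a minimal Python sketch of one pass over the blind spots. It is not Manivannan's code: the function name, the `(label, confidence)` detection format, the `"pedestrian"` label, the servo angles, and the timing and threshold defaults are all assumptions made for illustration. The hardware is hidden behind callables you would supply yourself, since this article does not describe how the Jetson, Arduino, and buzzer talk to each other.

```python
import time
from typing import Callable, Iterable, List, Tuple

# One detection as (label, confidence). This shape is an assumption for the sketch.
Detection = Tuple[str, float]


def scan_blind_spots(
    angles: Iterable[int],
    move_servo: Callable[[int], None],
    capture_frame: Callable[[], object],
    detect: Callable[[object], List[Detection]],
    sound_buzzer: Callable[[], None],
    settle_seconds: float = 0.5,   # hypothetical pause length
    min_confidence: float = 0.5,   # hypothetical alert threshold
) -> List[int]:
    """Sweep the camera once and return the angles where a pedestrian was seen."""
    alerted = []
    for angle in angles:
        move_servo(angle)           # point the camera at this blind spot
        time.sleep(settle_seconds)  # pause so the frame is captured while still
        frame = capture_frame()
        detections = detect(frame)
        # Alert only on sufficiently confident pedestrian detections.
        if any(label == "pedestrian" and confidence >= min_confidence
               for label, confidence in detections):
            sound_buzzer()
            alerted.append(angle)
    return alerted
```

Passing the hardware in as functions keeps the loop testable on a laptop: swap in stubs for the servo, camera, model, and buzzer, then replace them with real drivers on the device.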

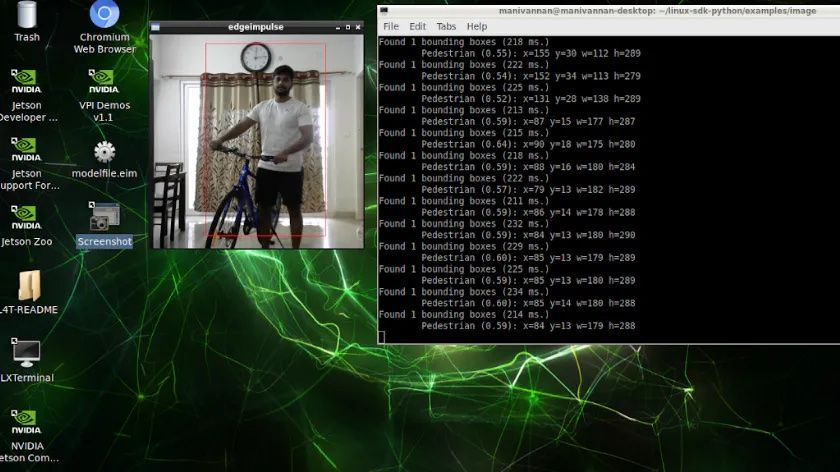

Manivannan found an existing dataset of pedestrian images that he was able to use to build a machine learning object detection model with Edge Impulse. He uploaded the dataset into a new project, then used the built-in tools to rapidly draw boxes around each pedestrian to apply the labels. After that, it was a matter of tweaking a few hyperparameters, then starting training with the click of a button. In a matter of minutes, a trained MobileNetV2 convolutional neural network was produced. With another button click, the accuracy of the model was checked against a test dataset.

The model trained on Edge Impulse was deployed to the Jetson Nano by running a few simple commands in the terminal. At this point, the model was ready to run locally on the Jetson and start alerting a driver to the presence of pedestrians in blind spots. As it currently stands, the model’s predictions are correct about 79% of the time, which is fairly impressive considering the very small dataset used during training: fewer than 150 pedestrian images. The accuracy would be expected to improve substantially with a larger set of training images.
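For a sense of what "correct about 79% of the time" means, one simple reading is the fraction of test images where the model's answer matched the label. The sketch below computes that fraction. The exact metric Edge Impulse reports for object detection is not spelled out in this article, so treat this definition as an assumption, and note that the five-image example is made up purely to show the arithmetic.

```python
from typing import Sequence


def accuracy(predictions: Sequence[bool], ground_truth: Sequence[bool]) -> float:
    """Fraction of test images where the prediction matches the label."""
    if len(predictions) != len(ground_truth):
        raise ValueError("predictions and ground_truth must be the same length")
    if not predictions:
        raise ValueError("need at least one test image")
    correct = sum(p == t for p, t in zip(predictions, ground_truth))
    return correct / len(predictions)


# Hypothetical five-image test set: True means "pedestrian present".
predicted = [True, False, True, True, False]
actual = [True, False, False, True, False]
print(accuracy(predicted, actual))  # 4 of 5 match -> 0.8
```

With a test set this small, a single image moves the score by 20 percentage points, which is one reason a larger dataset gives a more trustworthy number.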

Further details and code samples are available in Manivannan’s write-up. If you have a Jetson Nano and a few other common spare parts lying around, you could fire up Edge Impulse and replicate this project in an afternoon. And if you do not have a Jetson Nano, bonus points for porting the project to another platform supported by Edge Impulse!
