When you’re optimizing a neural network for on-device efficiency, how can you be sure that the changes you make will move your model in the right direction? And if you’re trying to select embedded hardware for your machine learning application, how do you know which devices are best suited to your workload? Making the right choices can be crucial for tinyML, where every byte and milliwatt counts.

Until recently, no standard benchmarks were available, so the only way to inform these decisions was to systematically evaluate every option available for your application. Given the diversity of available technologies, that can be a herculean feat.

Fortunately, with the release of MLPerf Tiny Inference by MLCommons, an open engineering consortium, the landscape has now changed. MLPerf Tiny Inference was designed from the ground up to help engineers assess the performance of neural networks running on extremely low-power embedded devices.

This release comes as embedded machine learning is booming in popularity, driven by compute resources capable of running machine learning algorithms that have grown smaller and less expensive. These tiny neural networks can process audio, video, and other sensor data on the device, providing rapid inference without the privacy implications of sending that data to the cloud for processing.

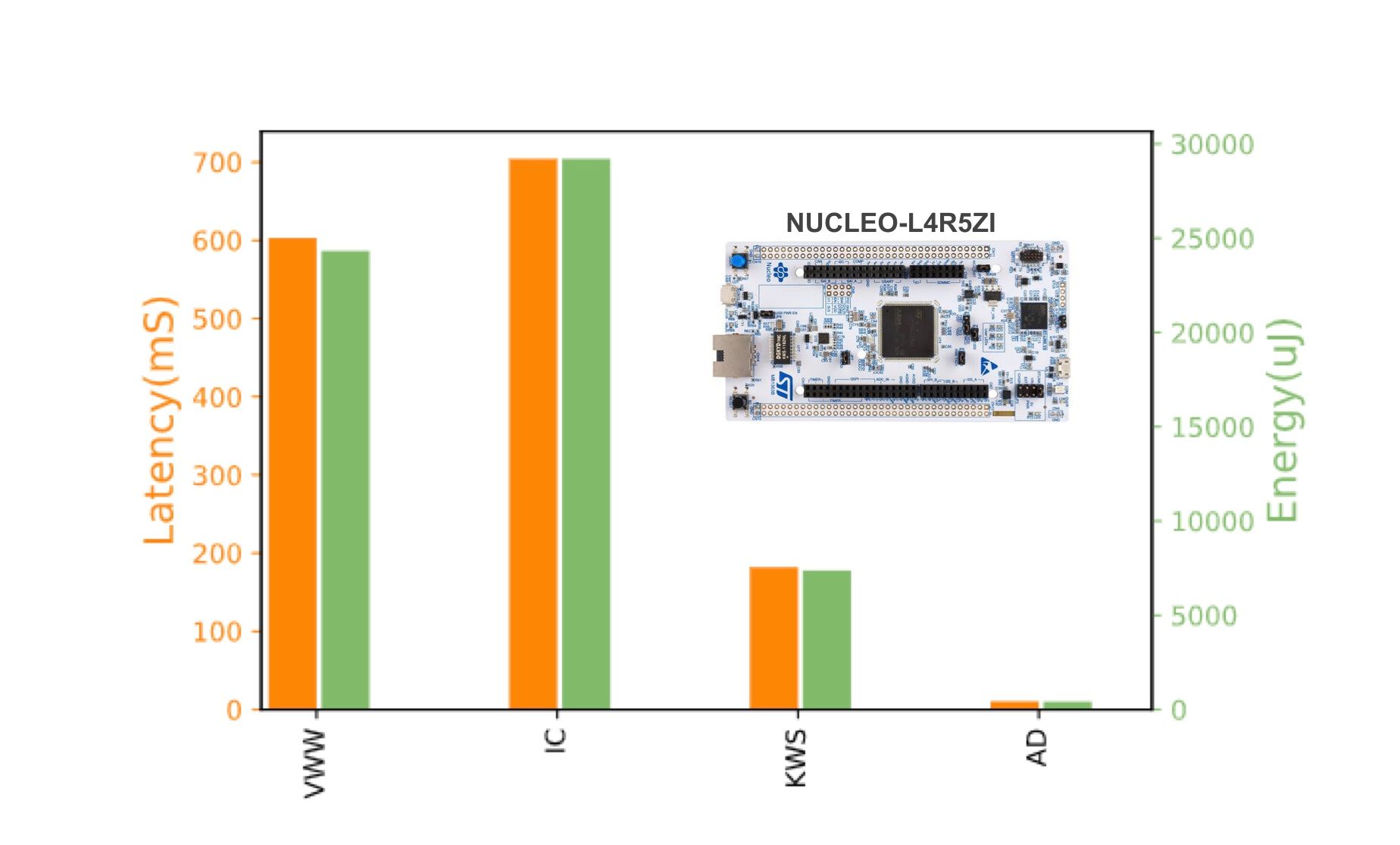

The MLPerf Tiny Inference benchmarking suite provides a common way to report and compare the performance of tinyML devices, systems, and software. In particular, the benchmark covers four tasks that are representative of embedded machine learning: keyword spotting, visual wake words, image classification, and anomaly detection.

To support developers and hardware manufacturers who wish to explore and submit MLPerf Tiny benchmarks, Edge Impulse has worked with the MLCommons team to produce a set of Edge Impulse reference projects that contain the datasets and models used in three of the scenarios:

- Keyword spotting

- Visual wake words

- Anomaly detection (using classification on a limited subset of the data, so any user can train the model on our free tier)

MLPerf Tiny Inference was developed in collaboration with over 50 partners in industry and academia, so it reflects the needs of the community at large. It looks to be an important addition to our ML toolboxes, one that will help us add intelligence to everyday items, from wearables to thermostats and cameras.

You can get involved with the MLPerf Tiny effort by joining the working group. To get started exploring the benchmarks, check out the Visual Wake Words project on Edge Impulse.