According to recent statistics, automobile accidents are on the rise in the United States. In 2021, there were over six million reported car crashes, resulting in over 40,000 fatalities and millions of injuries. These alarming numbers have sparked concern among safety advocates and government officials alike.

Drunk driving is a major contributor to car accidents. Despite numerous campaigns and stricter laws, alcohol-impaired driving remains a leading cause of crashes and fatalities. In 2021, nearly 30% of all traffic fatalities were the result of drunk driving. Speeding is also a major factor in car accidents. Driving above the posted speed limit or too fast for conditions can significantly increase the risk of a crash. In fact, speeding was a factor in over 25% of all traffic fatalities in 2021.

These risky behaviors become even more dangerous when the driver is not wearing a seat belt. When drivers skip this most basic of safety precautions, otherwise survivable crashes can quickly turn tragic. Taken together, alcohol-impaired driving, speeding, and failure to wear a seat belt are responsible for 45% of all fatal accidents, per the NHTSA.

One thing that all of these behaviors have in common is that they are completely avoidable. If there were a way to eliminate these risk factors, the roads would be much safer. Engineer Justin Lutz has recently built a prototype device that has the potential to prevent many avoidable fatal accidents. Using the Edge Impulse machine learning development platform and a Raspberry Pi 4 single-board computer, he showed how a fleet of service vehicles can be easily and inexpensively retrofitted to monitor risky driving behaviors.

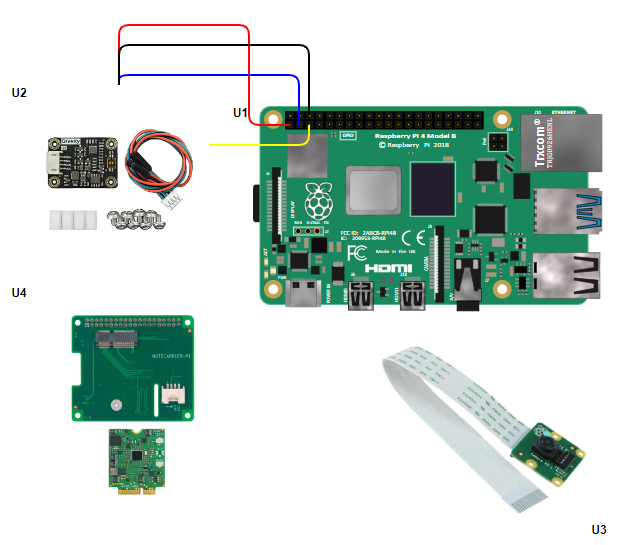

The Raspberry Pi was paired with a Raspberry Pi Camera Module to collect images of the driver, which a machine learning object detector analyzes to determine whether they are wearing a seat belt. A DFRobot MQ-3 gas sensor was included in the build to detect the presence of alcohol, whether it comes from the driver or an open container. A Seeed Studio Grove LIS3DHTR 3-axis accelerometer was added to detect the rapid acceleration and braking consistent with aggressive driving. The final piece of the hardware puzzle was a Blues Wireless Notecard, which can report both the speed at which the driver is traveling and their precise location.

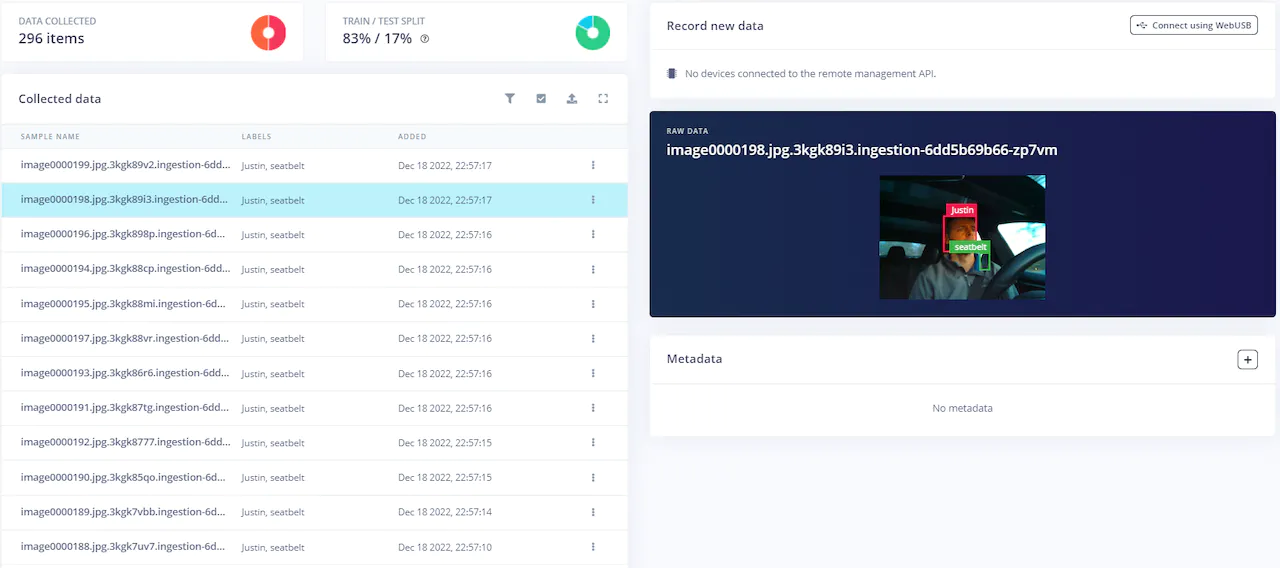

Before the seat belt detector could be built, Lutz first needed to collect a dataset of sample images of himself both wearing and not wearing a seat belt. He mounted the device on the dashboard of his car, captured the images, and uploaded them to Edge Impulse, where the AI-powered data acquisition tool assisted in drawing bounding boxes around both the driver and the seat belt. These labeled images, about 330 in total, would be used to train the object detection model.

Next, Lutz built an impulse to analyze the image data in Edge Impulse Studio. It consists of preprocessing steps that resize the image and extract the most important features in the data. These steps are crucial in running an object detection model on a resource-constrained edge platform like the Raspberry Pi. The features were then forwarded into a pretrained YOLOv5 model designed to detect both people and seat belts. By using a pretrained model as a starting point, the model can leverage knowledge it has already acquired from other datasets, which allows it to learn to recognize new objects more accurately, and with less training data.

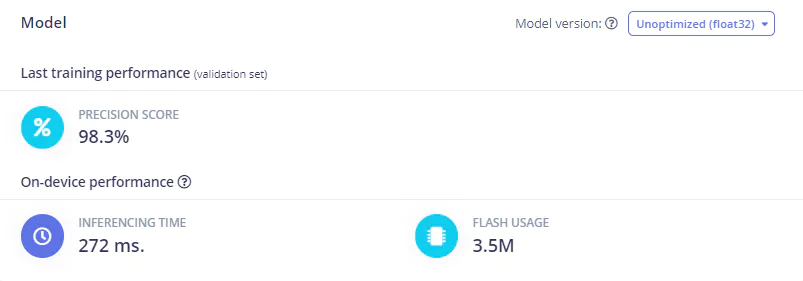

With the impulse design finished, Lutz started the training process with the click of a button. After a short time, results were presented to help assess how well the model was performing. The model achieved a precision score of better than 98% right off the bat. And since Edge Impulse creates machine learning models that are highly optimized for tiny platforms, the model size was a minuscule 3.5 megabytes, which is well within bounds for the relatively powerful Raspberry Pi. Furthermore, inference times were quite snappy, clocking in at about 270 milliseconds.

Everything was looking great, so Lutz was set to deploy the model to the physical hardware. Doing so removes all dependencies on the cloud, as well as the wireless connectivity requirement and privacy concerns that come along with using cloud computing resources. There are many deployment options available, but the Linux (ARMv7) option was chosen in this case. This created a binary containing the entire machine learning analysis pipeline, and also gave Lutz the flexibility to add his own code to process data from the other sensors.
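To make the step from raw detections to a seat belt decision concrete, here is a minimal sketch of how a list of bounding boxes might be interpreted. It assumes each detection is a dictionary with `label`, `value` (confidence), and box coordinates, which is modeled on the shape of object detection results on Linux targets. The label names `person` and `seatbelt`, the 0.5 confidence cutoff, and the `seatbelt_status` helper are all hypothetical and not taken from Lutz's project.

```python
from typing import Dict, List

# Hypothetical label names and confidence cutoff; the real project may differ.
PERSON_LABEL = "person"
SEATBELT_LABEL = "seatbelt"
MIN_CONFIDENCE = 0.5


def seatbelt_status(boxes: List[Dict], min_confidence: float = MIN_CONFIDENCE) -> str:
    """Summarize one frame of detections as 'no_driver', 'belted', or 'unbelted'."""
    # Keep only the labels whose confidence clears the cutoff.
    labels = {box["label"] for box in boxes if box["value"] >= min_confidence}
    if PERSON_LABEL not in labels:
        return "no_driver"
    return "belted" if SEATBELT_LABEL in labels else "unbelted"


# Example frame: the driver is detected confidently, the seat belt is not.
frame = [
    {"label": "person", "value": 0.93, "x": 40, "y": 20, "width": 180, "height": 200},
    {"label": "seatbelt", "value": 0.31, "x": 90, "y": 80, "width": 60, "height": 120},
]
print(seatbelt_status(frame))  # prints "unbelted"
```

In practice, a single frame is noisy, so it may make sense to require several consecutive "unbelted" frames before raising an event.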

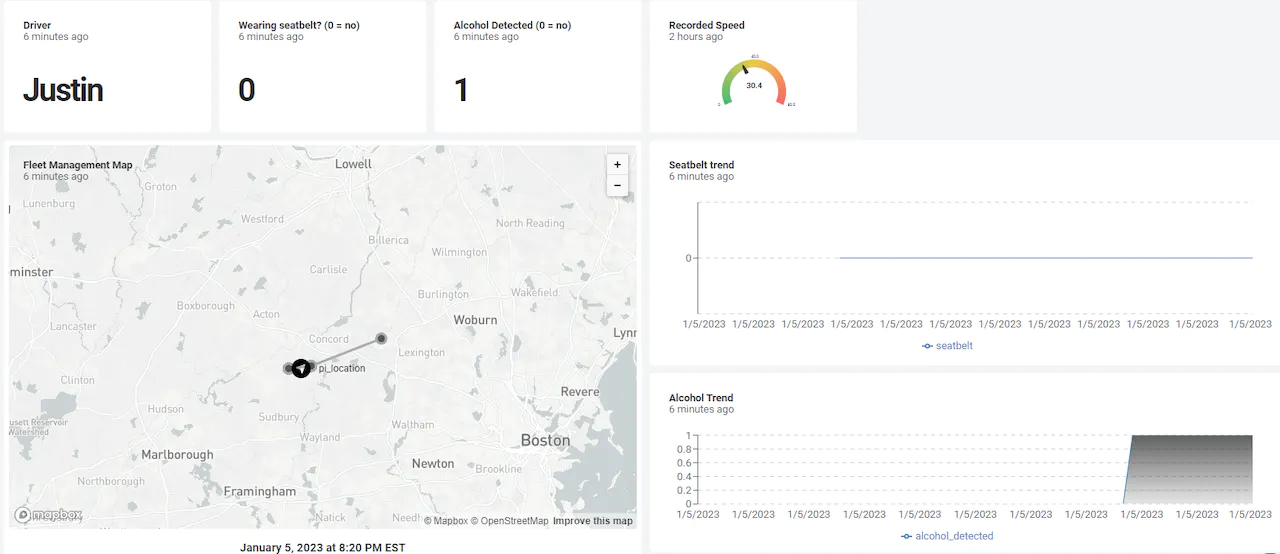

To put the finishing touches on the device, Lutz wrote a Python script to collect and interpret data from the DFRobot MQ-3 gas sensor and Blues Wireless Notecard to check for alcohol impairment and speeding. Accelerometer data was thresholded to reveal when excessively aggressive actions are taken behind the wheel. These data points, along with the model inference results that detect seat belt use, were sent wirelessly to Blues Wireless Notehub.io, where they can be monitored. This data also includes information about the driver’s location.
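The thresholding logic described above could look roughly like the sketch below. It is not Lutz's script: every threshold, the choice of the x-axis as the direction of travel, the `detect_events` and `build_note` helpers, and the `events.qo` file name are assumptions made for illustration. The request built at the end follows the general shape of a Notecard `note.add` JSON request, but it is only constructed here, not sent to any hardware.

```python
import json
from typing import Dict, List, Tuple

# All thresholds are illustrative assumptions, not values from the project.
ACCEL_LIMIT_G = 0.4      # acceleration or braking treated as aggressive
ALCOHOL_LIMIT = 400      # raw MQ-3 reading treated as "alcohol present"
SPEED_MARGIN_MPH = 5.0   # tolerance above the speed limit


def detect_events(accel_g: Tuple[float, float, float], alcohol_raw: int,
                  speed_mph: float, speed_limit_mph: float, belted: bool) -> List[str]:
    """Return the risky-driving events present in one set of sensor readings."""
    events = []
    # Assumes the accelerometer's x-axis points along the direction of travel.
    if abs(accel_g[0]) > ACCEL_LIMIT_G:
        events.append("aggressive_driving")
    if alcohol_raw > ALCOHOL_LIMIT:
        events.append("alcohol_detected")
    if speed_mph > speed_limit_mph + SPEED_MARGIN_MPH:
        events.append("speeding")
    if not belted:
        events.append("no_seatbelt")
    return events


def build_note(events: List[str], lat: float, lon: float) -> Dict:
    """Build a note.add request body; the file name and fields are hypothetical."""
    return {
        "req": "note.add",
        "file": "events.qo",
        "body": {"events": events, "lat": lat, "lon": lon},
        "sync": True,
    }


# Example readings: hard braking, alcohol present, 72 mph in a 65 zone, no belt.
events = detect_events((-0.6, 0.0, 1.0), 520, 72.0, 65.0, belted=False)
if events:
    print(json.dumps(build_note(events, 42.36, -71.06)))
```

Sending a note only when at least one event fires keeps cellular data usage low, though a fleet manager might also want periodic heartbeat notes to confirm the device is still running.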

Lutz even demonstrated how the raw data in Notehub.io could be used to drive a web dashboard using Datacake. This provides a nice, graphical, map-based overview of driver safety metrics that could be used by someone managing a fleet of service vehicles. Since Notehub.io has the ability to send text messages via Twilio, it would also be possible to send alerts immediately any time a dangerous behavior has been observed.

Testing showed that the system works quite well, but Lutz noted that the camera could at times be blinded by the sun, and that it would not work well at night. He believes that these shortcomings could be resolved by exploring alternative types of sensors. The project write-up is well worth the read, so be sure to take a look. You can also get a head start in building your own object-detection-based solutions by cloning his public Edge Impulse Studio project.
