In the early days of the COVID-19 pandemic, when many people were trying to avoid visiting public places as much as possible, there were lots of things that we missed out on. Visiting friends and family and eating out at restaurants were high on the list of most missed activities. The experience also revealed some things we spend a lot of time doing that, as it turns out, we would be quite happy to do without. One of those things is grocery shopping. Once pushing a cart through crowds to hunt for every item on a grocery list, and then standing in a long line to wait for a cashier, was replaced by store employees who load the groceries into your trunk or deliver them to your front door, we all knew that this was an upgrade we did not want to give up.

But sometimes, planning ahead and placing an online order is not the best option, and a trip to the store is needed. Even in these situations, it would be nice to hang on to some of the convenience and time savings that we have come to expect in recent years. Fortunately, with smart checkout devices like the one built and demonstrated by engineer Samuel Alexander, it looks like we will be able to do just that in the near future. Alexander’s proof-of-concept device shows just how simple and inexpensive it can be to implement an automated checkout system that uses computer vision and machine learning to instantly ring up all of the items placed on a checkout counter.

Alexander wanted to keep the system as simple and minimal as possible so that it could be a viable solution for retailers of any size. Towards this end, he chose to use Edge Impulse to develop an object detection pipeline. Using Edge Impulse’s FOMO™ algorithm, which runs 30 times faster than MobileNet-SSD and requires less than 200 KB of RAM, he was able to deploy the model on a Raspberry Pi 4 single-board computer, with no supporting cloud computing infrastructure needed. A common USB webcam was all it took to round out the hardware requirements for the system. For demonstration purposes, an LCD was also included to show the results produced by the object detection algorithm.

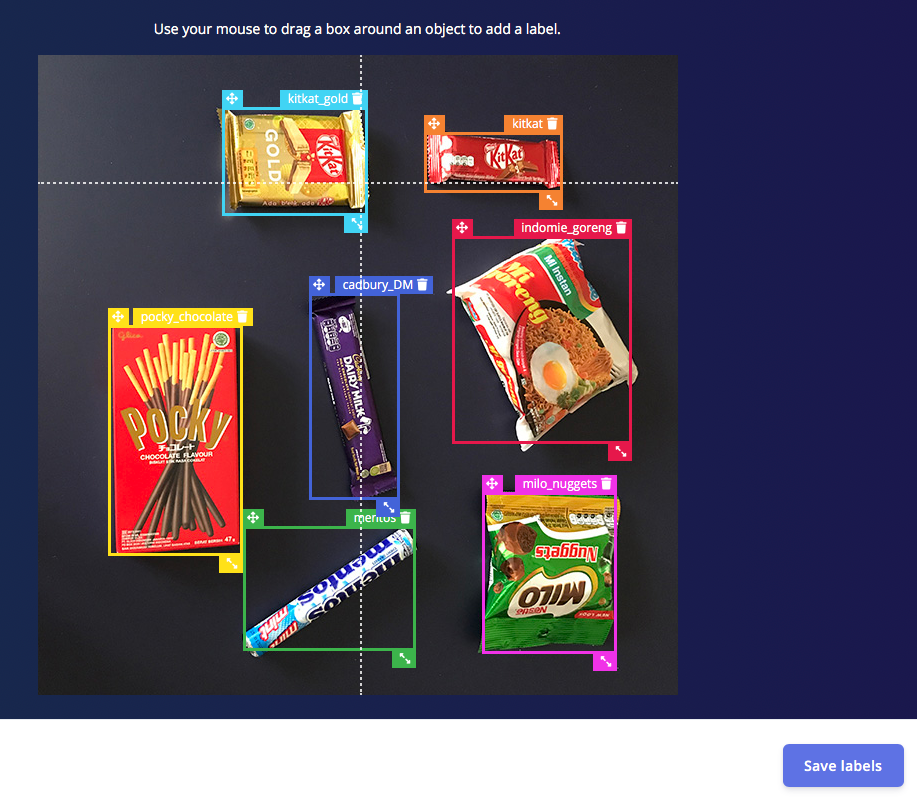

A crucial first step in using the FOMO algorithm involves teaching it which objects it is to recognize. To prove the concept, Alexander collected 408 images of eight different snack items. These images were taken from different positions on the checkout counter, and in different orientations to help the model recognize them under varying conditions. The images were then uploaded to Edge Impulse Studio. The labeling queue, with its AI-assist feature, was used to draw bounding boxes around each item and assign a label.

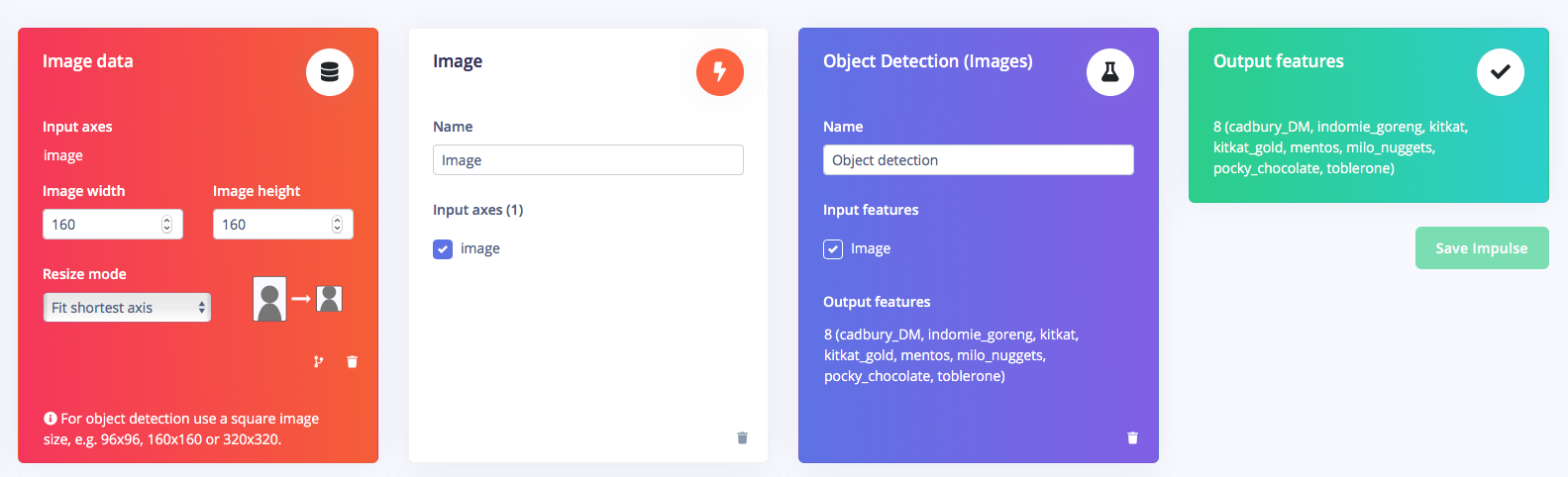

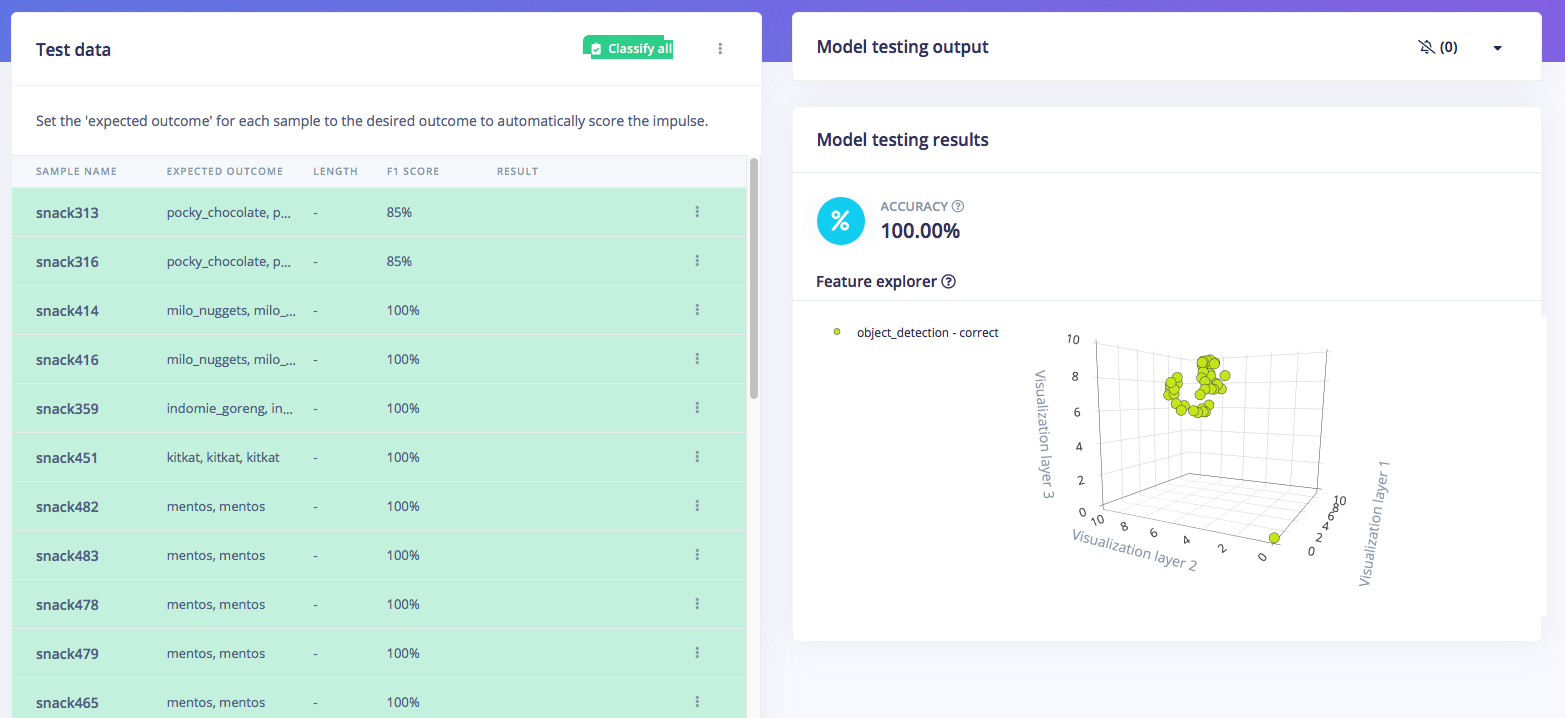

An impulse was then designed. It begins with an image preprocessing step that reduces the complexity of the image data by extracting the most informative features. These features are then fed into a FOMO model that can identify the eight types of snack foods in real time. The model was trained on the previously uploaded and labeled dataset, 80% of which was earmarked for training, while the remaining 20% was held out for later evaluation. Training produced an F1 score of 97%, and a test using the held-out 20% showed that objects were detected correctly in 100% of cases.
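To make the 80/20 split and the F1 metric a little more concrete, here is a minimal Python sketch. It is not the code Edge Impulse Studio runs internally; the function names, the fixed random seed, and the example counts are all assumptions chosen purely for illustration.

```python
import random


def split_dataset(items, train_fraction=0.8, seed=42):
    """Shuffle items reproducibly and split them into (train, test) lists.

    Hypothetical helper: Edge Impulse Studio performs its own split.
    """
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


def f1_score(true_positives, false_positives, false_negatives):
    """Return F1, the harmonic mean of precision and recall."""
    predicted = true_positives + false_positives
    actual = true_positives + false_negatives
    precision = true_positives / predicted if predicted else 0.0
    recall = true_positives / actual if actual else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # 408 stand-in image IDs, matching the size of the dataset in the article.
    train, test = split_dataset(range(408))
    print(len(train), len(test))
    # Made-up detection counts, only to show how the metric behaves.
    print(round(f1_score(97, 3, 3), 2))
```

Because F1 combines precision and recall, a model can only score well if it both avoids false alarms and avoids missing items that are actually on the counter.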

Results like these do not leave much room for improvement, so Alexander moved on and deployed the object detection pipeline to the Raspberry Pi 4. Since this platform is fully supported by Edge Impulse, it only took a few clicks to have it up and running. With a little more coding in Python, the device was able to sum up the total cost of all items on the checkout counter and show that total on the LCD.
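The totaling step might look something like the sketch below. This is not Alexander's actual code: the price table, the labels, and the confidence threshold are hypothetical, and the input is assumed to be a list of dictionaries with `label` and `value` (confidence) keys, similar to the detection results an object detection model typically reports.

```python
from collections import Counter

# Hypothetical price table in cents; labels and prices are illustrative only.
PRICES_CENTS = {"chips": 150, "cookies": 225, "candy_bar": 100}


def checkout_total(detections, prices=PRICES_CENTS, min_confidence=0.5):
    """Count confident detections per label and return (counts, total_cents).

    Detections below min_confidence, or with a label that has no known
    price, are ignored.
    """
    counts = Counter(
        d["label"]
        for d in detections
        if d["value"] >= min_confidence and d["label"] in prices
    )
    total = sum(prices[label] * n for label, n in counts.items())
    return counts, total


if __name__ == "__main__":
    # Example detections for one camera frame (made-up values).
    frame = [
        {"label": "chips", "value": 0.91},
        {"label": "chips", "value": 0.84},
        {"label": "cookies", "value": 0.30},  # below threshold, ignored
        {"label": "candy_bar", "value": 0.72},
    ]
    counts, total = checkout_total(frame)
    print(dict(counts), f"${total / 100:.2f}")
```

Keeping prices in integer cents avoids floating-point rounding surprises when many items are added together, and the confidence threshold gives a simple way to keep shaky detections off the bill.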

This smart cashier system shows just how simple it can be to implement a device that uses machine learning to save time and money while improving customer satisfaction. Alexander notes that with a bit more work to collect additional images of items, from more angles and under different lighting conditions, these same techniques could be used to build a device for use in real-world scenarios. Be sure to check out (pun intended) the project documentation for additional tips and tricks to get you on your way to building a more efficient world.
