Designing electronic gadgets that adapt to their environment promises to make them more efficient at carrying out their tasks and to give users a better, more customized experience. Imagine, for example, headphones that automatically switch their noise cancellation on or off depending on where you are. That may be a small convenience by itself, but a network of personal devices that automatically adapts to every situation could greatly enhance the user experience. This is easier said than done, however. The gold standard for device localization is GPS, but GPS receivers tend to drain batteries, and GPS signals are generally too weak to be received reliably indoors.

Aside from these technical limitations, GPS coordinates alone do not tell you what type of environment you are in. That would, at a minimum, require access to an up-to-date lookup service that could translate a set of coordinates into, say, an office, home, road, or airport. Machine learning enthusiast Swapnil Verma approached the problem from a different angle. He reasoned that humans are quite good at recognizing what type of environment they are in just by listening to the sounds around them, so he decided to try to replicate this capability with a machine learning model. This approach works indoors or out, needs no external database lookups in the cloud, and requires very little power when it runs an Edge Impulse model that has been highly optimized for tinyML hardware.

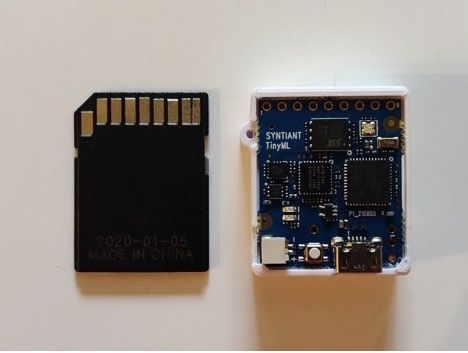

Verma kept the bill of materials to a minimum by selecting the Syntiant TinyML Board for the build. This development board comes loaded with an NDP101 Neural Decision Processor, a Microchip SAM D21 Arm Cortex-M0+ MCU, and a microphone, which checks all of the boxes for the location identification device: plenty of horsepower to run a tinyML algorithm, and a microphone to collect audio samples. Although they are not needed for this project, the TinyML Board has lots of other features, like a six-axis motion sensor and a microSD card slot for storage.

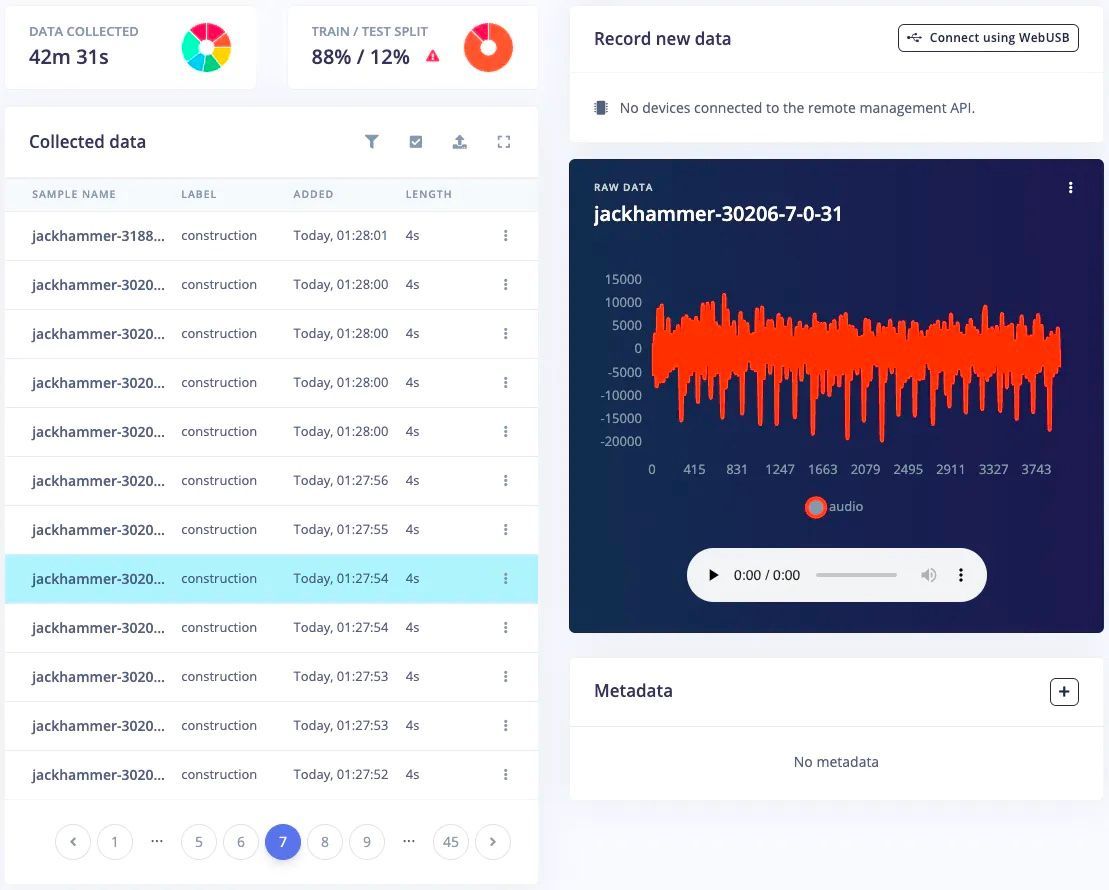

Before the machine learning classification pipeline could be built, audio samples were needed to train it. For the proof of concept, Verma chose to focus on recognizing airports, roads, offices, bathrooms, construction sites, and homes, as well as unknown, quiet environments. Examples of each class were extracted from the public ESC-50 dataset and uploaded to Edge Impulse Studio using the data acquisition tool. From there, the data can be visualized, filtered, labeled, split into training and testing sets, and processed in just about any other way you might need.
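Edge Impulse Studio handles the train/test split itself, but it can help to see what a stratified split does. The sketch below is a minimal, hypothetical illustration in Python (not an Edge Impulse API): it groups `(filename, label)` pairs by class and holds out roughly the same fraction of each class for testing, so a small class is not left out of the test set by chance. The helper name, the 20% test fraction, and the file names are assumptions for illustration.

```python
import random
from collections import defaultdict


def stratified_split(samples, test_fraction=0.2, seed=42):
    """Hypothetical helper: split (filename, label) pairs so that every class
    keeps roughly the same train/test ratio. Not part of Edge Impulse."""
    by_label = defaultdict(list)
    for filename, label in samples:
        by_label[label].append((filename, label))

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, test = [], []
    for label in sorted(by_label):
        items = by_label[label]
        rng.shuffle(items)
        # Hold out at least one example per class for testing.
        n_test = max(1, round(len(items) * test_fraction))
        test.extend(items[:n_test])
        train.extend(items[n_test:])
    return train, test


# Toy usage with made-up file names: 10 clips for each of three classes.
clips = [(f"{label}_{i}.wav", label)
         for label in ("airport", "road", "bathroom") for i in range(10)]
train, test = stratified_split(clips)
print(len(train), len(test))
```

With 10 clips per class, each class contributes 2 clips to the test set, so the test set mirrors the class balance of the full dataset.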

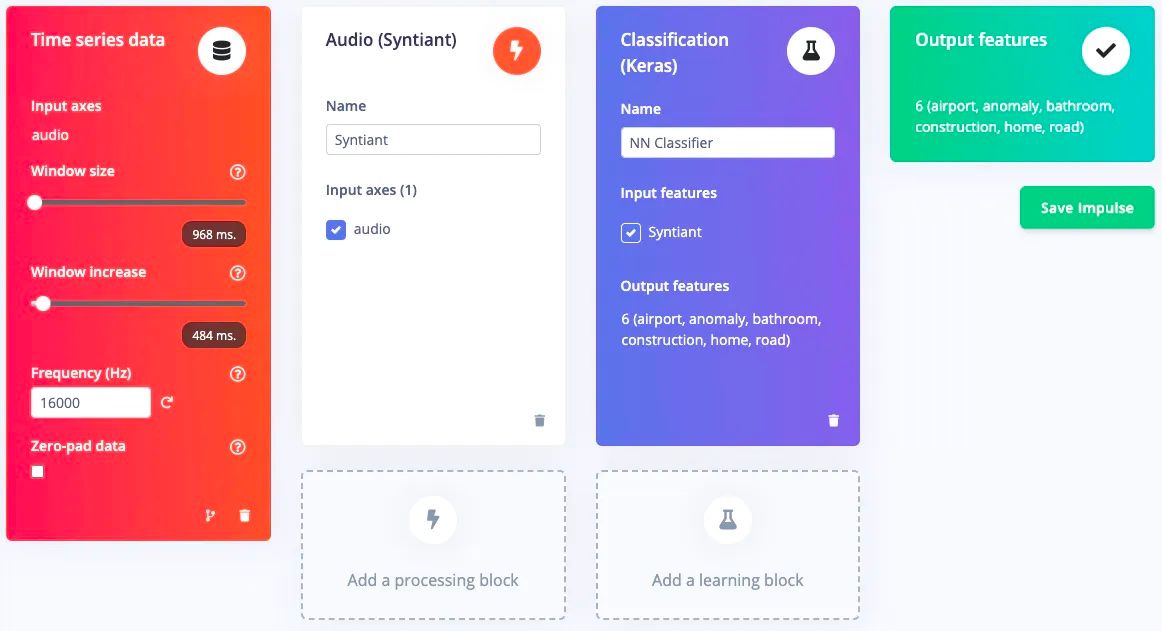

An impulse was then designed to process and classify these audio samples. An Audio (Syntiant) processing block was added to extract the most relevant time and frequency features from the signal; it also performs additional processing specific to the Syntiant NDP101 chip to make the most of this neural network accelerator. Finally, a neural network classifier was added to learn the mapping between these features and the environmental sound classes. The default hyperparameters were kept, and with that, the model design was finished.
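Edge Impulse's documentation describes the Audio (Syntiant) block as computing log Mel-filterbank energies. The sketch below shows that idea for a single frame in plain Python: a power spectrum, a bank of triangular filters spaced evenly on the mel scale, and the log of each filter's energy. The 16 kHz sample rate, 512-sample frame, and 40 filters are assumptions, the function names are hypothetical, and the NDP101-specific scaling the real block applies is not reproduced. A naive O(N²) DFT is used only to keep the example dependency-free.

```python
import cmath
import math


def hz_to_mel(f):
    return 2595.0 * math.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def power_spectrum(frame):
    """Naive DFT power spectrum of a real frame, bins 0..N/2 (slow but simple)."""
    n = len(frame)
    spectrum = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame))
        spectrum.append(abs(acc) ** 2 / n)
    return spectrum


def mel_filterbank(num_filters, n_fft, sample_rate, f_min=0.0, f_max=None):
    """Triangular filters evenly spaced on the mel scale, as weights per FFT bin."""
    if f_max is None:
        f_max = sample_rate / 2
    lo, hi = hz_to_mel(f_min), hz_to_mel(f_max)
    # num_filters + 2 edge points: each filter spans (left, center, right).
    hz_points = [mel_to_hz(lo + i * (hi - lo) / (num_filters + 1))
                 for i in range(num_filters + 2)]
    bin_freqs = [k * sample_rate / n_fft for k in range(n_fft // 2 + 1)]
    filters = []
    for i in range(1, num_filters + 1):
        left, center, right = hz_points[i - 1], hz_points[i], hz_points[i + 1]
        weights = []
        for f in bin_freqs:
            if left <= f <= center:
                weights.append((f - left) / (center - left))    # rising edge
            elif center < f <= right:
                weights.append((right - f) / (right - center))  # falling edge
            else:
                weights.append(0.0)
        filters.append(weights)
    return filters


def log_mel_energies(frame, sample_rate=16000, num_filters=40, eps=1e-10):
    """Log energy in each mel filter for one frame; eps avoids log(0)."""
    spectrum = power_spectrum(frame)
    filters = mel_filterbank(num_filters, len(frame), sample_rate)
    return [math.log(sum(w * p for w, p in zip(weights, spectrum)) + eps)
            for weights in filters]


# Toy usage: a 1 kHz tone should light up the filters whose centers bracket 1 kHz.
tone = [math.sin(2 * math.pi * 1000 * t / 16000) for t in range(512)]
energies = log_mel_energies(tone)
print(max(range(len(energies)), key=lambda i: energies[i]))
```

A full feature extractor would slide this over the audio window frame by frame and stack the results into a time-by-frequency array for the classifier.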

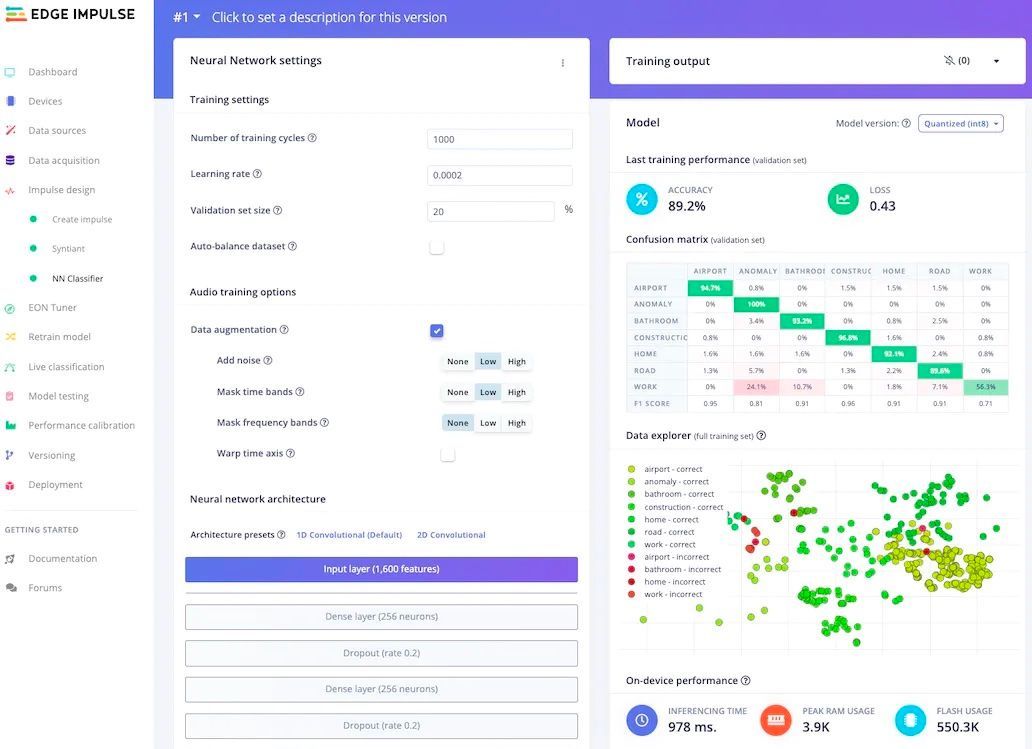

The Feature Explorer tool was used to check how well samples from the different classes separated. Satisfied with how the data looked, Verma started the model training process. While the overall classification accuracy was quite good at better than 89%, the confusion matrix showed that the office class was performing poorly. Verma used the data acquisition tool to dig further into those samples and found that they consisted only of keyboard and mouse sounds. He did not think these sounds adequately represented an office, so he removed that class and retrained the model.
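A confusion matrix makes a weak class easy to spot even when overall accuracy looks healthy. The following Python sketch builds one from true and predicted labels, then computes overall accuracy and per-class recall. The function names are hypothetical, the labels and predictions are invented toy data rather than results from Verma's project, and the 0.5 recall threshold for flagging a class is an arbitrary assumption.

```python
def confusion_matrix(y_true, y_pred, labels):
    """Rows are true classes, columns are predicted classes."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix


def overall_accuracy(matrix):
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total if total else 0.0


def per_class_recall(matrix, labels):
    """Fraction of each true class that was predicted correctly."""
    return {label: (row[i] / sum(row) if sum(row) else 0.0)
            for i, (label, row) in enumerate(zip(labels, matrix))}


# Invented toy data: the "office" class is mostly confused with other classes.
labels = ["airport", "office", "road"]
y_true = ["airport"] * 4 + ["office"] * 4 + ["road"] * 4
y_pred = ["airport"] * 4 + ["office", "road", "airport", "road"] + ["road"] * 4

matrix = confusion_matrix(y_true, y_pred, labels)
recall = per_class_recall(matrix, labels)
weak = [label for label, r in recall.items() if r < 0.5]
print(overall_accuracy(matrix), weak)
```

Here overall accuracy is 75% while the office class is recognized only a quarter of the time, which is the kind of imbalance that prompts a closer look at that class's training data.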

The new model achieved a classification accuracy of 92.1%, which is quite good, but to be safe, another test was performed using data that had been held out from the training process. This showed an average classification accuracy of nearly 85%. That is more than sufficient to prove the concept, so Verma was ready to deploy the model to the Syntiant TinyML Board. Since this hardware is fully supported by Edge Impulse, Verma was able to download a custom firmware image containing the full classification pipeline and flash it to the board.

The completed device was tested out and found to work as expected. Given how lightweight the model is, it would be easy to incorporate into any number of mobile computing devices like smartphones, watches, and headphones to provide customized experiences. What would you build a location identifier into? Verma has made his Edge Impulse project public, so it is a simple matter to clone this project and integrate it into your own designs. Make sure to read the project documentation for some great tips as well.
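Verma's project stops at classification, but the headphone scenario from the introduction needs a little glue logic between the classifier and the device. The sketch below is one hypothetical way to do it in Python: it ignores low-confidence predictions, takes a majority vote over the last few audio windows so a single misclassified window does not toggle the setting, and maps the result to a noise-cancellation decision. The `scores` dictionary format, the class and function names, the window size, the 0.6 confidence threshold, and the set of environments that enable noise cancellation are all assumptions, not part of the Edge Impulse firmware.

```python
from collections import Counter, deque

# Hypothetical policy: environments in which noise cancellation is switched on.
NOISE_CANCEL_ON = {"airport", "road", "construction"}


class EnvironmentTracker:
    """Smooths per-window classifier outputs with a majority vote."""

    def __init__(self, window=5, min_confidence=0.6):
        self.recent = deque(maxlen=window)  # most recent confident labels
        self.min_confidence = min_confidence

    def update(self, scores):
        """scores: dict mapping label -> probability for one audio window.
        Returns the smoothed environment, or None if there is no clear majority."""
        label, confidence = max(scores.items(), key=lambda item: item[1])
        if confidence >= self.min_confidence:
            self.recent.append(label)
        if not self.recent:
            return None
        winner, votes = Counter(self.recent).most_common(1)[0]
        # Require a strict majority of the buffered windows before committing.
        return winner if votes > len(self.recent) // 2 else None


def noise_cancellation_enabled(environment):
    return environment in NOISE_CANCEL_ON


# Toy usage with made-up scores: one stray "home" window does not flip the result.
tracker = EnvironmentTracker(window=5)
stream = [{"airport": 0.9, "home": 0.1},
          {"airport": 0.8, "home": 0.2},
          {"home": 0.7, "airport": 0.3}]
for scores in stream:
    env = tracker.update(scores)
    print(env, noise_cancellation_enabled(env))
```

A longer window makes the device steadier but slower to react when you actually change location; that trade-off is a product design choice rather than something the model decides.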
