Over the years, retail store checkout processes have evolved from cashiers manually keying in items, to barcode scanners, and then to self-checkouts. This growing automation has created a win-win situation for retailers and shoppers alike: retailers benefit from reduced costs and improved efficiency, while customers get a more convenient shopping experience.

Self-checkouts in particular have transformed retail store checkout processes. By reducing the need for cashiers, stores can save money on labor costs and increase efficiency. Retailers can also handle a higher volume of customers, reducing the need to hire additional employees to cover peak periods. The likelihood of errors, such as incorrect change or miscounted items, is also reduced with self-checkouts.

The next logical step is to move to a fully automated checkout process. Self-checkout still requires a shopper to scan each item, one at a time. An automated checkout, on the other hand, can scan all of the items in one go. That significantly reduces the time it takes to check out, and is also a huge convenience for the customer. All of the other benefits of self-checkouts, like error reduction, apply to automated checkouts as well.

Despite the many apparent benefits of a fully automated process, these types of checkouts are still rarely seen in the wild. A machine learning enthusiast by the name of Adam Milton-Barker believes that this is all about to change because of the availability of simple tools like Edge Impulse Studio and low-cost, high-performance hardware platforms like the NVIDIA Jetson Nano. Once the word really starts to get out about these recent technological advances, he believes we will see machine learning improving virtually every aspect of our lives.

To demonstrate how easy it can be to get started, Milton-Barker built an automated checkout system prototype in just a few hours. He decided to build an image classification neural network capable of recognizing a few types of items that one might find in a grocery store. While this may be a relatively simple starting point, the techniques used in this project could be applied to detect a larger variety of items. And with another small change, the classifier could be swapped for an object detection algorithm that can recognize multiple items at a time.

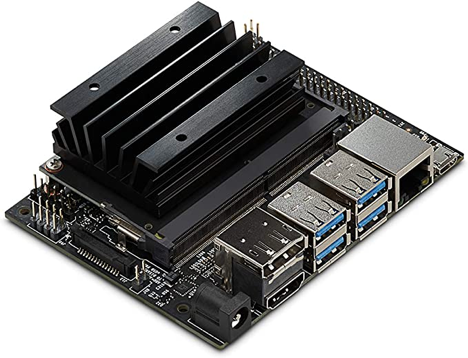

An NVIDIA Jetson Nano Developer Kit was chosen for the project because it is small and inexpensive enough that it could be widely deployed in point-of-sale terminals. Additionally, it is more than powerful enough to handle even tough image recognition tasks. It sports a quad-core Arm Cortex-A57 CPU, 4 GB of RAM, and a 128-core Maxwell GPU. A USB webcam was included in the build to allow the device to capture images of products. Because it is a full-fledged Linux computer, the Jetson Nano could go on to do virtually anything once a batch of items has been scanned, like process credit card payments or email the customer a receipt, although Milton-Barker is saving these types of features for a future revision of the device.

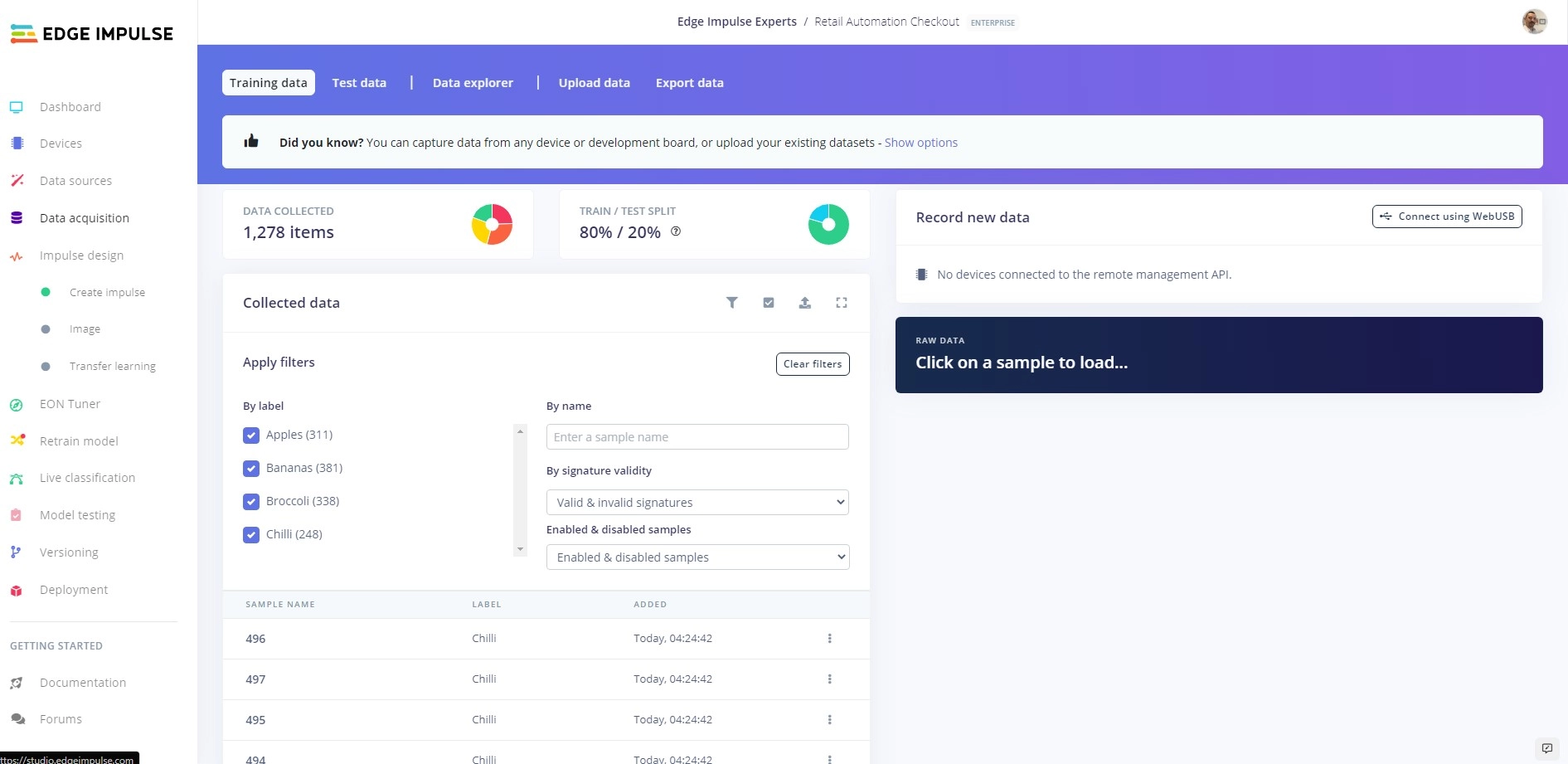

With a plan mapped out and the hardware all squared away, the next thing to do was collect some data to train the neural network. A publicly available dataset consisting of images of fruit and vegetables was located, and about 1,200 images of apples, bananas, broccoli, and chili peppers were extracted from it and uploaded to a project in Edge Impulse Studio using the data acquisition tool. Labels were automatically assigned to each image based on the file names, which saved a lot of time that would have otherwise been spent doing annotation.
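
To illustrate the idea of deriving labels from file names, here is a minimal Python sketch. It assumes a naming convention in which the label is everything before the first dot (for example `apple.12.jpg`); the function names and the sample file names are hypothetical and not taken from the project.

```python
from collections import Counter
from pathlib import Path


def label_from_filename(filename: str) -> str:
    """Return the label encoded before the first dot of a file name.

    Assumed convention: '<label>.<anything>.<extension>', e.g. 'apple.12.jpg'.
    """
    # Path(...).name drops any leading directories, then we keep the part
    # before the first dot and lowercase it for consistency.
    return Path(filename).name.split(".", 1)[0].lower()


def count_labels(filenames):
    """Count how many files fall under each inferred label."""
    return Counter(label_from_filename(name) for name in filenames)


# Hypothetical file names, for illustration only
files = ["apple.1.jpg", "apple.2.jpg", "data/banana.7.jpg", "Broccoli.3.png"]
print(count_labels(files))
```

Checking the per-label counts like this before uploading is a quick way to spot a badly imbalanced dataset or a misnamed file.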

Next, the Jetson Nano was set up and linked to an Edge Impulse Studio project by installing the Edge Impulse CLI. This makes it easy to deploy machine learning algorithms to the device, and if you happen to be collecting data with the Jetson for your own project, it will automatically upload the data to your project.

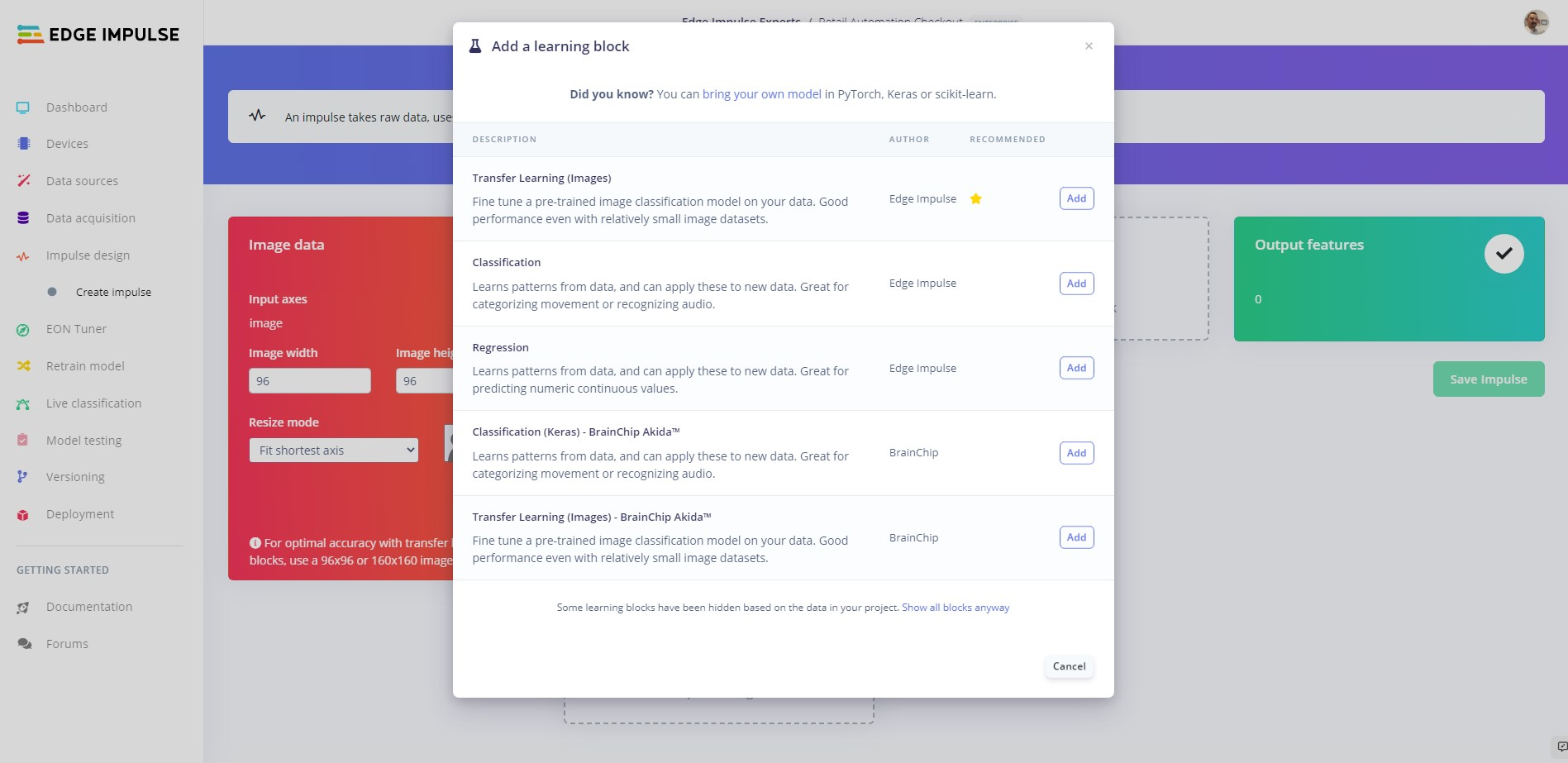

Speaking of machine learning algorithms, everything was in place for Milton-Barker to start designing one, so he pivoted to the Impulse Design tool. The impulse defines how sensor data is handled, from initial collection all the way through to a prediction from the trained model. In this case, the impulse started with a step that resizes images to reduce the computational resources needed for downstream analyses. Another preprocessing step calculates and extracts the most important features from the input data, which helps to increase prediction accuracy. These features finally make their way into a MobileNetV2 neural network classifier that has been pretrained to give it a general knowledge of how to recognize different objects in an image.
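
The preprocessing stage of such a pipeline can be sketched in plain Python. This is only an illustration under stated assumptions: it uses nearest-neighbor resizing on a grayscale image stored as nested lists and scales pixel values to the range 0 to 1, which may differ from what Edge Impulse Studio does internally. The function names are hypothetical, and the MobileNetV2 classifier itself is not shown.

```python
def resize_nearest(image, new_width, new_height):
    """Resize a 2D grayscale image (list of rows) with nearest-neighbor sampling."""
    old_height = len(image)
    old_width = len(image[0])
    return [
        [
            # Map each output pixel back to the closest source pixel
            image[y * old_height // new_height][x * old_width // new_width]
            for x in range(new_width)
        ]
        for y in range(new_height)
    ]


def normalize(image):
    """Scale 8-bit pixel values (0-255) to floats in the range 0.0-1.0."""
    return [[pixel / 255.0 for pixel in row] for row in image]


# Tiny 4x4 example image, shrunk to 2x2 and then normalized
source = [
    [0, 10, 20, 30],
    [40, 50, 60, 70],
    [80, 90, 100, 110],
    [120, 130, 140, 150],
]
small = resize_nearest(source, 2, 2)
features = normalize(small)
print(small)
```

Shrinking the input first means every later stage, including the neural network, has far fewer values to process per image.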

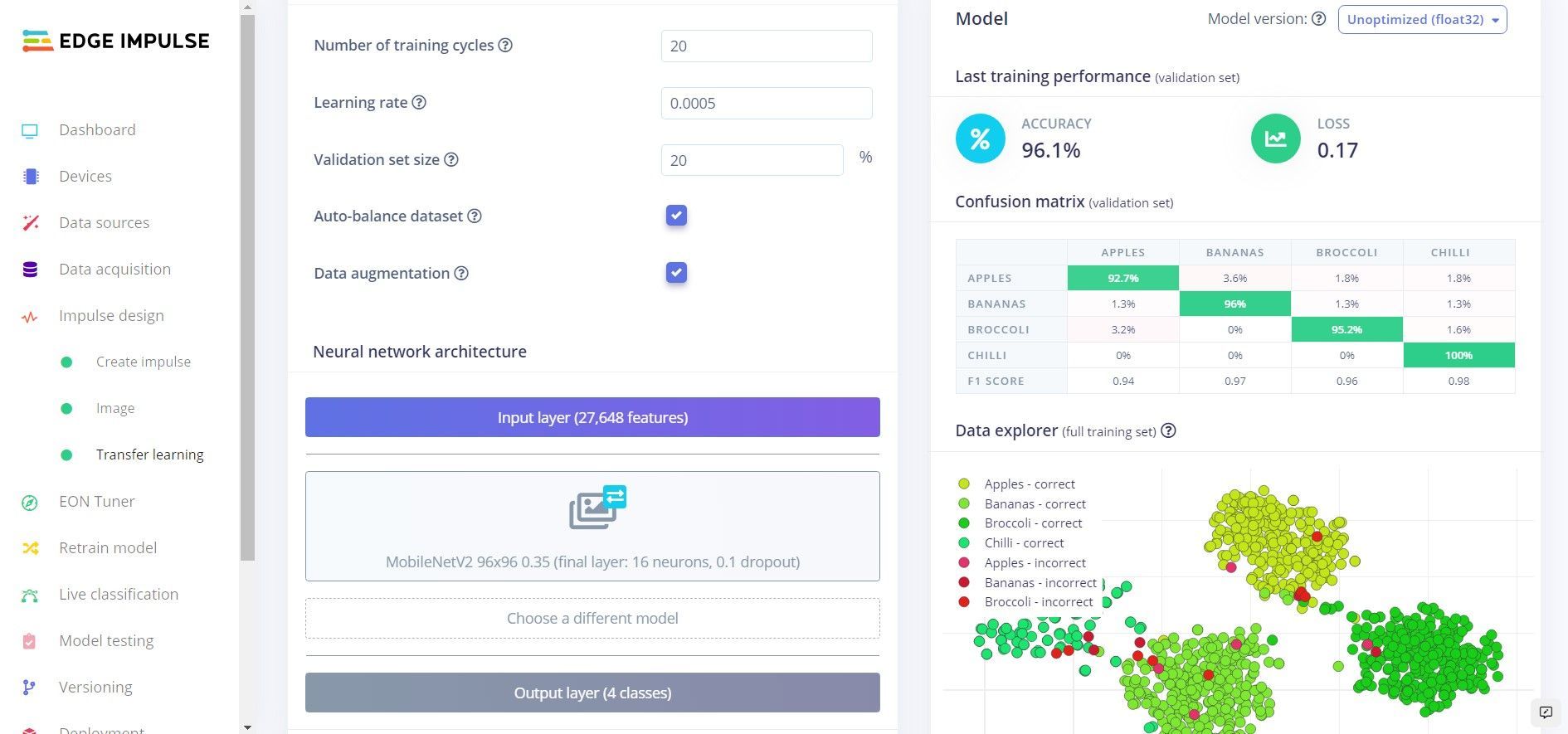

That pretrained model was then retrained using the previously uploaded dataset to repurpose it as an automated checkout scanner for retail store locations. To make the model as robust as possible under real-world conditions, and to guard against overfitting to the training data, the data augmentation feature was enabled. It makes tiny, random changes to the data in each training cycle. After a short run time, metrics were provided to help assess how well the model was performing.
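
As a rough picture of what data augmentation means, the sketch below applies two small random changes to a grayscale image stored as nested lists: an occasional horizontal flip and a slight brightness shift. The specific transformations, the `augment` function, and its parameters are assumptions chosen for illustration; the source does not say which changes Edge Impulse Studio applies.

```python
import random


def augment(image, rng, max_brightness_shift=10):
    """Return a slightly altered copy of a 2D grayscale image (values 0-255)."""
    # Flip left-to-right about half of the time
    if rng.random() < 0.5:
        image = [row[::-1] for row in image]
    # Shift every pixel by the same small random amount, clamped to 0-255
    shift = rng.randint(-max_brightness_shift, max_brightness_shift)
    return [[min(255, max(0, pixel + shift)) for pixel in row] for row in image]


rng = random.Random(42)  # seeded so that runs are repeatable
original = [[0, 100, 255], [10, 20, 30]]

# Each training cycle would see a slightly different variant of the same image
for epoch in range(3):
    print(epoch, augment(original, rng))
```

Because the model never sees exactly the same pixels twice, it is pushed toward learning the general appearance of an item instead of memorizing individual training images.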

The initial classification accuracy exceeded 96%, which is certainly more than good enough to prove the concept. To confirm that the model did not overfit to the training data, another validation was conducted using data that was not included in the training set. This revealed an average classification accuracy of over 91%. Not bad — it looks like the data augmentation feature did its job!
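
The comparison described here boils down to computing the same accuracy metric on two sets of images: ones the model trained on and ones it has never seen. A minimal sketch follows; the labels and predictions are made-up toy values, not results from the project.

```python
def accuracy(true_labels, predicted_labels):
    """Fraction of predictions that match the true labels."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    if not true_labels:
        raise ValueError("at least one label is required")
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)


# Toy held-out data, for illustration only
held_out_truth = ["apple", "banana", "apple", "broccoli"]
held_out_preds = ["apple", "banana", "banana", "broccoli"]

print(f"held-out accuracy: {accuracy(held_out_truth, held_out_preds):.0%}")
```

A held-out score far below the training score would suggest overfitting; a modest gap, like the one reported above, is what one would normally expect.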

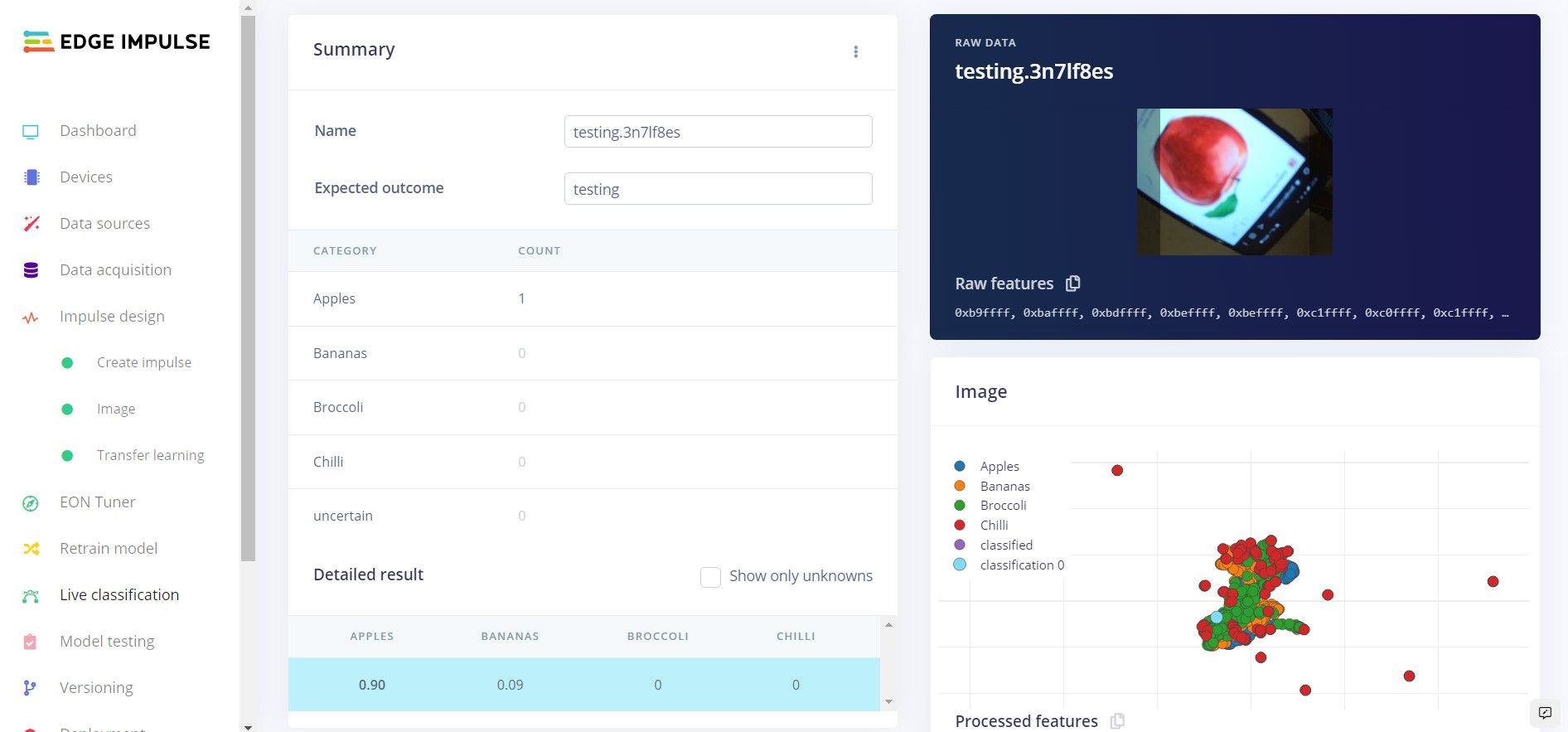

The live classification tool in Edge Impulse Studio served as a final validation of the model. This tool allows a connected device to transmit sensor data (images in this case) to the project, and then runs the machine learning pipeline on them. A series of tests showed that the classification accuracy was excellent, as would be expected from the previous validation tests. At this point, the only thing left to do was run a single command in the terminal application of the Jetson to deploy the model to the physical hardware. Because the model then runs on the device itself, no cloud connection is needed to run the analysis pipeline.
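
Once a classifier is running on the device, each captured image yields a score per label, and a checkout application has to turn those scores into a list of items. The sketch below shows one way that step might look. It is not part of Milton-Barker's prototype: the score dictionaries, the `top_label` and `tally_basket` helpers, and the 0.6 confidence threshold are all hypothetical.

```python
from collections import Counter


def top_label(scores, threshold=0.6):
    """Return the highest-scoring label, or None if the model is not confident."""
    label, score = max(scores.items(), key=lambda item: item[1])
    return label if score >= threshold else None


def tally_basket(frames, threshold=0.6):
    """Count recognized items across a sequence of per-image score dictionaries."""
    basket = Counter()
    for scores in frames:
        label = top_label(scores, threshold)
        if label is not None:  # skip images the model is unsure about
            basket[label] += 1
    return basket


# Hypothetical classifier output for three scanned images
frames = [
    {"apple": 0.90, "banana": 0.10},
    {"apple": 0.40, "banana": 0.50, "broccoli": 0.10},  # too uncertain, skipped
    {"apple": 0.20, "banana": 0.80},
]
print(tally_basket(frames))
```

Rejecting low-confidence predictions trades a few rescans for fewer wrongly charged items, which is usually the safer choice at a checkout.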

Shopping around for fun projects to do with your Jetson? We highly recommend that you walk through Milton-Barker’s project documentation and reproduce it yourself. It will keep you entertained for a couple of hours, and along the way you will learn some things that will help you bring your own machine learning-powered devices to life in the future.
