By leveraging computer vision and machine learning, companies can detect and diagnose equipment faults in real time, enabling proactive maintenance and preventing costly downtime. This technology can also enhance safety by detecting anomalies and hazards, and by automating dangerous tasks.

One example of the successful implementation of this technology is in the steel industry. Tata Steel, one of the world’s leading steel companies, has implemented computer vision and machine learning to monitor its blast furnaces. The technology has been able to flag developing faults before they cause failures, leading to a reduction in downtime and maintenance costs.

The automotive industry has likewise been using computer vision and machine learning to monitor equipment. Toyota, for example, uses machine learning to detect anomalies in the painting process, reducing the number of defective products and improving quality control.

According to a survey by Deloitte, 76% of executives in manufacturing companies believe that AI and machine learning will be fundamental in driving operational efficiencies in the next two to three years. And these executives appear to be putting their money where their mouths are: according to a report by Research and Markets, the global machine learning market was valued at $7.3 billion in 2020 and is projected to grow at a CAGR of 42.2% from 2021 to 2026, reaching a value of $126 billion by the end of that period.

Industry’s growing interest in machine learning, and the rapid expansion of the market, present many opportunities for innovation to meet the demand. Where there is a need, engineer and serial inventor Justin Lutz always seems to have a machine learning model to meet it, and this case is no different. He has demonstrated how simple and inexpensive it can be, even for small manufacturing companies, to get a foot in the door and start benefiting from the machine learning revolution.

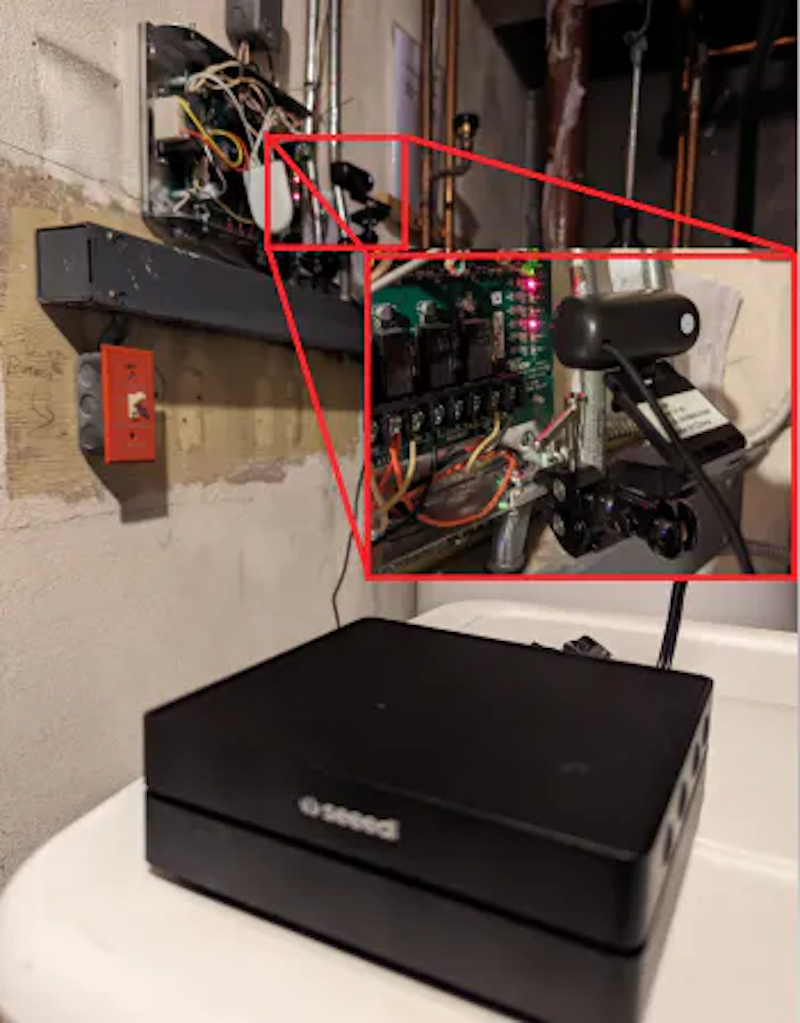

His proof-of-concept device uses computer vision and machine learning to monitor industrial equipment and raise an alarm when something out of the ordinary is detected. Working with what was available, he demonstrated the device monitoring the heating zone controller in his home, but the same basic methods could be adapted to virtually any type of equipment with little additional work.

As the status of the heating zone controller changes, it illuminates different lights on the panel. Lutz wanted to monitor which lights were switched on, with the idea being that any abnormal states could automatically be recognized. For this job, he chose to use an object detection pipeline that was optimized for running inferences on edge computing devices.
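
The idea of recognizing abnormal states can be sketched in a few lines of Python. This is only an illustration, not code from Lutz's project: the light names and the set of known-good combinations below are hypothetical placeholders that a real deployment would replace with its own.

```python
# Hypothetical light names and known-good combinations (assumptions for
# illustration only; they are not taken from the project).
EXPECTED_STATES = {
    frozenset(),                            # nothing calling for heat
    frozenset({"zone1", "pump"}),
    frozenset({"zone2", "pump"}),
    frozenset({"zone1", "zone2", "pump"}),
}


def is_abnormal(lit_lights):
    """Return True when the lit lights are not a known-good combination."""
    return frozenset(lit_lights) not in EXPECTED_STATES


print(is_abnormal({"zone1", "pump"}))  # False: a known-good state
print(is_abnormal({"zone1"}))          # True: a zone is lit but the pump is not
```

Listing the allowed states explicitly keeps the alarm logic easy to audit, at the cost of having to enumerate every normal combination up front.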

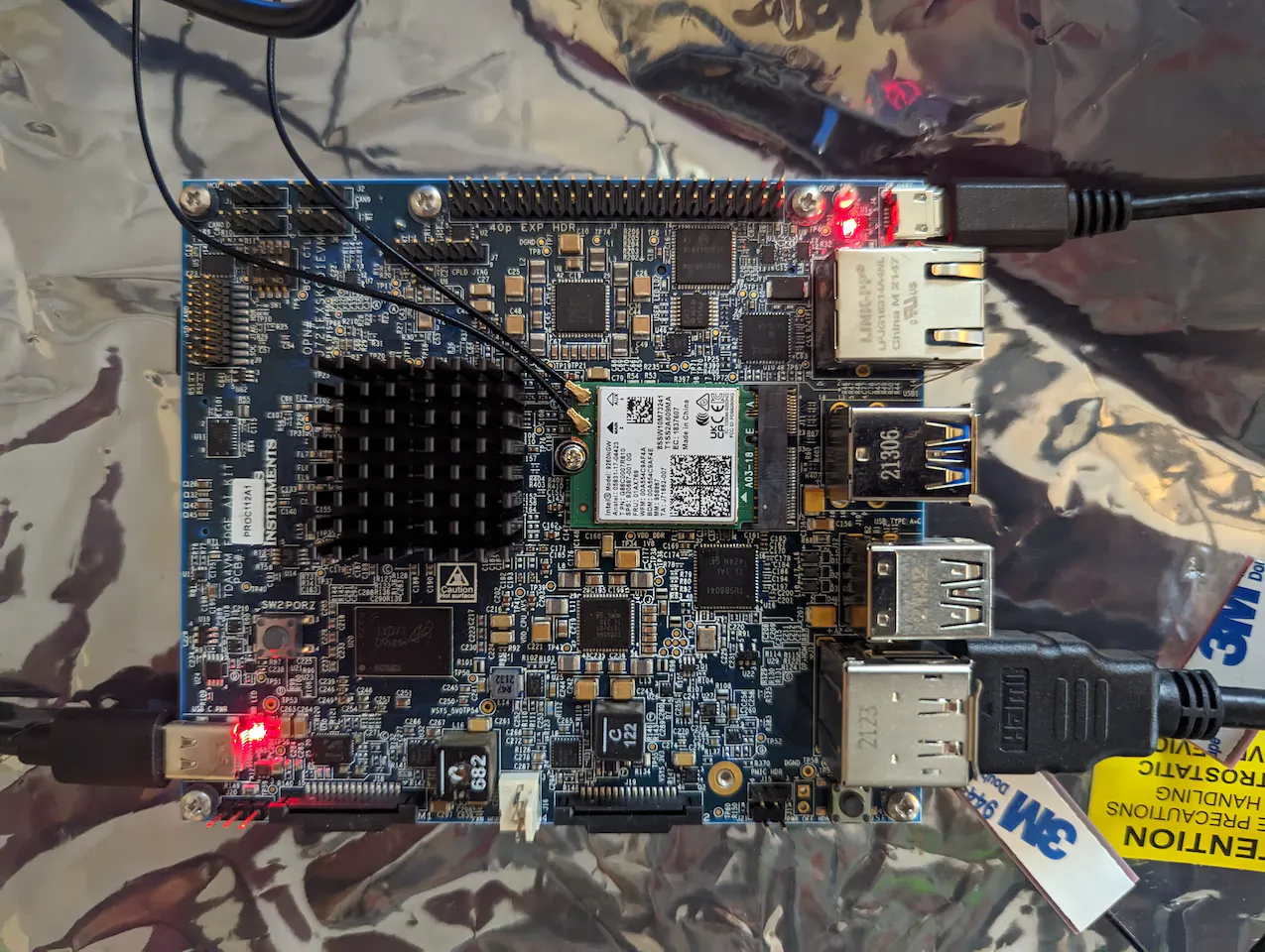

To power this creation, the Texas Instruments SK-TDA4VM Starter Kit was selected. With a TDA4VM processor onboard, this powerful kit can perform eight trillion operations per second. Also sporting 4 GB of DRAM and lots of interfaces for external devices, the SK-TDA4VM can handle a simple object detection pipeline without batting an eyelash.

Since this platform could potentially serve as a processing unit that monitors multiple pieces of equipment, Lutz wanted to interface with it remotely via RTSP streaming. He had a Seeed Studio reComputer 1010, with an NVIDIA Jetson Nano, readily available, so he connected a USB webcam to the reComputer and configured it to stream video over RTSP. This device was placed next to the equipment to be monitored, in this case the heating zone controller.

With the hardware selection and setup all squared away, Lutz was ready to start collecting sample data to train the object detection model. He used a smartphone app to toggle different heating zones on and off, and captured images from the webcam stream as the state of the panel changed. After capturing a few hundred images, he uploaded them to Edge Impulse Studio.
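
For readers who want to try something similar, here is a minimal sketch of pulling still images from an RTSP stream with OpenCV. It is not the capture method used in the project, which the article does not detail. The function names, the output directory, and the sampling interval are all assumptions, and it requires an OpenCV build with RTSP support.

```python
from pathlib import Path


def frame_path(out_dir, index):
    """Build a zero-padded file name for the saved frame with this index."""
    return Path(out_dir) / f"frame_{index:05d}.jpg"


def capture_frames(rtsp_url, out_dir, every_n=30, max_images=300):
    """Save every `every_n`-th frame from the stream, up to `max_images`."""
    import cv2  # imported lazily so frame_path() works without OpenCV

    Path(out_dir).mkdir(parents=True, exist_ok=True)
    cap = cv2.VideoCapture(rtsp_url)
    saved = seen = 0
    try:
        while saved < max_images:
            ok, frame = cap.read()
            if not ok:  # stream ended or could not be read
                break
            if seen % every_n == 0:
                cv2.imwrite(str(frame_path(out_dir, saved)), frame)
                saved += 1
            seen += 1
    finally:
        cap.release()
    return saved
```

Calling `capture_frames` with the stream's RTSP address and a target folder while toggling the zones would build up a small dataset. Sampling only every Nth frame avoids filling it with near-identical images.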

Next up on the checklist for creating an object detection model is labeling the training dataset. This is done by drawing bounding boxes around the objects that the model should learn to recognize. Even for a few hundred images, this can be a slow and painful process. Fortunately, the labeling queue tool offers an AI-powered boost that automatically draws these boxes for you. It is only necessary to verify that the boxes are in the correct positions, and occasionally make a small tweak.

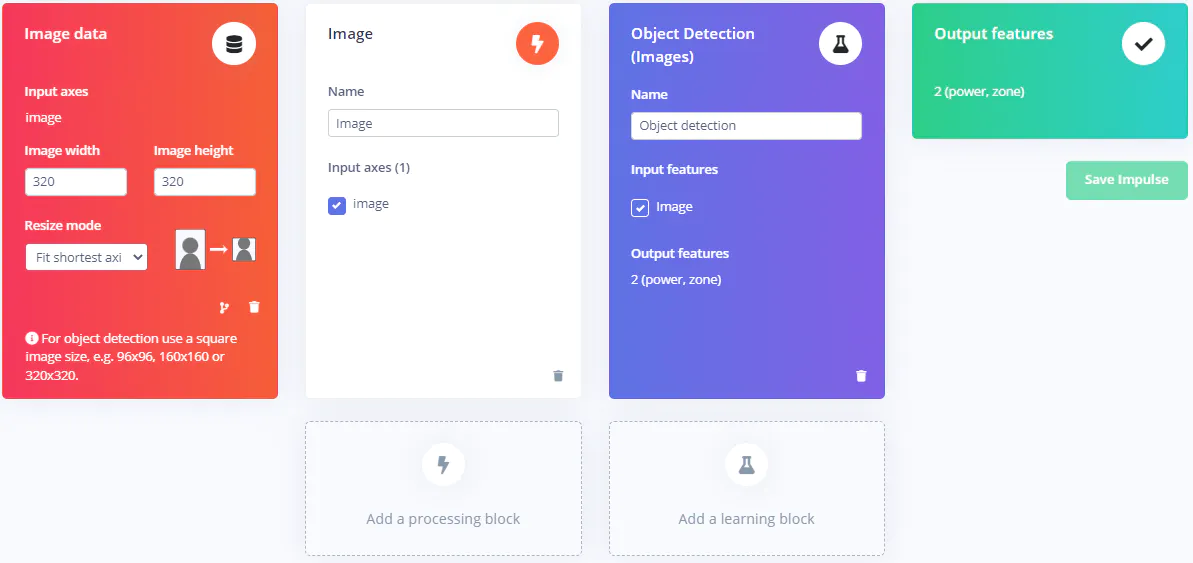

The most important part of the entire process is designing the impulse. This is what defines the end-to-end processing of the data, from raw images all the way to the predicted locations of detected objects. Preprocessing steps were added to reduce the size of the input images, which decreases the overall computational workload, and to make the images square, which is a requirement of the chosen machine learning model. The preprocessed data was then forwarded into TI’s fork of the YOLOX object detection algorithm. Using the insights provided by this model, it is possible to determine which, and how many, lights are illuminated on the heating zone controller.
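
Turning the model's output into "which, and how many, lights are on" is mostly bookkeeping. The sketch below assumes each detection is a dictionary with a `label` and a confidence `value`; that shape, the label names, and the 0.5 threshold are assumptions for illustration rather than details from the project.

```python
from collections import Counter


def lit_lights(detections, min_confidence=0.5):
    """Count confident detections per label, ignoring low-confidence boxes."""
    return Counter(
        d["label"] for d in detections if d["value"] >= min_confidence
    )


# Hypothetical detections for a single frame.
sample = [
    {"label": "zone1", "value": 0.97},
    {"label": "pump", "value": 0.91},
    {"label": "zone2", "value": 0.12},  # below threshold, treated as off
]

counts = lit_lights(sample)
print(sorted(counts))        # ['pump', 'zone1']
print(sum(counts.values()))  # 2
```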

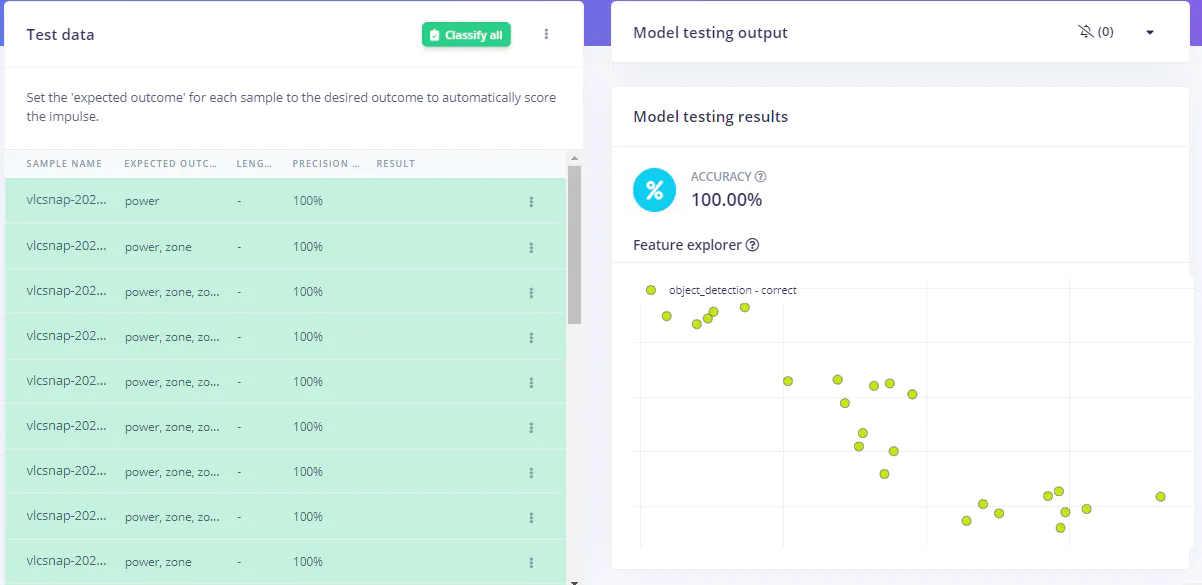

The training process was initiated after finishing the impulse, and after a short time metrics were displayed to help assess how well the model was performing. The precision score had already reached 100%, which leaves no room for improvement. To validate that finding, the more stringent model testing tool was also used, and it confirmed the 100% precision score. Under some circumstances this might be a bit suspect, but the problem is quite constrained: the model only needs to recognize lights on the same panel it was trained on, so the result seems quite reasonable.

The SK-TDA4VM is fully supported by Edge Impulse, so deploying the impulse to the hardware was a breeze. Selecting the “TI TIDL-RT library” option from the deployment tool in Edge Impulse Studio is all that is needed to build a downloadable ZIP file that will have you up and running the pipeline in no time.

After all the pieces of the puzzle were in place, Lutz took the system for a spin. He found inference times hovering in the neighborhood of 50 milliseconds, which is quite good for an object detection model running on an edge computing device. That works out to roughly 20 frames per second, which should be more than sufficient for most equipment monitoring use cases. And most importantly, the model was accurately identifying the heating zone controller lights as they came on.
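
The frame rate figure follows directly from the inference time. As a quick check, assuming inference is the only per-frame cost (video decoding and other overhead would lower the real number):

```python
def frames_per_second(inference_ms):
    """Upper bound on throughput if inference is the only per-frame cost."""
    return 1000.0 / inference_ms


print(frames_per_second(50))  # 20.0
```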

Keeping an eye on heating zone controllers might not be your thing, but chances are, there is something taking up too much of your time that could be automated away with computer vision and machine learning. Do yourself a favor and check out Lutz’s project documentation to give yourself a head start in eliminating that pain point with your own creation. Go ahead, what are you waiting for?
