Disposable plastic, aluminum, and glass bottles and cans are a convenient and very low-cost way to package the goods we use every day. Unfortunately, heavy reliance on this kind of cheap, disposable packaging has a downside: it is easy to toss aside with little thought. In one survey, 75% of adults admitted to having littered in the past five years. This behavior comes with heavy consequences. Nine billion tons of litter makes its way into the oceans each year, and nearly 11 billion dollars are spent each year by US taxpayers to clean up inappropriately discarded junk.

A relatively recent trend that aims to help with these problems is the installation of reverse vending machines. These machines accept empty bottles or cans and dispense some type of reward in exchange. A reverse vending machine is much more challenging to build than a traditional vending machine, however, and that is slowing adoption. The machine needs to recognize the material that the inserted object is made of in order to sort it correctly for recycling and to dispense an appropriate reward.

The engineering wizards of Zalmotek have built a reverse vending machine of their own, and have firsthand experience with just how complex and prone to error these machines are. A whole array of sensors, such as capacitive, reflective light, and inductive sensors, is needed to detect the various materials that a user may insert. Even with this range of sensing options, Zalmotek found that the material was recognized correctly only about 70% of the time. That is a reasonable start, but it is not accurate enough for deployment in the real world.

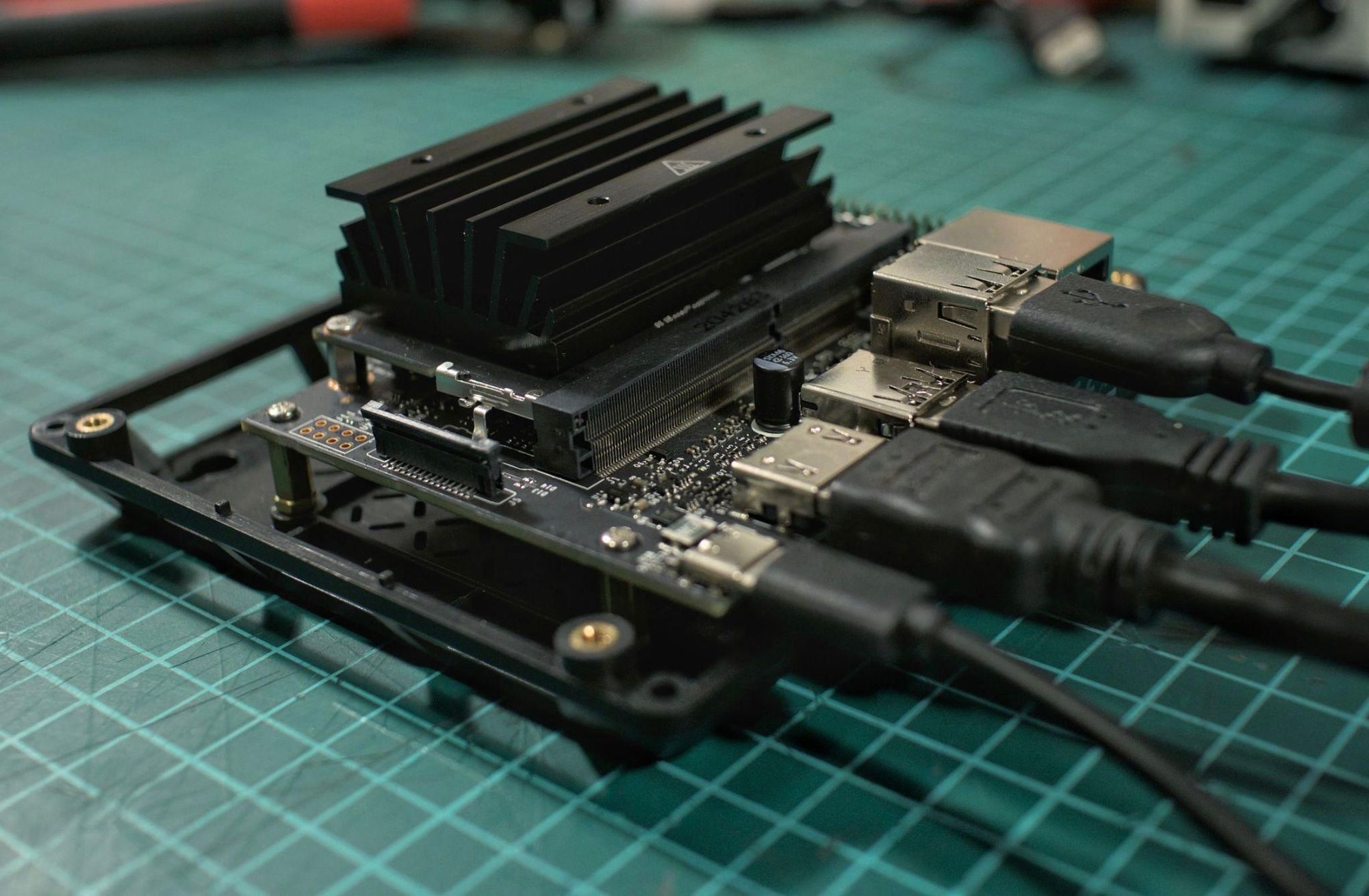

Thinking outside the vending machine box, the engineers devised a new approach to material recognition that only requires a single sensor, yet offers better performance than traditional methods. Their computer vision-based approach uses the powerful but inexpensive Jetson Nano 2GB Developer Kit and a Raspberry Pi Camera Module V2. This edge computing powerhouse sports a 128-core NVIDIA Maxwell GPU for parallelizing machine learning algorithms, and as you may well have guessed, 2GB of RAM. To construct a machine learning model to classify bottle materials, Zalmotek used Edge Impulse Studio.

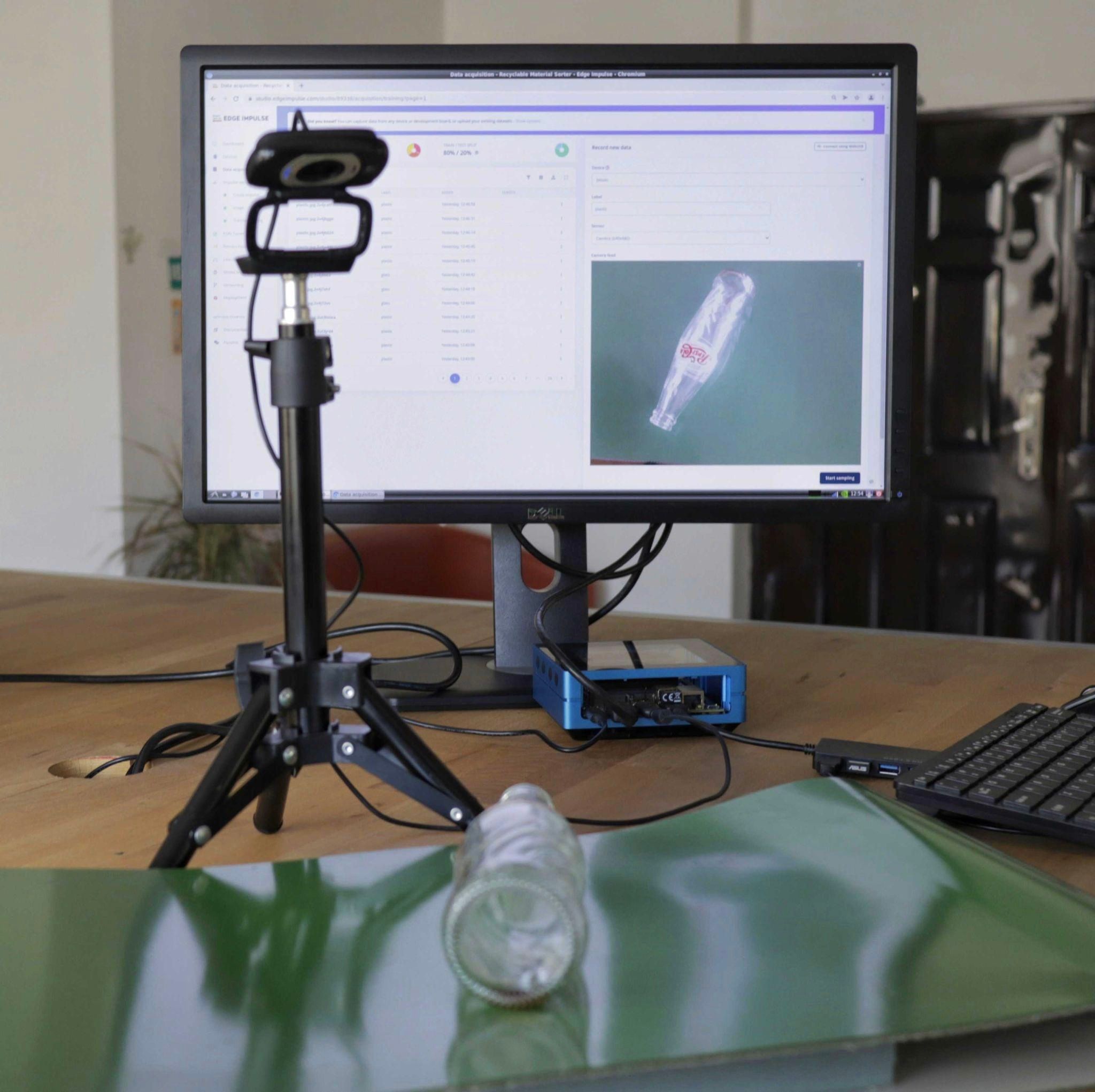

Following a quick tutorial, Zalmotek set up the Jetson to connect directly to Edge Impulse. This makes it simple to collect and upload training data, and also to deploy the Edge Impulse model back to the device after it has been built and trained in the Studio. Next, Zalmotek used the Jetson to capture images of bottles made of different materials, at different angles, and under various lighting conditions. Image captures were kicked off from the data acquisition tools, where the class name of each group of images could also be specified. All of the data was automatically uploaded to the Studio.

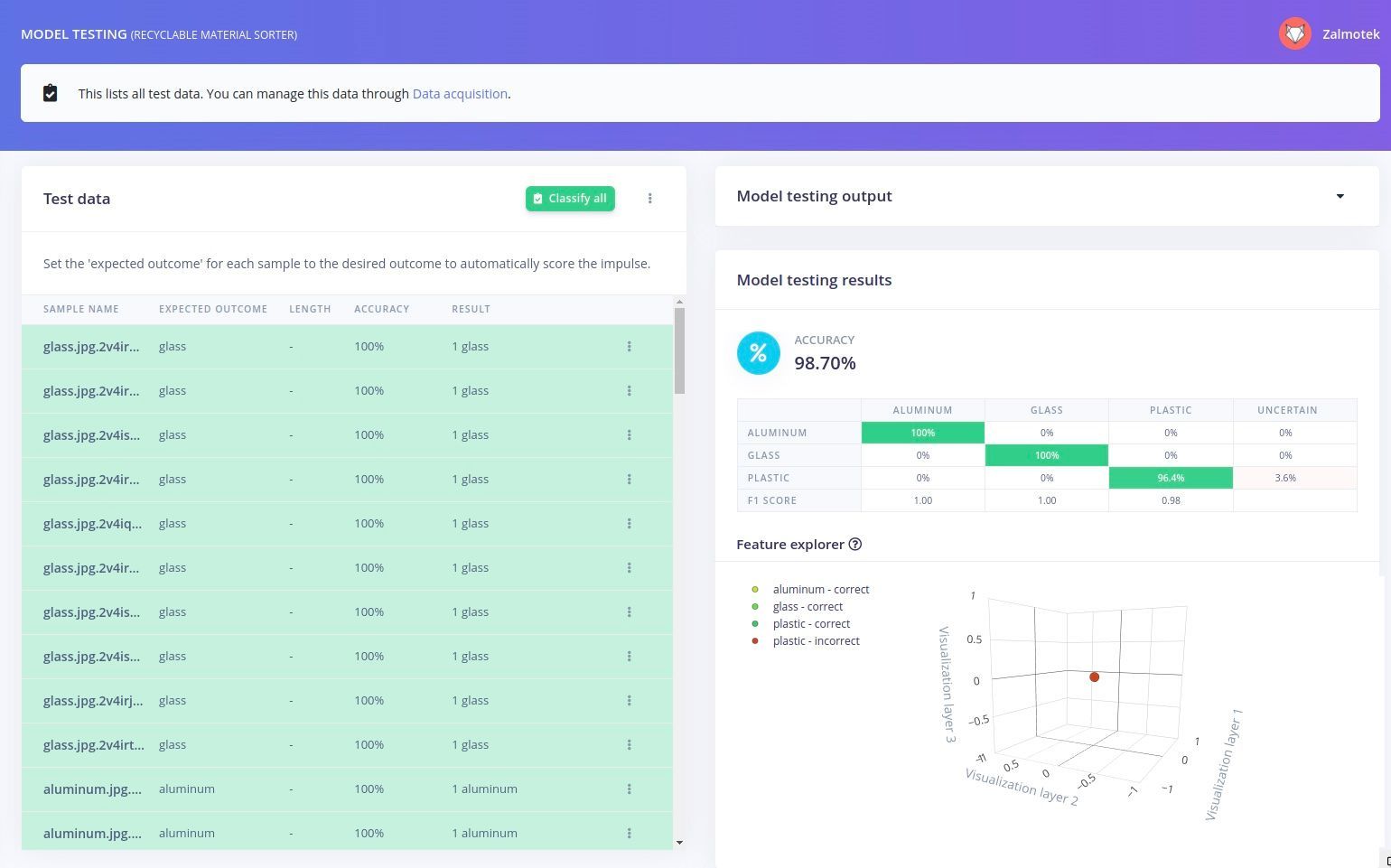

As the next step in the pipeline, the images were preprocessed to resize them and to extract their most relevant features. These features were then passed into a neural network for classification. A pretrained MobileNetV2 96x96 0.35 model (96x96 pixel input, 0.35 width multiplier) was used to take advantage of the knowledge already encoded within it. Through transfer learning, it was then further trained to recognize the image classes in the training data. The resulting model is capable of recognizing plastic, glass, and aluminum.
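
To make the resize step more concrete, here is a minimal pure-Python sketch of what image preprocessing for a 96x96 model input might look like. It is not Zalmotek's or Edge Impulse's actual implementation: the center crop, the nearest-neighbour resize, the 0.0 to 1.0 scaling, and every function name below are assumptions made for illustration, since the article does not state the resize mode or scaling that was configured in the Studio.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]            # 8-bit RGB values
Image = List[List[Pixel]]               # rows of pixels
NormImage = List[List[Tuple[float, ...]]]

TARGET_SIZE = 96  # side length implied by the "MobileNetV2 96x96" model name


def center_crop_square(img: Image) -> Image:
    """Keep the largest centered square (assumed crop strategy)."""
    height, width = len(img), len(img[0])
    side = min(height, width)
    top = (height - side) // 2
    left = (width - side) // 2
    return [row[left:left + side] for row in img[top:top + side]]


def resize_nearest(img: Image, size: int = TARGET_SIZE) -> Image:
    """Nearest-neighbour resize of a square image to size x size."""
    src = len(img)
    return [
        [img[(y * src) // size][(x * src) // size] for x in range(size)]
        for y in range(size)
    ]


def normalize(img: Image) -> NormImage:
    """Scale 8-bit channel values into the 0.0 to 1.0 range."""
    return [[tuple(c / 255.0 for c in px) for px in row] for row in img]


def preprocess(img: Image) -> NormImage:
    """Crop, resize, and scale a camera frame for the classifier input."""
    return normalize(resize_nearest(center_crop_square(img)))
```

A camera frame of any resolution goes in, and a 96x96 grid of scaled RGB values comes out, which is the shape a model of this type expects as input.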

After training, a separate dataset that had been held out of the training process was used to check how well the model classifies materials. The classification accuracy was found to be 98.7%, which beats the roughly 70% achieved with sensors alone by a large margin. It is also worth noting that this result was achieved using a training dataset of only 300 images.
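
For readers who want to see how a figure like this is computed, the sketch below shows classification accuracy on a held-out set, along with a simple tally of which materials get confused with which. The function names and the labels in the usage note are made up for illustration and are not Zalmotek's data. Edge Impulse Studio reports these numbers itself, so this only illustrates the arithmetic.

```python
from collections import Counter
from typing import Dict, List, Tuple


def accuracy(true_labels: List[str], predicted: List[str]) -> float:
    """Fraction of held-out samples whose predicted label matches the true one."""
    if len(true_labels) != len(predicted):
        raise ValueError("label lists must have the same length")
    if not true_labels:
        raise ValueError("cannot compute accuracy on an empty test set")
    correct = sum(t == p for t, p in zip(true_labels, predicted))
    return correct / len(true_labels)


def confusion_counts(
    true_labels: List[str], predicted: List[str]
) -> Dict[Tuple[str, str], int]:
    """Count each (true label, predicted label) pair."""
    return dict(Counter(zip(true_labels, predicted)))
```

As a toy example, with true labels `["plastic", "glass", "aluminum", "glass"]` and predictions `["plastic", "glass", "aluminum", "plastic"]`, three of four samples match, so `accuracy` returns 0.75 and `confusion_counts` shows one glass bottle mistaken for plastic.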

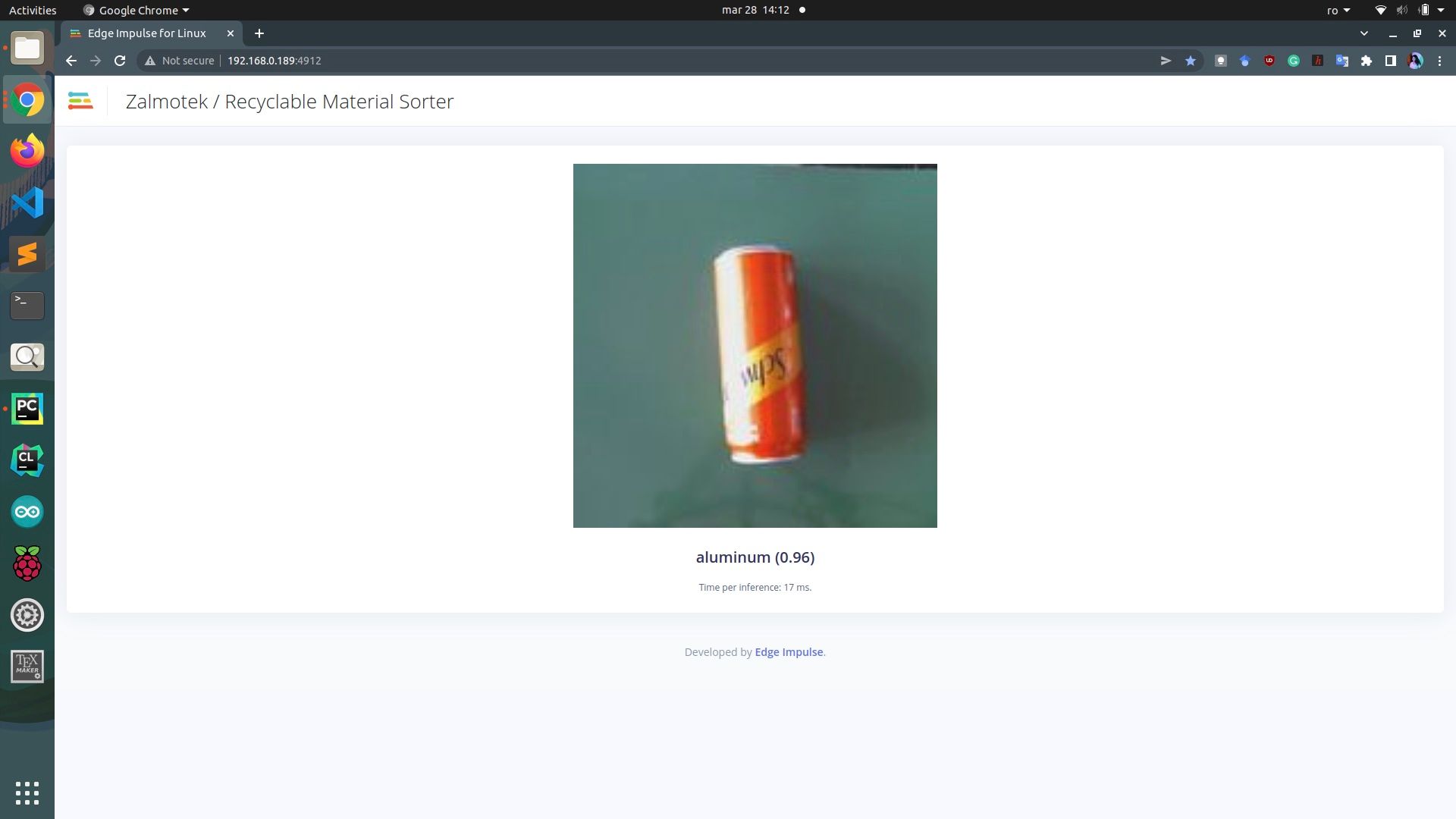

Since the Jetson is already linked to Edge Impulse, the model can be deployed by running a single command in the terminal. A local URL can then be opened in a web browser to run image classifications in real time. Zalmotek tested their model using this tool and found it to work quite well, which is no big surprise given the classification accuracy of the model.
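
Once classifications are available on the device, the machine still has to act on them. The sketch below shows one way that step could work, assuming the classifier returns a mapping from class label to confidence score. The label names, bin numbers, confidence threshold, and the `choose_bin` function are all hypothetical; the article does not describe how Zalmotek wires the model output to the sorting hardware.

```python
from typing import Dict, Optional

# Hypothetical mapping from class label to a physical bin number.
BINS = {"plastic": 1, "glass": 2, "aluminum": 3}

# Hypothetical threshold below which the item is rejected rather than sorted.
MIN_CONFIDENCE = 0.8


def choose_bin(
    scores: Dict[str, float], min_confidence: float = MIN_CONFIDENCE
) -> Optional[int]:
    """Return the bin for the top-scoring label, or None to reject the item."""
    if not scores:
        return None
    label, confidence = max(scores.items(), key=lambda item: item[1])
    if confidence < min_confidence or label not in BINS:
        return None
    return BINS[label]
```

Rejecting low-confidence predictions, rather than always trusting the top label, is a design choice that trades a few returned bottles for fewer items sorted into the wrong bin.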

The sorting of materials that takes place inside the reverse vending machine saves time and money at recycling centers and ensures that the materials are actually recycled, rather than ending up in a landfill. And with the improvements in accuracy, perhaps these benefits will be realized more frequently through more widespread adoption of these machines. More details about Zalmotek’s solution are available on the project page.
