Getting started with Azure Percept

Deploying new applications to edge Internet of Things (IoT) devices quickly becomes difficult when the network spans hundreds or even thousands of devices. This challenge is what led Microsoft to create the Azure Percept ecosystem, which combines flexible cloud computing with secure endpoints.

The Percept Development Kit itself features the following:

- An NXP i.MX 8M processor

- Wi-Fi, Bluetooth, and Ethernet connectivity

- 2x USB-A 3.0 and 1x USB-C ports

- Close integration with the Azure IoT ecosystem

Together, these make a highly connected and capable system for machine learning at the edge.

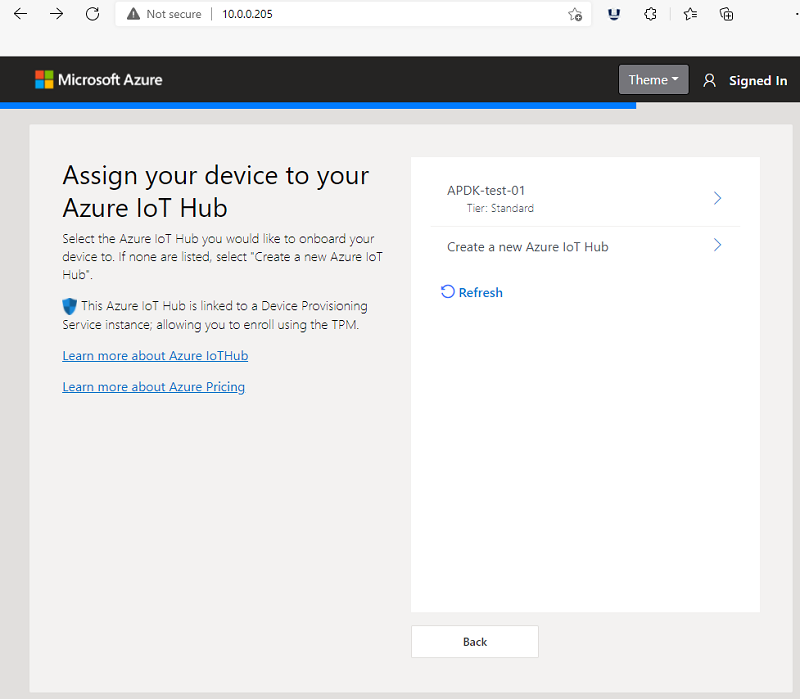

Setting up the Azure IoT Hub and the Percept hardware

To start, a new resource group and IoT Hub were created with the Azure command-line interface (CLI). The Percept DK was then added with the first-time setup utility, and its connection string was retrieved via the Azure CLI.
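The connection string retrieved in this step is a semicolon-separated list of `Key=value` fields. The minimal Python sketch below splits one apart. It assumes a device that uses symmetric-key authentication, so the string carries `HostName`, `DeviceId`, and `SharedAccessKey`. The `parse_connection_string` helper and the sample values are hypothetical; in practice the Azure IoT device SDKs parse these strings for you.

```python
from __future__ import annotations

# Fields expected for a symmetric-key device (an assumption; X.509 devices differ).
REQUIRED_FIELDS = ("HostName", "DeviceId", "SharedAccessKey")


def parse_connection_string(conn_str: str) -> dict[str, str]:
    """Split an IoT Hub device connection string into its key/value fields."""
    fields: dict[str, str] = {}
    for segment in conn_str.strip().split(";"):
        if not segment:
            continue  # tolerate a trailing semicolon
        # Split on the first "=" only: base64 keys often end in "=" padding.
        key, sep, value = segment.partition("=")
        if not sep:
            raise ValueError(f"malformed segment: {segment!r}")
        fields[key] = value
    missing = [name for name in REQUIRED_FIELDS if name not in fields]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    return fields


# Hypothetical placeholder values, not real credentials.
sample = (
    "HostName=example-hub.azure-devices.net;"
    "DeviceId=percept-dk;"
    "SharedAccessKey=bm90LWEtcmVhbC1rZXk="
)
parsed = parse_connection_string(sample)
print(parsed["DeviceId"])  # percept-dk
```

Splitting with `str.partition` rather than `split("=")` is the key design choice here, since a plain split would cut the key's trailing padding off.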

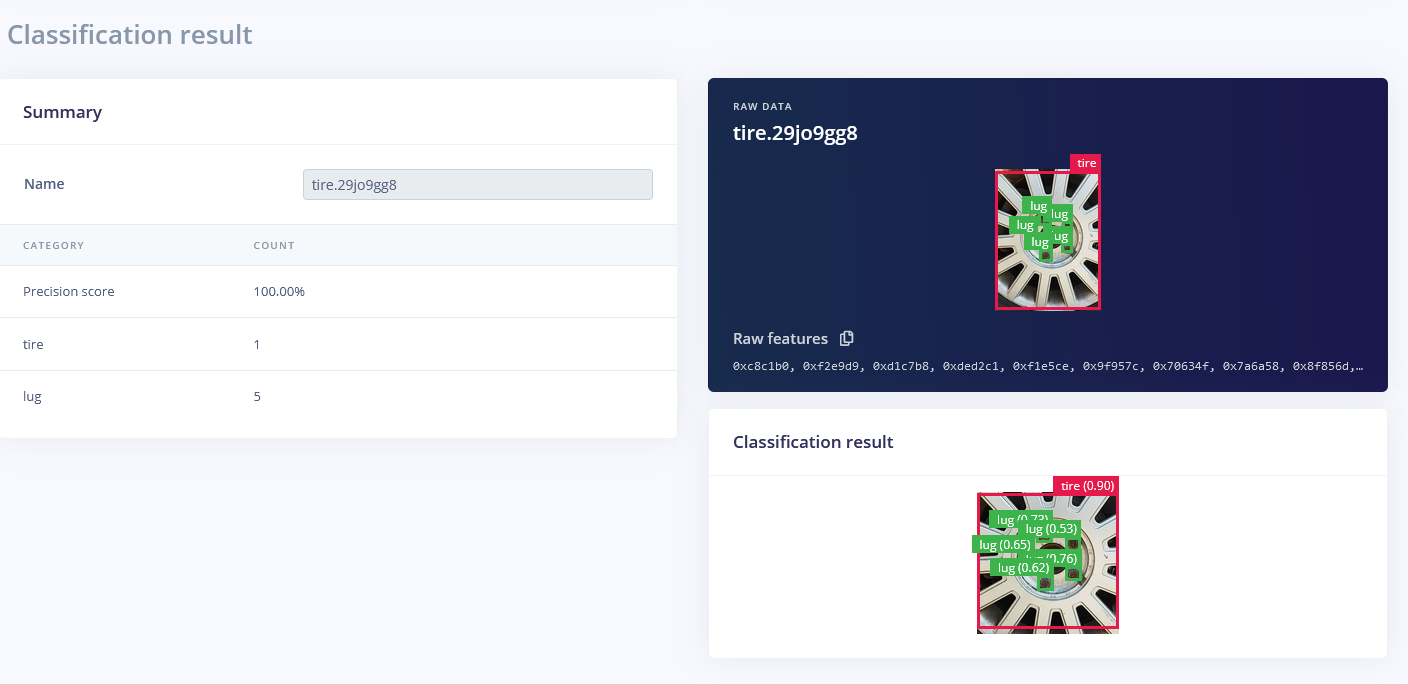

Deploying models to the Percept

The machine learning model for this project was created with the object detection feature of Edge Impulse Studio. Nearly 150 images were captured and labeled, then resized to 320x320 pixels and used to train a model with two labels: “lug” and “tire”. The resulting model achieved an accuracy of 88.89% on the test dataset. The project, which is available on GitHub, contains a simple Python script based on an earlier iteration of the project built for a Raspberry Pi 4.
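Once deployed, the model reports bounding boxes tagged “lug” or “tire”, and the script's job is essentially to count them. The sketch below counts detections per label. It assumes each detection is a dict with `label` and `value` (confidence) keys, the shape the Edge Impulse Linux Python SDK examples iterate over, although the project's own script may handle results differently. The `count_labels` name, the 0.5 confidence threshold, and the sample detections are hypothetical.

```python
from collections import Counter
from typing import Any, Iterable, Mapping


def count_labels(
    bounding_boxes: Iterable[Mapping[str, Any]], min_confidence: float = 0.5
) -> Counter:
    """Count detections per label, skipping boxes below min_confidence."""
    return Counter(
        str(bb["label"])
        for bb in bounding_boxes
        if float(bb["value"]) >= min_confidence  # "value" holds the model confidence
    )


# Hypothetical detections for one 320x320 frame.
detections = [
    {"label": "tire", "value": 0.93, "x": 40, "y": 32, "width": 240, "height": 248},
    {"label": "lug", "value": 0.81, "x": 120, "y": 96, "width": 16, "height": 16},
    {"label": "lug", "value": 0.77, "x": 184, "y": 104, "width": 16, "height": 16},
    {"label": "lug", "value": 0.64, "x": 152, "y": 176, "width": 16, "height": 16},
    {"label": "lug", "value": 0.31, "x": 96, "y": 160, "width": 16, "height": 16},
]

counts = count_labels(detections)
print(counts["lug"], counts["tire"])  # 3 1
```

The low-confidence box is dropped. Raising or lowering `min_confidence` trades missed lug nuts against false positives.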

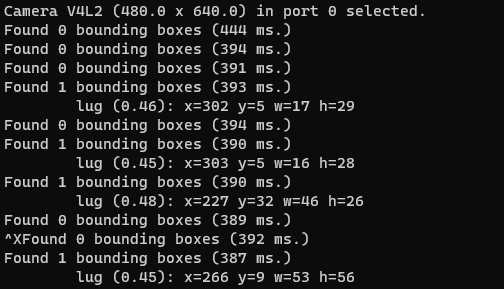

After the script and the .eim model file were transferred to the Percept DK via SFTP, the script was run with the following command:

`$ sudo python3 classify.py <path_to_model.eim> <camera_port_id> -c <LUG_NUT_COUNT>`

Here, `<camera_port_id>` is only required when more than one camera is present, and `<LUG_NUT_COUNT>` is the number of lug nuts that should be attached to the wheel.
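The command takes a model path, an optional camera port ID, and the `-c` lug nut count. As a minimal sketch, the same interface can be expressed with Python's standard `argparse` module. It assumes the camera port is an integer index and that `-c` is mandatory. The `build_parser` name and the `lug_nut_count` destination are hypothetical and are not taken from the project's script.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Argument parser mirroring the classify.py invocation (a sketch)."""
    parser = argparse.ArgumentParser(description="Lug nut counter (sketch)")
    parser.add_argument("model", help="path to the .eim model file")
    parser.add_argument(
        "camera_port_id",
        nargs="?",  # optional positional: only needed with more than one camera
        type=int,
        default=None,
        help="camera port ID (assumed to be an integer index)",
    )
    parser.add_argument(
        "-c",
        dest="lug_nut_count",
        type=int,
        required=True,  # assumption: the expected count must always be given
        help="number of lug nuts that should be attached to the wheel",
    )
    return parser


args = build_parser().parse_args(["model.eim", "-c", "5"])
print(args.model, args.camera_port_id, args.lug_nut_count)  # model.eim None 5
```

Because `camera_port_id` uses `nargs="?"`, both `classify.py model.eim -c 5` and `classify.py model.eim 1 -c 5` parse cleanly.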

Viewing object recognition output

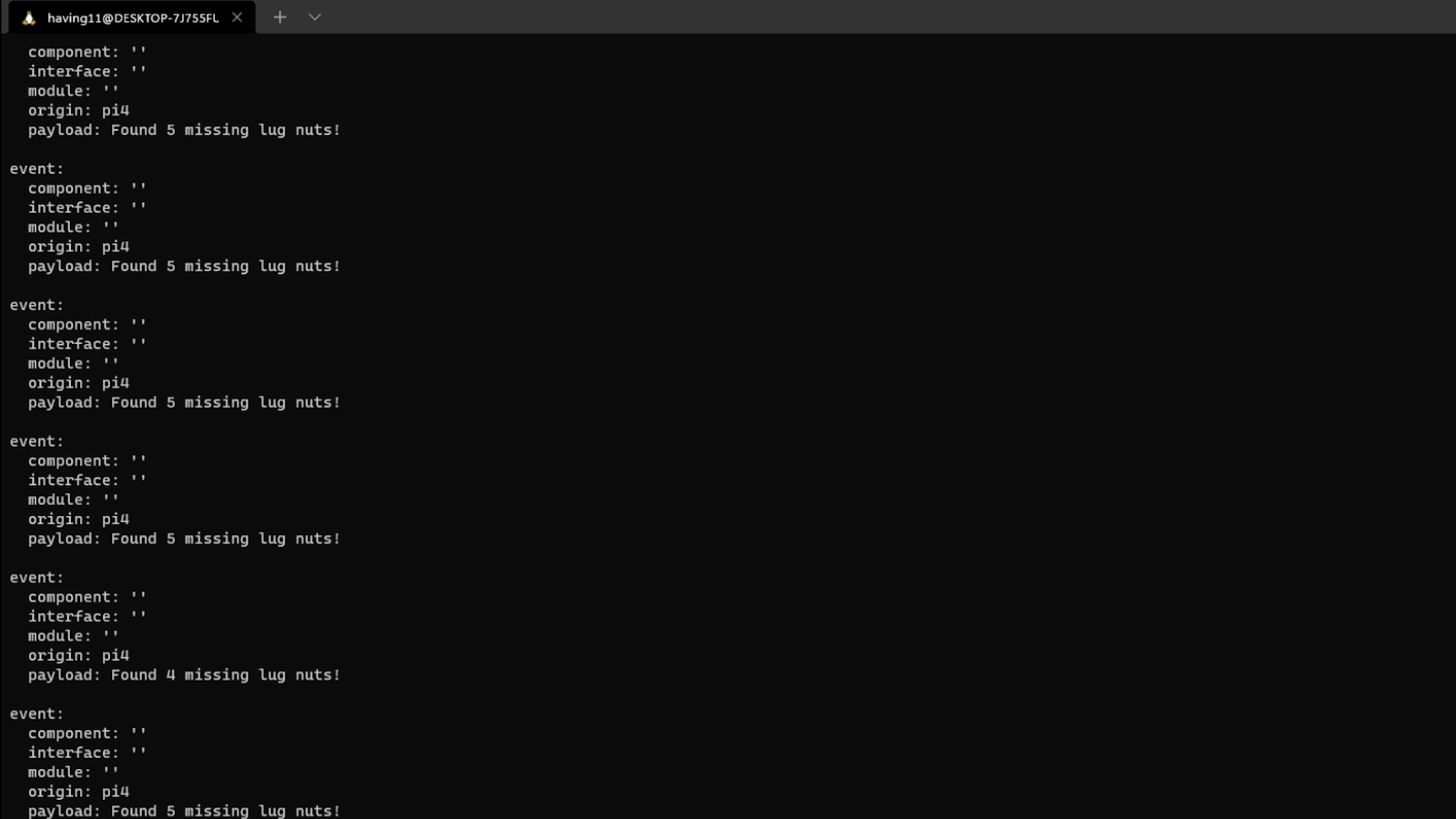

To view any new data published from the device, run the following command (`monitor-events` is provided by the `azure-iot` extension for the Azure CLI):

`$ az iot hub monitor-events --hub-name <your IoT Hub name> --output table`

For example, if a wheel should have five lug nuts but only three are detected on a tire, a message is sent stating that the remaining two are missing. Running machine learning inference on the device itself and sending a message only when something is wrong saves a large amount of bandwidth compared with streaming image data to the cloud for inference.
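The rule is to send a message only when a tire is visible and lug nuts are missing. It can be sketched as a pure function that returns either a JSON payload or nothing. The `build_alert` name and the JSON field names are hypothetical, and the project's script may format its messages differently.

```python
import json
from typing import Optional


def build_alert(tire_detected: bool, lug_count: int, expected: int) -> Optional[str]:
    """Return a JSON alert when a tire is visible but lug nuts are missing.

    Returns None when there is nothing to report, so no message is sent.
    """
    if not tire_detected or lug_count >= expected:
        return None
    return json.dumps(
        {
            "lug_nuts_expected": expected,
            "lug_nuts_detected": lug_count,
            "lug_nuts_missing": expected - lug_count,
        }
    )


print(build_alert(True, 3, 5))
# {"lug_nuts_expected": 5, "lug_nuts_detected": 3, "lug_nuts_missing": 2}
print(build_alert(True, 5, 5))  # None
```

On the device, a non-`None` result could then be sent to IoT Hub, for example with `IoTHubDeviceClient.send_message` from the `azure-iot-device` Python SDK. Frames with nothing wrong produce no traffic at all, which is where the bandwidth savings come from.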

Next steps

Imagine using object detection for industrial tasks such as quality control on an assembly line, detecting ripe fruit among rows of crops, spotting machinery malfunctions, or running inference on remote, battery-powered devices. With Edge Impulse, hardware like the Azure Percept Development Kit, and Microsoft Azure IoT Hub, you can design custom models and deploy them across your entire fleet while authenticating every device with built-in security.

You can set up an individual identity and credentials for each connected device, which helps keep both cloud-to-device and device-to-cloud messages confidential. You can also revoke access rights for specific devices, move code and services between the cloud and the edge, and benefit from advanced analytics on devices that run offline or with intermittent connectivity. If you want to scale your operation further and see a complete dashboard of the device fleets you manage, you can also send IoT alerts from Azure IoT Central directly to Microsoft’s Connected Field Service.
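Per-device credentials in IoT Hub are commonly symmetric keys from which short-lived shared access signature (SAS) tokens are derived. The Azure IoT device SDKs generate these tokens for you. This standard-library sketch only illustrates the token format Microsoft documents for device-scoped IoT Hub tokens. The host name, device ID, and key are placeholders, and `generate_device_sas_token` is a hypothetical helper, not part of any SDK.

```python
import base64
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import quote_plus, urlencode


def generate_device_sas_token(
    host_name: str,
    device_id: str,
    device_key: str,
    ttl_seconds: int = 3600,
    now: Optional[float] = None,
) -> str:
    """Build a device-scoped IoT Hub SAS token from a base64 symmetric key."""
    resource_uri = f"{host_name}/devices/{device_id}"
    expiry = int((time.time() if now is None else now) + ttl_seconds)
    # The signed string is the URL-encoded resource URI, a newline, then the expiry.
    string_to_sign = f"{quote_plus(resource_uri)}\n{expiry}"
    digest = hmac.new(
        base64.b64decode(device_key), string_to_sign.encode("utf-8"), hashlib.sha256
    ).digest()
    signature = base64.b64encode(digest).decode("ascii")
    # urlencode percent-encodes sr and sig ("/" -> %2F, "+" -> %2B, "=" -> %3D).
    return "SharedAccessSignature " + urlencode(
        {"sr": resource_uri, "sig": signature, "se": str(expiry)}
    )


# Placeholder values; the key is base64 for the bytes b"not-a-real-key".
token = generate_device_sas_token(
    "example-hub.azure-devices.net",
    "percept-dk",
    "bm90LWEtcmVhbC1rZXk=",
    now=1_700_000_000,
)
print(token)
```

Each token is signed with a single device's key and expires after `ttl_seconds`. Regenerating that device's key, or disabling the device, therefore cuts off only that device and leaves the rest of the fleet untouched.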

Feel free to fork the code for this project on GitHub or add to it. The complete project is available here.
