For most of us, driving is such an ordinary part of everyday life that we forget we are sitting in a steel box racing along at 70 miles per hour. Far too often, the comfort and familiarity we have with driving leads us to take our eyes off the road to check smartphone messages or engage in other risky behaviors. Sometimes it is wise to step back for a moment and remind ourselves of the dangers that we face, and the harm that we can inflict on others, by ignoring even the most basic safety measures while we are behind the wheel. After all, bad driving habits are one of the major factors involved in the estimated 42,915 fatalities that occurred in motor vehicle crashes in the US in 2021. And smartphone use alone is believed to contribute to 1.6 million crashes each year.

As they say, old habits die hard, so one of the most effective ways to eliminate bad driving habits may be to nip them in the bud. That is the guiding principle behind a potential solution to this problem that was recently developed by engineers Bryan and Brayden Staley. They have built an electronic assistant called 10n2 (get it? “10 and two”) to teach student drivers the best practices as they hone their driving skills. With the help of Edge Impulse, their device uses machine learning to detect risky behaviors and sounds an alarm in real time to make the driver aware that they are in the danger zone.

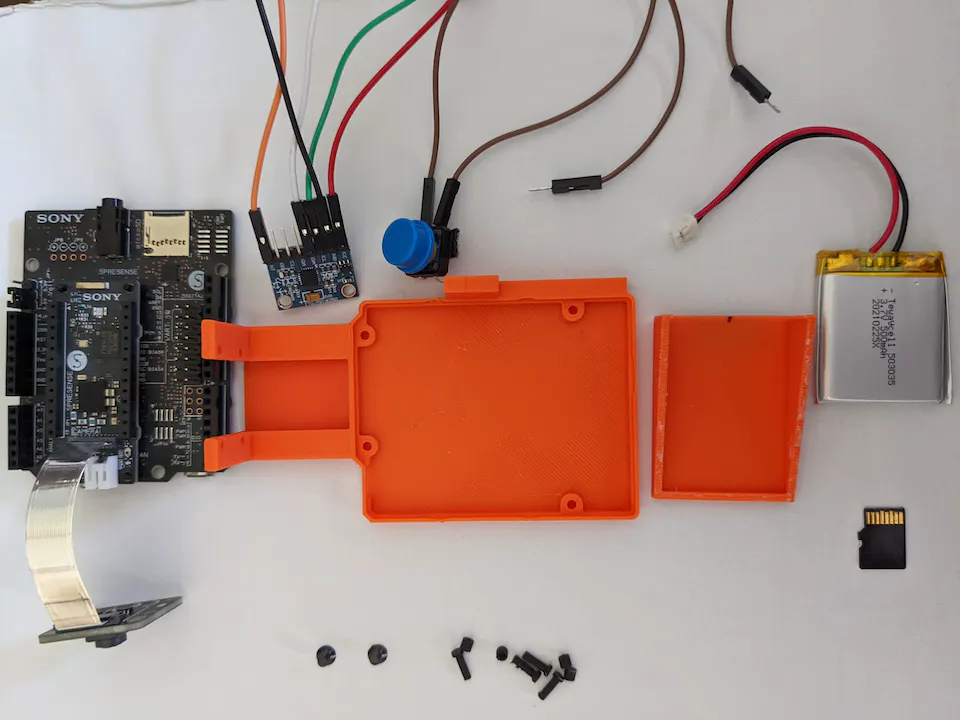

After considering their options, the team settled on a Sony Spresense development board to power their creation. Its six-core microcontroller is very well suited to running demanding machine learning algorithms. A Spresense camera was also chosen to allow the device to keep an eye on the driver. Rounding out the core of the device was a six-axis inertial measurement unit (IMU), which served to detect certain risky driving behaviors that could not easily be recognized visually. To alert the driver to concerns detected by the device, a speaker was hooked up via a standard 3.5 mm jack. The components were assembled and placed in a custom 3D-printed case designed to be mounted on the roof of the car with Velcro strips, giving it a good view of the driver.

The goal was to use image data from the camera as the input to a neural network classifier to detect if the driver’s hands are not at the correct positions on the wheel, or if they are using their smartphone. Additional bad behaviors (tight turns, short stops, fast accelerations, and pothole hopping) were recognized using IMU measurements. Before any of this could happen, training data needed to be collected.

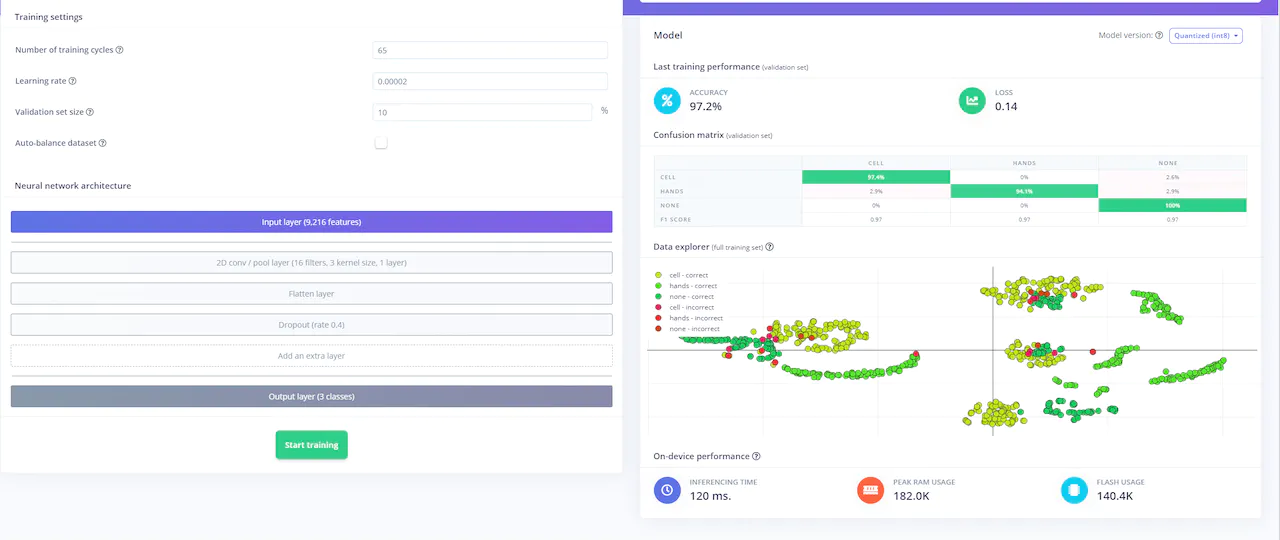

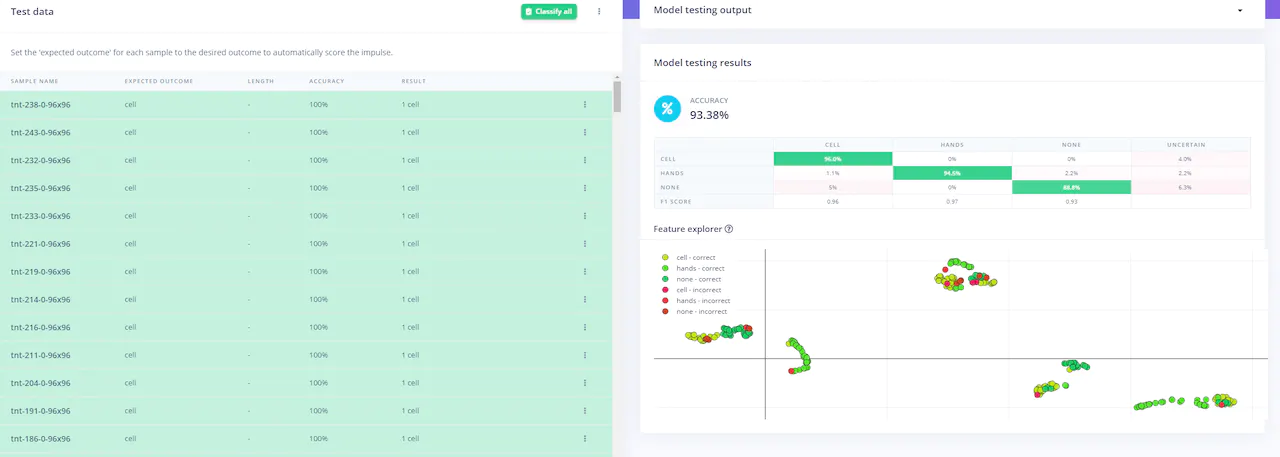

Images were gathered from the Spresense camera and uploaded to Edge Impulse where they were assigned labels. This training data was fed into an impulse that preprocessed the images and then classified them with a convolutional neural network. After the training process had finished, an average classification accuracy of better than 93% was observed when validating the model against previously unseen images.
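
For readers curious about what "validating against previously unseen images" boils down to, here is a minimal sketch in Python. The label names (`hands_ok`, `hands_off`, `phone`) and the sample data are assumptions made up for illustration; they are not the project's actual classes or results, and Edge Impulse reports these figures for you.

```python
from collections import Counter


def accuracy(true_labels, predicted_labels):
    """Fraction of held-out images whose predicted label matches the true one."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    if not true_labels:
        raise ValueError("need at least one labelled image")
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)


def per_class_accuracy(true_labels, predicted_labels):
    """Accuracy broken down by true class, to spot a class the model struggles with."""
    totals = Counter(true_labels)
    hits = Counter(t for t, p in zip(true_labels, predicted_labels) if t == p)
    return {label: hits[label] / totals[label] for label in totals}


# Hypothetical held-out labels and predictions (illustrative only).
truth = ["hands_ok", "hands_ok", "phone", "hands_off", "phone"]
preds = ["hands_ok", "hands_off", "phone", "hands_off", "phone"]
overall = accuracy(truth, preds)
by_class = per_class_accuracy(truth, preds)
```

The per-class breakdown is worth a look alongside the headline number, since a high average can hide one behavior that the model rarely catches.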

This result was more than acceptable for a proof of concept, so the team moved on and deployed the machine learning pipeline to the physical device. This was accomplished by exporting it as a self-contained C++ library that could be incorporated into a larger project containing additional logic. That project leveraged the Edge Impulse model to classify images, and performed some basic statistical analyses to interpret the IMU data.
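
The article does not spell out which statistics the team computed on the IMU data, so the following is only a sketch of one plausible approach: summarize a short window of accelerometer samples and compare the summary against thresholds. It is written in Python for readability (the real firmware is C++), it uses only the accelerometer half of the six-axis IMU, and every threshold and event name below is an assumption rather than a value from the project.

```python
from statistics import mean, pstdev

# Hypothetical thresholds in m/s^2; real values would need tuning on the road.
HARD_BRAKE_MS2 = -4.0   # mean forward acceleration at or below this
FAST_ACCEL_MS2 = 3.5    # mean forward acceleration at or above this
TIGHT_TURN_MS2 = 4.0    # mean absolute lateral acceleration at or above this
POTHOLE_STD_MS2 = 3.0   # spread of vertical acceleration at or above this


def flag_events(window):
    """Flag risky events in one window of (forward, lateral, vertical) samples.

    Samples are assumed to be in m/s^2 with gravity already removed.
    """
    if not window:
        return []
    forward = [s[0] for s in window]
    lateral = [abs(s[1]) for s in window]
    vertical = [s[2] for s in window]

    events = []
    if mean(forward) <= HARD_BRAKE_MS2:
        events.append("short_stop")
    if mean(forward) >= FAST_ACCEL_MS2:
        events.append("fast_acceleration")
    if mean(lateral) >= TIGHT_TURN_MS2:
        events.append("tight_turn")
    if pstdev(vertical) >= POTHOLE_STD_MS2:
        events.append("pothole")
    return events


# Ten samples of steady, hard braking.
braking_window = [(-5.0, 0.1, 0.0)] * 10
braking_events = flag_events(braking_window)
```

Averaging over a window rather than reacting to single samples keeps one noisy reading from setting off the alarm, at the cost of a slightly delayed warning.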

When the finished device is installed in a car, it will sound an audible warning when any bad behaviors are observed, prompting the driver to get back to safe driving practices. Data is also logged so that it can be superimposed onto a map for reporting purposes after the fact. This report calculates a score for each of various portions of the trip, summarizing the level of risky behavior observed. This could be used by both drivers and driving instructors to gain insights and provide action items for future improvements.
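
How the report turns logged events into scores is not described, so here is one simple way it could work, sketched in Python under stated assumptions: the trip is split into fixed-length segments, each segment starts at 100 points, and every logged event subtracts a penalty. The penalty weights, the 500 m segment length, and the event names are all hypothetical.

```python
import math

# Hypothetical penalty per event; unlisted events fall back to DEFAULT_PENALTY.
PENALTY = {"phone": 20, "hands_off": 10, "short_stop": 5, "tight_turn": 5}
DEFAULT_PENALTY = 5


def score_segments(events, trip_length_m, segment_length_m=500.0):
    """Return {segment_index: score} for a trip.

    events is an iterable of (distance_from_start_m, event_name) pairs.
    Every segment starts at 100 and cannot drop below 0.
    """
    n_segments = max(1, math.ceil(trip_length_m / segment_length_m))
    scores = {segment: 100 for segment in range(n_segments)}
    for distance_m, name in events:
        # Clamp so an event logged at the very end lands in the last segment.
        segment = min(int(distance_m // segment_length_m), n_segments - 1)
        penalty = PENALTY.get(name, DEFAULT_PENALTY)
        scores[segment] = max(0, scores[segment] - penalty)
    return scores


# Illustrative log: two events early on, one in the middle, a clean final stretch.
trip_log = [(100.0, "phone"), (120.0, "short_stop"), (700.0, "hands_off")]
trip_scores = score_segments(trip_log, trip_length_m=1500.0)
```

Scoring by segment rather than for the whole trip makes it easy to color stretches of the route on a map and point an instructor at the exact place where things went wrong.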

Do you know someone who could use a little help behind the wheel? Zoom on over to the project’s website.

Want to see Edge Impulse in action? Schedule a demo today.