Concrete is a strong and durable material, but over time, it can crack for a variety of reasons. Cracks in concrete structures can be a serious problem, potentially leading to structural failure and endangering the safety of the public. For these reasons, inspecting concrete structures for cracks is a vital task for engineers and construction professionals. These inspections must occur regularly throughout the life of the structure to detect problems before they turn into a disaster.

Cracks can occur due to age, wear and tear, exposure to extreme weather conditions, and poor construction practices. Identifying these cracks early on can help prevent more significant damage and ensure that the structure remains safe for use. Unfortunately, the inspection practices most frequently used today are inefficient and prone to error.

Visual inspection is the most common and straightforward method of inspection. It involves manual examination of the surface of the structure for any visible cracks, spalling, or other signs of damage. Unfortunately, manual inspections are time-consuming and costly, which makes this method impractical for routine maintenance. Some cracks are also difficult to detect with the naked eye.

The scope of this problem is massive. According to the American Society of Civil Engineers, the average age of infrastructure in the United States is over 50 years. To ensure the safety and integrity of these aging structures, a more accurate and efficient means of inspecting them is sorely needed.

A blueprint for a potential solution to this issue has recently been developed by a machine learning enthusiast named Naveen Kumar. His device demonstrates how surface cracks in concrete structures can be accurately detected and localized in an automated manner. This solution also has the potential to make inspections less costly and much faster than existing techniques.

It is important that the system not only detect that a crack exists, but also determine exactly where the crack is. This would normally be a job for an object detection algorithm, but in this case, that might be a bit too much work. To train such a model, the cracks in each sample image would need to be manually identified by drawing bounding boxes around them. Machine learning-powered tools do exist that can help to automate this process, but cracks in concrete are not the types of shapes that these tools are usually designed to detect, so they would not be expected to perform optimally.

Kumar came up with a clever plan that sidesteps this annotation issue. He decided to build an image classification model with added Global Average Pooling (GAP) layers. This type of model detects the presence of concrete cracks and also provides a heatmap showing where in the image the detected cracks are located. And most importantly, no annotation of the training data is required.
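To see why a GAP layer can yield a heatmap for free, it helps to look at a class activation map, one common way of localizing with a GAP-based classifier. The sketch below is a toy illustration in plain Python, not Kumar's actual model: the feature maps, the `crack_weights` values, and the function names are all made up for the example. The idea is that the same per-channel weights that turn pooled features into a class score can be applied to the unpooled feature maps to show where that score comes from.

```python
def global_average_pool(feature_maps):
    """Average each HxW feature map down to a single number."""
    return [sum(sum(row) for row in fmap) / (len(fmap) * len(fmap[0]))
            for fmap in feature_maps]


def class_activation_map(feature_maps, class_weights):
    """Weight each feature map by the class's weight and sum them per pixel."""
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    return [[sum(w * fmap[y][x] for w, fmap in zip(class_weights, feature_maps))
             for x in range(width)]
            for y in range(height)]


# Two toy 2x2 feature maps standing in for a final convolutional layer.
feature_maps = [
    [[0.0, 4.0], [0.0, 0.0]],  # responds strongly in the top-right corner
    [[1.0, 1.0], [1.0, 1.0]],  # responds uniformly everywhere
]
crack_weights = [0.5, 0.1]     # hypothetical weights for a "crack" class

# Classification path: pool first, then apply the class weights.
pooled = global_average_pool(feature_maps)
score = sum(w * p for w, p in zip(crack_weights, pooled))

# Localization path: apply the same weights before pooling.
heatmap = class_activation_map(feature_maps, crack_weights)
```

In this toy case the heatmap peaks in the top-right cell, and averaging the heatmap gives back the class score. That is why no bounding boxes are needed during training: the localization falls out of the classifier's own weights.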

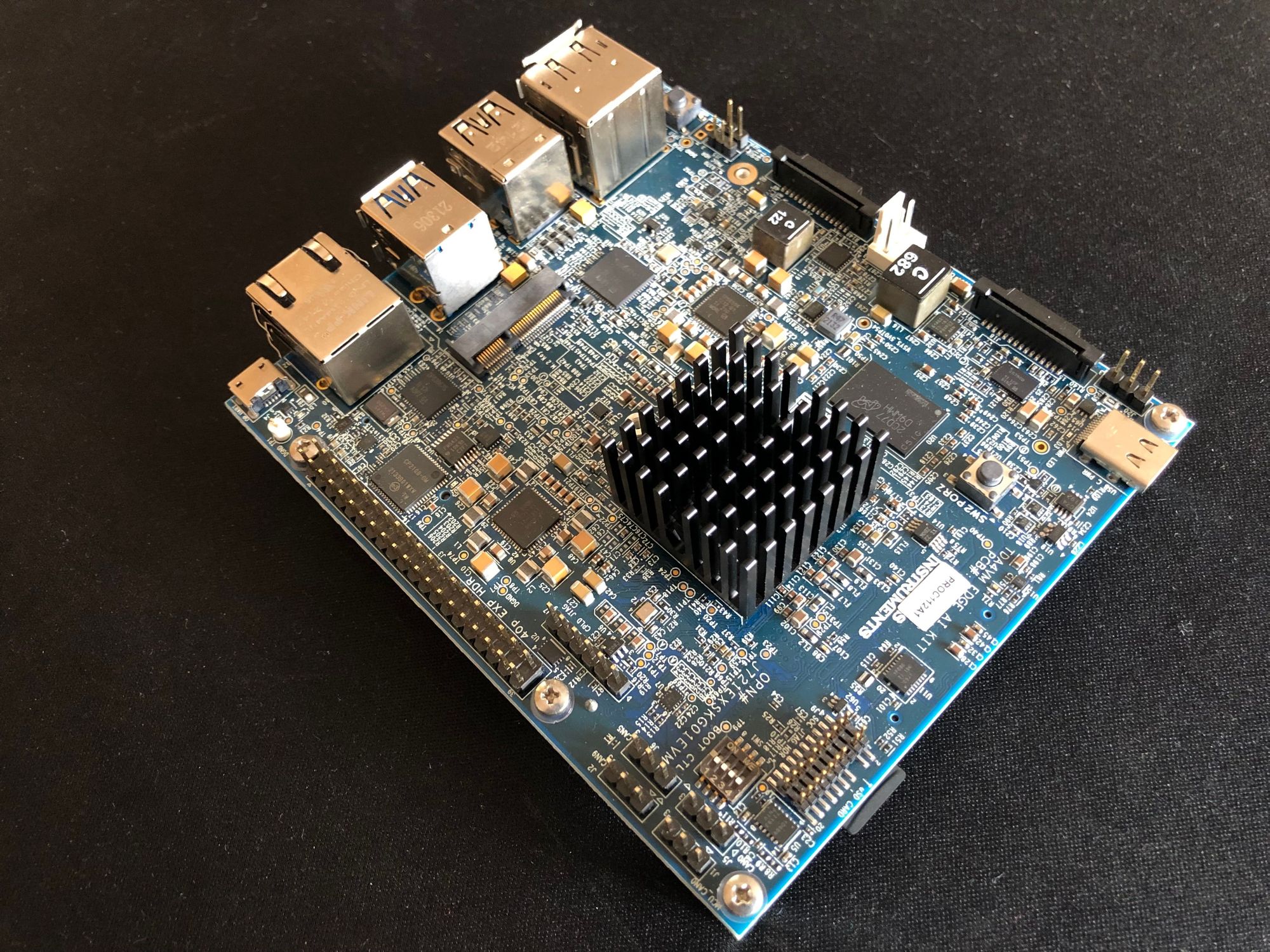

To power this creation, a powerful edge computing platform was needed. Kumar also wanted the device to be portable and inexpensive, which makes for a rare combination of features. After some searching, he found that the Texas Instruments SK-TDA4VM Starter Kit checked all of the boxes for his ideal device design. The TDA4VM processor on this board enables eight trillion operations per second of deep learning performance and hardware-accelerated edge AI processing. That would make short work of an image classification pipeline optimized by Edge Impulse, so the only other thing needed to turn this computer vision powerhouse into a finished device was a USB webcam.

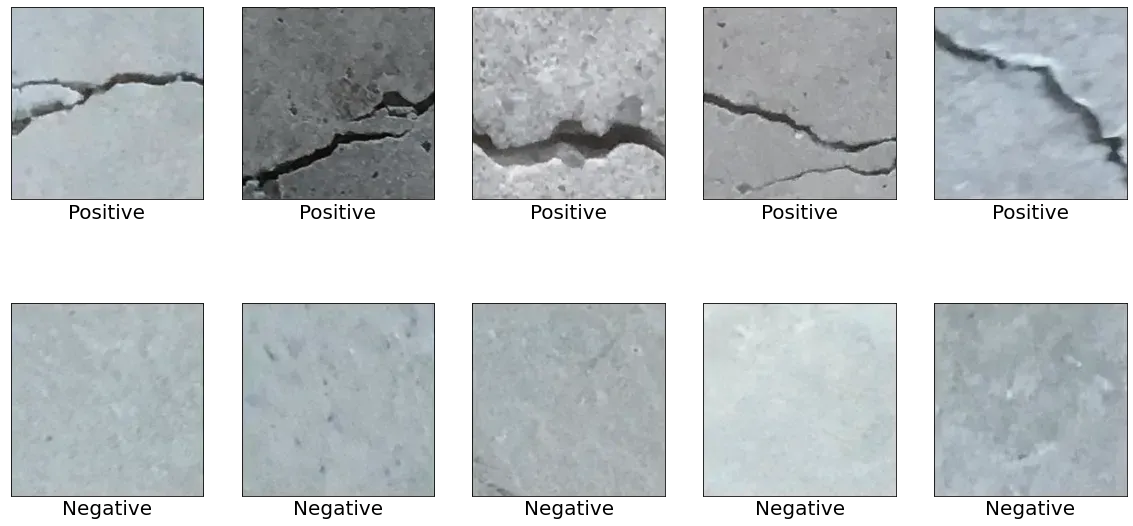

With the hardware platform all squared away, it was almost time to start building the intelligence into the system. But first, sample data was needed to train the classification algorithm. A dataset was located that contains 40,000 images of concrete, half with cracks, and half without. Another 25,000 images of scenes from the natural world were collected to give the system an understanding of when it is not looking at concrete at all. The entire dataset was then uploaded to Edge Impulse Studio using the Edge Impulse CLI. With a few commands entered in the terminal, the images were automatically assigned labels and split into training and testing sets.
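For readers who want a feel for what labeling and splitting involves, here is a minimal sketch in plain Python. It is not how the Edge Impulse CLI works internally. The folder names (`crack`, `no_crack`, `unknown`), the 80/20 ratio, and the `label_and_split` helper are all assumptions chosen for illustration.

```python
import random
from pathlib import PurePosixPath


def label_and_split(paths, test_fraction=0.2, seed=42):
    """Label each image by its parent folder, then split each label separately."""
    by_label = {}
    for path in paths:
        label = PurePosixPath(path).parent.name
        by_label.setdefault(label, []).append(path)

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, test = [], []
    for label, items in sorted(by_label.items()):
        items = sorted(items)
        rng.shuffle(items)
        cut = int(len(items) * test_fraction)
        test += [(p, label) for p in items[:cut]]
        train += [(p, label) for p in items[cut:]]
    return train, test


# Hypothetical layout: one folder per class, ten images each.
paths = [f"dataset/{label}/{i:05d}.jpg"
         for label in ("crack", "no_crack", "unknown")
         for i in range(10)]
train, test = label_and_split(paths)
```

Splitting within each label, rather than across the whole pool, keeps the class balance the same in both sets, which matters when one class (here, the non-concrete scenes) differs in size from the others.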

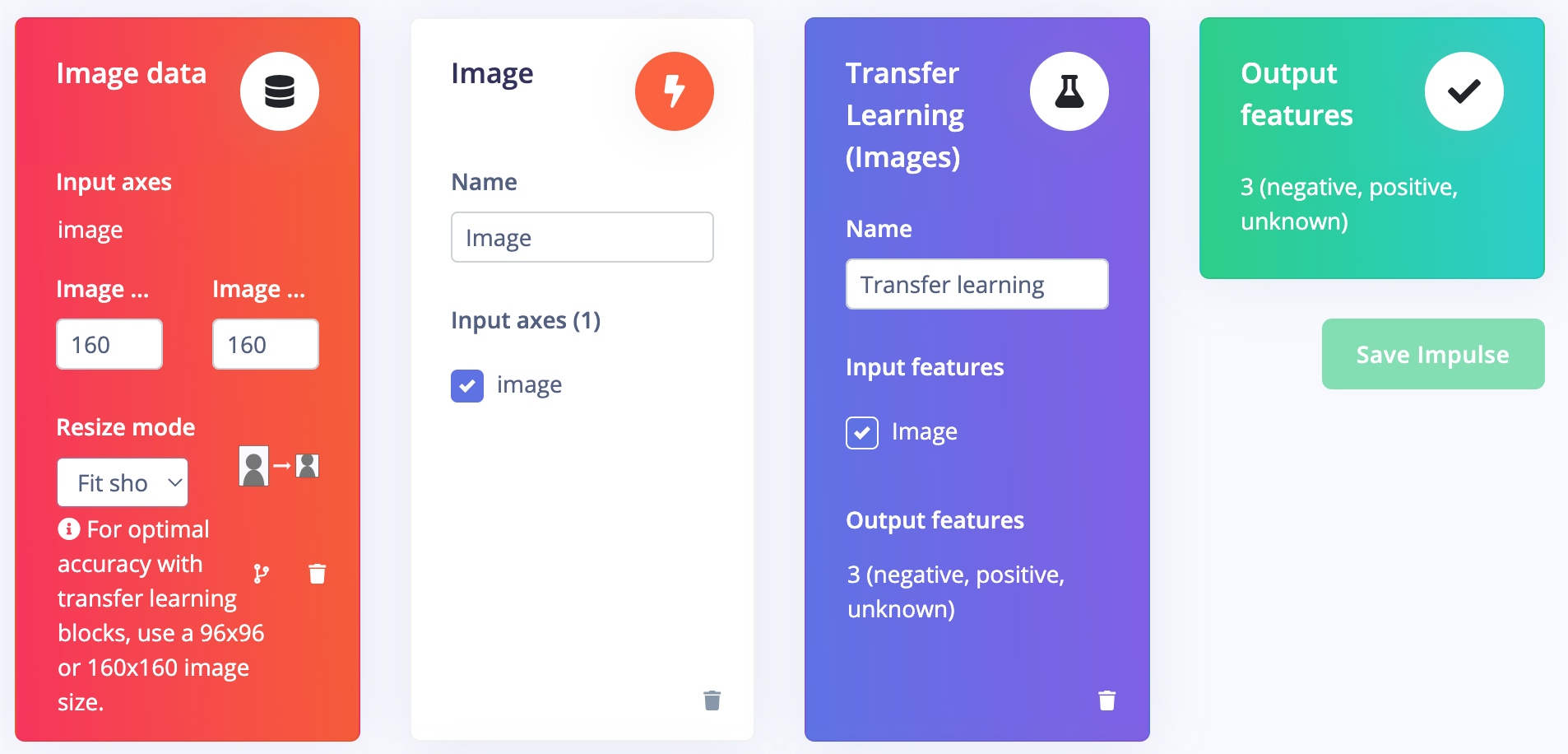

Next, Kumar fired up his web browser and loaded Edge Impulse Studio to design the impulse. The impulse specifies how data is processed, all the way from raw sensor inputs to predictions. Preprocessing blocks were added first; these served to resize the images to meet the model’s input requirements, reduce the computational workload, and extract the most important features. Those features were then forwarded into a pre-trained MobileNetV2 neural network. By retraining this model, the knowledge previously encoded into it can be leveraged to increase classification accuracy. Using expert mode, additional GAP layers were added to the model for localization of detected objects. This neural network was configured to determine if concrete is detected, with or without a crack, or if some other non-concrete object is in view.

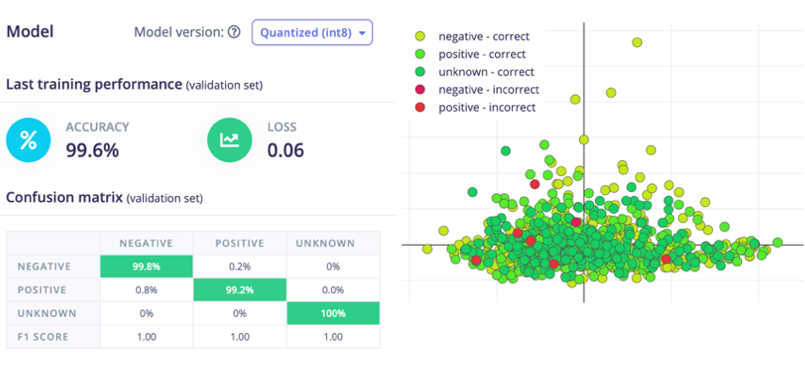

At this point, everything was in place for the surface crack detector, but how well did it work? Kumar used the Feature Explorer tool as a first step to make sure the data appeared to cluster well within groups. The results were encouraging, so the model training process was initiated. After training finished, metrics were provided to help in further assessing the model’s performance. The average classification accuracy had already reached 99.6% on the first try. This did not leave much room for improvement, so it was time to wrap the project up.

To eliminate the need for wireless connectivity and avoid the privacy concerns that come with sending data to the cloud, the pipeline was deployed to the SK-TDA4VM Starter Kit hardware. A pre-compiled TensorFlow Lite model was downloaded from the Edge Impulse Studio Dashboard, then transferred to the development board, where it was executed with Texas Instruments’ Processor SDK Linux for Edge AI toolkit.

Kumar demonstrated the device in operation by examining a concrete beam with some surface cracks present. The cracks were detected with a high degree of confidence, and the heatmap made it clear exactly where they were located. It is not difficult to see how this system could be integrated into an automated inspection routine that could save a lot of time and money, all while helping to ensure that aging infrastructure is safe for use.
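As one idea for how an automated inspection routine might consume the heatmap, the sketch below thresholds it to get a rough bounding box around the hottest region. This step is not described in the project itself. The `hot_region` helper, the 50% threshold, and the sample values are assumptions for illustration only.

```python
def hot_region(heatmap, threshold_ratio=0.5):
    """Return (x_min, y_min, x_max, y_max) covering cells at or above
    threshold_ratio of the peak value, or None if nothing is active."""
    peak = max(max(row) for row in heatmap)
    if peak <= 0:
        return None
    cutoff = peak * threshold_ratio
    hot = [(x, y)
           for y, row in enumerate(heatmap)
           for x, value in enumerate(row)
           if value >= cutoff]
    xs = [x for x, _ in hot]
    ys = [y for _, y in hot]
    return min(xs), min(ys), max(xs), max(ys)


# Toy 4x4 heatmap with a hot streak near the center.
heatmap = [
    [0.0, 0.1, 0.0, 0.0],
    [0.1, 0.9, 0.8, 0.0],
    [0.0, 0.2, 1.0, 0.1],
    [0.0, 0.0, 0.1, 0.0],
]
box = hot_region(heatmap)  # coordinates are in heatmap cells, not image pixels
```

Because the heatmap is much coarser than the camera frame, the box would need to be scaled up to image coordinates before being logged or drawn over the original picture.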

If you are interested in improving your skills in computer vision or machine learning, we would encourage you to take a look at the project documentation and the public Edge Impulse Studio project. Recreating your own copy of this project would be a great way to gain some valuable experience.

Want to see Edge Impulse in action? Schedule a demo today.