For humans, sight is one of our most valued senses [1]. But despite the importance of vision, virtually all embedded devices are still blind, and have to rely on other sensors to recognize events that could easily be perceived visually. To change that, today we’re adding computer vision support to Edge Impulse. This allows any embedded developer to collect image data from the field, quickly build classifiers to interpret the world, and deploy models back to low-power, inexpensive devices in production.

At Edge Impulse we enable developers to build and run their machine learning models on really small devices, and with this release we’re bringing computer vision to any device with at least a Cortex-M7 or equivalent microcontroller. This means that a whole new class of devices can now accurately predict what they’re seeing, opening up opportunities from predictive maintenance (‘does my machine look abnormal?’) and industrial automation (‘are labels placed correctly on these bottles?’) to wildlife monitoring (‘have we seen any potential poachers?’).

Can’t wait to get started? Then head over to our tutorial on Adding sight to your sensors. You can even get started with just your mobile phone.

Still here? Great! Here are some of the new features we have added to Edge Impulse to make this all happen:

1. Full support for OpenMV

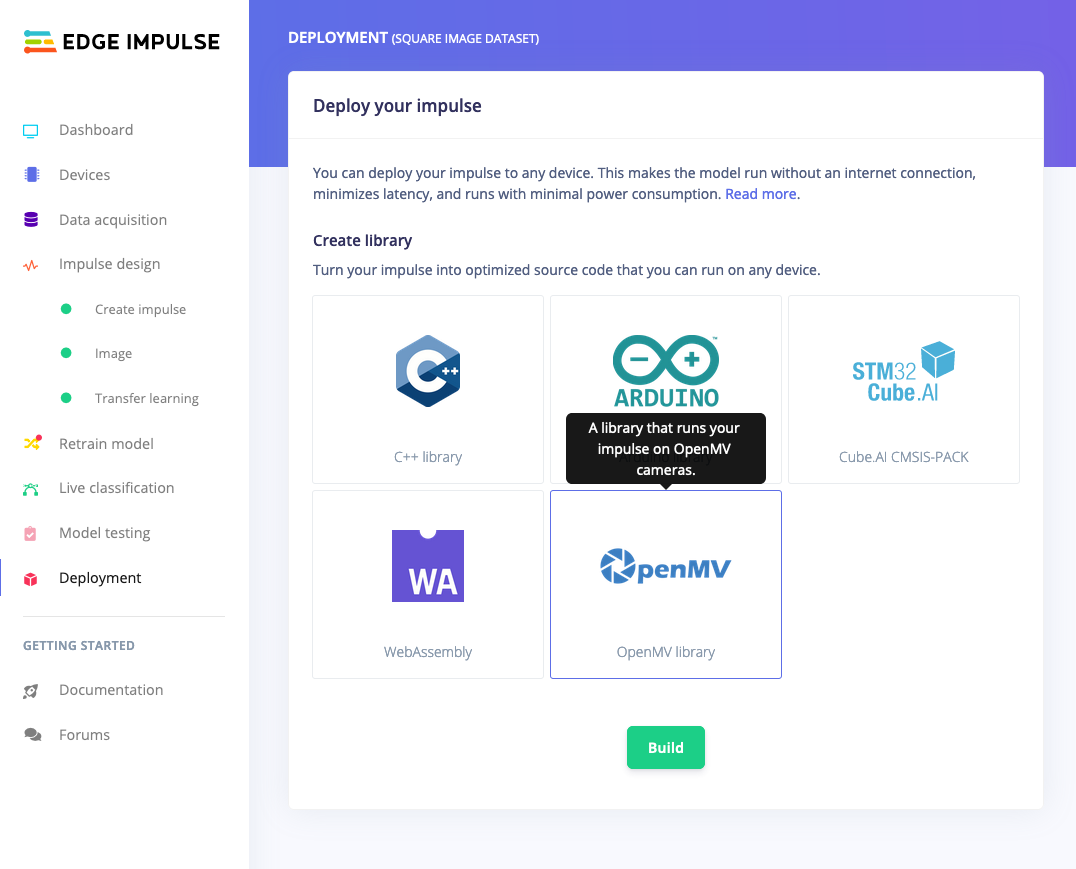

For this release, we have partnered with OpenMV, the leading platform for low-power computer vision. You can now capture data directly from the OpenMV IDE and import it into Edge Impulse, making it very easy to build a high-quality dataset. And you can now deploy your trained impulse directly from Edge Impulse to OpenMV boards like the OpenMV Cam H7 Plus for real-time inferencing.

2. Transfer learning

It’s very hard to build a good working computer vision model from scratch, as you need a wide variety of input data to make the model generalize well, and training such models can take days on a GPU. To make this easier and faster we have added support for transfer learning to the studio. This lets you piggyback on a well-trained model, only retraining the final layers of a neural network, leading to much more reliable models that train in a fraction of the time and work with substantially smaller datasets. We’ve added a number of pretrained, optimized base models that you can select based on your size requirements (models can be as small as 250K!).

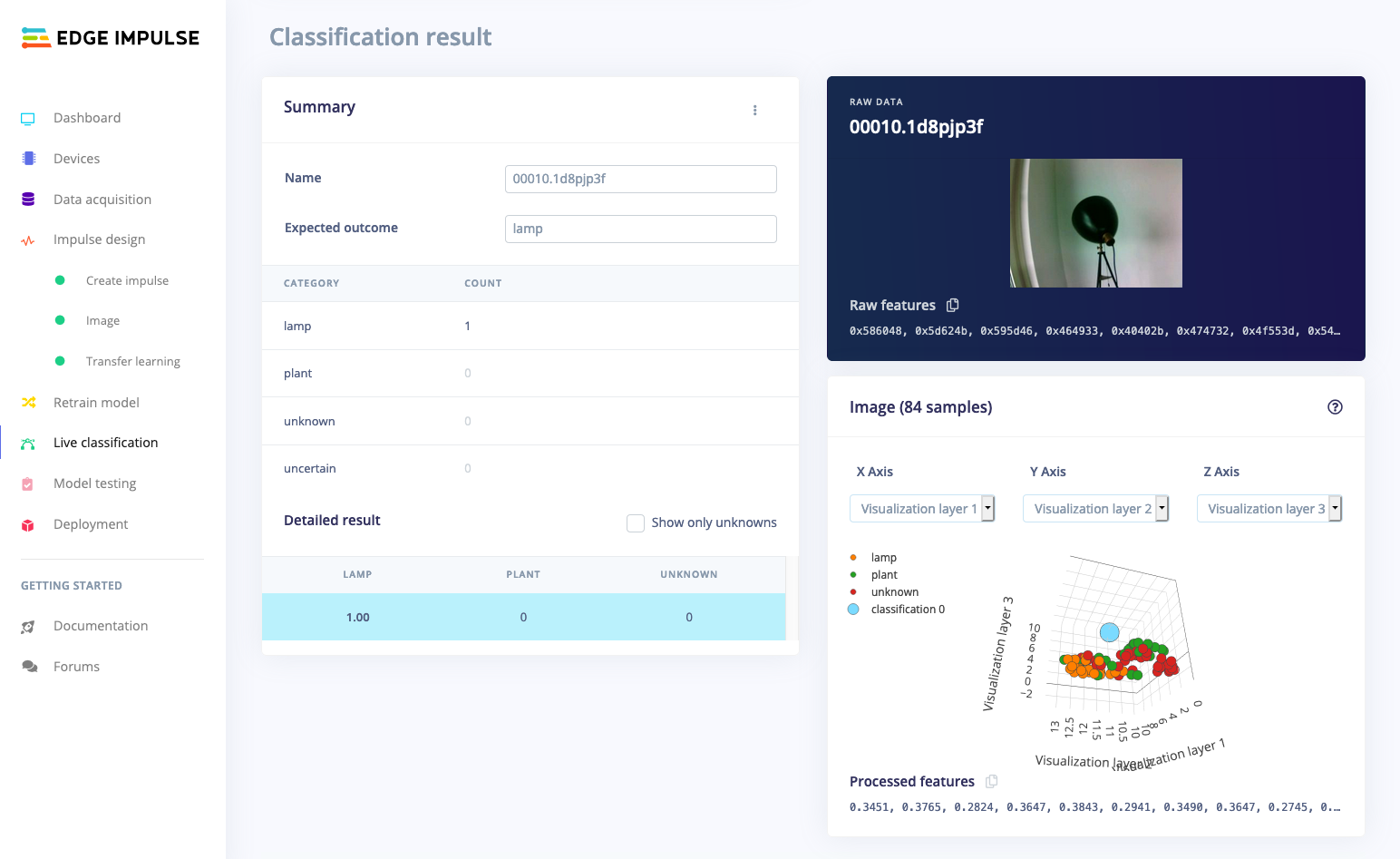
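To make the idea of retraining only the final layers concrete, here is a toy sketch in plain Python. It is not how the studio implements transfer learning. The `frozen_base` function is a hypothetical stand-in for a pretrained base model, `make_image` generates made-up 16-pixel grayscale "images", and the small logistic-regression head is the only part that gets trained.

```python
import math

def frozen_base(pixels):
    """Hypothetical stand-in for a pretrained base model.
    Its parameters are fixed and never updated during training."""
    mean = sum(pixels) / len(pixels)
    contrast = max(pixels) - min(pixels)
    return [mean, contrast]

def make_image(base):
    """Made-up 16-pixel grayscale 'image' with values in [0, 1]."""
    return [base + 0.1 * (i % 3) for i in range(16)]

def train_head(features, labels, epochs=500, lr=0.5):
    """Train only the final layer (logistic regression) on frozen features."""
    weights = [0.0] * len(features[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            z = sum(w * xi for w, xi in zip(weights, x)) + bias
            pred = 1.0 / (1.0 + math.exp(-z))
            err = pred - y  # gradient of the log loss with respect to z
            weights = [w - lr * err * xi for w, xi in zip(weights, x)]
            bias -= lr * err
    return weights, bias

def classify(pixels, weights, bias):
    """Run the frozen base, then the trained head. Returns 0 (dark) or 1 (bright)."""
    x = frozen_base(pixels)
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if z > 0 else 0

# A deliberately tiny dataset: four dark and four bright images.
dark = [make_image(b) for b in (0.0, 0.05, 0.1, 0.15)]
bright = [make_image(b) for b in (0.65, 0.7, 0.75, 0.8)]
images = dark + bright
labels = [0] * len(dark) + [1] * len(bright)

# The base runs once per image; only the head sees any gradient updates.
features = [frozen_base(img) for img in images]
weights, bias = train_head(features, labels)

print(classify(make_image(0.02), weights, bias))  # unseen dark image
print(classify(make_image(0.78), weights, bias))  # unseen bright image
```

Because the base already turns raw pixels into useful features, the head has only three parameters to learn, which is why a handful of examples and a fraction of a second of training are enough in this toy setting. Real base models are far larger, but the division of labor is the same.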

3. Full support for images throughout the studio

All of our tooling has been updated to add support for vision. This means you can view and label all your data in data acquisition, create pre-processing blocks to augment and transform data, visualize your image dataset, and classify and validate models on training data straight from the UI. We also have you covered if you already have a dataset: the CLI has been updated to allow uploading JPG images, and you can now even upload images straight from the UI (click the ‘Upload’ icon on the data acquisition page). And if you want to get started right away, you can now use your mobile phone to quickly build a dataset.

Future plans

This marks a very important milestone, but we’re just getting started! Over the coming months, we’ll be adding more transfer learning base models, so we can run computer vision on even lower-powered devices. We’ll also be adding support for more devices through our partnerships with Arduino (we’ll be supporting the new Portenta H7 development board), STMicroelectronics, and Eta Compute. And we’ll be looking at adding more computer vision applications: today you can build image classifiers in Edge Impulse, but we’ll also be looking at adding anomaly detection, object detection, and object tracking.

In the meantime, we can’t wait to see what you’ll build. If you have created something amazing, or have any questions, please let us know on the forums.

Haven’t built your first computer vision model yet? Head over to our tutorial on Adding sight to your sensors, where you can build models using an OpenMV camera or your mobile phone. Or if you’d rather feel vibrations or recognize bird sounds, look at our tutorials on continuous motion recognition or recognizing sounds from audio.

Jan Jongboom is the CTO and co-founder of Edge Impulse.