One of the best things about machine learning has got to be the way it can simplify mundane tasks. The team at CodersCafe has applied machine learning to one such task: the checkout process after a shopping trip. Using computer vision and a neural network object detection model, their device, called AutoBill, identifies the items placed on a counter, automatically generates a bill, and accepts a mobile payment. With AutoBill, there is no need to scan each item, or to try to look up the item code for those lychees while the next person in the self-checkout line taps their foot and checks their watch.

AutoBill may sound like a very advanced build on the surface, but by choosing the right software tools for the job and common off-the-shelf hardware, the developers built a device that nearly any technically inclined person could reproduce in a weekend. CodersCafe’s toolkit consisted of a Raspberry Pi 3, a camera module, a weight sensor, and Edge Impulse.

The first step was to build a simple rectangular plywood cabinet with open top and bottom shelves. A monitor on top displays the list of items being purchased. On the bottom shelf, an acrylic tray is mounted on top of the weight sensor; this tray is where the items to be purchased are placed. A five-megapixel camera looks down on the tray to capture images of the items, and the Raspberry Pi is housed in a box attached to the side of the cabinet.
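The article doesn't describe how the weight sensor is read or calibrated. Load cells are commonly read through an amplifier and analog-to-digital converter that report raw integer counts, which then have to be converted to grams. The minimal sketch below assumes those raw counts are already available; `calibrate`, `to_grams`, and the sample readings are hypothetical. It derives a tare offset from an empty tray and a scale factor from a reference weight, and takes the median of each burst of readings so that a single spike doesn't skew the result.

```python
from statistics import median


def calibrate(empty_samples, loaded_samples, known_grams):
    """Return (offset, counts_per_gram) from raw load-cell readings.

    empty_samples: raw counts with nothing on the tray (the tare offset).
    loaded_samples: raw counts with a reference weight of known_grams on the tray.
    """
    offset = median(empty_samples)
    counts_per_gram = (median(loaded_samples) - offset) / known_grams
    if counts_per_gram == 0:
        raise ValueError("reference weight produced no change in the reading")
    return offset, counts_per_gram


def to_grams(samples, offset, counts_per_gram):
    """Convert a burst of raw counts to grams; the median rejects isolated spikes."""
    return (median(samples) - offset) / counts_per_gram


# Hypothetical raw readings, for illustration only.
offset, scale = calibrate([8400, 8410, 8390], [108400, 108380, 108420], known_grams=500)
print(round(to_grams([58400, 58395, 90000, 58405], offset, scale), 1))  # → 250.0
```

Note how the outlier reading of 90000 barely affects the result; a plain average of the same burst would have overestimated the weight by roughly 40 grams.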

With the physical device complete, it was time to get started on the software. To simplify building, training, and deploying a machine learning model, CodersCafe used Edge Impulse. As usual, the process began with collecting a dataset. They captured images of each item they wanted AutoBill to recognize; for the prototype, that meant three food items. The team notes that it is important to photograph the items from various angles and under different conditions to build a model that generalizes well. The collected images were uploaded and labeled under the “Data acquisition” tab of their Edge Impulse project.
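Edge Impulse manages the split between training and test data inside the project, so the following is only an illustration of the idea, not what CodersCafe ran: hold out a fixed fraction of each item's images so that every label is represented in the test set. The `stratified_split` helper, the file names, the three labels, and the 20% test fraction are all hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(samples, test_fraction=0.2, seed=42):
    """Split (path, label) pairs so each label keeps the same test fraction.

    Returns (train, test) lists of (path, label) pairs.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append((path, label))

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, test = [], []
    for label, items in sorted(by_label.items()):
        items = items[:]  # copy before shuffling in place
        rng.shuffle(items)
        n_test = max(1, round(len(items) * test_fraction))
        test.extend(items[:n_test])
        train.extend(items[n_test:])
    return train, test


# Hypothetical dataset: three items with 40 photos each.
samples = [(f"{label}_{i:03d}.jpg", label)
           for label in ("apple", "banana", "lychee")
           for i in range(40)]
train, test = stratified_split(samples)
print(len(train), len(test))  # → 96 24
```

Splitting per label, rather than shuffling the whole pool at once, guarantees that with only about 40 images per item no label ends up missing from, or overrepresented in, the test set by chance.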

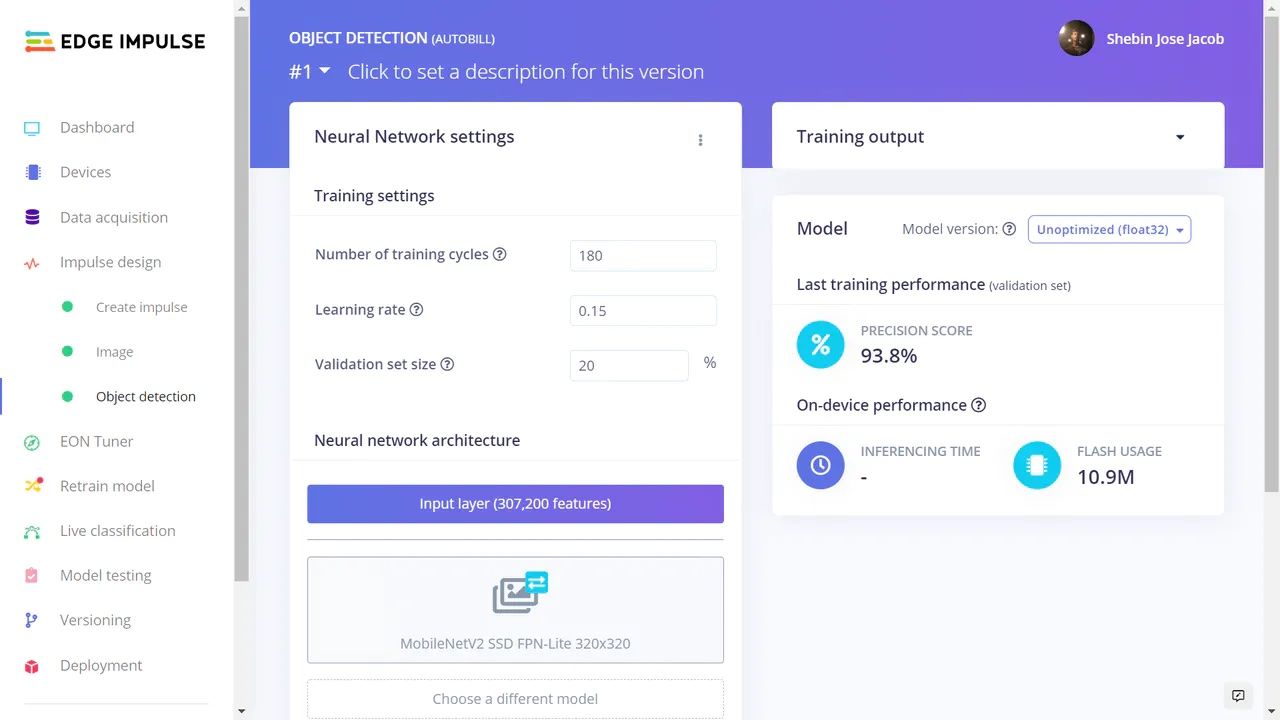

With the training data ready, the next task was to design a processing pipeline, which Edge Impulse calls an impulse. This pipeline converts the images to grayscale and uses signal processing to extract features. These features are fed into an object detection block, which uses transfer learning to retrain a MobileNetV2 model to recognize the food items of interest. With the pipeline built in a few clicks, the model was ready to be trained.
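The article doesn't spell out how the grayscale conversion works. A minimal sketch of what this step does to each pixel, assuming the widely used ITU-R BT.601 luma weights and pixel values scaled to the 0 to 1 range (Edge Impulse's actual implementation may use different weights or scaling), looks like this; the function name and the sample image are hypothetical.

```python
def rgb_to_grayscale_features(pixels):
    """Flatten an RGB image into grayscale features scaled to [0, 1].

    pixels: list of rows, each a list of (r, g, b) tuples with 0-255 values.
    Uses ITU-R BT.601 luma weights (an assumption; other weightings exist).
    """
    features = []
    for row in pixels:
        for r, g, b in row:
            luma = 0.299 * r + 0.587 * g + 0.114 * b
            features.append(luma / 255.0)
    return features


# A hypothetical 2x2 image: red, green, blue and white pixels.
image = [[(255, 0, 0), (0, 255, 0)],
         [(0, 0, 255), (255, 255, 255)]]
print([round(f, 3) for f in rgb_to_grayscale_features(image)])
# → [0.299, 0.587, 0.114, 1.0]
```

Collapsing three color channels into one cuts the input to a third of its RGB size, which reduces the work done per frame on a Raspberry Pi, at the cost of color information that could help distinguish similarly shaped items.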

When training had finished, the team went to the “Model testing” tab to evaluate how well the model performed. On a held-out test set of images that had not been used for training, the model achieved 93.75% precision. That is an impressive result considering that only about 40 images were captured for each item.
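The article reports precision without detailing how the detections were scored. For object detection, a common scheme is to count a predicted box as a true positive (TP) when it overlaps a not-yet-matched ground-truth box of the same label with an intersection over union (IoU) of at least 0.5, and as a false positive (FP) otherwise; precision is then TP / (TP + FP), summed over the whole test set. The sketch below implements that scheme. The 0.5 threshold, the `(x, y, width, height)` box format, the helper names, and the sample boxes are assumptions, and Edge Impulse's own scoring may differ.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x, y, width, height)."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    inter_w = max(0, min(ax2, bx2) - max(a[0], b[0]))
    inter_h = max(0, min(ay2, by2) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def count_matches(detections, ground_truth, iou_threshold=0.5):
    """Return (tp, fp) for one image.

    detections: list of (label, confidence, box); ground_truth: list of (label, box).
    Detections are processed highest confidence first, and each ground-truth
    box can be matched at most once, so duplicate detections count as FPs.
    """
    unmatched = list(ground_truth)
    tp = fp = 0
    for label, _conf, box in sorted(detections, key=lambda d: d[1], reverse=True):
        best, best_iou = None, iou_threshold
        for gt in unmatched:
            if gt[0] == label:
                overlap = iou(box, gt[1])
                if overlap >= best_iou:
                    best, best_iou = gt, overlap
        if best is not None:
            unmatched.remove(best)
            tp += 1
        else:
            fp += 1
    return tp, fp


def precision(tp, fp):
    return tp / (tp + fp) if tp + fp else 0.0


# Hypothetical image: two items, three detections (one is a duplicate apple).
gt = [("apple", (10, 10, 50, 50)), ("lychee", (100, 100, 30, 30))]
dets = [("apple", 0.95, (12, 12, 50, 50)),
        ("apple", 0.60, (14, 10, 48, 50)),
        ("lychee", 0.88, (101, 99, 30, 30))]
tp, fp = count_matches(dets, gt)
print(tp, fp, round(precision(tp, fp), 3))  # → 2 1 0.667
```

For scale, a precision of 93.75% means 15 of every 16 reported detections were correct (15/16 = 0.9375); the article does not give the underlying counts.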

To minimize latency, CodersCafe wanted their model to run locally on the Raspberry Pi. Edge Impulse makes this possible by packaging up the complete pipeline, including the preprocessing steps, the trained model, and the classification code, into a C++ library. This can all be deployed to a target device, such as the Raspberry Pi, by typing a few commands in the terminal.

A backend API and a front-end interface work together to show the items detected by the model on the monitor, where the shopper can review them. At the end of the transaction, a QR code appears on screen; the shopper scans it with their phone to pay for the items and complete the purchase. Looking ahead, the team wants to add digital receipts, transaction history, and multi-store analytics.
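The article doesn't show how the backend turns detections into a bill. As a minimal sketch, assume each detection is a dict with a `label` and a `value` (confidence) key; the `build_bill` helper, the price list, the item names, and the 0.6 confidence cutoff are hypothetical. Generating the payment QR code is left out because the article does not name the payment provider.

```python
from collections import Counter
from decimal import Decimal

# Hypothetical price list; not from the original project.
PRICES = {"apple": Decimal("0.50"), "banana": Decimal("0.25"), "lychee": Decimal("3.20")}


def build_bill(bounding_boxes, prices, min_confidence=0.6):
    """Turn detector output into bill lines and a total.

    bounding_boxes: list of dicts with at least "label" and "value" (confidence).
    Detections below min_confidence are ignored; unknown labels raise KeyError.
    Returns (lines, total), where each line is (label, qty, unit_price, amount).
    """
    counts = Counter(b["label"] for b in bounding_boxes if b["value"] >= min_confidence)
    lines = []
    for label in sorted(counts):
        qty = counts[label]
        unit = prices[label]
        lines.append((label, qty, unit, unit * qty))
    total = sum((line[3] for line in lines), Decimal("0"))
    return lines, total


detections = [
    {"label": "apple", "value": 0.93},
    {"label": "apple", "value": 0.88},
    {"label": "lychee", "value": 0.91},
    {"label": "banana", "value": 0.41},  # low confidence: dropped from the bill
]
lines, total = build_bill(detections, PRICES)
for label, qty, unit, amount in lines:
    print(f"{label:<8} {qty} x {unit} = {amount}")
print("TOTAL", total)  # → TOTAL 4.20
```

Prices are held as `Decimal` rather than `float` so that totals such as 0.10 + 0.20 come out exact, which matters when the same amount is shown on screen and charged through the payment link.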
