Voice recognition technology has seen a surge in popularity in recent years, with more and more people turning to it as a way to control their devices in the home and office. The technology, which allows users to issue commands and make requests simply by speaking, has a wide range of applications and is increasingly being integrated into a variety of different products.

One of the main drivers behind the rise in voice recognition has been the proliferation of smart home devices such as voice-controlled assistants, smart thermostats, and smart lighting systems. These devices let users control their homes with their voice, turning on lights, adjusting the temperature, and more.

Voice recognition is also being used in the office to increase productivity and streamline processes. For example, some companies are using the technology to transcribe meetings and create written summaries, while others are using it to create and edit documents or to send emails.

These successes have come with challenges that still hold back wider adoption, however. Because these systems must recognize natural language, and a given action can be requested in any number of ways depending on the preferences of a particular user, many devices only respond to specific phrasings that users have to learn. This makes the voice recognition system easier to build, but it also makes it less natural to work with, which can lead to frustration and reduced efficiency.

Another major challenge seen with voice recognition systems is that of preserving user privacy. Having private audio recordings from inside one’s home or office transmitted to a third party’s cloud computing environment is enough to cause many people to avoid such devices entirely. And aside from sending explicit voice requests to the cloud, there is always the concern that additional, unrelated audio recordings may unknowingly be transmitted to a remote server.

To make voice recognition-based devices work better, and to give people confidence that their private conversations are not being eavesdropped on, new system architectures are needed that address these issues. The engineering wizards at Zalmotek have shown one possible way that appliances could be controlled by voice, while enabling users to customize the commands to suit their preferences, and completely sidestepping any privacy concerns. They have prototyped their proposed solution using inexpensive hardware and the Edge Impulse machine learning development platform.

Zalmotek’s core idea was to build a keyword spotting model with Edge Impulse Studio, which made it easy to train a model customized to a particular user’s voice and manner of speech. Doing so makes the resulting voice recognition system more accurate and intuitive. Even more critically, Edge Impulse can produce machine learning models that are highly optimized for execution on tiny hardware platforms. That means the model can run entirely on-device: no audio is sent to the cloud or stored anywhere, which removes the privacy concerns that come with cloud processing.

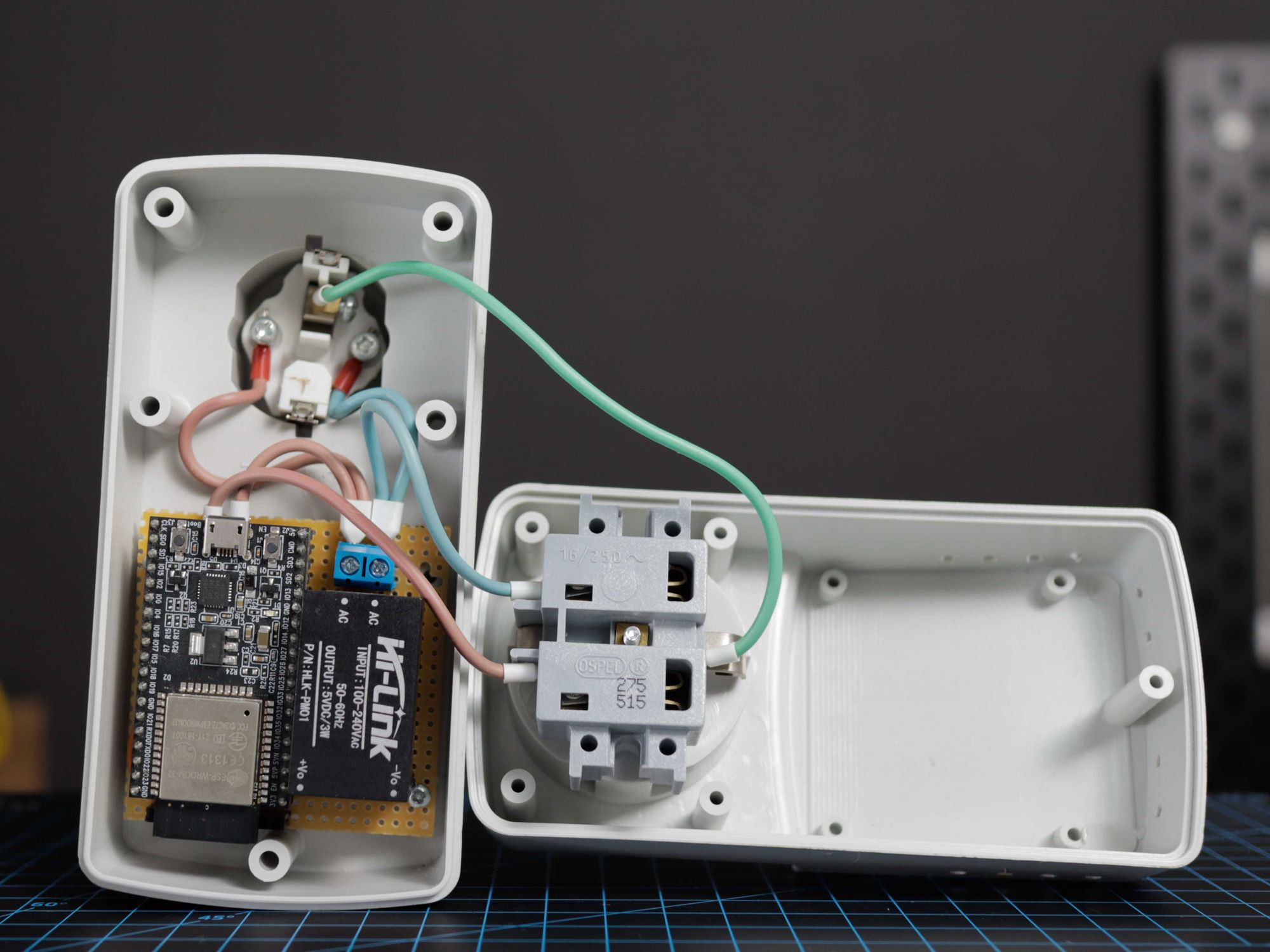

To get the project off the ground quickly, a Nordic Thingy:53 IoT prototyping platform was selected. With a dual-core wireless nRF5340 SoC and 512 KB of RAM, the Thingy:53 has plenty of power to run inferences against Edge Impulse models that have been optimized for tiny platforms. To convert existing appliances into smart appliances, Zalmotek initially focused on adding the ability to turn them on and off. An ESP32 DevKit and a relay were used for this purpose. When the Thingy:53 recognizes the right keywords, it wirelessly sends a signal to the ESP32 DevKit, which in turn triggers the relay to switch the power supply of an appliance on or off.

Since one of Zalmotek’s stated goals is to customize the voice recognition system to individual users, they got started by collecting recordings of themselves. After flashing a custom firmware image to the Nordic Thingy:53 and installing the Edge Impulse CLI, the device was linked to an Edge Impulse Studio project. Now any data collected from the on-board microphone would automatically be uploaded to Edge Impulse to use as training data for the model. Initially, the team decided to focus on recognizing the keywords "light," "kettle," and "extractor" to control the corresponding appliances. Additionally, samples of ambient background noises were collected to signify when no voice commands are being issued.

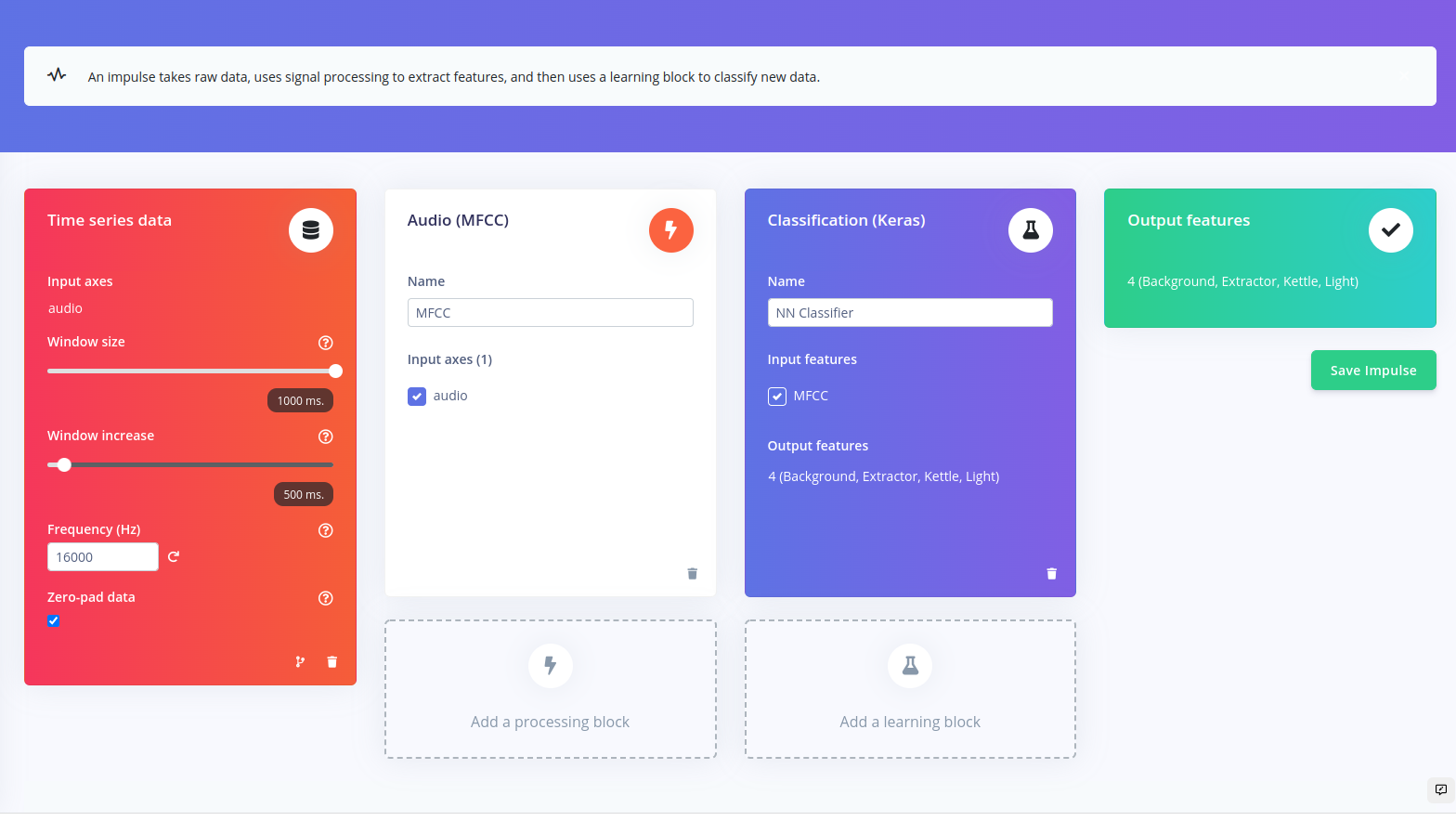

Next, a machine learning keyword spotting pipeline was built in Edge Impulse Studio. The impulse begins with a preprocessing step that slices the incoming audio samples into one-second segments, then calculates the Mel-frequency cepstral coefficients (MFCCs), a compact description of the audio spectrum that is widely used in speech recognition applications. Finally, the data is forwarded into a neural network classifier that can distinguish between the target keywords.
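
As a rough illustration of the preprocessing described above, the following Python sketch slices audio into one-second windows and computes MFCCs with NumPy alone. It is not Edge Impulse's implementation. The 16 kHz sample rate, half-second stride, 20 ms frames, 32 mel filters, and 13 coefficients are assumed values chosen for illustration, and all function names are hypothetical.

```python
import numpy as np


def slice_windows(audio, sample_rate, window_s=1.0, stride_s=0.5):
    """Split a 1-D signal into fixed-length, overlapping windows."""
    size = int(window_s * sample_rate)
    step = int(stride_s * sample_rate)
    return [audio[i:i + size] for i in range(0, len(audio) - size + 1, step)]


def hz_to_mel(hz):
    return 2595.0 * np.log10(1.0 + hz / 700.0)


def mel_to_hz(mel):
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_filterbank(n_filters, n_fft, sample_rate):
    """Triangular filters spaced evenly on the mel scale."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2), n_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sample_rate).astype(int)
    fbank = np.zeros((n_filters, n_fft // 2 + 1))
    for m in range(1, n_filters + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, center):      # rising edge of the triangle
            fbank[m - 1, k] = (k - left) / (center - left)
        for k in range(center, right):     # falling edge of the triangle
            fbank[m - 1, k] = (right - k) / (right - center)
    return fbank


def mfcc(window, sample_rate, frame_s=0.02, frame_stride_s=0.01,
         n_filters=32, n_coeffs=13, n_fft=512):
    """Return an array of shape (n_frames, n_coeffs) for one audio window."""
    window = np.asarray(window, dtype=float)
    frame_len = int(frame_s * sample_rate)
    frame_step = int(frame_stride_s * sample_rate)
    n_frames = 1 + (len(window) - frame_len) // frame_step

    # Cut the window into short overlapping frames and taper each one.
    idx = np.arange(frame_len)[None, :] + frame_step * np.arange(n_frames)[:, None]
    frames = window[idx] * np.hamming(frame_len)

    # Power spectrum -> mel filterbank energies -> log compression.
    power = np.abs(np.fft.rfft(frames, n=n_fft, axis=1)) ** 2 / n_fft
    energies = power @ mel_filterbank(n_filters, n_fft, sample_rate).T
    log_energies = np.log(energies + 1e-10)

    # DCT-II over the filter axis decorrelates the log energies.
    n = np.arange(n_filters)
    basis = np.cos(np.pi * np.arange(n_coeffs)[:, None] * (2 * n + 1) / (2 * n_filters))
    return log_energies @ basis.T
```

With these assumed settings, a one-second window sampled at 16 kHz yields a 99 x 13 feature matrix, which is the kind of compact input a small neural network classifier can process on a microcontroller.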

The neural network’s hyperparameters were adjusted slightly to suit the task, then the training process was initiated with the click of a button. Shortly thereafter, metrics were presented to help assess how well the model was performing. They showed that the model was over 99% accurate in recognizing the target keywords. This is an excellent result, but perhaps a little too good, which made Zalmotek skeptical. So, they ran a series of live tests using the Nordic nRF Edge Impulse smartphone app to confirm the findings. They found that the keyword spotter was in fact working as expected, so they moved on to deploy the model to the Thingy:53.
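
A single accuracy figure can hide a weak class, which is one reason a very high score deserves a second look. The sketch below shows one way to break accuracy down per keyword on held-out recordings. It is a generic evaluation helper, not part of Edge Impulse Studio; the `noise` label name for the background class and the function names are assumptions made for illustration.

```python
from collections import Counter

# "noise" is a hypothetical name for the ambient background class.
LABELS = ["light", "kettle", "extractor", "noise"]


def confusion_matrix(true_labels, predicted_labels, labels=LABELS):
    """Rows are true classes, columns are predicted classes."""
    counts = Counter(zip(true_labels, predicted_labels))
    return [[counts[(t, p)] for p in labels] for t in labels]


def accuracy(matrix):
    """Fraction of samples on the diagonal of the confusion matrix."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total if total else 0.0


def per_class_recall(matrix, labels=LABELS):
    """For each class, the fraction of its samples that were recognized."""
    return {
        label: (row[i] / sum(row) if sum(row) else 0.0)
        for i, (label, row) in enumerate(zip(labels, matrix))
    }
```

Feeding the true and predicted labels of recordings the model never saw during training into `confusion_matrix`, then inspecting `per_class_recall`, shows whether every keyword is recognized reliably or whether the overall score is carried by the easier classes.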

Having already linked the device to the Edge Impulse project, deployment was as simple as tapping a button in the aforementioned smartphone app. To wrap things up, the team 3D printed a case for the device, then wrote some custom software for the ESP32 DevKit that interprets the Bluetooth messages transmitted by the Thingy:53 when a known keyword is spotted. The ESP32 then controls the attached device as requested.
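
The receiving side's logic can be sketched in a few lines. The Python below only models the decision logic; the actual ESP32 firmware and the format of the Bluetooth messages are not described here, so the `label:confidence` message format, the 0.8 threshold, and the `ApplianceController` class are all assumptions made for illustration.

```python
from dataclasses import dataclass, field

KEYWORDS = ("light", "kettle", "extractor")


@dataclass
class ApplianceController:
    """Tracks the on/off relay state for each keyword-controlled appliance."""
    threshold: float = 0.8  # assumed minimum confidence to act on
    states: dict = field(default_factory=lambda: {k: False for k in KEYWORDS})

    def handle_message(self, message: str):
        """Toggle the relay for a recognized keyword.

        Expects a hypothetical "label:confidence" string and returns the
        keyword that was toggled, or None if the message was ignored.
        """
        label, sep, score = message.strip().partition(":")
        if not sep or label not in self.states:
            return None  # malformed message or unknown keyword
        try:
            confidence = float(score)
        except ValueError:
            return None  # confidence was not a number
        if confidence < self.threshold:
            return None  # too uncertain to switch an appliance
        self.states[label] = not self.states[label]
        return label
```

On real hardware, the updated entry in `states` would drive the GPIO pin connected to the relay. Ignoring low-confidence and unknown labels keeps background noise from switching an appliance by accident.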

The present design lends itself well to controlling multiple appliances with the same voice control system. In addition to controlling more appliances, Zalmotek is also looking into alternative wireless protocols to make interoperability between different devices and platforms simpler. In the meantime, be sure to check out the excellent project documentation.
