Today we’re excited to announce official support for the Nicla Vision, Arduino Pro’s industrial-grade, high-performance board for analyzing and processing images on the edge.

The board is equipped with a 2MP color camera, a smart six-axis motion sensor, an integrated microphone, and a proximity sensor, making it suitable for asset tracking, object recognition, and predictive maintenance. Its STM32H747AII6 dual-core Arm Cortex-M7/M4 processor delivers fast inference for image recognition and object detection applications. Measuring just 22.86 x 22.86 mm, the board is small enough to fit into most installations, and it draws so little power that it can run on a battery for standalone tasks.

With the official ingestion firmware from Edge Impulse, you can connect the Arduino Nicla Vision to Edge Impulse Studio, sample data from all the onboard sensors, train your machine learning model, and then download pre-compiled firmware with the trained model inside. Alternatively, downloading the model as an Arduino library lets you write your own custom program logic around the model’s inference results.
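Custom program logic can be as simple as acting only on confident predictions. The sketch below is plain, self-contained C++ and does not call the Edge Impulse library: `Classification` and `pick_label` are hypothetical stand-ins for the per-label scores the generated library produces after inference, and the threshold is an illustrative choice rather than a recommended value.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>

// Hypothetical stand-in for one entry of the classifier output:
// a label and its confidence score in [0, 1].
struct Classification {
    const char *label;
    float value;
};

// Returns the label with the highest score if that score reaches `threshold`,
// otherwise "uncertain", so the application can ignore weak results.
const char *pick_label(const Classification *results, std::size_t count,
                       float threshold) {
    const Classification *best = nullptr;
    for (std::size_t i = 0; i < count; ++i) {
        if (best == nullptr || results[i].value > best->value) {
            best = &results[i];
        }
    }
    if (best == nullptr || best->value < threshold) {
        return "uncertain";
    }
    return best->label;
}
```

In an Arduino sketch, you would fill the array from the library’s inference result and, for example, switch on an LED only when `pick_label` returns a specific label.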

How do I get started?

You can purchase the Nicla Vision directly from the Arduino Store. You only need the board to get started.

Then have a look at our guide or watch the following video tutorial. Both walk you through flashing the default firmware so you can start collecting data right away.

The Edge Impulse firmware for this development board is open source and hosted on GitHub. Once the firmware is flashed and data is collected, try out some of the newest features available in the Studio: active learning pipelines built on the data sources and data explorer features, and project collaboration.

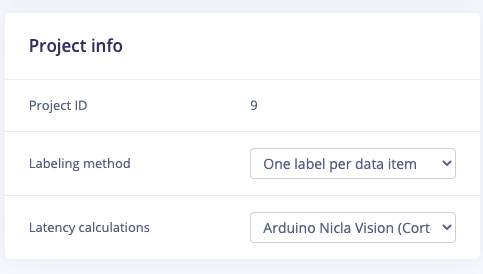

When you start your project, select Arduino Nicla Vision (Cortex-M7 480MHz) as the target in the dashboard view to get latency estimates for this board.

Thanks to its powerful processor, the Nicla Vision performed well in our team’s benchmarks across the whole range of models available for training in Edge Impulse Studio. The table below lists the inference latency for a few reference models.

| Model | Inference latency |
| --- | --- |
| Keyword spotting (Conv1D) | 3.84 ms |
| Continuous gesture (Fully connected) | 0.08 ms |
| MobileNet v1 alpha 0.1 RGB 96x96 | 24 ms |
| MobileNet v2 alpha 0.1 RGB 96x96 | 71 ms |
| FOMO alpha 0.35 RGB 96x96 | 70 ms |
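To put these latencies in perspective, a latency of L ms caps the rate at 1000 / L inferences per second. For example, 24 ms allows at most about 41.7 inferences per second, and 70 ms about 14.3. The helper below is a back-of-the-envelope sketch that assumes inference is the only work done per frame. Camera capture and signal processing add to the real per-frame time, so actual throughput will be lower.

```cpp
#include <cassert>
#include <cmath>

// Upper bound on inferences per second for a per-inference latency given in
// milliseconds (must be > 0). Ignores capture, preprocessing, and any other
// work the firmware does between inferences.
double max_inferences_per_second(double latency_ms) {
    return 1000.0 / latency_ms;
}
```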

Which sensors are supported?

Apart from a 2MP color camera, the Nicla Vision has a built-in smart six-axis motion sensor, an integrated microphone, and a distance sensor. Additionally, you can connect analog sensors to pin A0 and read data from them. What’s more, with the Edge Impulse sensor fusion feature, you can mix and match motion, analog, and distance data to your liking!
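Sensor fusion combines readings from several sensors into a single input window for one model. Below is a minimal C++ sketch of that flattening step. It assumes all sensors have already been sampled at a common rate and that the values for one timestamp are stored together before the next timestamp (an interleaved layout). `FusedSample`, its fields, and `interleave` are hypothetical names for illustration, not part of the Edge Impulse firmware.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// One fused reading taken at a single timestamp. This field set is a
// hypothetical example; use the axes your model actually needs.
struct FusedSample {
    float acc_x, acc_y, acc_z;  // accelerometer axes from the IMU
    float distance_mm;          // time-of-flight distance
    float analog_a0;            // value read from pin A0
};

constexpr std::size_t kAxesPerSample = 5;

// Flattens a window of fused readings into one feature buffer, writing all
// axes of one timestamp before moving on to the next timestamp.
std::vector<float> interleave(const std::vector<FusedSample> &window) {
    std::vector<float> features;
    features.reserve(window.size() * kAxesPerSample);
    for (const FusedSample &s : window) {
        const std::array<float, kAxesPerSample> axes = {
            s.acc_x, s.acc_y, s.acc_z, s.distance_mm, s.analog_a0};
        features.insert(features.end(), axes.begin(), axes.end());
    }
    return features;
}
```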

The standard firmware supports the following sensors:

- VL53L1CBV0FY/1, a time-of-flight distance sensor

- GC2145, a 2MP color camera

- MP34DT05, a microphone

- LSM6DSOX, a six-axis IMU

- Any analog sensor connected to A0
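For an analog sensor on A0, the raw reading is an ADC count rather than a physical value. The helper below is a hedged sketch of the usual linear conversion to volts. `adc_to_volts` is a hypothetical name, and both the ADC resolution and the reference voltage are passed in as parameters because they are assumptions to confirm for your own setup. On most Arduino boards, `analogRead()` returns 10-bit values unless `analogReadResolution()` changes that.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Converts a raw ADC count to volts with a linear mapping.
// `resolution_bits` (1..16 in practice) must match the ADC resolution in use,
// and `vref` is the assumed ADC reference voltage.
double adc_to_volts(std::uint32_t raw, unsigned resolution_bits, double vref) {
    const double max_count =
        static_cast<double>((1u << resolution_bits) - 1u);
    return (static_cast<double>(raw) / max_count) * vref;
}
```

For example, with an assumed 10-bit ADC and a 3.3 V reference, a reading of 512 maps to roughly 1.65 V.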

Once you’ve collected enough data samples, you can follow one of the Edge Impulse tutorials:

- Adding sight to your sensors

- Recognizing sounds from audio

- Responding to your voice

- Building a sensor fusion model

- Building a continuous motion recognition system

- Object detection with FOMO

Next, train your model using the Edge Impulse EON Tuner (see Introducing the EON Tuner: Edge Impulse’s New AutoML Tool for Embedded Machine Learning), by dragging and dropping layers in your neural network architecture, or by writing your own Keras code directly.

Finally, once you are happy with your results, you can deploy your model back to your device. To do this, go to the Deployment tab of your Edge Impulse project and build and download a ready-to-go binary that includes your machine learning model for the Nicla Vision. You can also download an Arduino library, which contains several examples to help you write your own custom firmware.