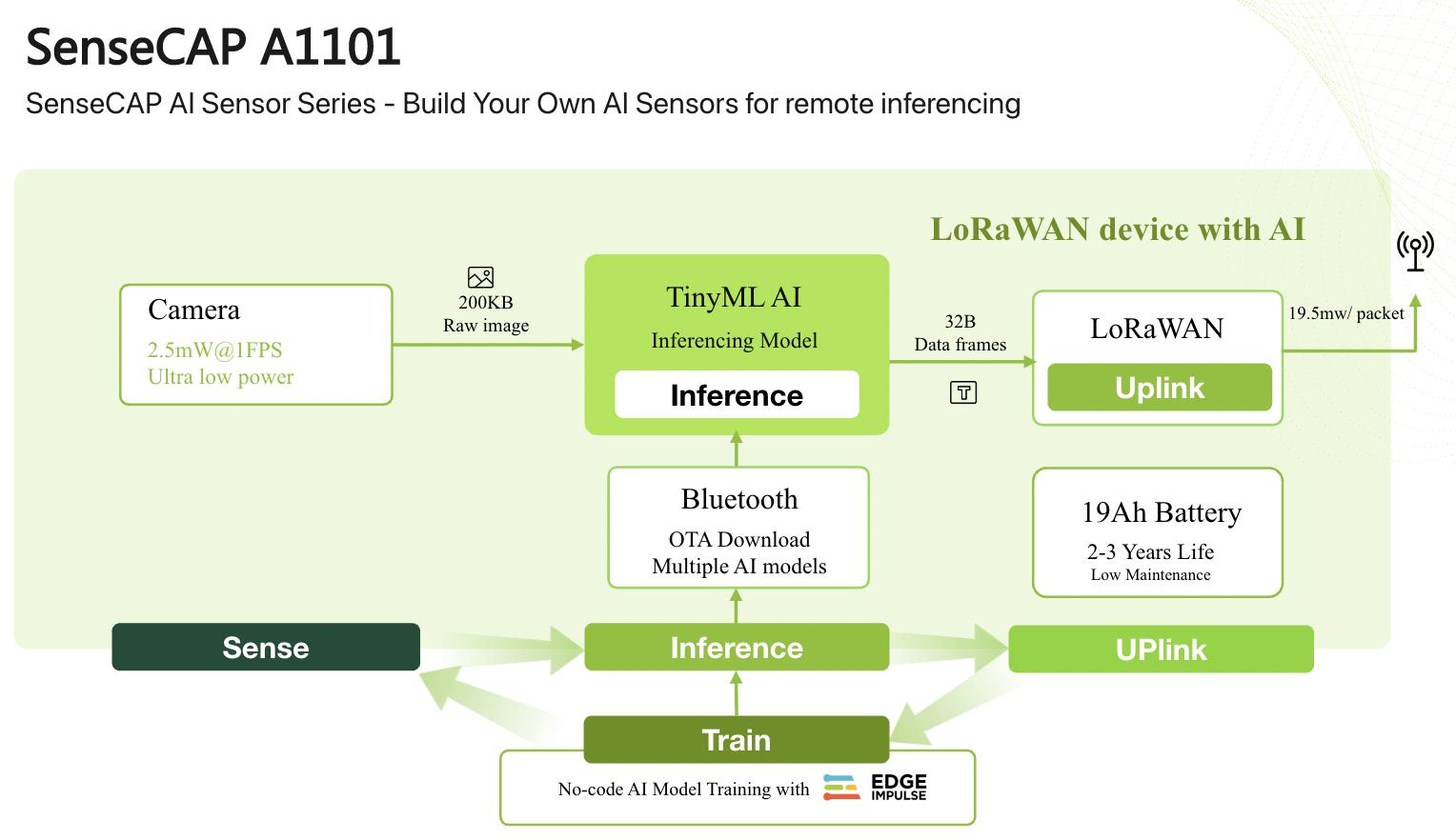

Edge Impulse is partnering with Seeed Studio to launch official support for the SenseCAP A1101 LoRaWAN Vision AI Sensor, enabling users to acquire data for, develop, and deploy vision-based ML applications with Edge Impulse Studio or the new Edge Impulse Python SDK.

The SenseCAP A1101 is a smart image sensor that supports a variety of AI models, such as image recognition, people counting, object detection, and meter recognition. With an IP66 enclosure and industrial-grade design, it can be deployed in challenging conditions such as extreme temperatures or hard-to-reach locations. It combines tinyML and LoRaWAN® to provide local inferencing and long-range transmission, the two major needs of outdoor deployments.

What’s more, the sensor is battery-powered, so data can be collected in remote locations and transmitted over long distances without access to an AC power source. This makes it well suited to remote monitoring applications such as agricultural automation or smart city projects, where power may not be readily available. The sensor is designed for wide deployment in distributed monitoring systems, without the burden of maintaining power sources across multiple sites. It is also open to customization to meet your unique requirements, including the camera, enclosure, transmission protocols, and more. You can use the SenseCAP app for quick configuration in just three steps: scan, configure, done. Easy peasy.

How do I get started?

The SenseCAP A1101 is available for purchase from Seeed Studio. Then follow the SenseCAP A1101 page in the Edge Impulse documentation for instructions on how to quickly develop and deploy your first vision-based ML application!

SenseCAP A1101 with Edge Impulse in action

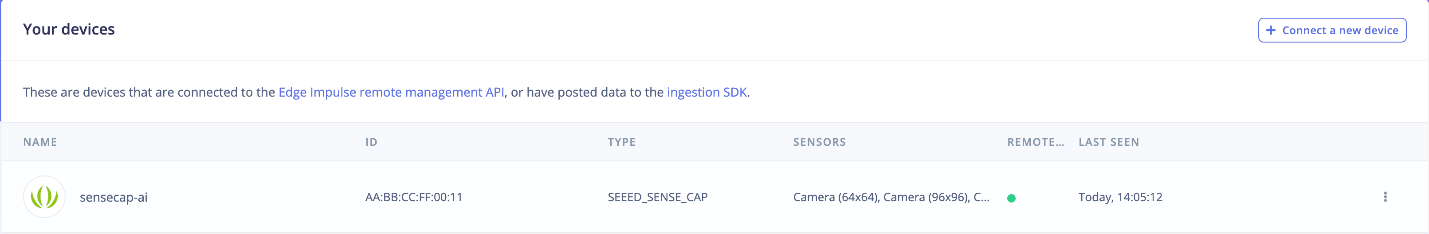

After the SenseCAP A1101 is connected to Edge Impulse Studio, the board is listed as follows:

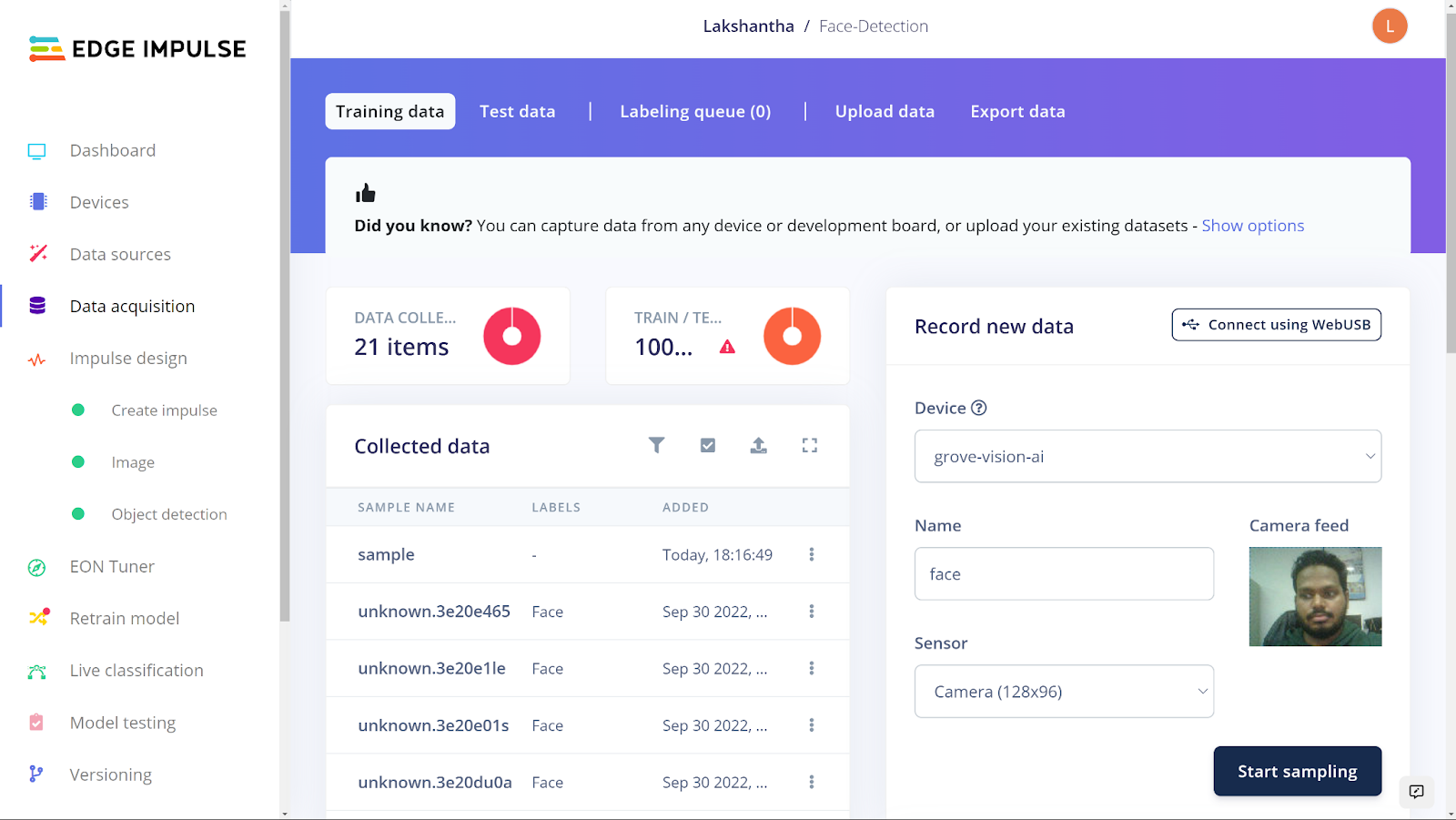

Frames from the onboard camera can be captured directly from Edge Impulse Studio, and the captured images can then be used to train a model:

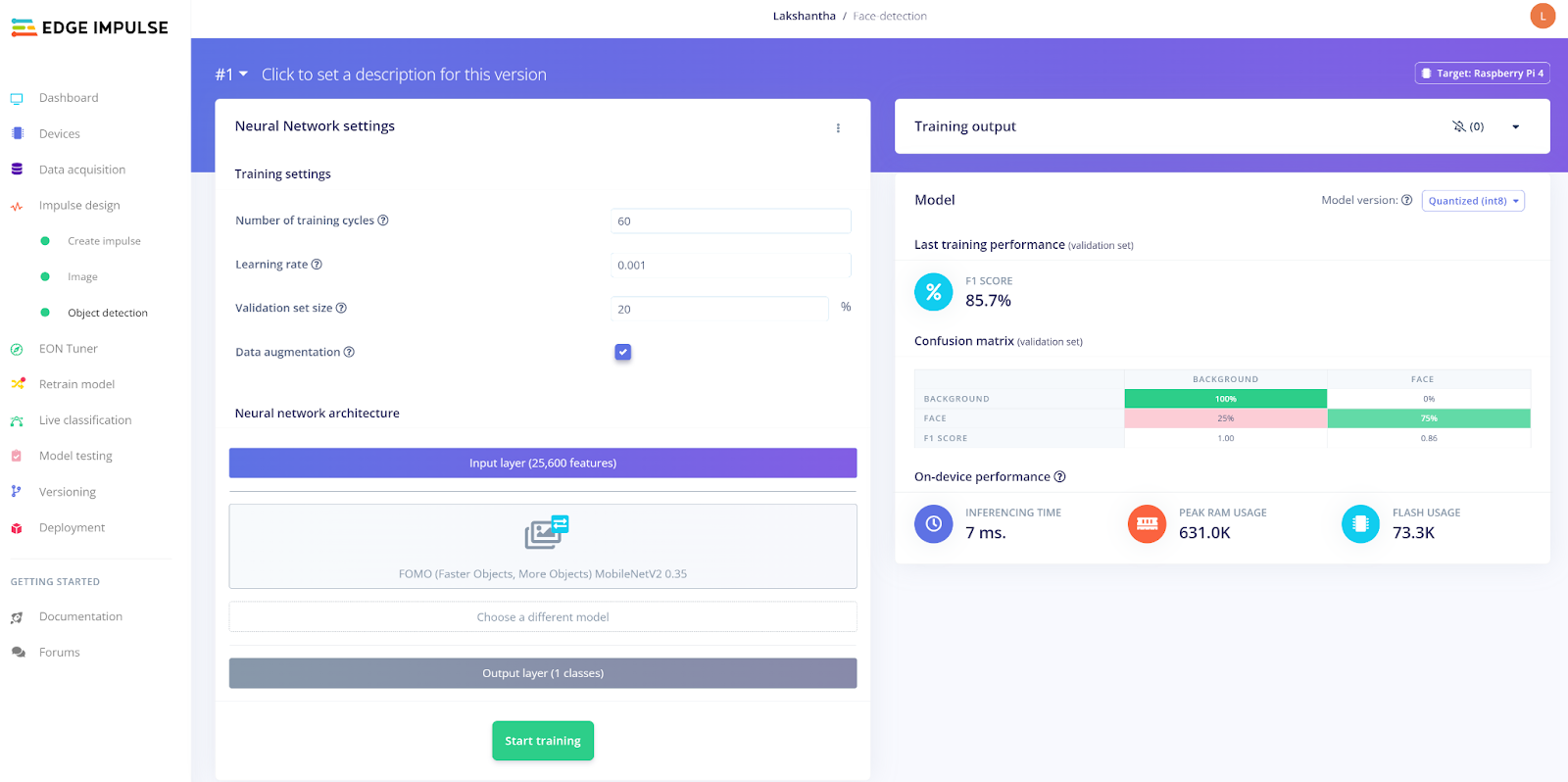

Once a model has been trained in Edge Impulse Studio using an object detection algorithm such as FOMO (Faster Objects, More Objects), it can be easily deployed to the SenseCAP A1101.
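FOMO does not predict bounding boxes; it outputs a coarse per-cell heat map, and detected objects are reported as centroids. The sketch below illustrates that post-processing idea only. It is not Edge Impulse's implementation, and it assumes a single class, a grid given as nested lists of probabilities, an 8x downscaling factor between the input image and the grid, and a 0.5 threshold. The function name `fomo_centroids` is hypothetical.

```python
from collections import deque
from typing import List, Tuple


def fomo_centroids(
    grid: List[List[float]],
    threshold: float = 0.5,
    scale: int = 8,  # assumed ratio of input-image pixels to grid cells
) -> List[Tuple[float, float, float]]:
    """Return (x, y, score) centroids in input-image pixel coordinates.

    grid[row][col] holds one class's probability for that cell (hypothetical layout).
    Adjacent above-threshold cells (4-connectivity) are merged into one object.
    """
    rows, cols = len(grid), len(grid[0]) if grid else 0
    seen = [[False] * cols for _ in range(rows)]
    results = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or grid[r][c] < threshold:
                continue
            # Breadth-first flood fill collects the connected blob starting at (r, c).
            queue = deque([(r, c)])
            seen[r][c] = True
            cells = []
            while queue:
                cr, cc = queue.popleft()
                cells.append((cr, cc))
                for nr, nc in ((cr + 1, cc), (cr - 1, cc), (cr, cc + 1), (cr, cc - 1)):
                    if (0 <= nr < rows and 0 <= nc < cols
                            and not seen[nr][nc] and grid[nr][nc] >= threshold):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            # Average the cell centres, then map back to input-image pixels.
            cy = sum(cr + 0.5 for cr, _ in cells) / len(cells) * scale
            cx = sum(cc + 0.5 for _, cc in cells) / len(cells) * scale
            score = max(grid[cr][cc] for cr, cc in cells)
            results.append((cx, cy, score))
    return results


example = [
    [0.0, 0.1, 0.0, 0.0],
    [0.0, 0.9, 0.7, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.6],
]
print(fomo_centroids(example))  # [(16.0, 12.0, 0.9), (28.0, 28.0, 0.6)]
```

Merging neighbouring cells before computing a centroid is what keeps one object that spans several cells from being reported more than once.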

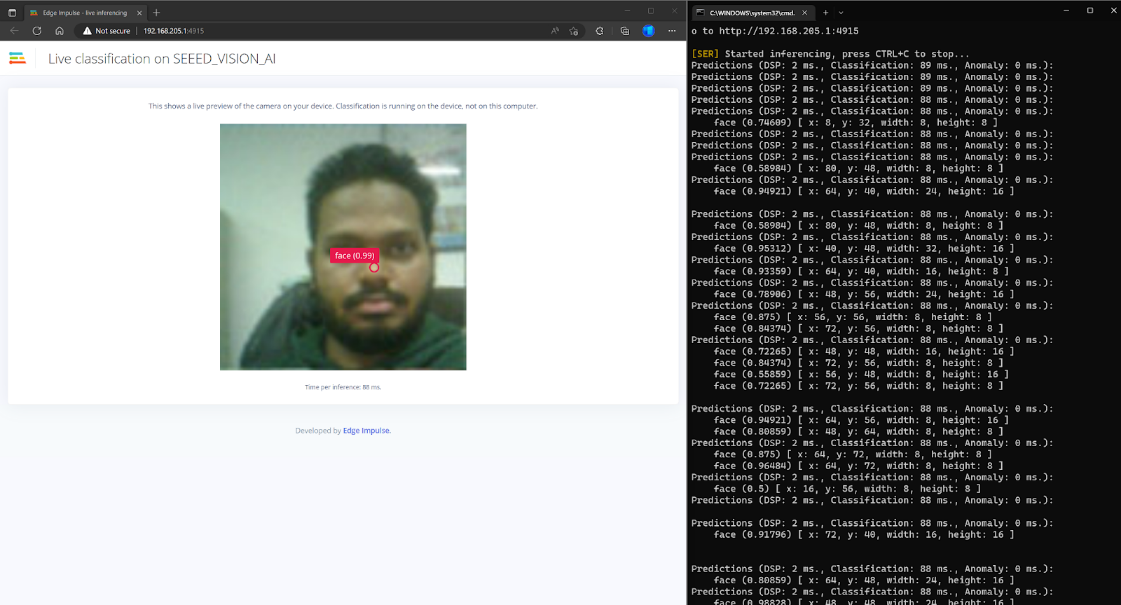

First, run inference locally on the device to test the model.

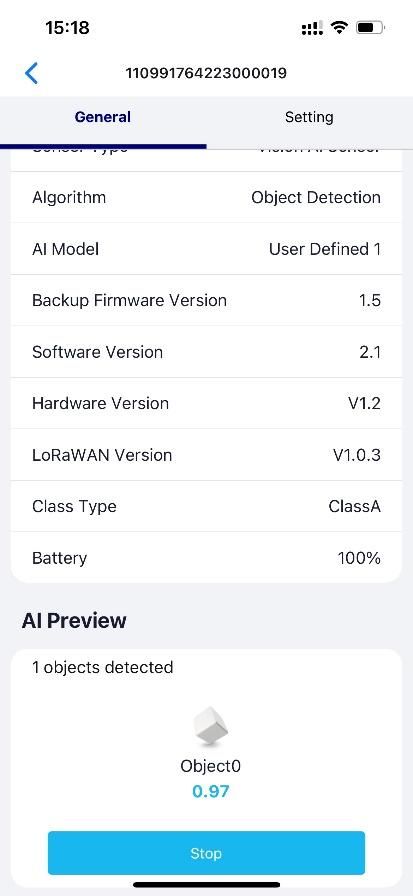

After that, connect the SenseCAP A1101 to a LoRaWAN gateway so that the results can be sent via LoRa. They can then be visualized in the SenseCAP Mate app!
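LoRaWAN uplinks are small, often limited to a few dozen bytes at low data rates. For that reason, inference results travel as compact binary payloads rather than images. The A1101's actual uplink format is defined by Seeed and is not shown here. The sketch below is a hypothetical layout that illustrates the idea: a version byte, a detection count, and four bytes per detection. It assumes pixel coordinates fit in one byte each, which holds for model inputs no larger than 256 px per side. All names are hypothetical.

```python
import struct
from typing import List, Tuple

# Hypothetical payload layout (NOT the SenseCAP A1101's real uplink format):
#   byte 0           : format version
#   byte 1           : number of detections N (capped at MAX_DETECTIONS)
#   N x 4 bytes      : class id, x, y, confidence in percent (all uint8)
VERSION = 1
MAX_DETECTIONS = 10  # 2 + 4 * 10 = 42 bytes at most


def encode_detections(dets: List[Tuple[int, int, int, float]]) -> bytes:
    """Pack (class_id, x, y, confidence 0..1) tuples into a compact payload."""
    dets = dets[:MAX_DETECTIONS]
    out = bytearray(struct.pack("BB", VERSION, len(dets)))
    for cls, x, y, conf in dets:
        # Clamp coordinates to one byte; store confidence as an integer percentage.
        out += struct.pack("BBBB", cls, max(0, min(x, 255)), max(0, min(y, 255)),
                           round(conf * 100))
    return bytes(out)


def decode_detections(payload: bytes) -> List[Tuple[int, int, int, int]]:
    """Inverse of encode_detections, as a network-side decoder might do it."""
    version, n = struct.unpack_from("BB", payload, 0)
    if version != VERSION:
        raise ValueError(f"unsupported payload version {version}")
    return [struct.unpack_from("BBBB", payload, 2 + 4 * i) for i in range(n)]


payload = encode_detections([(0, 16, 12, 0.9), (1, 28, 28, 0.6)])
print(payload.hex())               # 010200100c5a011c1c3c
print(decode_detections(payload))  # [(0, 16, 12, 90), (1, 28, 28, 60)]
```

A leading version byte lets a decoder reject or adapt to payloads produced by older firmware if the layout ever changes.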

For general inquiries, please head over to the Edge Impulse forum. For inquiries related to LoRa functionality, please visit the Seeed Discord or reach out via email.