It has often been said that familiarity breeds complacency, and that is as true of driving as it is of anything else. Most of us are so accustomed to zooming down the interstate at seventy miles per hour that we forget the peril we would instantly find ourselves in if we momentarily lost control of the vehicle and struck another, or veered off the roadway. The comfort of familiarity leads far too many to take their eyes off the road to check a smartphone, or even to relax to the point of drowsiness.

Drowsy driving in particular is a growing problem on our roads and highways, with the National Highway Traffic Safety Administration (NHTSA) reporting it as a contributing factor in around 100,000 crashes each year, resulting in an estimated 1,550 deaths and 40,000 injuries. The problem is especially prevalent among individuals who work long or irregular hours, such as shift workers and truck drivers. However, drowsy driving can affect anyone, regardless of their occupation or lifestyle. The NHTSA reports that people who get less than six hours of sleep per night are twice as likely to crash as those who get seven or more hours of sleep.

Despite these alarming statistics, most people do not take drowsy driving very seriously. Yet its dangers are similar to those of drunk driving, as both impair the driver’s ability to react quickly and make sound decisions behind the wheel. Perhaps the greatest tragedy of all is that the problem is avoidable. Machine learning enthusiast Shebin Jose Jacob was recently pondering drowsy driving and had an idea that might help to reduce its incidence. He used the Edge Impulse platform to rapidly prototype a computer vision-based solution that watches for signs of tiredness in a driver and gives them an audible and visual jolt to restore their alertness when necessary.

This may sound like a challenging device to engineer, but with the tools available to him, it was actually fairly simple to get it up and running. On the hardware front, Jacob selected the tiny Arduino Nicla Vision board. The Nicla Vision’s STM32H747AII6 dual Arm Cortex-M7/M4 processor is well suited for running tiny machine learning algorithms for image processing. The on-board two-megapixel image sensor makes this board a complete, all-in-one solution for computer vision applications that is ready to go right out of the box. To put some polish on the design, Jacob made a simple 3D-printed case to house the Arduino, and installed a buzzer and LED on that case to serve as the alert mechanisms for the driver.

The goal was to mount this hardware in a vehicle and capture a continuous stream of images of the driver’s face. An object detection algorithm developed with Edge Impulse Studio would then analyze those images to determine if the driver’s eyes were open or closed. Should closed eyes be detected for two or more seconds straight, an alert would be generated.

The next step in bringing the device to life was collecting a set of sample images, consisting of faces with eyes open or closed, to train the object detection model. Since the Nicla Vision is fully supported by Edge Impulse, Jacob was able to link the board directly to his project in Edge Impulse Studio. After doing so, all of the images that he captured with it would be automatically uploaded for use as training data. A small dataset of approximately 100 images was created in this way.

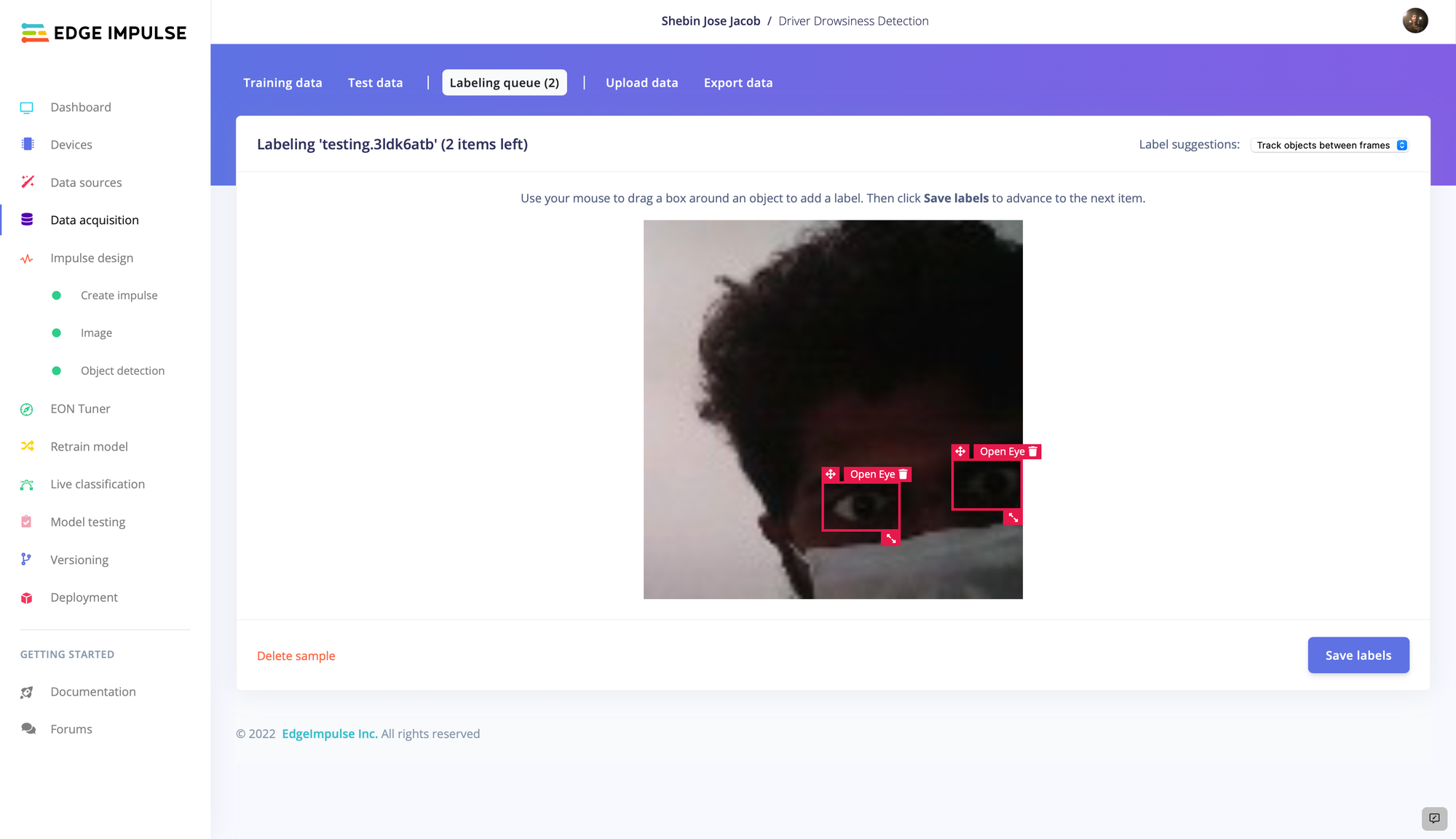

With the data collection process complete, Jacob pivoted to Edge Impulse Studio and launched the labeling queue tool. This tool offers AI-powered assistance in drawing bounding boxes around objects that the detection model is to learn to recognize. Without that assistance, drawing bounding boxes can be a slow, laborious process, especially for a large set of images. But with that help, the tool did most of the work, and Jacob was able to quickly move on to the next phase of the project — designing the impulse.

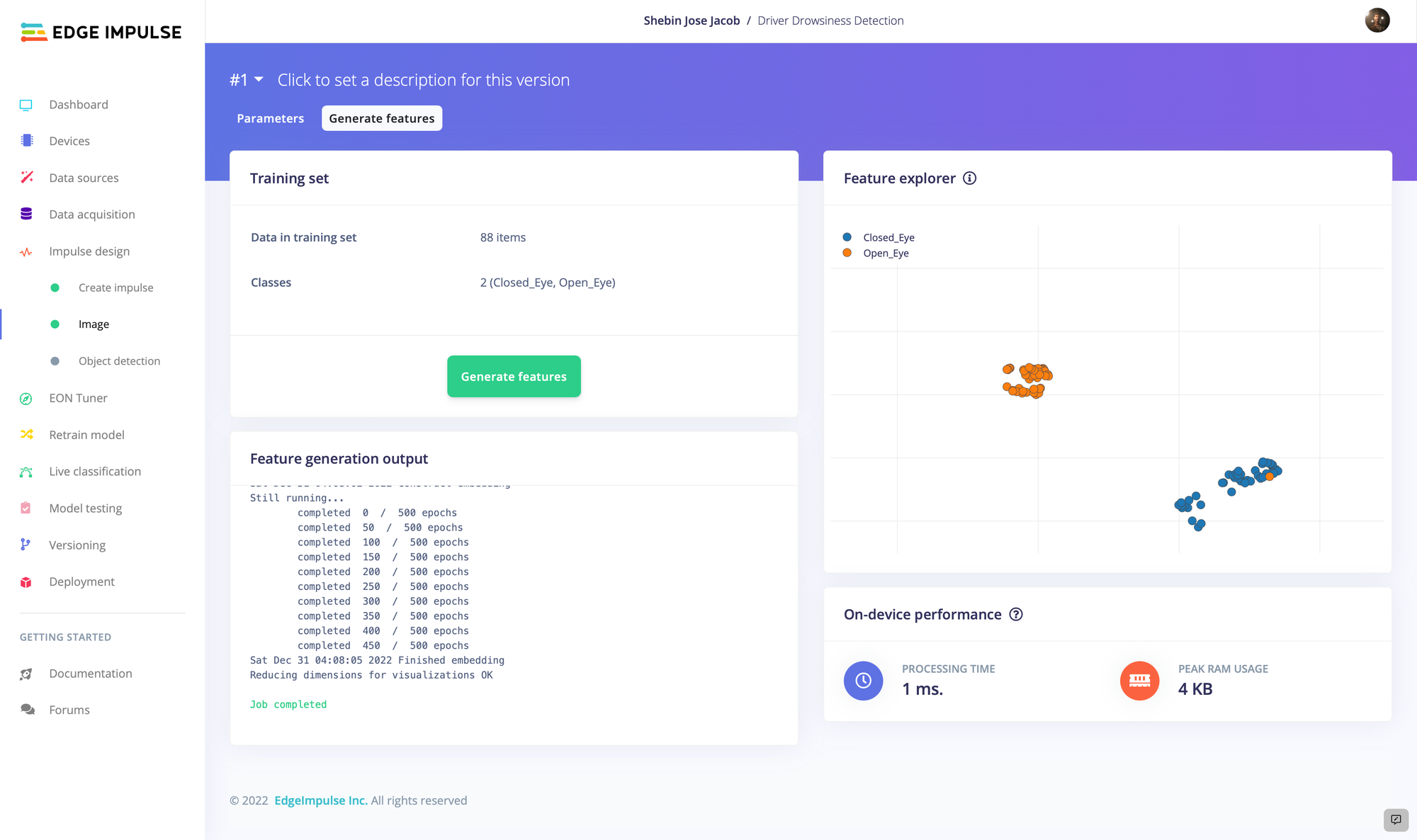

An impulse defines how input data is processed, and what machine learning algorithms are used to analyze it. In this case, a preprocessing step was inserted to resize the images to 96 x 96 pixels. Starting the pipeline in this way reduces the computational resources needed in all subsequent steps, which is of critical importance when developing for resource-constrained edge computing platforms. Next, the most important features are extracted from each image and forwarded into Edge Impulse’s ground-breaking FOMO object detection algorithm. The resulting model is able to differentiate between open and closed eyes.
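To get a rough sense of how much the resize step saves, the arithmetic can be sketched in a few lines of Python. The 96 x 96 input size and the two-megapixel sensor come from the text above; the nominal figure of 2,000,000 pixels and the 8x output reduction (FOMO's documented default, not something stated in this project) are assumptions, and `fomo_grid_size` is a hypothetical helper used only for illustration.

```python
# Back-of-the-envelope numbers for the impulse's image stage.
# Assumptions (not from the project itself): the sensor is treated as a
# nominal 2,000,000 pixels, and FOMO is assumed to use its default 8x
# reduction between input resolution and output grid.

SENSOR_PIXELS = 2_000_000   # nominal "two megapixel" frame
INPUT_SIDE = 96             # impulse resizes frames to 96 x 96


def fomo_grid_size(input_side: int, reduction: int = 8) -> int:
    """Side length of the output grid for a square input (hypothetical helper)."""
    return input_side // reduction


input_pixels = INPUT_SIDE * INPUT_SIDE           # pixels the model actually sees
reduction_ratio = SENSOR_PIXELS / input_pixels   # how much smaller than a full frame
grid_side = fomo_grid_size(INPUT_SIDE)           # cells per side in the output grid

print(f"model input: {input_pixels} pixels")
print(f"roughly {reduction_ratio:.0f}x fewer pixels than a full frame")
print(f"output grid: {grid_side} x {grid_side} cells")
```

Under these assumptions, each frame shrinks by a factor of roughly two hundred before any learning block touches it, which is why the resize matters so much on a microcontroller.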

Having already explored the training data with the Feature Explorer tool, Jacob knew that the classes were well separated, so a well-performing model was expected. With that in mind, he made only minimal tweaks to the model’s hyperparameters before starting the training process. After the training cycles completed, the model was reported to have achieved an average accuracy rate of 100%.

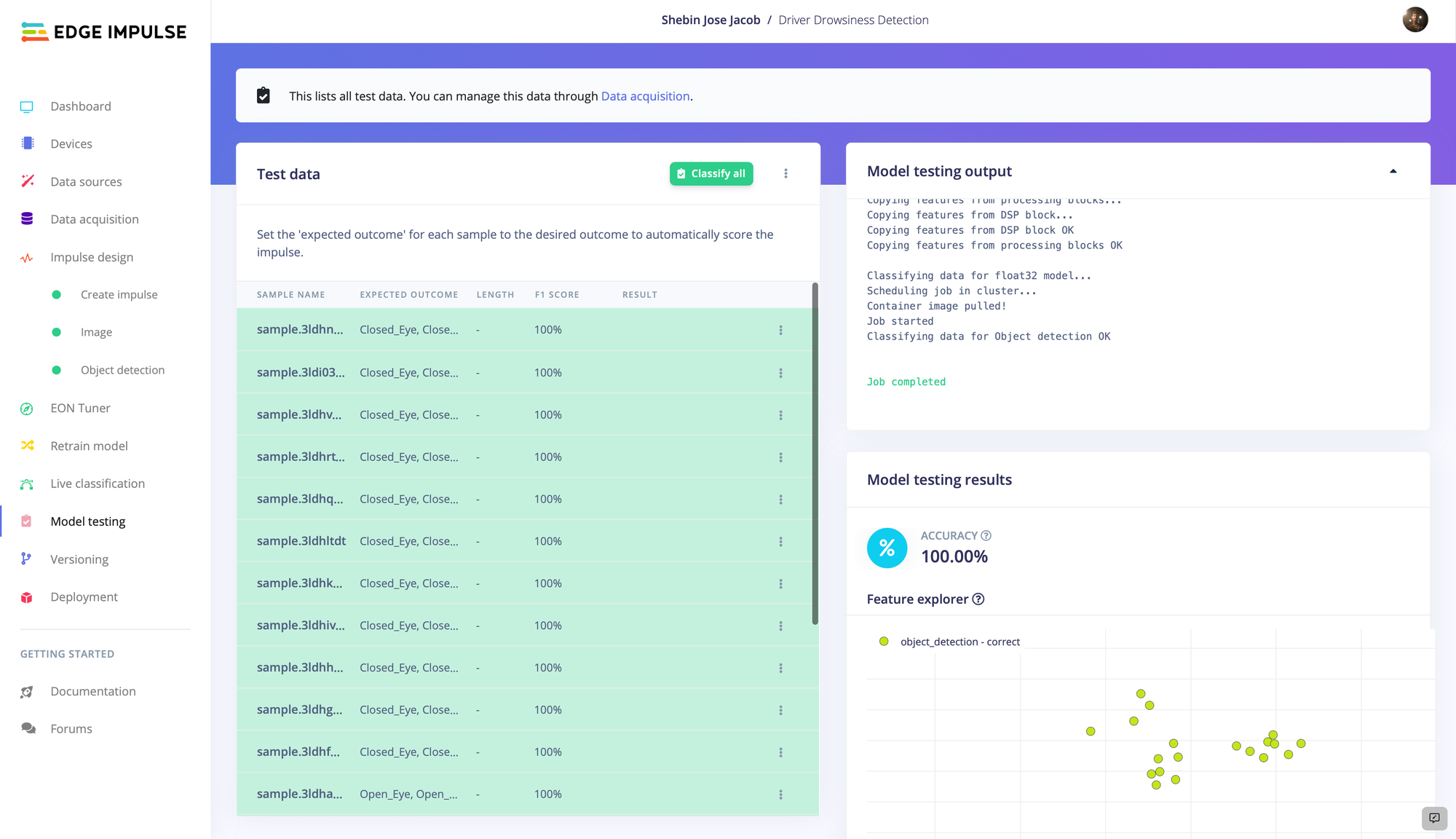

Since a perfect result can be a sign that the model has overfit to the training data, the more stringent model testing tool was also used. This tool validates the algorithm against a dataset that was held out of the training process. Here too, the model’s average accuracy rate was reported as 100%. As a final test, the live classification tool was used on images captured by the Nicla Vision in real time.
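The idea of a held-out test set can be illustrated with a short Python sketch. This is not how Edge Impulse Studio performs its split internally; the 80/20 ratio, the seed, and the `holdout_split` and `accuracy` helpers are assumptions chosen for illustration, applied to a dataset of about 100 images like the one described above.

```python
import random


def holdout_split(items, test_fraction=0.2, seed=0):
    """Shuffle items deterministically and set aside a test portion.

    Hypothetical helper: the ratio and seed are illustrative assumptions.
    Returns (train, test) as two lists with no items in common.
    """
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def accuracy(predicted, actual):
    """Fraction of predictions that match the true labels."""
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


# Stand-in for roughly 100 captured images, identified by index.
image_ids = range(100)
train_ids, test_ids = holdout_split(image_ids)

print(len(train_ids), "training images,", len(test_ids), "held-out images")
```

With a dataset this small, the held-out portion is only a couple of dozen images, so a perfect score is encouraging but still worth confirming with live classification, as was done here.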

The classification results were as expected, so there was nothing left to do but deploy the pipeline to the physical hardware and put it to work. From the deployment tab in Edge Impulse Studio, Jacob chose the “Arduino library” option. This generates a library containing the entire machine learning analysis pipeline that can be opened with the Arduino IDE. This option affords a lot of flexibility: it was possible, for example, to add the necessary logic to determine when a driver’s eyes have been closed for two seconds, then trigger a buzzer and LED to serve as an alert.
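The two-second rule is simple to express as a small state machine. The sketch below is written in Python for readability and is not Jacob's Arduino code; the `ClosedEyeMonitor` class, its method names, and the idea of passing in a timestamp with each detection result are all assumptions made for illustration. On the device, the same logic would be driven by the model's per-frame output and a millisecond timer.

```python
class ClosedEyeMonitor:
    """Tracks how long the eyes have been continuously closed.

    Hypothetical illustration of the alert rule described above:
    raise an alert once closed eyes have been seen for threshold_s
    seconds without an open-eye frame in between.
    """

    def __init__(self, threshold_s: float = 2.0):
        self.threshold_s = threshold_s
        self._closed_since = None  # timestamp of the first closed frame, if any

    def update(self, eyes_closed: bool, now_s: float) -> bool:
        """Feed one detection result; return True if the alert should fire."""
        if not eyes_closed:
            # Any open-eye frame resets the timer.
            self._closed_since = None
            return False
        if self._closed_since is None:
            self._closed_since = now_s
        return now_s - self._closed_since >= self.threshold_s


monitor = ClosedEyeMonitor()
# Simulated (eyes_closed, timestamp in seconds) results from successive frames.
frames = [(False, 0.0), (True, 0.5), (True, 1.5), (True, 2.5), (False, 3.0)]
for eyes_closed, t in frames:
    if monitor.update(eyes_closed, t):
        print(f"t={t:.1f}s: alert (buzzer and LED on)")
```

Resetting the timer on every open-eye frame means ordinary blinks never accumulate toward the threshold; only an uninterrupted run of closed-eye detections triggers the alert.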

This project is both inexpensive and simple to implement, so whether you want to equip a fleet of service vehicles, or just your own personal vehicle, we have got you covered. Check out the project documentation for an overview, then clone the public Edge Impulse project and grab the Arduino sketch to get you on your way.

Want to see Edge Impulse in action? Schedule a demo today.