Computer vision techniques such as image classification and object detection are used across a wide variety of industries, in applications such as inventory tracking, security and presence detection, wildlife monitoring, occupancy monitoring and energy conservation, and many more. However, video and image data are extremely bandwidth-intensive and can quickly saturate network connections, especially lower-capacity links such as cellular or LoRa. Even faster networks like Wi-Fi or Ethernet can struggle to keep up, depending on the resolution, frame rate, and number of cameras sending data.

While sending the *actual* image or video makes sense in some cases, often only the *context*, or metadata, of what a camera detects is truly needed. Rather than sending the full image, the device can send just the fact that an object was detected, streaming it over the network to a central data repository where it can be stored, analyzed, or visualized.
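To put rough numbers on the difference, here is a minimal Python sketch comparing one uncompressed frame with the JSON metadata describing a single detection. The 320×240 RGB888 frame size and the JSON field names are illustrative assumptions, not the project's actual settings. A real device may send JPEG-compressed frames, which narrows the gap but does not close it.

```python
import json


def raw_frame_bytes(width, height, bytes_per_pixel=3):
    """Size of one uncompressed frame (RGB888, 3 bytes per pixel, by default)."""
    return width * height * bytes_per_pixel


def metadata_bytes(detections):
    """Size of the same frame's detections, serialized as compact UTF-8 JSON."""
    return len(json.dumps(detections, separators=(",", ":")).encode("utf-8"))


# Illustrative values only: an assumed 320x240 frame and one hypothetical detection.
frame = raw_frame_bytes(320, 240)
detections = [{"label": "batman", "value": 0.94, "x": 24, "y": 40}]
meta = metadata_bytes(detections)

print(f"raw frame: {frame} bytes, metadata: {meta} bytes, ratio: {frame // meta}x")
```

Under these assumptions the frame is 230,400 bytes while the metadata is under 50 bytes, a reduction of more than three orders of magnitude for every frame that would otherwise be sent.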

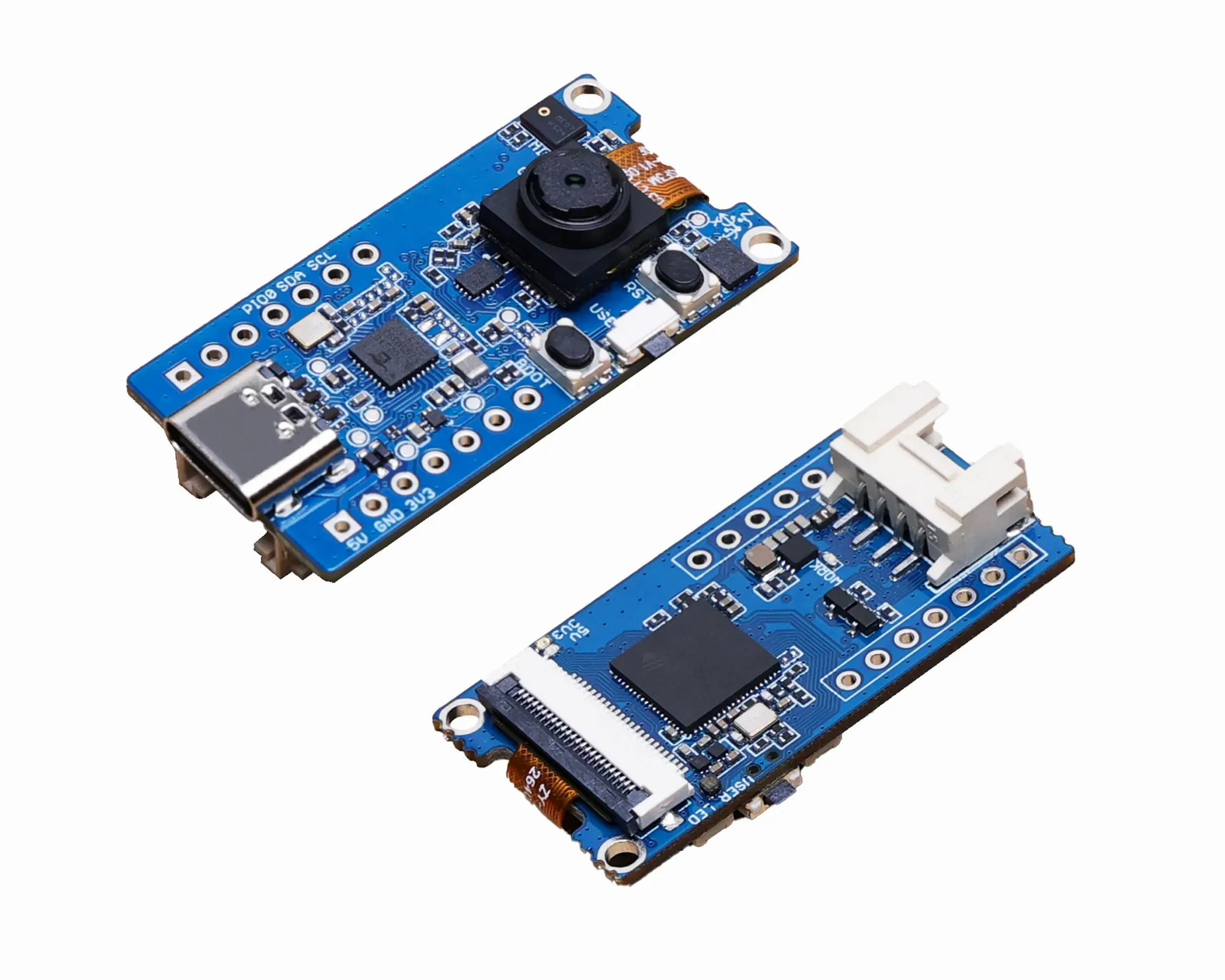

To demonstrate this concept, Edge Impulse’s very own David Tischler used the low-power Grove Vision AI Module from Seeed Studio to perform edge inferencing on a pair of sample objects, collect the inference results, and send them to the cloud to be rendered on a dashboard. The Grove Vision AI contains a Himax HX6537-A processor and an OV2640 camera, which lets it run embedded vision models. As a simple proof of concept, and to have some fun in the process, Tischler chose to build an object detection model in Edge Impulse trained on a pair of superhero figures: Batman and Superman.

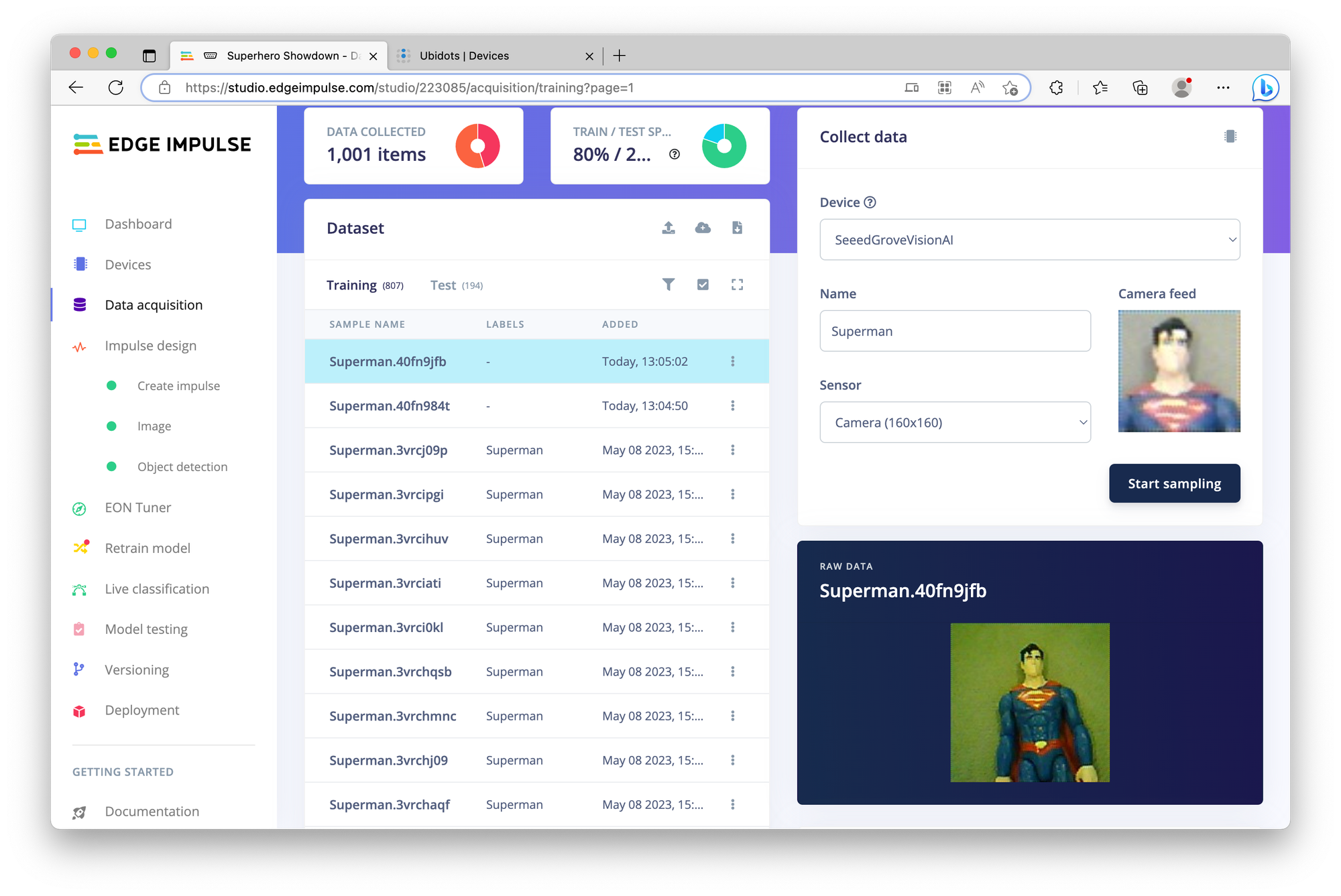

As with most ML projects, the first step was data collection: Tischler captured images of the Batman and Superman figures under varying lighting and background conditions. With 500 pictures of each figure, he had enough data to develop a robust model. In Edge Impulse Studio, Tischler quickly built an impulse that could confidently identify and locate each figure using Edge Impulse’s FOMO object detection algorithm, which is small enough to fit in the Grove Vision AI’s onboard memory. Flashing the model to the device was pretty straightforward, as the Studio can export device-specific firmware ready to flash onto the board.

Once the model was flashed and running, Tischler used the Edge Impulse CLI to capture the inference results, that is, the metadata associated with the detected figures. The raw image coming from the device *can* be viewed, but because the goal was *not* to send raw video across the network, only the *results*, in text format, were captured.
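The captured text then has to be turned into structured records before it can be forwarded anywhere. The following is a minimal Python sketch that assumes each detection is printed on its own line in a form like `batman (0.94) [ x: 24, y: 40, width: 8, height: 8 ]`. That line format, the `parse_detections` helper, and the 0.5 score threshold are hypothetical, so adjust the regular expression to match the output your CLI version actually prints.

```python
import re
from dataclasses import dataclass

# Assumed (hypothetical) detection line format, e.g.:
#   "    batman (0.941406) [ x: 24, y: 40, width: 8, height: 8 ]"
DETECTION_RE = re.compile(
    r"^\s*(?P<label>\w+)\s+\((?P<score>[0-9.]+)\)\s+"
    r"\[\s*x:\s*(?P<x>\d+),\s*y:\s*(?P<y>\d+),\s*"
    r"width:\s*(?P<w>\d+),\s*height:\s*(?P<h>\d+)\s*\]"
)


@dataclass
class Detection:
    label: str
    score: float
    x: int
    y: int
    width: int
    height: int


def parse_detections(lines, min_score=0.5):
    """Extract detections scoring at least min_score from lines of text output."""
    detections = []
    for line in lines:
        m = DETECTION_RE.match(line)
        if m is None:
            continue  # skip headers, timing summaries, and blank lines
        score = float(m["score"])
        if score < min_score:
            continue  # drop low-confidence detections
        detections.append(
            Detection(m["label"], score, int(m["x"]), int(m["y"]), int(m["w"]), int(m["h"]))
        )
    return detections
```

Because non-matching lines are simply skipped, the function can be fed the CLI’s output stream line by line without pre-filtering.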

A small Python application collects the results and sends them to a cloud dashboard hosted on Ubidots, an IoT data aggregation service. Ubidots can be used to build visualizations, time-series graphs, and other interpretations of IoT and sensor data as it streams into the platform.
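The forwarding half of such an application might look like the Python sketch below, which counts detections per label and POSTs the counts as JSON. The endpoint form (`/api/v1.6/devices/<device_label>/` with an `X-Auth-Token` header) reflects our understanding of Ubidots’ v1.6 HTTP API and should be checked against their documentation. The `batman`/`superman` labels, the function names, and any device label you pass in are hypothetical; this is not the project’s actual script.

```python
import json
import urllib.request
from collections import Counter

# Assumed Ubidots v1.6 endpoint; verify against Ubidots' API documentation.
UBIDOTS_URL = "https://industrial.api.ubidots.com/api/v1.6/devices/{device}/"

# Hypothetical class labels matching the two figures in the example model.
LABELS = ("batman", "superman")


def build_payload(detections):
    """Count (label, score) detections per label, e.g. {"batman": 1, "superman": 0}."""
    counts = Counter(label for label, _score in detections)
    # Report every label each time so the dashboard shows 0 when a figure is absent.
    return {label: counts[label] for label in LABELS}


def send_to_ubidots(payload, device_label, token, timeout=10.0):
    """POST the payload as JSON to the assumed Ubidots endpoint; return the HTTP status."""
    req = urllib.request.Request(
        UBIDOTS_URL.format(device=device_label),
        data=json.dumps(payload).encode("utf-8"),
        headers={"X-Auth-Token": token, "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status
```

For example, `send_to_ubidots(build_payload([("batman", 0.94)]), "grove-vision-ai", token)` would post a count of 1 for `batman` and 0 for `superman`, where `token` is your Ubidots account token. Separating payload construction from the network call keeps the counting logic easy to test offline.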

You can review the detailed project to learn more and to replicate the work using your own objects; no superhero required.
