Smart shopping carts, commonly equipped with technologies such as weight sensors and RFID scanners, have been gaining popularity in retail settings in recent years. These carts aim to enhance the shopping experience for consumers and improve efficiency for retailers. Shoppers get to skip the time they would normally spend waiting in checkout lines, while retailers get help managing inventory, which can reduce out-of-stock items, streamline store operations, and improve customer satisfaction.

Despite their potential benefits, smart shopping carts have faced some challenges in adoption. One issue is the cost of implementing the technology, which can be prohibitive, especially for smaller retailers. The carts themselves can be quite pricey, and existing solutions generally also require changes to the retail location and its established operations. These changes can mean installing additional hardware throughout the store or adding RFID tags to every product, for example.

For smart shopping carts to be more than just a rarely seen novelty, the technology needs to be made both simpler to implement and less expensive. Engineer and serial inventor Kutluhan Aktar believes that he has devised a better path forward for the burgeoning technology that checks both of those boxes. With some inexpensive, simple-to-use, off-the-shelf hardware and the Edge Impulse machine learning development platform, he has built a prototype system that can convert an existing, standard shopping cart into a smarter version of itself. Just as importantly, the converted carts do not require retailers to make big infrastructure or process changes during implementation.

After considering his options, Aktar settled on a computer vision-based approach. By visually inspecting and identifying objects as they are placed into, or removed from, the cart, laborious instrumentation of individual products (e.g. with RFID tags) becomes unnecessary. That makes the cart self-contained, and of course that makes everything easier. But wait… computer vision solutions are costly and require significant computational resources, right? Well, that is often true, and that is where Edge Impulse comes in. An object detection model designed in Edge Impulse Studio and highly optimized for tiny hardware can be deployed to inexpensive, low-power, resource-constrained platforms.

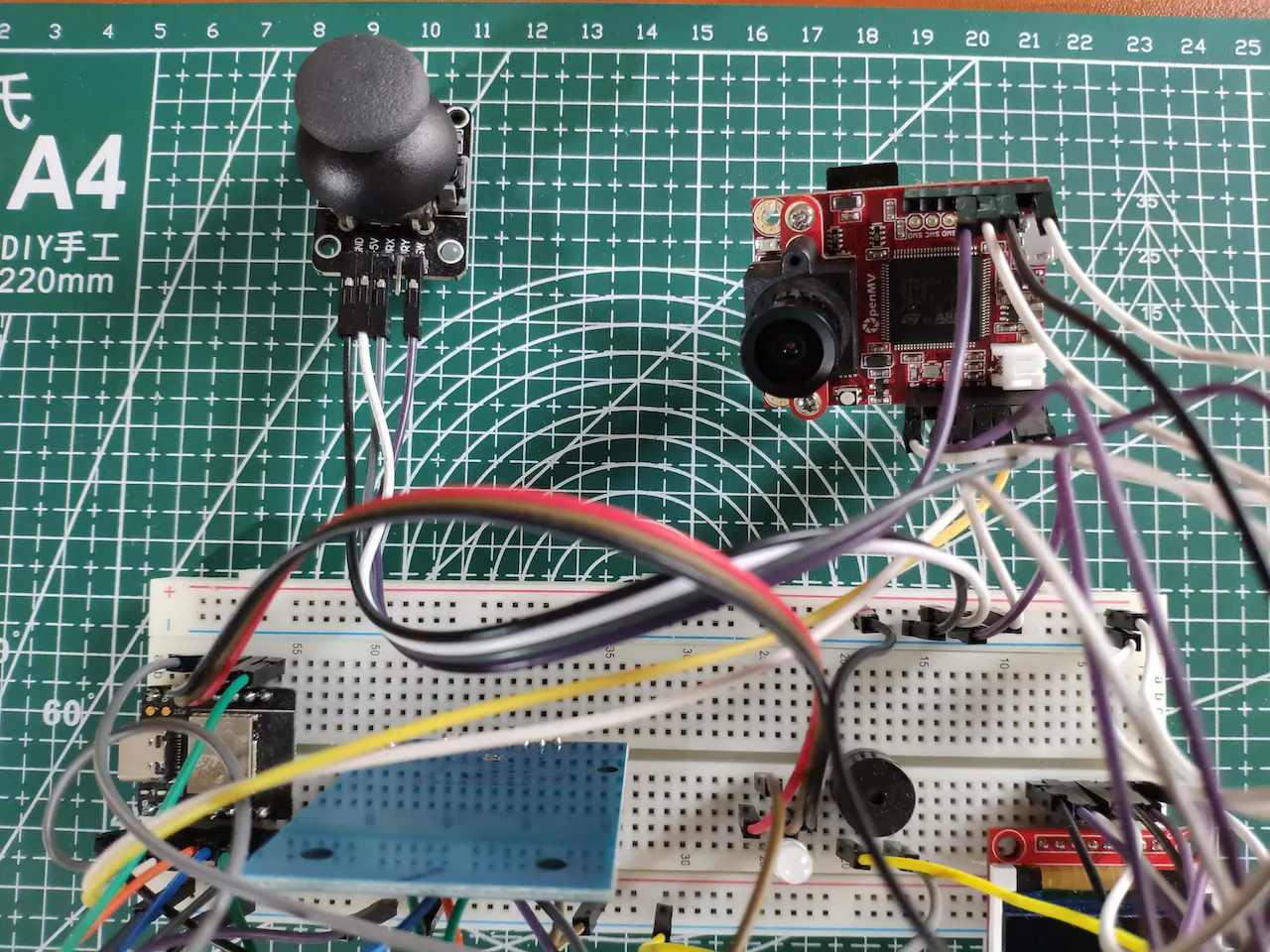

To prove the concept, Aktar selected an OpenMV Cam H7, which has an Arm Cortex-M7 processor running at 480 MHz, 1 MB of SRAM, and a built-in image sensor. This tiny, yet powerful, platform has sufficient horsepower to capture product images and run the machine learning object detection pipeline. Since the OpenMV Cam H7 does not have a Wi-Fi radio, a DFRobot Beetle ESP32-C3 development board was also included in the design. This allowed for wireless communication with a website that Aktar built to help shoppers manage their shopping lists and pay for their items online before leaving the store. The website was hosted on a LattePanda 3 Delta 864 single-board computer.
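To make that data flow a little more concrete, here is a minimal sketch of how a cart's contents might be tracked and packaged for the website. It is not taken from Aktar's code: the function names, the `cart_id` field, the `"add"`/`"remove"` actions, and the JSON layout are all assumptions made for illustration.

```python
import json
from collections import Counter


def apply_event(cart, label, action):
    """Update the cart for one detection event (hypothetical helper)."""
    if action == "add":
        cart[label] += 1
    elif action == "remove":
        if cart[label] > 1:
            cart[label] -= 1
        else:
            # Drop the entry entirely once the last unit is removed.
            cart.pop(label, None)
    else:
        raise ValueError(f"unknown action: {action!r}")
    return cart


def build_payload(cart_id, cart):
    """Serialize the cart as compact JSON (assumed message format)."""
    body = {"cart_id": cart_id, "items": dict(sorted(cart.items()))}
    return json.dumps(body, separators=(",", ":"))


if __name__ == "__main__":
    cart = Counter()
    apply_event(cart, "Nutella", "add")
    apply_event(cart, "Pringles", "add")
    apply_event(cart, "Pringles", "remove")
    print(build_payload("cart-01", cart))
```

In a layout like this, the camera board would only need to report a product label and an action, leaving the Wi-Fi board to forward a small message to the web application.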

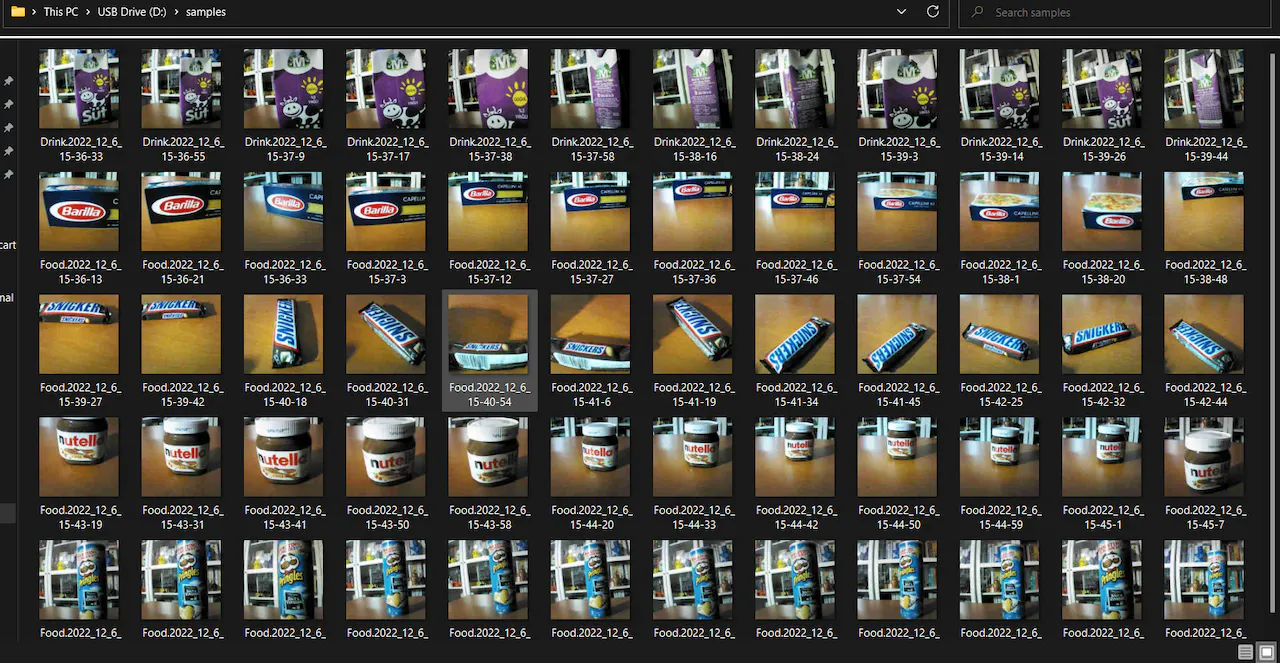

As previously mentioned, Aktar planned to use an object detection model, so before building it he needed to collect image samples to help it learn. For the initial version of the project, just a handful of products were included: Barilla pasta, milk, Nutella, Pringles, and Snickers. This is sufficient to show that the solution would work in principle. The same basic methods could be used in a retail setting, but the set of recognized products would need to be expanded, with more sample data provided to the model, before the device could be used in the real world.

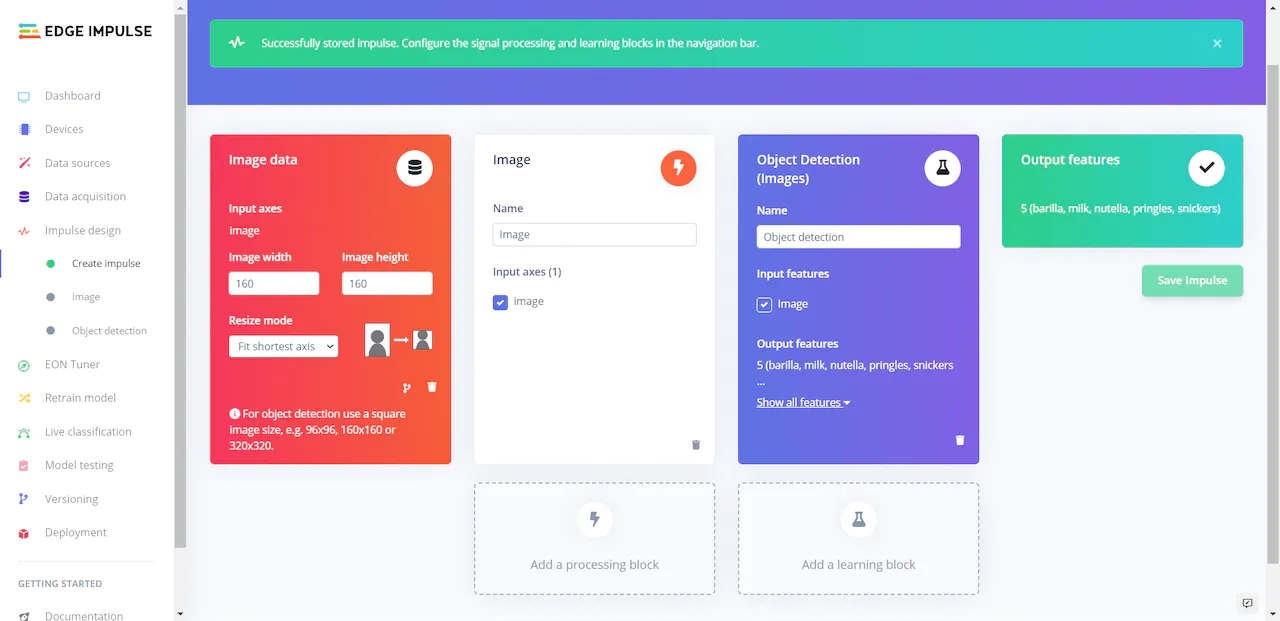

A fairly small dataset of about 70 images of the sample products was manually collected and then uploaded to Edge Impulse Studio. Once the images are uploaded, the AI-powered data acquisition tool assists in drawing bounding boxes around the objects of interest to identify them. This tool considerably speeds up the data labeling process, which can be very time-consuming when done entirely by hand. With the data ready to go, the stage was set for building the machine learning analysis pipeline to handle the computer vision tasks.

The impulse begins with a preprocessing step that resizes the images to 160x160 pixels to reduce the amount of computational resources needed for downstream steps. This is especially important when working with resource-constrained hardware. The resized images are then fed into Edge Impulse’s ground-breaking FOMO object detection algorithm, which can run with just a couple hundred kilobytes of RAM, yet runs inferences about 30 times faster than a MobileNet SSD model.
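Part of what keeps FOMO so light is that it reports object centroids on a coarse grid rather than full bounding boxes. The sketch below assumes the commonly documented 8x reduction between input and output, which would turn a 160x160 image into a 20x20 grid. The function names, the 0.5 threshold, and the heat map values are illustrative, and the sketch skips the step where neighboring cells are merged into a single object.

```python
INPUT_SIZE = 160  # pixels per side, from the resize step
CELL_SIZE = 8     # assumed input-to-output reduction factor


def grid_size(input_size=INPUT_SIZE, cell_size=CELL_SIZE):
    """Number of grid cells per side for a square input."""
    return input_size // cell_size


def cells_to_centroids(heatmap, threshold=0.5, cell_size=CELL_SIZE):
    """Return (x, y, score) for every cell scoring at or above threshold.

    heatmap is a list of rows of per-cell probabilities for one class.
    x and y are the pixel coordinates of the cell's center.
    """
    centroids = []
    for row, scores in enumerate(heatmap):
        for col, score in enumerate(scores):
            if score >= threshold:
                x = col * cell_size + cell_size // 2
                y = row * cell_size + cell_size // 2
                centroids.append((x, y, score))
    return centroids


if __name__ == "__main__":
    n = grid_size()
    heatmap = [[0.0] * n for _ in range(n)]
    heatmap[2][5] = 0.9  # made-up detection in row 2, column 5
    print(n, cells_to_centroids(heatmap))
```

Working with a few hundred cells per class instead of a dense set of candidate boxes is one reason a model of this kind can fit on a microcontroller.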

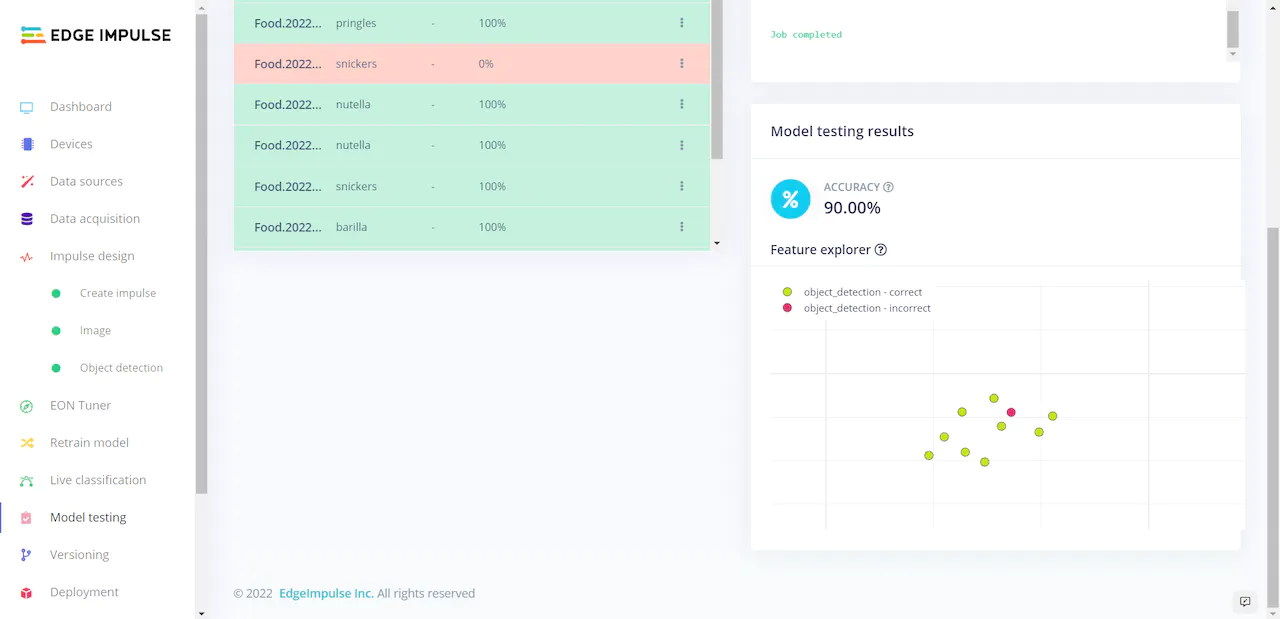

After making a few small tweaks to the model’s hyperparameters, Aktar kicked off the training process. Training finished after a short time, and a confusion matrix was presented to help show how well the model was performing. The average accuracy was reported as a very impressive 100% right off the bat. That could be a sign that the model had overfit the training data, so a second validation was performed. The model testing tool checks the model’s performance against a set of data that was held out from the training process. This showed a very respectable average accuracy of 90%, which is more than good enough for a successful proof of concept.
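For readers who have not worked with these metrics, the sketch below shows how a confusion matrix and an overall accuracy figure can be computed from true and predicted labels. The ten held-out samples are invented for illustration and are not Aktar's results, and Edge Impulse Studio may compute its reported score differently.

```python
from collections import defaultdict


def confusion_matrix(actual, predicted):
    """Count predictions per (actual label, predicted label) pair."""
    matrix = defaultdict(lambda: defaultdict(int))
    for a, p in zip(actual, predicted):
        matrix[a][p] += 1
    return matrix


def accuracy(actual, predicted):
    """Fraction of samples whose predicted label matches the actual one."""
    correct = sum(a == p for a, p in zip(actual, predicted))
    return correct / len(actual)


if __name__ == "__main__":
    # Made-up held-out labels: two samples per product.
    actual = ["Barilla", "Barilla", "Milk", "Milk", "Nutella",
              "Nutella", "Pringles", "Pringles", "Snickers", "Snickers"]
    predicted = list(actual)
    predicted[7] = "Snickers"  # one simulated misclassification

    matrix = confusion_matrix(actual, predicted)
    print(matrix["Pringles"]["Snickers"], accuracy(actual, predicted))
```

The gap between a perfect score on training data and a lower score on unseen data is exactly what a held-out test set is meant to reveal.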

With everything looking great, it was time to deploy the model directly to the physical hardware. Doing so eliminates any dependencies on the cloud, and also enhances privacy protections for shoppers. The OpenMV Cam H7 is fully supported by Edge Impulse, so Aktar was able to download a custom firmware image with the full machine learning analysis pipeline embedded in it. After flashing it to the board, the smart cart conversion hardware was up and running. Real-world tests showed it to be working very well, as would be expected given the validation tests that were previously conducted.

To wrap up the build, a slick case was 3D-printed to house the hardware and mount it to a standard shopping cart. There are a lot of moving pieces in this project, but technically, it is surprisingly easy to build, thanks to tools like Edge Impulse Studio and the OpenMV Cam H7. As always, Aktar has written up an incredibly detailed description of his work, so make sure you do not miss out on any of the insights he provides. He has also very graciously made the Edge Impulse Studio project public, so feel free to clone it to give yourself a head start in bringing your own ideas to life.
